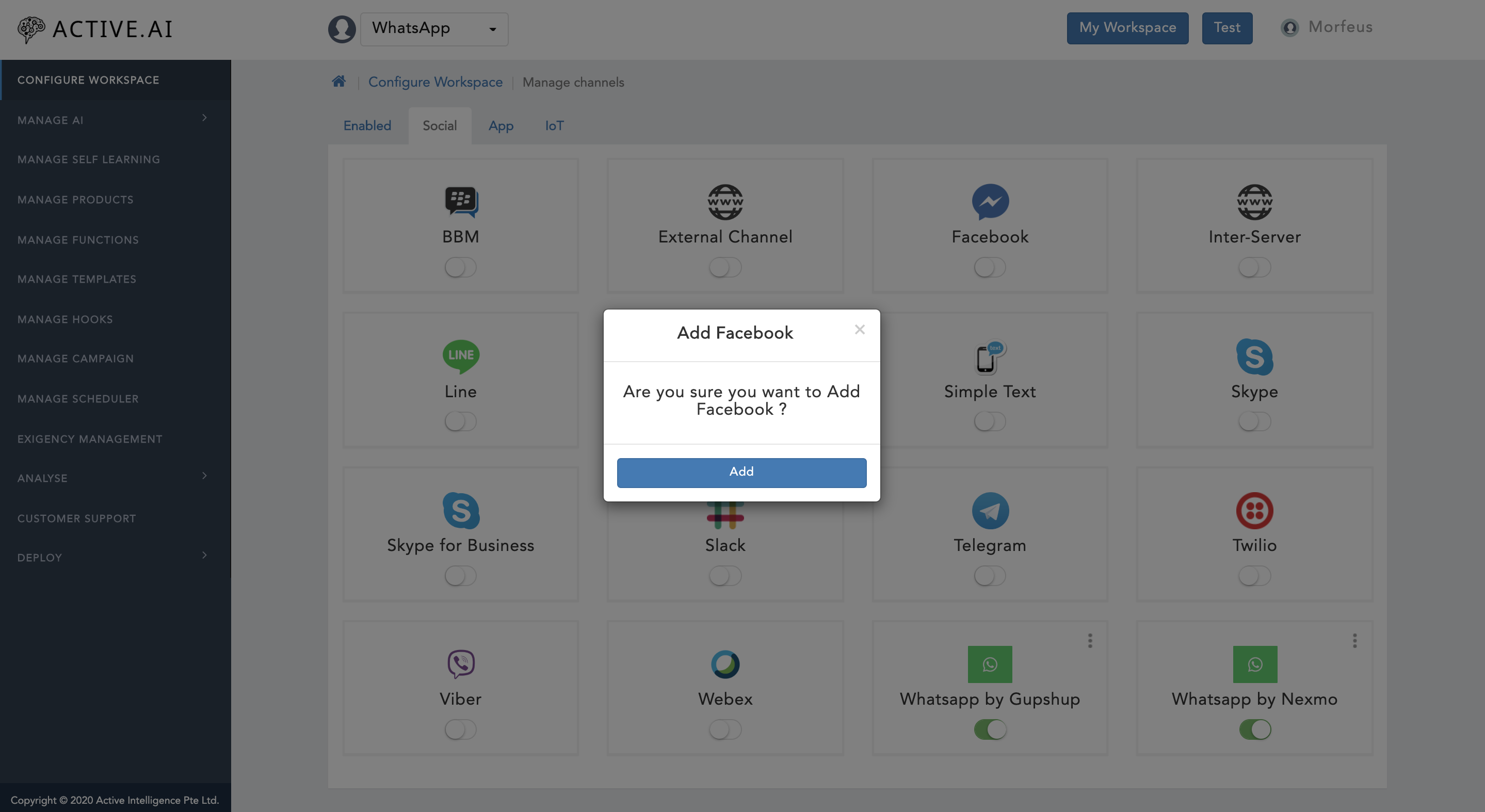

Channels

WebSDK Integration Guide

Key features

WebSDK is a lightweight messaging SDK that can be embedded easily in websites and hybrid mobile apps with minimal integration effort. Once integrated, it allows end users to converse with the conversational AI bot on the Active AI platform using both text and voice. WebSDK has out-of-the-box support for multiple UX templates, such as List and Carousel, and supports extensive customization of both UX and functionality.

Supported Components

- WebSDK: a chatbot interface in which a chain of bubbles (bot and user bubbles) is the fundamental medium of communication.

- Webviews: to enable a rich browsing experience when a flow demands it, user events within WebSDK invoke custom views as an overlay. These extended views contain larger images, additional scrolling and specifically structured data, and are commonly called webviews. Webviews are bundled as clientweb, which must be hosted along with the WebSDK. Webviews are not within the scope of WebSDK integrations.

Note: The component details above are for information only. For security reasons, and to maintain secure financial transaction sessions, the WebSDK and clientweb must be hosted on exactly the same domain.

Supported Browsers

- Chrome

- Firefox

- Edge (Windows only)

- Safari (including iOS)

- Android webkit

Highlights

- Custom Theme

- Integrated Voice Support

- Analytics

- Templates

- FAQs

- Language - You can craft bot responses in your preferred language; see Properties for how this is configured.

- Voice

- Cache - We use Service Worker caching for the bot with the

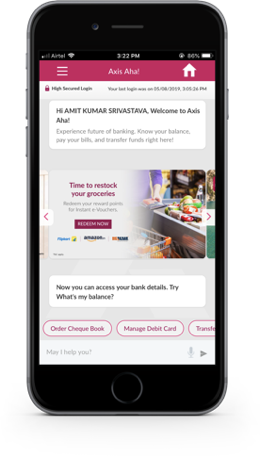

cache firststrategy, this will make the bot frame to load faster. When a new update is released, the changes will be cached first and then on the next load, the new changes will be loaded. - Post Login - Postlogin is a scenario where the user is already logged in the your app. So the user feels the bot is integrated with your app.

- Customer Segment - Segment you message for your priority customers, For ex: A

Hellomessage to customer whose segment is in free tier andHello ${username}for the priority customers. - Webview

- Deployments and Updates

- Security
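The cache-first behaviour described under Cache can be sketched as follows. This is a minimal illustration, not WebSDK's actual service worker: `cacheFirst` and `createMemoryCache` are hypothetical names, and the cache and network are injected so the logic can run outside a browser. In a real service worker the same steps map to `caches.match`, `fetch` and `cache.put` inside a `fetch` event handler.

```javascript
// Hypothetical cache-first sketch: serve from the cache when possible,
// otherwise fetch from the network and store the response for next time.
async function cacheFirst(request, cache, fetchFromNetwork) {
  // 1. Look in the cache first.
  const cached = await cache.get(request);
  if (cached !== undefined) {
    // Cache hit: serve immediately without touching the network.
    return cached;
  }
  // 2. Cache miss: go to the network and remember the result.
  const response = await fetchFromNetwork(request);
  await cache.set(request, response);
  return response;
}

// In-memory stand-in for the Cache Storage API, used only to make the sketch runnable.
function createMemoryCache() {
  const store = new Map();
  return {
    get: async (key) => store.get(key),
    set: async (key, value) => { store.set(key, value); },
  };
}
```

Updates typically arrive as a new service worker version that precaches the new files under a new cache name; the current page keeps being served from the old cache, and the new files are used once the new worker takes control, which matches the "next load" behaviour described above.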

Setup using CLI

Using create-websdk-bot, a bot can be built with minimal configuration.

- Creating a Bot – How to create a new bot.

Quick Overview

```bash
npx create-websdk-bot <bot-name>
```

For example:

```bash
npx create-websdk-bot stella
```

(npx comes with npm 5.2 and higher; see instructions for older npm versions.)

Prerequisites

You need to install the Grunt build tool:

```bash
npm i grunt-cli -g
```

Creating a Bot

You'll need Node v8.16.0, or Node v10.16.0 or later, on your machine. You can use nvm (macOS/Linux) or nvm-windows to switch Node versions between projects.

To create a new bot:

```bash
npx create-websdk-bot <bot-name>
```

For example:

```bash
npx create-websdk-bot stella
```

(npx comes with npm 5.2 and higher.) For older npm versions, install the package globally:

```bash
npm i create-websdk-bot -g --registry=http://activenpm.active.ai
```

and then run:

```bash
create-websdk-bot <bot-name>
```

For example:

```bash
create-websdk-bot stella
```

Once the command above is executed, you are asked a series of questions about the properties you want in the bot, and the bot is created based on your selections.

You'll be prompted to choose between two build options, deployments-<bot-name> and Maven. You can also build either a development or a production version of the bot.

If you chose deployments-<bot-name>, serve the static folder deployments-<bot-name>/morfeuswebsdk from a server; the bot is served from that folder. If you chose Maven, a WAR file is generated.

Integration to Website

Get started immediately

- The SDK

  - As part of a standard deployment, a web application named `morfeuswebsdk` is generally installed in the Active.ai infrastructure. This web application bundles and distributes the WebSDK directly from the server.

Include the JS SDK in your project

- Reference sdk.js from the public morfeuswebsdk site in the main HTML page where you want the chatbot to render.

- Add the id `webSdk` to the script tag; this enables the SDK to verify the URL. For example, if morfeuswebsdk is hosted at https://ai.yourcompany.com/morfeuswebsdk, use:

```html
<script type="text/javascript" src="https://ai.yourcompany.com/morfeuswebsdk/libs/websdk/sdk.js" id="webSdk"></script>
```

Invoke the WebSDK by adding the following code snippet to a file named index.js:

```js
(function() {
  let customerId = "";
  let initAndShow = "1";
  let showInWebview = "0";
  let endpointUrl = "https://ai.yourcompany.com/morfeus/v1/channels";
  let desktop = {
    "chatWindowHeight": "90%",
    "chatWindowWidth": "25%",
    "chatWindowRight": "20px",
    "chatWindowBottom": "20px",
    "webviewSize": "full"
  };
  let properties = {
    "customerId": customerId,
    "desktop": desktop, // screen size of the chatbot for the desktop version
    "initAndShow": initAndShow, // maximized or closed state
    "showInWebview": showInWebview,
    "endpointUrl": endpointUrl,
    "botId": "XXXXXXXXXXXXX", // unique, instance-specific botId
    "domain": "https://ai.yourcompany.com", // hosted domain address for websdk
    "botName": "default",
    "apiKey": "1234567",
    "dualMode": "0",
    "debugMode": "0",
    "timeout": 1000 * 60 * 15,
    "idleTimeout": 1000 * 60 * 15,
    "quickTags": {
      "enable": true,
      "overrideDefaultTags": true,
      "tags": ["Recharge", "Balance", "Transfer", "Pay bills"]
    }
  };
  websdk.initialize(properties);
})();
```

This loads the bot with the properties above. See Properties for the full list of properties available when integrating the bot.

NOTE: To change the icon of the WebSDK bot, change the `background-image` of the `#ai-container .ai-launcher-open-icon` selector in the `vendor-theme-chatbutton.css` file.

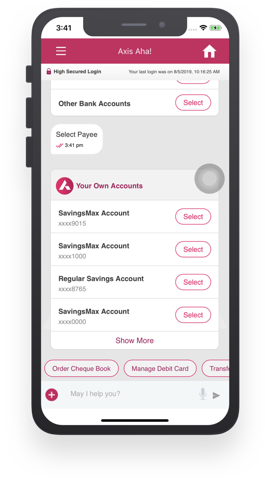

Post Login

Post login is a scenario where the user is already logged in to your app. The user is logged in to the bot by default, so the user feels that the bot is integrated into your app.

In your app, paste the following JavaScript snippet:

```js
var host = window.location.host;
var hostname = "https://" + host + "/morfeuswebsdk/libs/websdk/js/chatSDK.js";

if (host === 'router.triniti.ai') {
  hostname = "https://" + host + "/apikey/<your-api-key>/morfeuswebsdk/libs/websdk/js/chatSDK.js";
}

var chatSDK = document.createElement('script');
chatSDK.type = "text/javascript";
chatSDK.id = "chatSDK";
chatSDK.src = hostname;
document.head.appendChild(chatSDK);
```

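Also add the following snippet:

```js
// Base URL (scheme + host) of the loaded chatSDK.js script
var url = document.getElementById("chatSDK").src.split("/").slice(0, 3).join("/");

// Reads a query-string parameter from a URL (defaults to the current page URL)
function getParameterByName(name, url) {
  if (!url) {
    url = window.location.href;
  }
  // Escape square brackets so the name can be used inside a RegExp
  name = name.replace(/[\[\]]/g, "\\$&");
  var regex = new RegExp("[?&]" + name + "(=([^&#]*)|&|#|$)"),
    results = regex.exec(url);
  if (!results) return null;
  if (!results[2]) return '';
  // '+' encodes a space in query strings
  return decodeURIComponent(results[2].replace(/\+/g, " "));
}

var properties = {
  "url" : url + "/apikey/
```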

Note: Please contact us for the API Key.
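In browsers that support the standard `URL` API, the query-string lookup done by `getParameterByName` can be written more compactly. The sketch below is an alternative, not part of the WebSDK snippet, and `getQueryParam` is a hypothetical name. Like `getParameterByName`, it returns `null` for a missing parameter and `''` for a parameter without a value, and decodes `+` as a space. Unlike the regex version, `new URL()` throws on a relative URL, and it ignores anything after `#`, so parameters inside a hash-based route are not found.

```javascript
// Hypothetical alternative to getParameterByName using the standard URL API.
function getQueryParam(name, url) {
  // Default to the current page URL, as getParameterByName does.
  const href = url || window.location.href;
  // searchParams.get returns null when the parameter is absent and
  // decodes percent-escapes and '+' (as a space).
  return new URL(href).searchParams.get(name);
}
```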

Custom Theme

The WebSDK bot can be customized to match your app or website by overriding the bot's existing CSS with your own theme file.

Create a bot-theme.css file, upload it to any static hosting site, and pass the URL of the CSS file in the properties config as shown below:

```json
"chatbotTheme": {
  "themeFilePath": ["https://s3-ap-southeast-1.amazonaws.com/your-company/css/bot-theme.css"]
}
```

Classes to override the theme

To override the font-family of the bot:

```css
html, body { font-family: 'Roboto' !important; }
```

To override the background of the bot messages container:

```css
.messages { /* Your css here */ }
```

To override the user chat bubble:

```css
.user-message-text { /* Your css here */ }
```

To override the bot chat bubble:

```css
.bot-message-text { /* Your css here */ }
```

To override the button:

```css
.button-div .btn-sm { /* Your css here */ }
```

NOTE: If the override doesn't take effect, add `!important`.

Properties

The following properties customize the WebSDK bot and enable or disable its features. They must be set when initializing the bot, as shown in Integration to Website.

There are two ways to configure the properties: pass them through index.js, as described in Integration to Website, or set them in INIT_DATA through the admin portal.

Theme - To change the theme to match your App/Website

"chatbotTheme": { "themeFilePath": ["https://s3-ap-southeast-1.amazonaws.com/your-company/css/bot-theme.css"] }Postlogin - Postlogin case is a scenario where the user is already logged in the parent app(bank's application). So morfeus-controller have to pass X-CSRF-TOKEN in init request to ensure it's a postlogin case.

Analytics - This feature is about adding analytics management to the websdk container.

// To enable analytics for your bot { "analyticsProvider": "ga", "crossDomains": ["yourbank.com", "ai.yourbank.com"], "enabled": Boolean, "ids": { 'ga': "UA-1XY169AA3-1" } }DestroyOnClose - This feature is about destroying the instance of the bot completely from the parent page containing the chatbot

destroyOnClose : BooleanIdle timeout - This feature logs out the user after a certain period of time if the user is logged in and is inactive for a certain period of time.

ideltimeout : Number (in milliseconds)Bot Dimension - This feature is used to specify the dimensions of the chatbot in parent container.

"desktop" : { "chatWidth": "value in %", "chatHeight": "value in %", "chatRight": "value in px", "chatBottom": "value in px" }Quick Tags - This feature is used to add quick replies list in the chatbot. This usually appears in the bottom section of the chatbot.

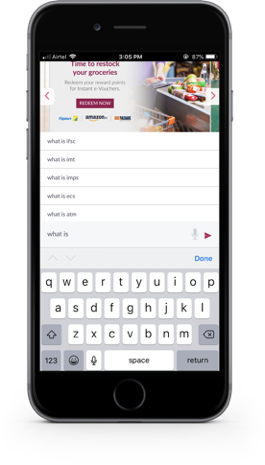

// To add quick tags for you bot "quickTags" : { "enable" : Boolean, "overrideDefaultTags" : Boolean, "tags" : Array, //[tag1, tag2, ..... tagn] }Auto suggest - This feature is used to present suggestions to user whenever user is typing a query in the chatbox. The auto suggest consists of the FAQ

"autoSuggestConfig" : { "enableSearchRequest" : Boolean, "enabled" : Boolean, "noOfLetters" : Number // to trigger the autosuggest after the number of letters }Show Postback Value - This feature is used to show the user selected value, ie; Button or List Item.

"showPostbackValue" : BooleanSlim Scroll - This feature is used to enable slim scroll bar for Internet Explorer browser

"enableSlimScroll" : BooleanPage Context - This feature is to pass the page details on where the bot resides, This is used for analytics on where the bot is residing.

"pageContext": StringLanguage

"i18nSupport" : { "enabled": Boolean, "langCode": "en" }NOTE: Supported languages - English[en], Hindi[hi], Spanish[es], Thai[th], Japanese[ja] & Chinese[zh]

Hide the text input box in the footer of the bot

"buttonFlow": BooleanDate Format - This feature is about keeping a particular date format when chatbot is showing last login date to the user

lastLoginDateFormat : "Date format in string"VoiceSdk - This feature is used for mic support in hybrid sdk for mobile based platforms This feature can be enabled/disabled in websdk by adding/removing a flag called voiceSdkConfig object in INIT_DATA object through admin panel of morfeuswebsdk.

"voiceSdkConfig" : { "enableVoiceSdkHint" : Boolean, "maxVoiceSdkHint" : Number, "speechConfidenceThreshold" : Number, "customWords" : Array of String, //array of banking terminology/abbreviation i.e not easily recognised by native support "speechRate":{ "androidVoiceRate" : Number [0 - 1], "iosVoiceRate" : Number [0 - 1] } }MicrosoftSpeechSdk - It uses Microsoft Speech API developed by Microsoft to allow the use of speech recognition and speech synthesis. Users can use its Text-To-Speech(TTS) And Speech-To-Text(STT) feature by enabling their respective flags.

"microsoftSpeechSdkConfig": { "enable": false, //set this flag to false to disable STT. "apiKey": "", "apiRegion": "", "continousMode": true, "speak": false, // set this flag to false to disable TTS "lang-config": { "en" : { "lang" : "en-US", "voiceName" : "en-US-ZiraRUS" }, "ar": { "lang" : "ar-EG", "voiceName" : "ar-EG-Hoda" } } },Show Postback Utterance - This feature is used trigger external message in websdk from parent container.

"showPostbackUtterance": BooleanShow Close Button On Postlogin - This feature is used to remove close button in post login scenario.

"showCrossOnPostLogin": BooleanLocation Blocked Message - This is used to display custom location blocked message if user has denied allow location pop up in browser

"locationBlockedMsg": StringLive Chat Notification - If the current tab is inactive and if a manual chat is received, a Notification is shown with a sound, If the sound file is not defined, it will play the default sound.

"tabSwitchNotification": Boolean, "tabSwitchNotificationSound": "path/to/mp3/file"

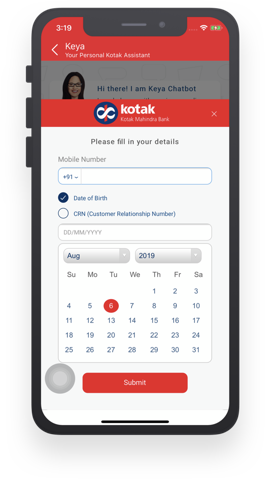

Webview

The WebSDK bot can open a webview. A webview is a separate project: a secured frame that renders as part of WebSDK and displays web pages within the WebSDK layout.

Common scenarios where a webview is helpful include a secured login page, a user profile update page, or an OTP entry screen.

Customizing Templates

WebSDK allows you to create custom templates. A custom template consists of three parts:

1. HTML elements
2. JavaScript
3. CSS

For example, to create a custom User Profile Update Form template:

1. Create a file `userProfileUpdate.js` in `morfeuswebsdk/src/main/webapp/js/`.
2. Create a file `userProfileUpdate.html` in `morfeuswebsdk/src/main/webapp/templates/custom/`.
3. The HTML file should consist of a Handlebars template; the CSS is included in this HTML inside a `<style>` tag.
4. Reference the `userProfileUpdate` function in the `adaptor.js` file, located in `morfeuswebsdk/src/main/webapp/js/`.
5. Add `userProfileUpdate.js` to the src section of the tags task in the morfeuswebsdk `Gruntfile.js`.
6. The WebSDK will then render the custom template.

Sample Snippet

userProfileUpdate.js

```js
(function(window){
  function userProfileUpdateAdaptor(templateName, response){
    var container = "messages";
    // Your code goes here
    loadDynamicCustomTemplate(templateName, container);
    appendTemplate(templateName, container, {});
  }
  window.userProfileUpdateAdaptor = userProfileUpdateAdaptor;
})(window);
```

userProfileUpdate.html

```html
<div class="user-profile-container">
  <!-- Your HTML code goes here -->
  <!-- You can include any jQuery plugin here -->
  <!-- Also supports Handlebars template -->
</div>
<style>
  /* Your CSS goes here */
</style>
```

adaptor.js

```js
(function(window){
  var adaptor = {
    // Existing Custom Templates
    "userProfileUpdateHTML" : function(templateName, data){
      userProfileUpdateAdaptor(templateName, data);
    }
  };
  window.adaptor = adaptor;
})(window);
```

Deployments and Updates

The latest WebSDK version is published to the npm repository. To get the new version of the WebSDK, check the current version on npm, update the version number in the Gruntfile.js of the generated bot, and then rebuild the bot using `mvn clean install`. This generates a new version of the bot.

Security

The WebSDK follows security guidelines based on industry best practices. It also has multiple layers of authentication and protects sensitive user data.

For additional security, every request can be sent with a security hash. To enable this, set an additional property in the index.js file:

```json
"sAPIKey": String
```

sAPIKey - When sAPIKey is set in index.js, a unique timeStamp is passed as a request parameter, and a hash built from the request parameters is set in a request header. The hash is generated using the HMAC-SHA1 algorithm.
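The signing step can be sketched in Node.js with the built-in `crypto` module. This is illustrative only: the guide does not document which parameters are hashed, how they are ordered or encoded, or which header carries the hash, so the sorted `key=value&...` canonical string, the `timeStamp` value format and the `X-Signature` header name below are assumptions. In a browser, the equivalent HMAC-SHA1 operation is `crypto.subtle.sign` with a key imported as `{ name: "HMAC", hash: "SHA-1" }`.

```javascript
const nodeCrypto = require("crypto");

// Builds a deterministic string from the request parameters (assumed scheme:
// keys sorted alphabetically, URI-encoded, joined as key=value&key=value).
function canonicalize(params) {
  return Object.keys(params)
    .sort()
    .map((key) => `${encodeURIComponent(key)}=${encodeURIComponent(String(params[key]))}`)
    .join("&");
}

// Adds a timeStamp parameter and an HMAC-SHA1 hash header to a request.
function signRequest(params, sAPIKey, timeStamp = Date.now()) {
  const signedParams = { ...params, timeStamp: String(timeStamp) };
  const hash = nodeCrypto
    .createHmac("sha1", sAPIKey)
    .update(canonicalize(signedParams))
    .digest("hex");
  // "X-Signature" is a hypothetical header name.
  return { params: signedParams, headers: { "X-Signature": hash } };
}

// Server-side counterpart: recompute the hash and compare in constant time.
function verifyRequest(params, sAPIKey, receivedHash) {
  const expected = Buffer.from(
    nodeCrypto.createHmac("sha1", sAPIKey).update(canonicalize(params)).digest("hex"),
    "hex"
  );
  const received = Buffer.from(String(receivedHash), "hex");
  // timingSafeEqual requires equal lengths, so check that first.
  return expected.length === received.length && nodeCrypto.timingSafeEqual(expected, received);
}
```

Sorting the keys makes the hash independent of the order in which parameters were added, so client and server compute the same string.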

RTL Support

The WebSDK supports multiple languages and extends that support to right-to-left (RTL) languages such as Arabic, Hebrew, Farsi and Urdu with a simple config:

```json
"i18nSupport": {
  "enabled": true,
  "langCode": "ar", // Arabic
  "rtl": Boolean
}
```
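If the language is chosen at runtime, the `rtl` flag can be derived from the language code. A minimal sketch, assuming the RTL languages are the ones named above (Arabic `ar`, Hebrew `he`, Farsi `fa`, Urdu `ur`); `buildI18nSupport` is a hypothetical helper, not a WebSDK function.

```javascript
// Right-to-left languages named in this guide: Arabic, Hebrew, Farsi, Urdu.
const RTL_LANGUAGES = new Set(["ar", "he", "fa", "ur"]);

// Hypothetical helper that builds the i18nSupport block for a language code.
function buildI18nSupport(langCode) {
  // Compare on the primary subtag so regional codes like "ar-EG" also match.
  const primary = String(langCode).toLowerCase().split("-")[0];
  return { enabled: true, langCode: langCode, rtl: RTL_LANGUAGES.has(primary) };
}
```

For example, `properties.i18nSupport = buildI18nSupport("ar");` before calling `websdk.initialize(properties)` would set `rtl` to `true`.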

Customizing Web

List Of Customisable Features:

- Common Templates

- Customer Specific Templates

- Icons

- Minimize button

- Close button

- Maximize button

- Minimized state (3rd state)

- Splash screen

- Popular query

- PostLogin and Prelogin

- Analytics

- Destroying the bot

- Start over chat

- Idle timeout

- Bot dimensions

- Uploading a photo

- Feedback icon

- Teach icon

- Last login date Format

- Emoji

- Slim scroll

- SSL Pinning

- SSE

- Push Notification

- Autosuggest

- Custom Webview Header

- Show Postback Utterance

- Custom Error

- Hide on Response

- Show Close Button On Post Login

- Focus On Query

- Location Blocked Message

- UnLinkify Email

- Custom Negative Feedback

- Postback on Related Faq

| Feature | Customisable | How |
|---|---|---|
| Common Templates | Yes | Common templates can be customised by writing CSS in vendor-theme-chatbox.css. |
| Customer Specific Templates | Yes | Customer-specific templates can be customised by writing CSS in vendor-theme-chatbox.css. |

| Feature | Customisable | How |
|---|---|---|
| Icons | Yes | Icons can be customised by replacing the icon references in index.css and the common templates. |

| Feature | Customisable | How |
|---|---|---|
| Minimize Button | Yes | The minimise button can be customised by changing its image reference in desktopHeaderTemplate.html and mobileHeaderTemplate.html in common templates, and its style values in vendor-theme-chatbox.css. |
| Close Button | Yes | The close button can be customised by changing its image reference in desktopHeaderTemplate.html and mobileHeaderTemplate.html in common templates, and its style values in vendor-theme-chatbox.css. |
| Maximize Button | Yes | This feature can be introduced in WebSDK by providing a flag in the project's index.js. It can be customised by changing minimizedStateTemplate.html in common templates and the style values in vendor-theme-chatbox.css. |

The flag to add to initParams in the project's index.js is:

```json
"minimizedState" : false
```

| Feature | Customisable | How |
|---|---|---|
| Splash Screen | Yes | Modify splashScreenTemplate.html in the common templates, and customize it as required by adding styles (CSS) in vendor-theme-chatbox.css. |

| Feature | Customisable | How |
|---|---|---|
| Popular Query - Displays a menu in the footer of the chatbot showing popular queries the user can ask the bot. | Yes | Can be customised by changing the display type of the submenu in the payload returned by the init call. |

| Feature | Customisable | How |
|---|---|---|
| Prelogin | No | Default feature. No customisation needed. |
| Postlogin | Yes | A post-login case is a scenario where the user is already logged in to the parent app (the bank's application), so morfeus-controller has to pass X-CSRF-TOKEN in the init request to identify it as a post-login case. |

| Feature | Customisable | How |
|---|---|---|
| Analytics - Adds analytics management to the WebSDK container. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the analytics flag object in the initParams config object of the project's index.js. Add the config structure below, with values of the types shown. |

```json
"analytics" : {
  "enabled" : true/false,
  "crossDomains" : [domain 1, domain 2, ...domain n],
  "ids" : {
    "analyticsServiceProviderName" : "apiKey"
  }
}
```

| Feature | Customisable | How |
|---|---|---|
| DestroyOnClose - Destroys the bot instance completely from the parent page containing the chatbot. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the destroyOnClose flag in the initParams config object of the project's index.js. Add the flag below. |

```json
"destroyOnClose" : true/false
```

| Feature | Customisable | How |
|---|---|---|
| Quick Tags - Adds a list of quick replies to the chatbot, usually shown in its bottom section. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the quickTags flag object in the initParams config object of the project's index.js. It can be further customised by changing the payload of the init network call made after the bot is rendered, to load quick tag options dynamically from the server. If overrideDefaultTags is set to false, the quick tags can be modified by the server response's quick_replies; if the server response is empty, the quick tags are loaded from index.js. Add the flag object below. |

```json
"quickTags" : {
  "enabled" : true/false,
  "overrideDefaultTags" : true/false,
  "tags" : ["tag1", "tag2", ..., "tagn"]
}
```
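One reading of the override rule described above, written as a small function. This is an interpretation of the prose, not WebSDK source code: `resolveQuickTags` is hypothetical, and it assumes `overrideDefaultTags: true` means the index.js tags are always used. The samples in this guide spell the on/off key both `enable` and `enabled`, so the sketch accepts either.

```javascript
// Hypothetical helper: an interpretation of the quick-tag rules above, not WebSDK source.
function resolveQuickTags(quickTagsConfig, serverQuickReplies) {
  // The samples in this guide use both "enable" and "enabled"; accept either.
  const isEnabled = quickTagsConfig.enabled !== undefined
    ? quickTagsConfig.enabled
    : quickTagsConfig.enable;
  if (!isEnabled) {
    return [];
  }
  const localTags = Array.isArray(quickTagsConfig.tags) ? quickTagsConfig.tags : [];
  if (quickTagsConfig.overrideDefaultTags) {
    // Assumption: when overriding, the tags from index.js are always used.
    return localTags;
  }
  // Prefer quick_replies from the server response; fall back to index.js when empty.
  const fromServer = Array.isArray(serverQuickReplies) ? serverQuickReplies : [];
  return fromServer.length > 0 ? fromServer : localTags;
}
```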

| Feature | Customisable | How |
|---|---|---|
| Start Over Chat - Reinitiates the chatbot, clearing all current session chat messages (if required) and the current user login session. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the startOverConfig flag object in the initParams config object of the project's index.js. Add the flag object below. |

```json
"startOverConfig" : {
  "clearMessage" : true/false
}
```

| Feature | Customisable | How |
|---|---|---|
| Idle timeout - Logs out a logged-in user after a set period of inactivity. | Yes | The idle timeout in WebSDK can be changed by setting the idleTimeout value, in milliseconds, in the initParams config object of the project's index.js. Add the flag below. |

```json
"idleTimeout" : idleTimeoutValueInMilliseconds
```
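How an idle timeout is typically tracked can be sketched as follows. This is a generic pattern, not WebSDK's implementation: `createIdleTracker` is hypothetical, and the clock is injected so the logic can be tested without real timers. The value is in milliseconds, matching `1000 * 60 * 15` (15 minutes) in the index.js sample.

```javascript
// Hypothetical idle tracker; `now` defaults to Date.now but can be replaced in tests.
function createIdleTracker(idleTimeoutMs, now = Date.now) {
  let lastActivity = now();
  return {
    // Call on every user interaction (key press, click, message sent).
    touch() {
      lastActivity = now();
    },
    // True once the user has been inactive for at least idleTimeoutMs.
    isIdle() {
      return now() - lastActivity >= idleTimeoutMs;
    },
  };
}
```

In a page, you would call `touch()` from input and click handlers and check `isIdle()` on a `setInterval`, logging the user out when it returns true.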

| Feature | Customisable | How |
|---|---|---|
| Bot Dimension - Specifies the dimensions of the chatbot in the parent container. | Yes | The dimensions and position of the chat box window inside the parent container can be managed by providing the desktop flag object in the initParams config object. Add the flag below. |

```json
"desktop" : {
  "chatWidth" : "value in %",
  "chatHeight" : "value in %",
  "chatRight" : "value in px",
  "chatBottom" : "value in px"
}
```

| Feature | Customisable | How |
|---|---|---|
| Upload Image - Uploads the user's image in the chat box. | Yes (only style) | This is customisable only in look and feel, by changing the editImage modal in chatBoxTemplate.html in common templates. |

| Feature | Customisable | How |
|---|---|---|
| Teach Icon | Yes | The icon can be changed by replacing it with the desired image in the project's images folder and updating feedbackTemplate.html in common templates. |
| Feedback Icons | Yes | The icons can be changed by replacing them with the desired images in the project's images folder and updating feedbackTemplate.html in common templates. |

| Feature | Customisable | How |
|---|---|---|
| Date Format - Sets the date format used when the chatbot shows the user's last login date. | Yes | Set the lastLoginDateFormat flag in the initParams object of the project's index.js. Add the flag below. |

```json
"lastLoginDateFormat" : "date format as a string"
```
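The guide does not list which format tokens lastLoginDateFormat accepts. As an illustration only, the sketch below formats a date with a hypothetical token set (`YYYY`, `MM`, `DD`, `HH`, `mm`); `formatLastLogin` is not a WebSDK function, so check the tokens your WebSDK version supports before relying on any of them.

```javascript
// Hypothetical formatter; the token set is an assumption, not the documented one.
function formatLastLogin(date, format) {
  const pad = (n) => String(n).padStart(2, "0");
  // Values use the local time zone.
  const tokens = {
    YYYY: String(date.getFullYear()),
    MM: pad(date.getMonth() + 1), // getMonth() is zero-based
    DD: pad(date.getDate()),
    HH: pad(date.getHours()),
    mm: pad(date.getMinutes()),
  };
  // Replace each token occurrence; MM (month) and mm (minutes) differ by case.
  return format.replace(/YYYY|MM|DD|HH|mm/g, (token) => tokens[token]);
}
```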

| Feature | Customisable | How |
|---|---|---|
| Emoji - Supports emojis in WebSDK. | Yes | 1. This feature can be enabled/disabled in WebSDK by adding/removing the enableEmoji flag in the initParams config object of the project's index.js. 2. The emojis supported in WebSDK are listed in the emoji array flag, where each element is an array of emojis; this is also added to the initParams config object of the project's index.js. The markup and style values of the emoji box can be changed by customising emojiTemplate.html in common templates and vendor-theme-chatbox.css respectively. |

For point 1, add this flag:

```json
"enableEmoji" : true/false
```

For point 2, add this flag:

```json
"emoji" : [
  ["emoji code1", "emoji code2", "emoji code3", ..., "emoji code n"],
  ["emoji code1", "emoji code2", "emoji code3", ..., "emoji code n"],
  ...
  ["emoji code1", "emoji code2", "emoji code3", ..., "emoji code n"]
]
```

| Feature | Customisable | How |
|---|---|---|
| SSL Pinning - Implemented in the mobile implementation of WebSDK; it is a security check on certificates for network calls. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the sslEnabled flag in the initParams config object of the project's index.js. Add the flag below. |

```json
"sslEnabled" : true/false
```

| Feature | Customisable | How |
|---|---|---|
| Server-Sent Events - Used when the chatbot cannot continue the chat with the user and the chat is transferred to a human agent. | No | Not customisable. |

| Feature | Customisable | How |
|---|---|---|
| Push Notification - Sends push notification updates to the user. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the pushConfig object in the INIT_DATA object through the morfeuswebsdk admin panel. |

| Feature | Customisable | How |
|---|---|---|
| AutoSuggest - Presents suggestions to the user while they type a query in the chat box. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the autoSuggestConfig object in the INIT_DATA object through the morfeuswebsdk admin panel. A sample config is shown below. |

```json
"autoSuggestConfig" : {
  "enableSearchRequest" : true/false,
  "enabled" : true/false,
  "noOfLetters" : number
}
```
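The `noOfLetters` setting means suggestions are requested only after the user has typed that many letters. A minimal sketch of that check; `shouldRequestSuggestions` is hypothetical, and it assumes leading and trailing spaces are not counted and that a missing `noOfLetters` defaults to 1.

```javascript
// Hypothetical check run on each keystroke before requesting suggestions.
function shouldRequestSuggestions(query, autoSuggestConfig) {
  if (!autoSuggestConfig || !autoSuggestConfig.enabled) {
    return false;
  }
  // Assumption: default to 1 letter when noOfLetters is missing or invalid.
  const configured = Number(autoSuggestConfig.noOfLetters);
  const minLetters = configured > 0 ? configured : 1;
  // Assumption: surrounding whitespace does not count towards the threshold.
  return query.trim().length >= minLetters;
}
```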

| Feature | Customisable | How |
|---|---|---|
| VoiceSdk - Provides mic support in the hybrid SDK on mobile platforms. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the voiceSdkConfig object in the INIT_DATA object through the morfeuswebsdk admin panel. A sample payload config is shown below. |

```json
"voiceSdkConfig" : {
  "enableVoiceSdkHint" : true/false,
  "maxVoiceSdkHint" : number of hints,
  "speechConfidenceThreshold" : threshold value
}
```

| Feature | Customisable | How |
|---|---|---|
| Custom Webview Header - Inserts a customised webview header template. | Yes | This feature depends on a template, which can be customised by changing webviewHeaderTemplate.html in common templates and the CSS styles in vendor-theme-chatbox.css. It can be enabled/disabled in WebSDK by adding/removing the customWebviewHeader flag in the initParams config object of the project's index.js. Add the flag object below to the initParams config object. |

```json
"customWebviewHeader" : {
  "enable" : true/false
}
```

| Feature | Customisable | How |
|---|---|---|
| Show Postback Utterance - Triggers an external message in WebSDK from the parent container. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the showPostbackUtterance flag in the initParams config object of the project's index.js. |

Add the flag below to the initParams config object; its value is a Boolean:

```json
"showPostbackUtterance" : true/false
```

| Feature | Customisable | How |
|---|---|---|
| Custom Errors - Handles various network response error scenarios in WebSDK. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the customErrors flag in the initParams config object of the project's index.js. For different HTTP error statuses, the project can define different messages in the handleErrorResponse function of the preflight.js file. Add the flag below to the initParams config object. |

```json
"customErrors" : true/false
```
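The guide names `handleErrorResponse` in preflight.js but not its signature. Assuming it receives the HTTP status code and returns the message to show, a status-to-message mapping could look like the sketch below; the parameter, return value and message texts are all hypothetical, so adapt them to the function your preflight.js actually defines.

```javascript
// Hypothetical sketch: the real handleErrorResponse signature in preflight.js may differ.
const ERROR_MESSAGES = {
  401: "Your session has expired. Please log in again.",
  403: "You are not authorised to perform this action.",
  404: "The requested service was not found.",
  503: "The service is temporarily unavailable. Please try again shortly.",
};

function handleErrorResponse(status) {
  // Prefer a message defined for the exact status code.
  if (Object.prototype.hasOwnProperty.call(ERROR_MESSAGES, status)) {
    return ERROR_MESSAGES[status];
  }
  // Otherwise fall back to a generic message for the status class.
  if (status >= 500) return "Something went wrong on our side. Please try again later.";
  if (status >= 400) return "There was a problem with your request.";
  // Status 0 or anything unexpected usually means the request never reached the server.
  return "Unable to reach the server. Please check your connection.";
}
```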

| Feature | Customisable | How |
|---|---|---|
| Hide On Response - Minimises the chatbot during a conversation, depending on the template type in the network response. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the hideOnResponseTemplates flag in the initParams config object of the project's index.js. Add the flag below to the initParams config object. |

```json
"hideOnResponseTemplates" : ["templateName1", "templateName2", ..., "templateName n"]
```

| Feature | Customisable | How |
|---|---|---|
| Show Close Button On Postlogin - Removes the close button in the post-login scenario. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the showCrossOnPostLogin flag in the initParams config object of the project's index.js. Add the flag below to the initParams config object. |

```json
"showCrossOnPostLogin" : true/false
```

| Feature | Customisable | How |
|---|---|---|
| Location Blocked Message - Displays a custom message if the user has denied the browser's location permission pop-up. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the locationBlockedMsg flag in the initParams config object of the project's index.js. Add the flag below to the initParams config object. |

```json
"locationBlockedMsg" : "Your custom location blocked message"
```

| Feature | Customisable | How |
|---|---|---|
| Slim Scroll - Enables a slim scroll bar in Internet Explorer. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the enableSlimScroll flag in the initParams config object of the project's index.js. Add the flag below to the initParams config object. |

```json
"enableSlimScroll" : true/false
```

| Feature | Customisable | How |
|---|---|---|
| Unlinkify Email - Removes the link behaviour from email addresses shown in WebSDK cards. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the unLinkifyEmail flag in the initParams config object of the project's index.js. Add the flag below to the initParams config object. |

```json
"unLinkifyEmail" : true/false
```

| Feature | Customisable | How |
|---|---|---|
| Focus On Query - Focuses the input box if the last message in WebSDK is text. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the focusOnQuery flag in the initParams config object of the project's index.js. Add the flag below to the initParams config object. |

```json
"focusOnQuery" : true/false
```

| Feature | Customisable | How |
|---|---|---|
| Custom Negative Feedback - Loads a dynamic feedback template from a network call instead of the default feedback modal. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the customNegativeFeedback flag in the initParams config object of the project's index.js. Add the flag below to the initParams config object. |

```json
"customNegativeFeedback" : true/false
```

| Feature | Customisable | How |
|---|---|---|
| Postback on Related FAQ - Used when the payload type is RELATED_FAQ and the messageType in the network request body needs to be postback. | Yes | This feature can be enabled/disabled in WebSDK by adding/removing the postbackOnRelatedFaq flag in the initParams config object of the project's index.js. Add the flag below to the initParams config object. |

```json
"postbackOnRelatedFaq" : true/false
```

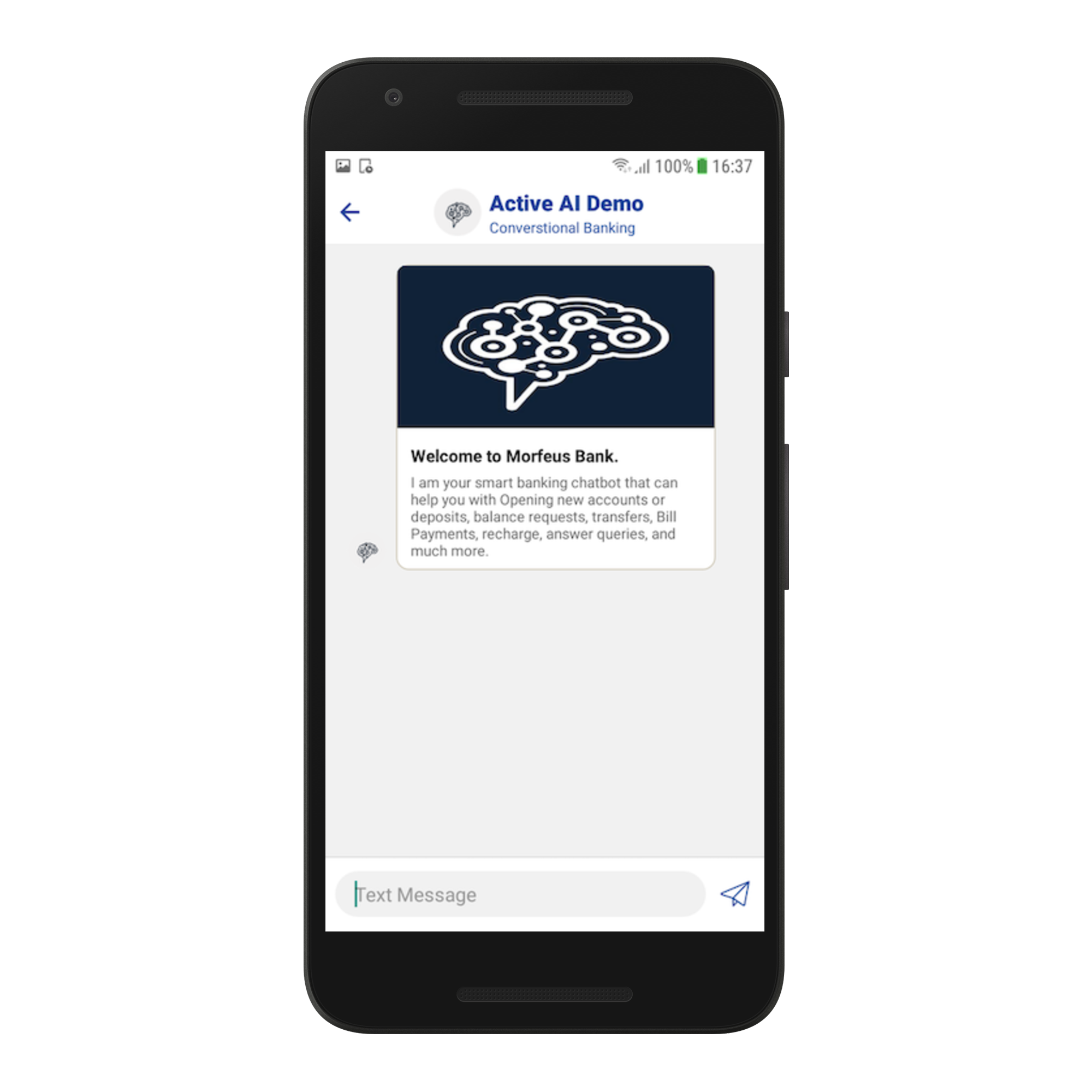

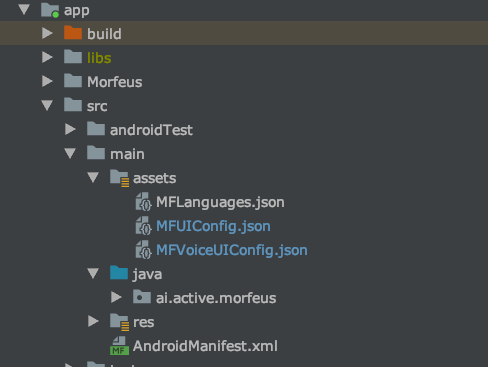

Morfeus Android Hybrid SDK

Key features

Morfeus Hybrid SDK is a lightweight messaging SDK that can be embedded easily in websites and hybrid mobile apps with minimal integration effort. Once integrated, it allows end users to converse with the conversational AI bot on the Active AI platform using both text and voice. WebSDK has out-of-the-box support for multiple UX templates, such as List and Carousel, and supports extensive customization of both UX and functionality.

Supported Components

Refer to Supported Components in the WebSDK section.

Device Support

All Android phones running Android 4.4.0 or above.

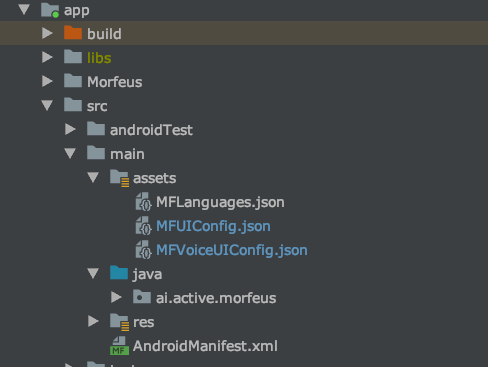

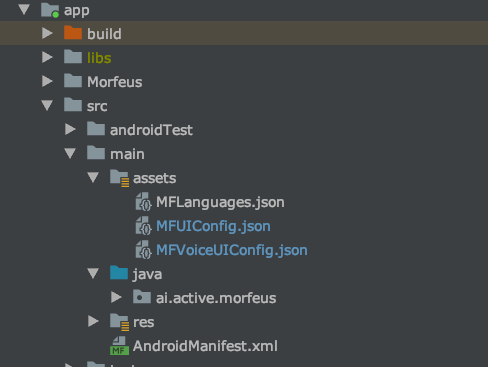

Setup and how to build

Before starting the steps below, refer to the WebSDK setup section above (Setup using CLI).

1. Pre Requisites

- Android Studio 2.3+

2. Install and configure dependencies

Add the following lines to your project-level build.gradle file.

allprojects {

repositories {

google()

jcenter()

maven {

url "http://repo.active.ai/artifactory/resources/android-sdk-release"

credentials {

username = "artifactory_username"

password = "artifactory_password"

}

}

}

}

To install the SDK, add the following configuration to your module-level (app) build.gradle file.

dependencies {

...

// Voice SDK

implementation 'com.morfeus.android.voice:MFSDKVoice:2.4.10'

// gRPC

implementation 'io.grpc:grpc-okhttp:1.18.0'

implementation 'io.grpc:grpc-protobuf-lite:1.18.0'

implementation 'io.grpc:grpc-stub:1.18.0'

implementation 'io.grpc:grpc-android:1.18.0'

implementation 'javax.annotation:javax.annotation-api:1.2'

// OAuth2 for Google API

implementation('com.google.auth:google-auth-library-oauth2-http:0.7.0') {

exclude module: 'httpclient'

}

// Morfeus SDK

implementation 'com.morfeus.android:MFSDKHybridKit:2.4.20'

implementation 'com.morfeus.android:MFOkHttp:3.12.0'

implementation 'com.google.guava:guava:22.0-android'

implementation 'com.android.support:appcompat-v7:28.0.0'

implementation 'com.android.support:design:28.0.0'

...

}

Note: If you get a 64K method limit exception at compile time, add the following code to your app-level build.gradle file.

android {

defaultConfig {

multiDexEnabled true

}

}

dependencies {

implementation 'com.android.support:multidex:1.0.1'

}

Integration to Application

You can integrate the chatbot on any screen of your application. Integration is divided into two scenarios:

- Public

- Post Login

1. Public

Initialize the SDK

To initialize the Morfeus SDK, pass the BOT_ID, BOT_NAME, and END_POINT_URL you were given to MFSDKMessagingManagerKit through MFSDKProperties. Initialize the Morfeus SDK only once across the entire application.

Add the following lines to the Activity or Application where you want to initialize the Morfeus SDK. The onCreate() method of your Application class is the best place to do this. If MFSDK is already initialized, initializing it again throws MFSDKInitializationException.

try {

// Properties to pass before initializing sdk

MFSDKProperties properties = new MFSDKProperties

.Builder(END_POINT_URL)

.addBot(BOT_ID, BOT_NAME)

.setSpeechAPIKey(SPEECH_API_KEY)

.build();

// sMFSDK is public static field variable

sMFSDK = new MFSDKMessagingManagerKit

.Builder(activityContext)

.setSdkProperties(properties)

.build();

// Initialize sdk

sMFSDK.initWithProperties();

} catch (MFSDKInitializationException e) {

Log.e(TAG, "Failed to initialize MFSDK", e);

}
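Because initializing MFSDK a second time throws MFSDKInitializationException, it can help to route initialization through a small guard that runs it at most once per process. The class below is a hypothetical helper, not part of MFSDK. In an app, the Runnable would wrap the whole try/catch block above, since a Runnable cannot throw the checked MFSDKInitializationException.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical helper (not part of MFSDK): runs an initialization action at most once. */
final class InitOnce {
    private final AtomicBoolean started = new AtomicBoolean(false);

    /**
     * Runs {@code action} on the first call only and returns true.
     * Later calls return false without running anything.
     */
    boolean run(Runnable action) {
        // compareAndSet lets exactly one caller win, even across threads.
        if (!started.compareAndSet(false, true)) {
            return false;
        }
        try {
            action.run();
            return true;
        } catch (RuntimeException e) {
            started.set(false); // allow a later call to retry after an unchecked failure
            throw e;
        }
    }

    /** True once an initialization attempt has started and has not failed with an exception. */
    boolean isStarted() {
        return started.get();
    }
}
```

An MFSDKInitializationException caught inside the Runnable still counts as an attempt; only unchecked exceptions that escape the action reset the guard.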

Properties:

| Property | Description |

|---|---|

| BOT_ID | The unique ID for the bot. |

| BOT_NAME | The bot name to display on top of chat screen. |

| END_POINT_URL | The bot API URL. |

| SPEECH_API_KEY | The Google speech API key used for voice input (see Adding Voice Feature). |

Invoke Chat Screen

To invoke the chat screen, call the showScreen() method of MFSDKMessagingManagerKit. Here, sMFSDK is the MFSDKMessagingManagerKit instance created during initialization.

// Open chat screen

sMFSDK.showScreen(activityContext, BOT_ID);

You can get the instance of MFSDKMessagingManagerKit by calling its getInstance() method. Make sure MFSDK is initialized before calling getInstance(), as shown in the following code snippet.

try {

// Get SDK instance

MFSDKMessagingManager mfsdk = MFSDKMessagingManagerKit.getInstance();

// Open chat screen

mfsdk.showScreen(activityContext, BOT_ID);

} catch (Exception e) {

// getInstance() throws an exception if MFSDK has not been initialized.

}

Compile and Run

Once the above code is added, you can build and run your application. When the chat screen launches, a welcome message is displayed.

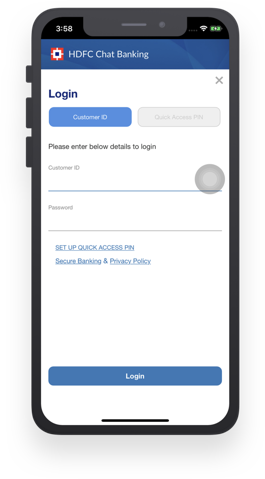

2. Post Login

You can pass a set of user and session information to MFSDK using the MFSDKSessionProperties builder. In the following example, the customer ID and session ID are passed to MFSDK.

HashMap<String, String> userInfoMap = new HashMap<>();

userInfoMap.put("CUSTOMER_ID", customerID);

userInfoMap.put("SESSION_ID", sessionID); // Pass your app sessionID

MFSDKSessionProperties sessionProperties = new MFSDKSessionProperties

.Builder()

.setUserInfo(userInfoMap)

.build();

// Open chat screen

mMFSdk.showScreen(LoginActivity.this, BOT_ID, sessionProperties);

Note: If encryption of the keys is required, make sure customerID and sessionID are each no longer than 256 bytes.
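To respect the 256-byte limit when encryption is required, you can check each value's encoded size before adding it to the user-info map. This is a minimal sketch, assuming the limit applies to the UTF-8 bytes of each value; UserInfoLimits and putChecked are hypothetical names, not MFSDK APIs.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;

/** Hypothetical helper (not part of MFSDK) enforcing the 256-byte limit on user-info values. */
final class UserInfoLimits {
    /** Maximum size of each value, in UTF-8 bytes, when key encryption is required. */
    static final int MAX_VALUE_BYTES = 256;

    private UserInfoLimits() {}

    /** Puts value into userInfo, or throws if its UTF-8 encoding exceeds the limit. */
    static void putChecked(Map<String, String> userInfo, String key, String value) {
        int size = value.getBytes(StandardCharsets.UTF_8).length;
        if (size > MAX_VALUE_BYTES) {
            throw new IllegalArgumentException(
                    key + " is " + size + " bytes; the limit is " + MAX_VALUE_BYTES + " bytes");
        }
        userInfo.put(key, value);
    }
}
```

In the Post Login example, the two put calls would become putChecked(userInfoMap, "CUSTOMER_ID", customerID) and putChecked(userInfoMap, "SESSION_ID", sessionID). Note that non-ASCII characters take more than one byte, so a value can exceed the limit with fewer than 256 characters.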

Properties

Setting Chat Screen Header

The Morfeus Android SDK can display the chat screen header as a native header. To enable the native header, set MFSDKProperties.Builder.showNativeHeader(boolean) to true, as shown in the code snippet below.

// Enable Native Header

MFSDKProperties.Builder sdkPropertiesBuilder = new MFSDKProperties

.Builder(BOT_URL)

…

.showNativeHeader(true)

…

.build();

You can configure native header properties using the setHeader method of MFSDKSessionProperties. Create an MFSDKHeader object and set the properties you need. The following example sets a background color, a title, and left and right buttons.

To use a custom font for the header title, copy your custom .ttf font file to the assets/fonts folder and pass the font name to the setTitleFontName(fontName) method, as shown in the code snippet below.

MFSDKHeader header = new MFSDKHeader();

header.setHeaderHeight(MFSDKHeader.WRAP_CONTENT);

// Hex color code

header.setBackgroundColor("#CD1C5F");

header.setTitle("ActiveAI Bot!");

// Keep font file under assets/fonts folder

header.setTitleFontName("Lato-Medium.ttf");

header.setTitleColor("#FFFFFF");

header.setTitleFontSize(20); // unit is sp

header.setTitleAlignment(MFSDKHeader.Alignment.CENTER_ALIGN);

header.setLeftButtonImage("ic_action_nav");

header.setLeftButtonAction(MFSDKHeader.ButtonAction.NAVIGATION_BUTTON);

header.setRightButtonImage("ic_action_home");

header.setRightButtonAction(MFSDKHeader.ButtonAction.HOME_BUTTON);

MFSDKSessionProperties sessionProperties = new MFSDKSessionProperties.Builder()

...

.setHeader(header)

...

.build();

Handling Logout Event

To receive the logout event, register an MFLogoutListener with MFSDK. MFSDK calls the onLogout(int logoutType) method when the user logs out of the current chat session. MFSDK supports the following logout types.

| Logout Type | Logout Code |

|---|---|

| Auto logout / Inactivity Timeout | 1001 |

| Forced logout | 1002 |

Please check the following code sample.

//Note: mMFSdk is an instance variable of MFSDKMessagingManagerKit

mMFSdk.setLogoutListener(new MFLogoutListener() {

@Override

public void onLogout(int logoutType) {

// Handle the logout; see the table above for the logoutType codes

}

});
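Inside onLogout, the integer code can be mapped to an app-level type before deciding what to do (for example, returning to a login screen). The enum below is a hypothetical, app-side sketch built only from the two codes in the logout table; it is not part of MFSDK, and any other code is reported as UNKNOWN rather than guessed.

```java
/** Hypothetical app-side mapping (not part of MFSDK) of the logout codes in the table. */
enum LogoutType {
    INACTIVITY_TIMEOUT(1001), // Auto logout / inactivity timeout
    FORCED(1002),             // Forced logout
    UNKNOWN(-1);              // Any code not listed in the table

    private final int code;

    LogoutType(int code) {
        this.code = code;
    }

    int code() {
        return code;
    }

    /** Returns the matching type, or UNKNOWN for codes not in the table. */
    static LogoutType fromCode(int code) {
        for (LogoutType type : values()) {
            if (type != UNKNOWN && type.code == code) {
                return type;
            }
        }
        return UNKNOWN;
    }
}
```

For example, onLogout could call LogoutType.fromCode(logoutType) and switch on the result, keeping a default branch for UNKNOWN.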

Deeplink Callback

You can deep link your application with MFSDK using the MFSDKMessagingHandler interface. MFSDK calls the handler's callback methods as needed. MFSearchResponseModel represents the information the application needs to perform the deep link.

MFSDKProperties properties = new MFSDKProperties.Builder(BASE_URL)

.setMFSDKMessagingHandler(new MFSDKMessagingHandler() {

@Override

public void onSearchResponse(MFSearchResponseModel model) {

// Navigate to the application screen described by the model

}

@Override

public void onSSLCheck(String url, String requestCode) {

// No-op

}

@Override

public void onChatClose() {

// Retrieve callback when chat screen is closed

}

@Override

public void onEvent(String eventType, String payloadMap) {

// No-op

}

@Override

public void onHomemenubtnclick() {

// Retrieve home button click event

}

})

.build();
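A common way to implement onSearchResponse is a lookup from a menu code to a native screen. This is a minimal sketch, assuming your app can read a menu code string from MFSearchResponseModel (the iOS section of this guide describes keyValue, menuCode, and payload properties; the Android accessor is not shown here). DeepLinkRouter and the sample codes in the test are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical app-side router (not part of MFSDK) from a menu code to a screen name. */
final class DeepLinkRouter {
    private final Map<String, String> destinations = new HashMap<>();

    /** Registers the destination (for example, an Activity name) for a menu code. */
    DeepLinkRouter register(String menuCode, String destination) {
        destinations.put(menuCode, destination);
        return this;
    }

    /** Returns the destination for the menu code, or empty if the code is null or unknown. */
    Optional<String> resolve(String menuCode) {
        if (menuCode == null) {
            return Optional.empty();
        }
        return Optional.ofNullable(destinations.get(menuCode));
    }
}
```

Registering codes once at startup and starting a screen only when resolve returns a value means an unexpected code from the bot is ignored rather than causing a crash.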

Adding Voice Feature

If you haven't already added the dependencies required for voice, add the following dependencies to your module-level (app) build.gradle file. Two speech recognition providers are supported, and you can use either of them:

- Google Speech Recognition

- Android Native Speech Recognition

a. Google Speech Recognition

Add the following configuration to your module-level (app) build.gradle file.

dependencies {

...

// Voice SDK dependencies

implementation 'com.morfeus.android.voice:MFSDKVoice:2.4.10'

implementation 'io.grpc:grpc-okhttp:1.18.0'

implementation 'io.grpc:grpc-protobuf-lite:1.18.0'

implementation 'io.grpc:grpc-stub:1.18.0'

implementation 'io.grpc:grpc-android:1.18.0'

implementation 'javax.annotation:javax.annotation-api:1.2'

implementation('com.google.auth:google-auth-library-oauth2-http:0.7.0') {

exclude module: 'httpclient'

}

...

}

To use the Google Cloud Speech feature, set the Google speech API key through the setSpeechAPIKey(String apiKey) method of the MFSDKProperties builder.

try {

// Set speech API key

MFSDKProperties properties = new MFSDKProperties

.Builder(END_POINT_URL)

...

.setSpeechAPIKey("YourSpeechAPIKey")

...

.build();

} catch (MFSDKInitializationException e) {

Log.e("MFSDK", e.getMessage());

}

// Set speech provider type

MFSDKSessionProperties properties = new MFSDKSessionProperties

.Builder()

...

.setSpeechProviderForVoice(

MFSDKSessionProperties.SpeechProviderForVoice.GOOGLE_SPEECH_PROVIDER)

.setSpeechToTextLanguage("en-IN")

...

.build();

b. Android Speech Recognition

Add the following configuration to your module-level (app) build.gradle file.

dependencies {

...

// Voice SDK

implementation 'com.morfeus.android.voice:MFSDKVoice:2.4.10'

// OAuth2 for Google API

implementation('com.google.auth:google-auth-library-oauth2-http:0.7.0') {

exclude module: 'httpclient'

}

// Morfeus SDK

implementation 'com.morfeus.android:MFSDKHybridKit:2.4.10'

implementation 'com.morfeus.android:MFOkHttp:3.12.0'

implementation 'com.android.support:appcompat-v7:28.0.0'

implementation 'com.android.support:design:28.0.0'

implementation 'com.google.code.gson:gson:2.8.6'

implementation 'io.grpc:grpc-okhttp:1.18.0'

implementation 'com.google.guava:guava:22.0-android'

...

}

To use native Android speech recognition, pass ANDROID_SPEECH_PROVIDER to the setSpeechProviderForVoice method of MFSDKSessionProperties.

// Set speech provider type

MFSDKSessionProperties properties = new MFSDKSessionProperties

.Builder()

...

.setSpeechProviderForVoice(

MFSDKSessionProperties.SpeechProviderForVoice.ANDROID_SPEECH_PROVIDER)

.setSpeechToTextLanguage("en-IN")

...

.build();

Using Offline Mode (Optional)

Android can perform speech recognition offline, that is, when the device has no internet connection.

To use this feature, set enableNativeVoiceOffline to true. When it is true, the framework uses the device's default voice recognition package, which reduces recognition accuracy. We therefore recommend not using offline mode, because it degrades the user experience. Offline mode is false by default.

// Set speech provider type

MFSDKSessionProperties properties = new MFSDKSessionProperties

.Builder()

...

.setSpeechProviderForVoice(

MFSDKSessionProperties.SpeechProviderForVoice.ANDROID_SPEECH_PROVIDER)

.enableNativeVoiceOffline(true) // Enables offline mode; false by default

.setSpeechToTextLanguage("en-IN")

...

.build();

c. Set Speech-To-Text language

In MFSDKHybridKit, English (India) is the default Speech-To-Text language. You can change the STT language by passing a valid language code to the setSpeechToTextLanguage("lang-Country") method of MFSDKSessionProperties.Builder. You can find a list of supported language codes here.

// Set speech to text language

MFSDKSessionProperties properties = new MFSDKSessionProperties

.Builder()

...

.setSpeechToTextLanguage("en-IN")

...

.build();

d. Set Text-To-Speech language

English (India) is the default Text-To-Speech language. You can change the TTS language by passing a valid language code to the setTextToSpeechLanguage("lang-Country") method of MFSDKSessionProperties.Builder. You can find a list of supported language codes here.

// Set text to speech language

MFSDKSessionProperties properties = new MFSDKSessionProperties

.Builder()

...

.setTextToSpeechLanguage("en_IN")

...

.build();
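The Android snippets above write the language as "en-IN" for speech-to-text and "en_IN" for text-to-speech. If your app stores the language in one form, java.util.Locale can convert between the hyphenated BCP 47 tag and the underscore form. Which form each MFSDK setter expects is not confirmed by this guide, so verify it against the SDK; the LanguageCodes class is a hypothetical helper.

```java
import java.util.Locale;

/** Hypothetical helper (not part of MFSDK) converting between "en-IN" and "en_IN" forms. */
final class LanguageCodes {
    private LanguageCodes() {}

    /** Returns the hyphenated BCP 47 form, e.g. "en_IN" becomes "en-IN". */
    static String toLanguageTag(String code) {
        return Locale.forLanguageTag(code.replace('_', '-')).toLanguageTag();
    }

    /** Returns the underscore (Locale.toString) form, e.g. "en-IN" becomes "en_IN". */
    static String toUnderscoreForm(String code) {
        return Locale.forLanguageTag(code.replace('_', '-')).toString();
    }
}
```

Parsing through Locale also normalizes letter case, so a value such as "EN-in" comes back in the canonical form.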

Enable Analytics

By default, analytics is disabled in the SDK. To enable analytics, call enableAnalytics(true) and pass the analytics provider and ID to MFSDKProperties, as shown in the following code snippet.

try {

// Pass analytics properties

MFSDKProperties properties = new MFSDKProperties

.Builder(END_POINT_URL)

...

.enableAnalytics(true)

.setAnalyticsProvider("Your Analytics provider code")

.setAnalyticsId("Your Analytics ID")

...

.build();

} catch (MFSDKInitializationException e) {

Log.e("MFSDK", e.getMessage());

}

Close Chatbot

Call the closeScreen(String botId, boolean withAnimation) method of MFSDKMessagingManager to close the chat screen with or without an exit animation. Set the second parameter to false to close the screen without animation.

// Close chat screen without exit animation

sMFSDK.closeScreen(BOT_ID, false);

Customising WebSDK

Please refer to the corresponding section of the WebSDK guide.

Customizing WebViews

Please refer to the corresponding section of the WebSDK guide.

Deployments & Updates

Please refer to the corresponding section of the WebSDK guide.

Security

SSL Pinning

MFSDK supports SSL (Secure Sockets Layer) to enable encrypted communication between client and server. MFSDK doesn't trust SSL certificates stored in the device's trust store; instead, it trusts only the certificates passed to MFSDKProperties.

Please check the following code snippet to enable SSL pinning.

MFSDKProperties sdkProperties = new MFSDKProperties

.Builder(BOT_END_POINT_URL)

.enableSSL(true, new String[] {PUBKEY_CA}) // Enable SSL check

...

.build();

When SSL is enabled, MFSDK verifies the server against the certificates passed to MFSDKProperties.

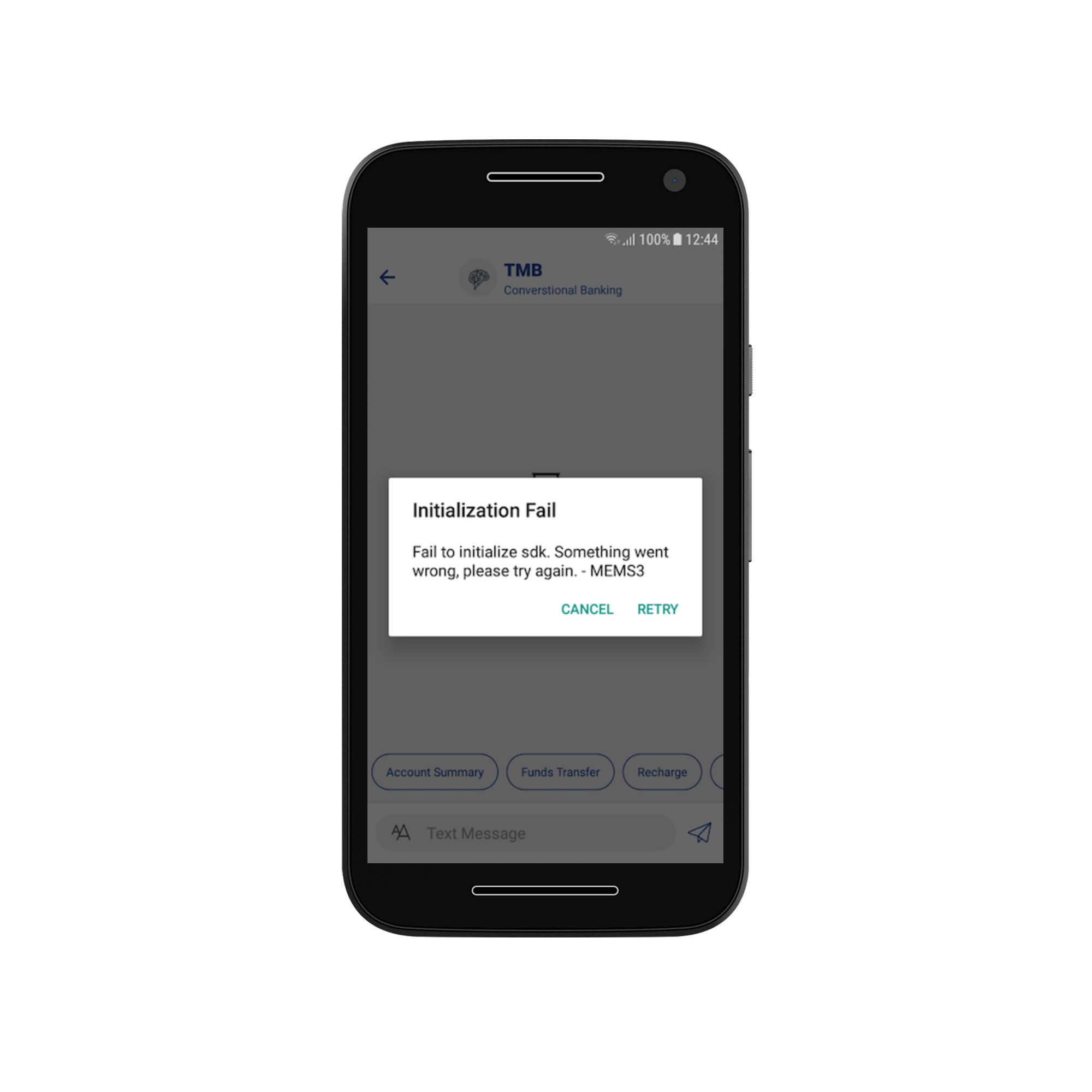

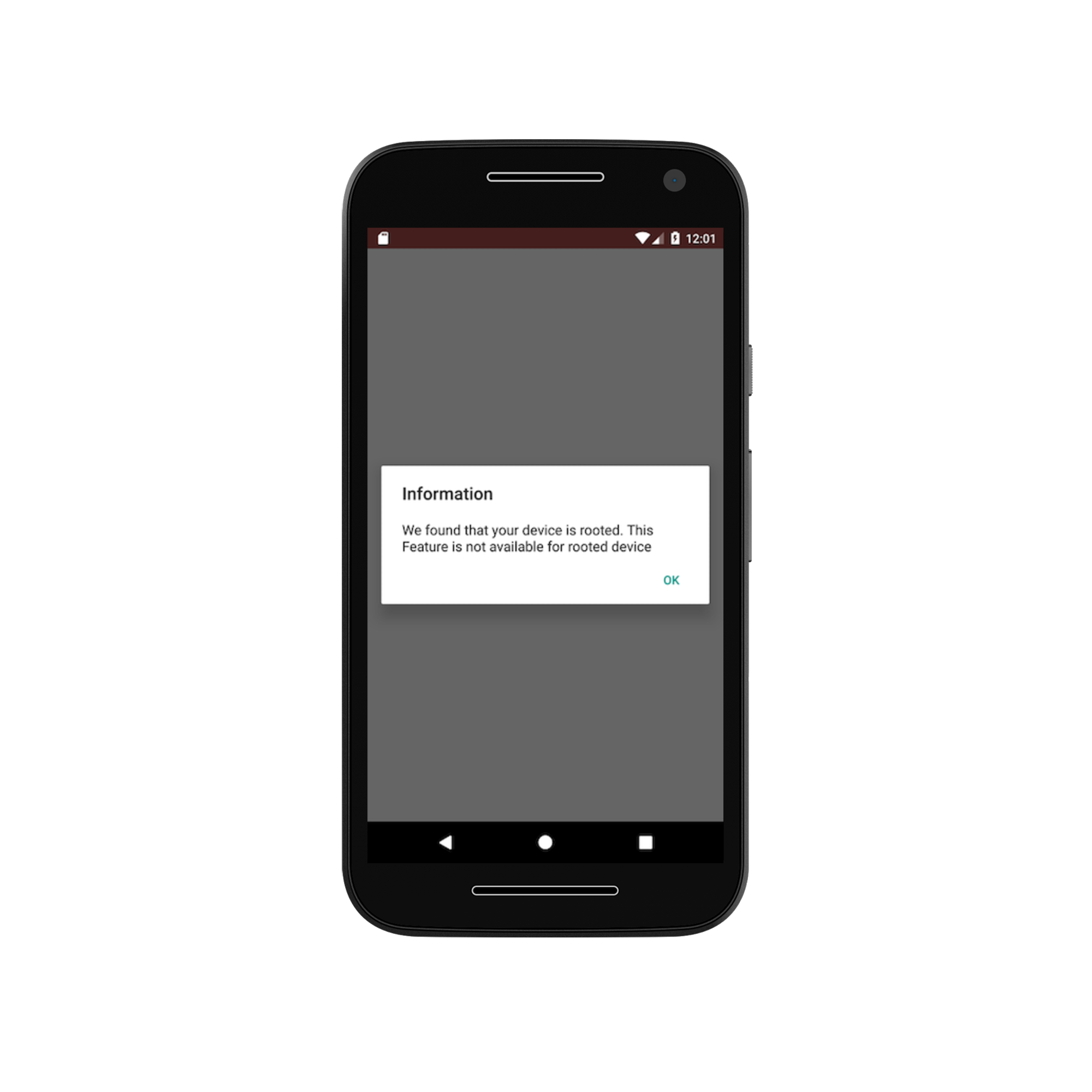

Rooted Device Check

You can prevent MFSDK from running on a rooted device. If this check is enabled, MFSDK will not work on a rooted device.

Use the enableRootedDeviceCheck method of MFSDKProperties.Builder to enable this feature.

Please check the following code snippet to enable the rooted device check.

MFSDKProperties sdkProperties = new MFSDKProperties

.Builder(BOT_END_POINT_URL)

.enableRootedDeviceCheck(true) // Enable rooted device check

...

.build();

Content Security (Screen Capture)

You can prevent the user or application from taking screenshots of the chat by using the disableScreenShot method of MFSDKProperties.Builder. By default, MFSDK doesn't prevent the user from taking screenshots.

Please check the following code snippet.

MFSDKProperties sdkProperties = new MFSDKProperties

.Builder(BOT_END_POINT_URL)

.disableScreenShot(true) // Prevent screen capture

...

.build();

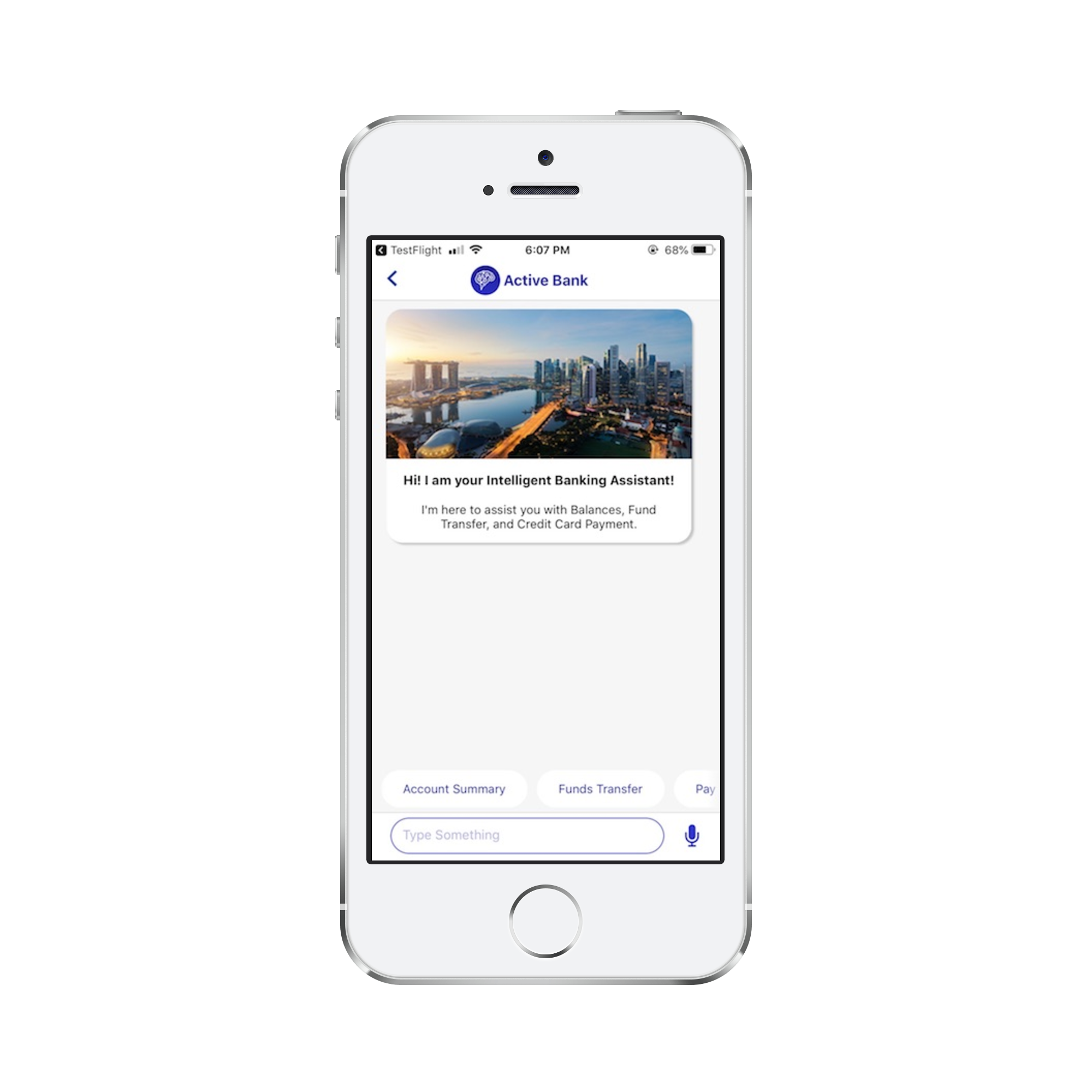

Morfeus iOS Hybrid SDK

Morfeus Hybrid SDK is a lightweight messaging SDK that can be embedded easily in websites and hybrid mobile apps with minimal integration effort. Once integrated, it allows end users to converse with the Conversational AI/bot on the Active AI platform using both text and voice. WebSDK has out-of-the-box support for multiple UX templates such as List and Carousel, and supports extensive customization of both UX and functionality.

Supported Components

Please refer to the Supported Components section of the WebSDK guide.

Device Support

All iPhones running iOS 8.0 or later.

Setup and how to build

Before starting the steps below, refer to the Setup and how to build section of the WebSDK guide above.

1. Prerequisites

- OS X (10.11.x)

- Xcode 8.3 or higher

- Deployment target - iOS 8.0 or higher

2. Install and configure dependencies

a. Install CocoaPods

CocoaPods is a dependency manager for Objective-C, which automates and simplifies the process of using 3rd-party libraries in your projects. CocoaPods is distributed as a ruby gem, and is installed by running the following commands in Terminal App:

$ sudo gem install cocoapods

$ pod setup

b. Update .netrc file

The Morfeus iOS SDK frameworks are stored in a secured Artifactory. CocoaPods handles linking these frameworks with the target application. When Artifactory requests authentication while MFSDKHybridKit is being installed, CocoaPods reads the credentials from the .netrc file in your home (~/) directory.

The .netrc file specifies the machine (Artifactory) name, the login, and the password, each on a separate line, with exactly one space after each of the keywords machine, login, and password.

machine <NETRC_MACHINE_NAME>

login <NETRC_LOGIN>

password <NETRC_PASSWORD>
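The layout rule above can be captured in a small formatter that also rejects values containing whitespace, since .netrc fields are whitespace-separated tokens. This is a hypothetical Java sketch for illustration only; keep real credentials out of source code and use the values shared by the development team.

```java
/** Hypothetical formatter (not part of MFSDK) for a .netrc entry in the layout described above. */
final class NetrcEntry {
    private NetrcEntry() {}

    /** Returns the three-line entry, with exactly one space after each keyword. */
    static String format(String machine, String login, String password) {
        requireToken("machine", machine);
        requireToken("login", login);
        requireToken("password", password);
        return "machine " + machine + "\n"
                + "login " + login + "\n"
                + "password " + password + "\n";
    }

    /** .netrc fields are whitespace-separated, so each value must be a single non-empty token. */
    private static void requireToken(String name, String value) {
        if (value == null || value.isEmpty() || value.chars().anyMatch(Character::isWhitespace)) {
            throw new IllegalArgumentException(name + " must be a non-empty value without whitespace");
        }
    }
}
```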

Please check with the development team for the actual machine name and credentials to use.

Steps to create or update .netrc file

1. Open Terminal on your Mac.

2. Type "cd ~/" to go to your home folder.

3. Type "touch .netrc" to create the file if a file named .netrc does not already exist.

4. Type "open -a TextEdit .netrc" to open the .netrc file in TextEdit.

5. Append the machine name and credentials shared by the development team in the format above, if they are not already present.

6. Save and exit TextEdit.

c. Install the pod

To integrate 'MFSDKHybridKit' into your Xcode project, add the following to your Podfile:

source 'https://github.com/CocoaPods/Specs.git'

# Voice support is available from iOS 8.0 and above

platform :ios, '8.0'

target 'TargetAppName' do

pod '<COCOAPOD_NAME>'

end

After adding the code above, run the install command in your project directory, where your Podfile is located.

$ pod install

If you get an error starting with "Unable to find a specification for", update your local spec repositories by running:

$ pod repo update

To update your pods to the latest version, run the following command.

$ pod update

Note: If you get a "401 Unauthorized" error, verify your .netrc file and its credentials.

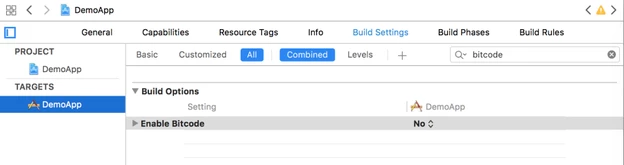

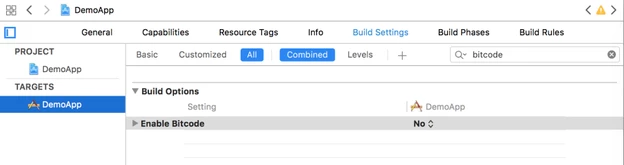

d. Disable bitcode

Select your target, open the "Build Settings" tab, and set "Enable Bitcode" to "No".

e. Give permission

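Locate the .plist file in the Supporting Files folder of your Xcode project and update NSAppTransportSecurity to describe your app's intended HTTP connection behavior. Refer to Apple's documentation and choose the configuration that best suits your app. The sample configuration below sets NSAllowsArbitraryLoads to true, which relaxes App Transport Security restrictions for all network loads, so prefer a narrower configuration where your app allows it.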

<key>NSAppTransportSecurity</key>

<dict>

<key>NSAllowsArbitraryLoads</key>

<true/>

</dict>

Integration to Application

You can integrate the chatbot on any screen of your application. Integration is divided into two scenarios:

- Public

- Post Login

1. Public

Invoke the SDK

To invoke the chat screen, create MFSDKProperties and MFSDKSessionProperties, and then call the showScreenWithWorkSpaceId:fromViewController:withSessionProperties: method to present the chat screen, as shown in the code sample below.

// Add this at the top of your ViewController.m file

#import "ViewController.h"

#import <MFSDKMessagingKit/MFSDKMessagingKit.h>

@interface ViewController ()<MFSDKMessagingDelegate>

@end

// Then add the implementation to the same .m file

@implementation ViewController

// Once the button is clicked, show the message screen

-(IBAction)startChat:(id)sender

{

MFSDKProperties *params = [[MFSDKProperties alloc] initWithDomain:@"<END_POINT_URL>"];

params.workSpaceId = @"<WORK_SPACE_ID>";

params.messagingDelegate = self;

params.enableScheduledBackgroundRefresh = YES;

// Optional: iOS 13 support

params.sdkStatusBarColor = <UIColor>;//color to be set for status-bar

params.botModalPresentationStyle = PresentationFullScreen;

[[MFSDKMessagingManager sharedInstance] initWithProperties:params];

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc]init];

sessionProperties.userInfo = @{@"KEY": @"VALUE"}; // key-value pairs for this session

[[MFSDKMessagingManager sharedInstance] showScreenWithWorkSpaceId:@"<WORK_SPACE_ID>" fromViewController:self withSessionProperties:sessionProperties];

}

@end

Properties:

| Property | Description |

|---|---|

| BOT_ID | The unique ID for the bot. |

| BOT_NAME | The bot name to display on top of chat screen. |

| END_POINT_URL | The bot API URL. |

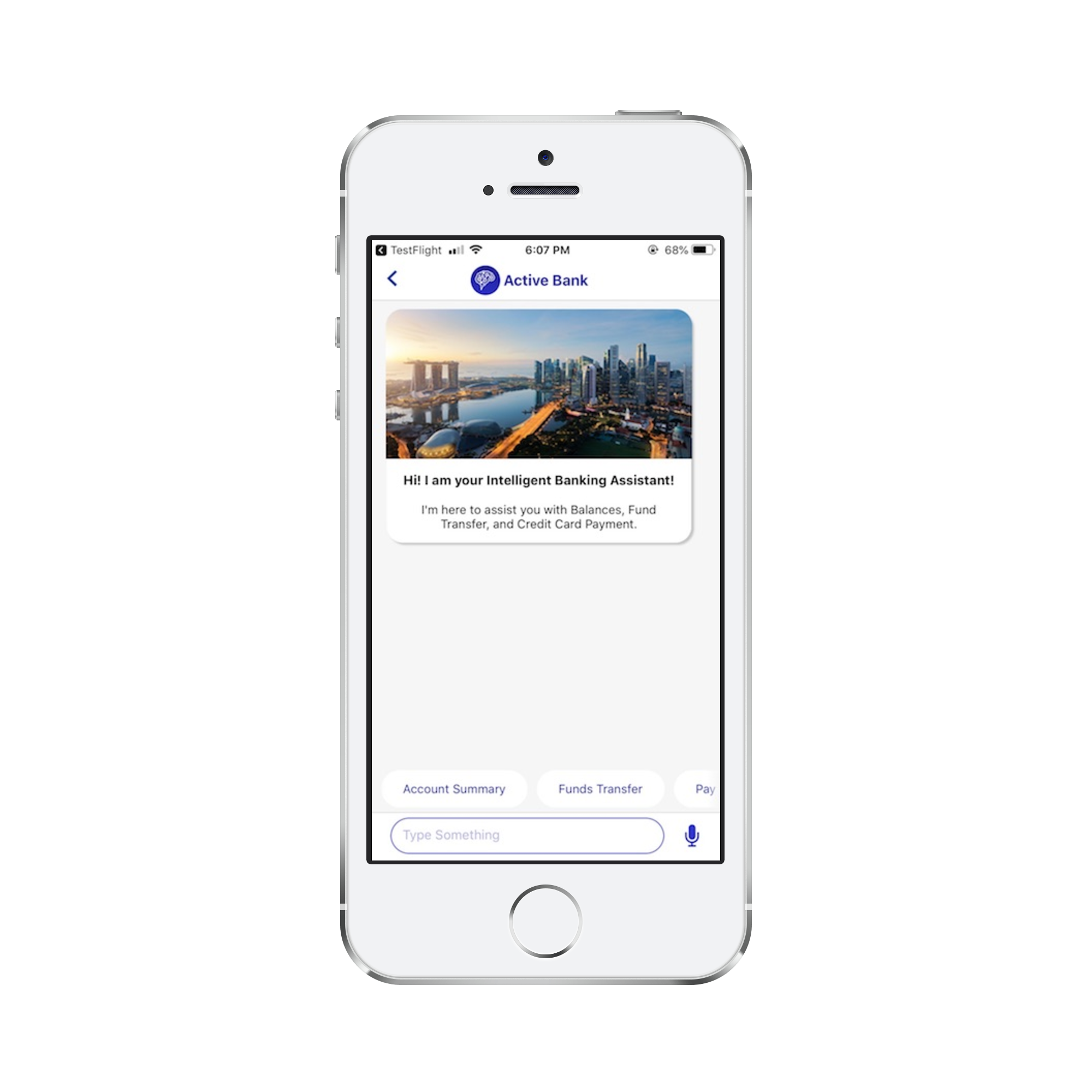

Compile and Run

Once the above code is added, build and run the app. When the chat screen launches, a welcome message is displayed.

2. Post Login

You can pass a set of key-value pairs to MFSDK using the userInfo (NSDictionary) property of MFSDKSessionProperties. In the following example, the customer ID and session ID are passed to MFSDK.

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc]init];

sessionProperties.userInfo = @{@"CUSTOMER_ID": @"<CUSTOMER_ID_VALUE>", @"SESSION_ID": @"<SESSION_ID_VALUE>"};

[[MFSDKMessagingManager sharedInstance] showScreenWithWorkSpaceId:@"<WORK_SPACE_ID>" fromViewController:self withSessionProperties:sessionProperties];

Note: If encryption of the keys is required, make sure customerID and sessionID are each no longer than 256 bytes.

Properties

Setting Chat Screen Header

The Morfeus iOS SDK can display the chat screen header as a native header. Enable showNativeNavigationBar in MFSDKProperties, then create an MFSDKHeader object, set the properties you need, and pass it to the setHeader method of MFSDKSessionProperties. The following example sets a background color, a title, and left and right buttons.

MFSDKProperties *params = [[MFSDKProperties alloc] initWithDomain:@"<END_POINT_URL>"];

[params addBot:@"<BOT_ID>" botName:@"<BOT_NAME>"];

params.messagingDelegate = self;

params.showNativeNavigationBar = YES;

[[MFSDKMessagingManager sharedInstance] initWithProperties:params];

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc]init];

MFSDKHeader *headerObject = [[MFSDKHeader alloc]init];

headerObject.isCustomNavigationBar = YES;

headerObject.titleText = @"ActiveAI Bot !";

headerObject.titleColor = @"#FFFFFF";

headerObject.titleFontName = @"Lato_Medium";

headerObject.titleFontSize = 16.00f;

headerObject.rightButtonAction = HOME_BUTTON;

headerObject.rightButtonImage = @"hdr_home";

headerObject.leftButtonAction = BACK_BUTTON;

headerObject.leftButtonImage = @"hdr_menu_icon";

headerObject.backgroundColor = @"#CD1C5F";

[sessionProperties setHeader:headerObject];

Handling Logout Event

To receive the logout event, implement MFSDKMessagingDelegate. MFSDK calls the onLogout: method when the user logs out of the current chat session. MFSDK supports the following logout types.

| Logout Type | Logout Code |

|---|---|

| Auto logout / Inactivity Timeout | 1001 |

| Forced logout | 1002 |

Please check the following code sample.

// Add this to the header of your file

#import "ViewController.h"

#import <MFSDKMessagingKit/MFSDKMessagingKit.h>

@interface ViewController ()<MFSDKMessagingDelegate>

@end

@implementation ViewController

-(IBAction)startChat:(id)sender

{

...

//Show chat screen

}

-(void)onLogout:(NSInteger)logoutType

{

NSLog(@"logoutType: %ld", (long)logoutType);

}

@end

Handling Close Event

To receive the close event, implement MFSDKMessagingDelegate. MFSDK calls the onChatClose method when the user taps the back button and the chat screen closes.

Please check the following code sample.

// Add this to the header of your file

#import "ViewController.h"

#import <MFSDKMessagingKit/MFSDKMessagingKit.h>

@interface ViewController ()<MFSDKMessagingDelegate>

@end

@implementation ViewController

-(IBAction)startChat:(id)sender

{

...

//Show chat screen

}

-(void)onChatClose

{

NSLog(@"Chat screen closed, perform necessary action");

}

@end

Deeplink Callback

You can deep link your application with MFSDK by implementing MFSDKMessagingDelegate. If the Active AI chatbot cannot answer a query, the framework calls the onSearchResponse: method of MFSDKMessagingDelegate and passes an MFSearchResponseModel. You can open the appropriate screen from this method based on the properties set in the model.

| Property | Description |

|---|---|

| MFSearchResponseModel | This model contains 3 properties keyValue, menuCode & payload which will be used to navigate to respective screen in application. |

// Add this to the header of your file

#import "ViewController.h"

#import <MFSDKMessagingKit/MFSDKMessagingKit.h>

@interface ViewController ()<MFSDKMessagingDelegate>

@end

@implementation ViewController

-(IBAction)startChat:(id)sender

{

MFSDKProperties *params = [[MFSDKProperties alloc] initWithDomain:@"<END_POINT_URL>"];

params.messagingDelegate = self;

[[MFSDKMessagingManager sharedInstance] initWithProperties:params];

...

//Show chat screen

}

-(void)onSearchResponse:(MFSearchResponseModel *)model

{

//handle code to display native screens

NSLog(@"onSearchResponse: %@",model.menuCode);

}

@end

Adding Voice Feature

MFSDKHybridKit supports text-to-speech and speech-to-text. Two speech recognition providers are supported, and you can use either of them:

- Google Speech Recognition

- iOS Native Speech Recognition

a. Google Speech recognition

The minimum iOS deployment target for the voice feature is iOS 8.0, and the Podfile must also be updated with this minimum deployment target. Pass the speech API key using the speechAPIKey property of MFSDKSessionProperties, as shown below.

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc]init];

sessionProperties.speechAPIKey = @"<YOUR_SPEECH_API_KEY>";

[[MFSDKMessagingManager sharedInstance] showScreenWithBotID:@"<BOT_ID>" fromViewController:self withSessionProperties:sessionProperties];

In the .plist file in the Supporting Files folder of your Xcode project, add the following keys with an appropriate description for each.

<key>NSSpeechRecognitionUsageDescription</key>

<string>SPECIFY_REASON_FOR_USING_SPEECH_RECOGNITION</string>

<key>NSMicrophoneUsageDescription</key>

<string>SPECIFY_REASON_FOR_USING_MICROPHONE</string>

b. Native Speech recognition

The minimum iOS version for native speech recognition is iOS 10.0. Set the speechProviderForVoice property of MFSDKSessionProperties to SpeechProviderNative.

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc]init];

sessionProperties.speechProviderForVoice = SpeechProviderNative;

[[MFSDKMessagingManager sharedInstance] showScreenWithWorkSpaceId:@"<WORK_SPACE_ID>" fromViewController:self withSessionProperties:sessionProperties];

In the .plist file in the Supporting Files folder of your Xcode project, add the following keys with an appropriate description for each.

<key>NSSpeechRecognitionUsageDescription</key>

<string>SPECIFY_REASON_FOR_USING_SPEECH_RECOGNITION</string>

<key>NSMicrophoneUsageDescription</key>

<string>SPECIFY_REASON_FOR_USING_MICROPHONE</string>

The user must grant both permissions for Apple's native speech recognition to work.

c. Set Speech-To-Text language

English (India) is the default Speech-To-Text language. You can change the STT language by passing a valid language code to the speechToTextLanguage property of MFSDKSessionProperties. You can find a list of supported language codes here.

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc]init];

sessionProperties.shouldSupportMultiLanguage = YES;

sessionProperties.speechToTextLanguage = @"en-IN";

d. Set Text-To-Speech language

English (India) is the default Text-To-Speech language. You can change the TTS language by passing a valid language code to the textToSpeechLanguage property of MFSDKSessionProperties. Set the language code according to Apple's guidelines.

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc]init];

sessionProperties.shouldSupportMultiLanguage = YES;

sessionProperties.textToSpeechLanguage = @"en-IN";

Enable Analytics

By default, analytics is disabled in the SDK. To enable analytics, set enableAnalytics to YES and pass the analytics provider and ID to MFSDKProperties, as shown in the following code snippet.

// Add this to the header of your file

#import "ViewController.h"

#import <MFSDKMessagingKit/MFSDKMessagingKit.h>

@interface ViewController ()<MFSDKMessagingDelegate>

@end

@implementation ViewController

-(IBAction)startChat:(id)sender

{

MFSDKProperties *params = [[MFSDKProperties alloc] initWithDomain:@"<END_POINT_URL>"];

params.enableAnalytics = YES;

params.analyticsProvider = @"YOUR_ANALYTICS_PROVIDER_CODE";

params.analyticsId = @"YOUR_ANALYTICS_ID";

...

[[MFSDKMessagingManager sharedInstance] initWithProperties:params];

...

//Show chat screen

}

@end

Close Chatbot

To close the chat screen with a smooth animation, call the closeScreenWithBotID: method.

[[MFSDKMessagingManager sharedInstance] closeScreenWithBotID:@"<WORK_SPACE_ID>"];

To close the chat screen without animation, call the closeScreenWithBotID:withAnimation: method with NO as the second argument.

[[MFSDKMessagingManager sharedInstance] closeScreenWithBotID:@"<WORK_SPACE_ID>" withAnimation:NO];

Customising WebSDK

Please refer to the corresponding section of the WebSDK guide.

Customizing WebViews

Please refer to the corresponding section of the WebSDK guide.

Deployments & Updates

Please refer to the corresponding section of the WebSDK guide.

Security

SSL Pinning

MFSDK has built-in support for SSL (Secure Sockets Layer) to enable encrypted communication between client and server. MFSDK doesn't trust SSL certificates stored in the device's trust store. Instead, it trusts a certificate only after checking its SSL hash key, using TrustKit's SSL pinning validation.

Please check the following code snippet to enable SSL pinning.

MFSDKProperties *params = [[MFSDKProperties alloc]initWithDomain:self.defaultBaseURL];

[params enableSSL:YES sslPins:@[SSL_HASH_KEY_1,SSL_HASH_KEY_2]];

Pass the SSL hash keys of the certificates to be used for SSL pinning validation.

Note: Make sure the SSL hash keys you provide are associated with the bot end-point URL.
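TrustKit pins are base64-encoded SHA-256 hashes of a certificate's Subject Public Key Info (SPKI). If you need to derive such a hash yourself, the sketch below computes one with the Java standard library (Java is used to match the other examples in this guide; this is not an MFSDK tool). It relies on PublicKey.getEncoded() returning the DER-encoded SPKI for X.509 keys. Treat the output as something to cross-check against the hash your server team provides, not as the authoritative value.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.PublicKey;
import java.security.cert.CertificateException;
import java.security.cert.CertificateFactory;
import java.security.cert.X509Certificate;
import java.util.Base64;

/** Computes a base64 SHA-256 hash of a public key's SubjectPublicKeyInfo (TrustKit pin format). */
final class SpkiPin {
    private SpkiPin() {}

    /** For X.509 public keys, getEncoded() returns the DER-encoded SubjectPublicKeyInfo. */
    static String of(PublicKey key) throws NoSuchAlgorithmException {
        byte[] digest = MessageDigest.getInstance("SHA-256").digest(key.getEncoded());
        return Base64.getEncoder().encodeToString(digest);
    }

    /** Prints the pin for a PEM or DER certificate file passed as the only argument. */
    public static void main(String[] args)
            throws IOException, CertificateException, NoSuchAlgorithmException {
        if (args.length != 1) {
            System.err.println("usage: java SpkiPin <certificate-file>");
            return;
        }
        try (InputStream in = new FileInputStream(args[0])) {
            X509Certificate cert = (X509Certificate)
                    CertificateFactory.getInstance("X.509").generateCertificate(in);
            System.out.println(of(cert.getPublicKey()));
        }
    }
}
```

For example, java SpkiPin server-cert.pem prints a 44-character base64 string; the X.509 CertificateFactory accepts both PEM and DER certificate files.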

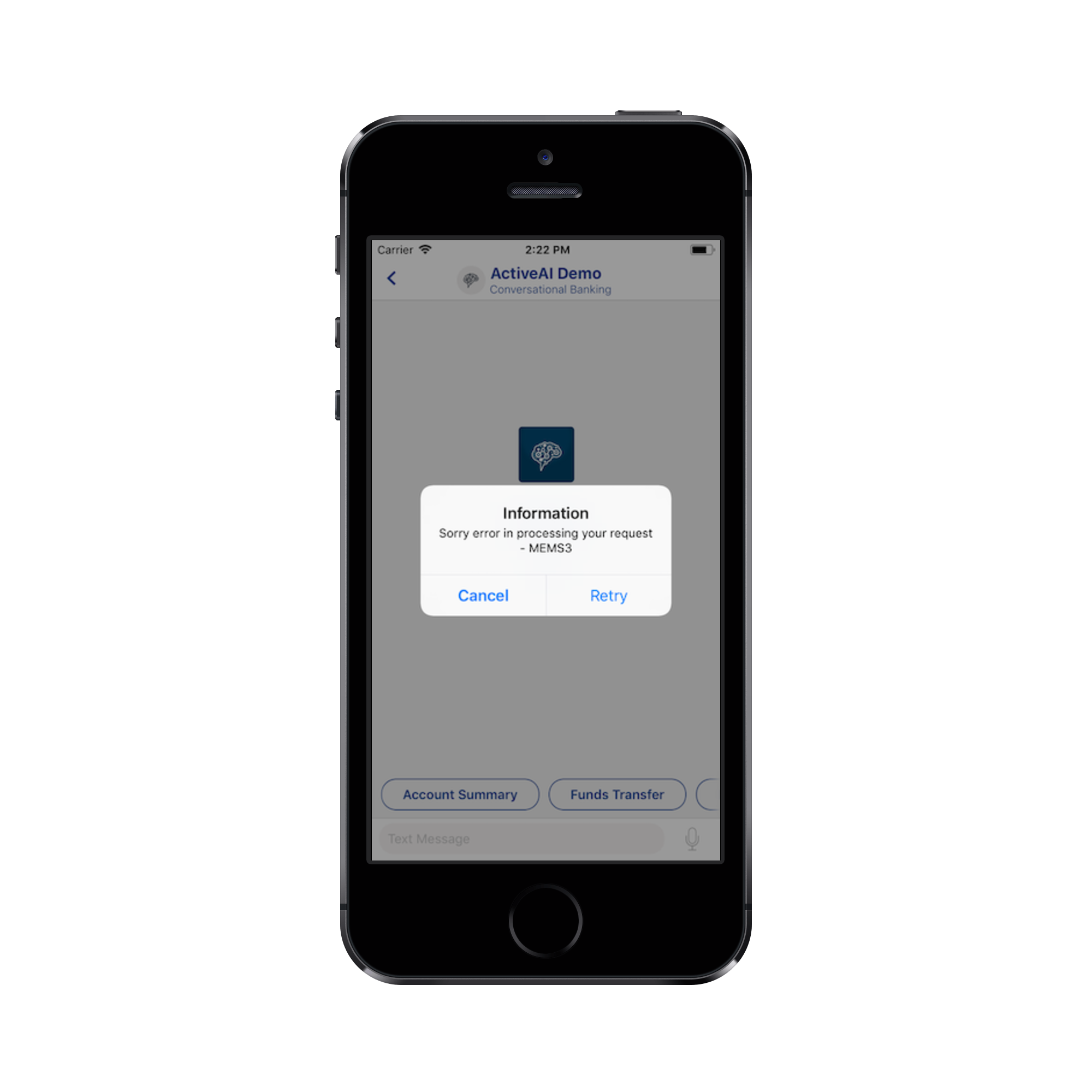

The snapshot shows the screen displayed when SSL is enabled but validation of the secure connection fails.

Rooted Device Check

MFSDK has built-in support for a rooted device check. If enabled, the application will not work on a rooted device.

Please check the following code snippet to enable the rooted device check.

MFSDKProperties *params = [[MFSDKProperties alloc]initWithDomain:self.defaultBaseURL];

[params enableCheck:YES/NO];

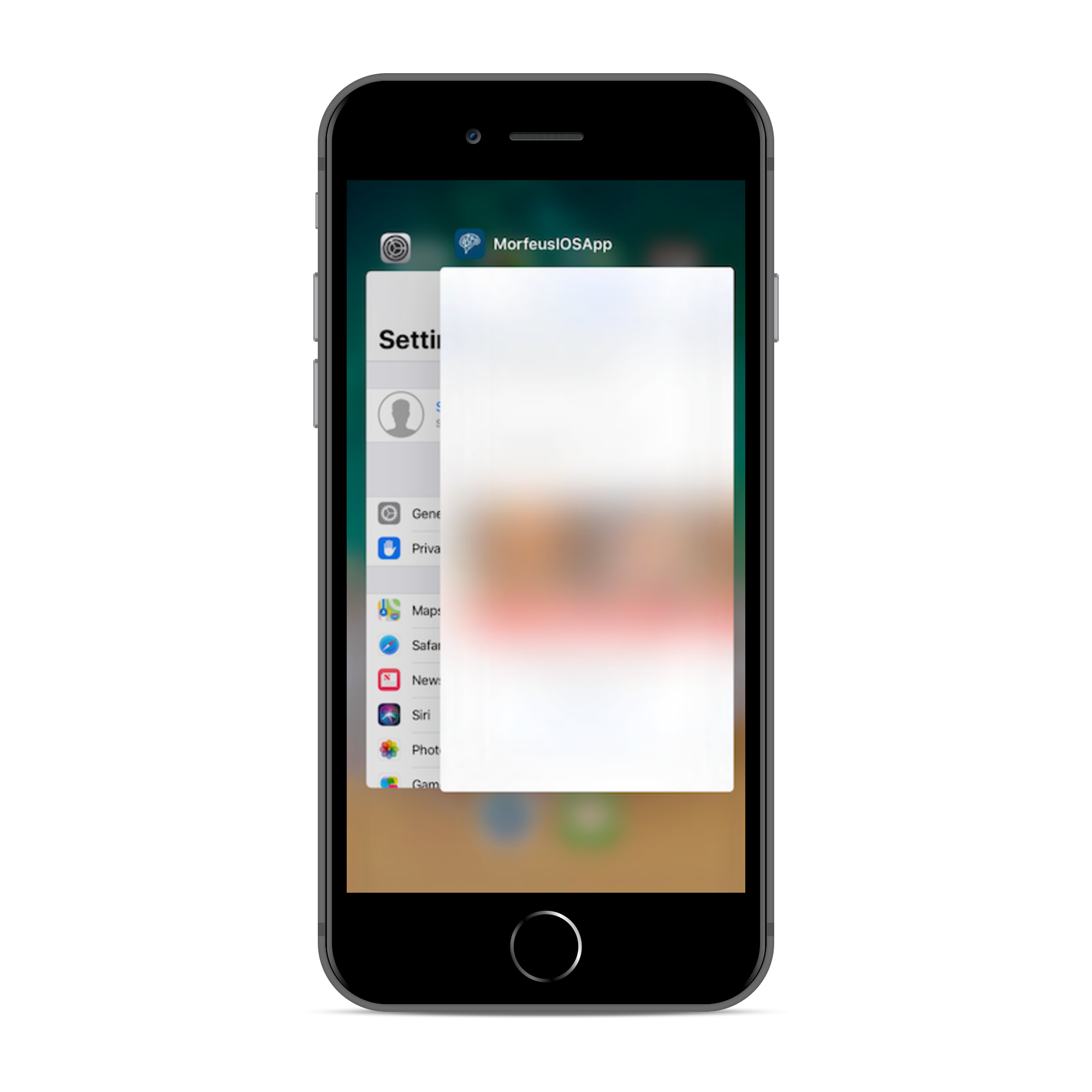

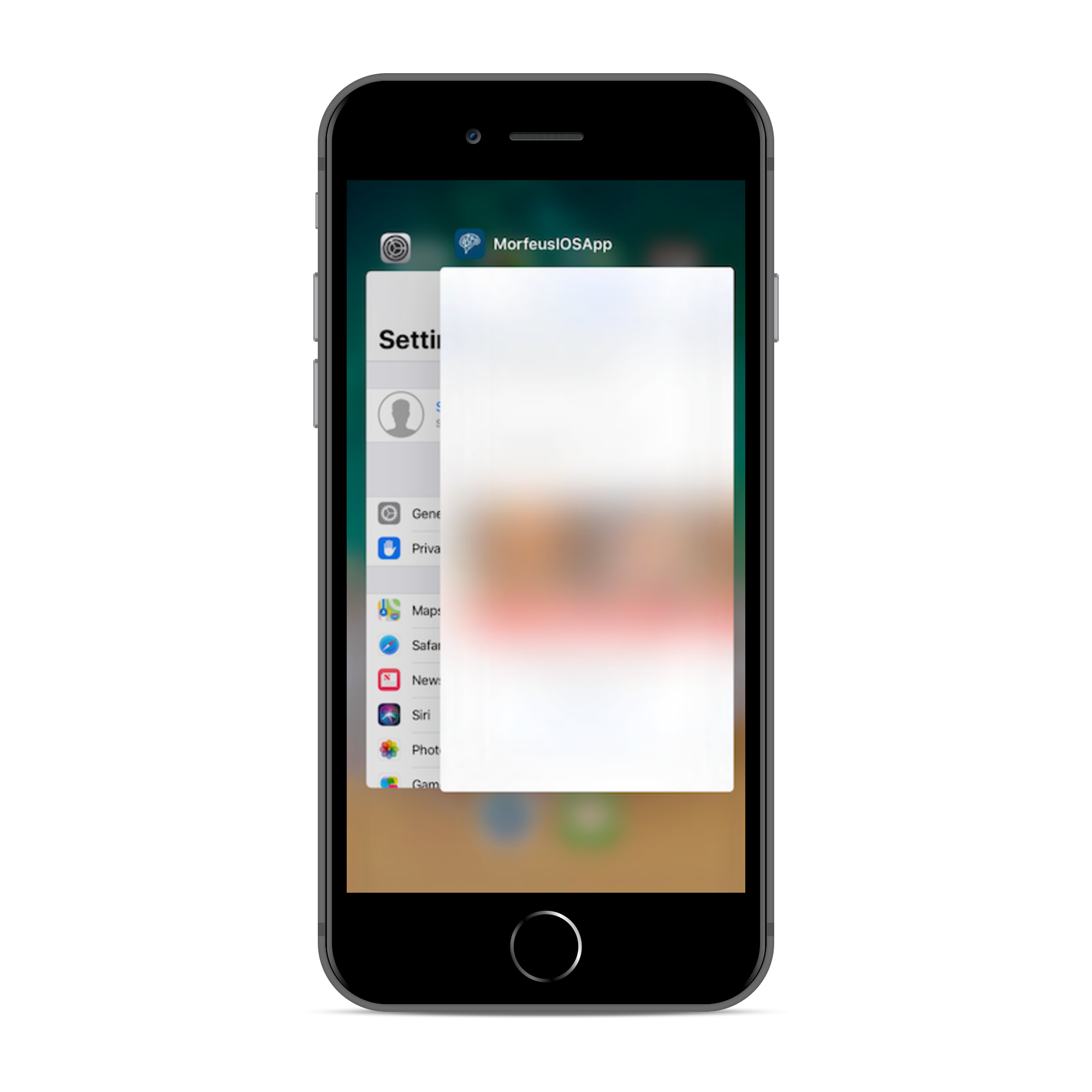

Content Security in Minimise State

MFSDK has built-in support for hiding content in the minimized state. If enabled, the user will not be able to see the chat content while the app is minimized; the content is blurred, as shown in the snapshot below.

Please check the following code snippet to enable content security in the minimized state.

MFSDKProperties *params = [[MFSDKProperties alloc]initWithDomain:self.defaultBaseURL];

..

params.hideContentInBackground = YES;

..

[[MFSDKMessagingManager sharedInstance] initWithProperties:params];

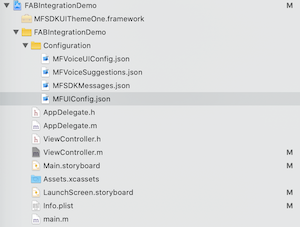

Morfeus iOS Native SDK

Key features

Morfeus Native SDK is a lightweight messaging SDK that can be embedded easily in native mobile apps with minimal integration effort. Once integrated, it allows end users to converse with the Conversational AI/bot on the Active AI platform using both text and voice. The SDK has out-of-the-box support for multiple UX templates such as List and Carousel, and supports extensive customization of both UX and functionality. The SDK also has built-in support for banking-grade security features.

Supported Components

The current SDK supports the following built-in message templates:

- Splash

- Welcome

- Cards

- Carousel

- Suggestion

- Quick Replies

- List

- Webview

Device Support

All iPhones running iOS 8.0 or later.

Setup and how to build

1. Prerequisites

- OS X (10.11.x)

- Xcode 8.3 or higher

- Deployment target - iOS 8.0 or higher

2. Install and configure dependencies

a. Install CocoaPods

CocoaPods is a dependency manager for Objective-C that automates and simplifies the process of using third-party libraries in your projects. CocoaPods is distributed as a Ruby gem and is installed by running the following commands in the Terminal app:

$ sudo gem install cocoapods

$ pod setup

b. Update .netrc file

The Morfeus iOS SDK frameworks are stored in a secured Artifactory repository. CocoaPods handles the process of linking these frameworks with the target application. When Artifactory requests authentication while MFSDKWebKit is being installed, CocoaPods reads the credentials from the .netrc file in your home directory (~/).

In the .netrc file, specify the machine (Artifactory) name, followed by the login, followed by the password, each on a separate line. There must be exactly one space after each of the keywords machine, login and password:

machine <NETRC_MACHINE_NAME>

login <NETRC_LOGIN>

password <NETRC_PASSWORD>

Steps to create or update the .netrc file

1. Start Terminal on your Mac.

2. Type "cd ~/" to go to your home folder.

3. Type "touch .netrc"; this creates a new, empty .netrc file if one does not already exist.

4. Type "open -a TextEdit .netrc" to open the .netrc file in TextEdit.

5. Append the machine name and credentials shared by the development team in the format above, if they are not already present.

6. Save the file and exit TextEdit.

c. Install the pod

To integrate 'MorfeusMessagingKit' into your Xcode project, add the following to your Podfile:

source 'https://github.com/CocoaPods/Specs.git'

platform :ios, '8.0'

target 'TargetAppName' do

pod '<COCOAPOD_NAME>'

end

After adding the code above, run the install command in your project directory, where your Podfile is located.

$ pod install

If you get an error like "Unable to find a specification for …", update your local spec repositories by running:

$ pod repo update

To update your pods to the latest versions, run the following command:

$ pod update

Note: If you get a "401 Unauthorized" error, verify your .netrc file and the associated credentials.

d. Disable bitcode

Select your target, open the "Build Settings" tab and set "Enable Bitcode" to "No".

e. Give Permissions

Open the Info.plist file in the Supporting Files folder of your Xcode project and add the privacy permissions your app needs. Refer to the Apple documentation and choose the configuration that best suits your app. A sample configuration is shown below.

<key>NSCameraUsageDescription</key>

<string>Specify the reason for your app to access the device’s camera</string>

<key>NSContactsUsageDescription</key>

<string>Specify the reason for your app to access the user’s contacts</string>

<key>NSLocationWhenInUseUsageDescription</key>

<string>Specify the reason for your app to access the user’s location information</string>

<key>NSFaceIDUsageDescription</key>

<string>Specify the reason for your app to use Face ID</string>

<key>NSMicrophoneUsageDescription</key>

<string>Specify the reason for your app to access any of the device’s microphones</string>

<key>NSSpeechRecognitionUsageDescription</key>

<string>Specify the reason for your app to send user data to Apple’s speech recognition servers</string>

<key>NSPhotoLibraryUsageDescription</key>

<string>Specify the reason for your app to access the user’s photo library</string>
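A missing or empty usage description is easy to overlook until the related permission is first requested, at which point iOS may terminate the app or refuse the request, depending on the resource. The sketch below is a hypothetical debug-time check: MFMissingUsageDescriptions is an illustrative helper, not part of MFSDK, that reports which of the keys you expect are missing or empty in an Info.plist dictionary. Which keys your app actually needs depends on the features you enable.

```objc
#import <Foundation/Foundation.h>

// Hypothetical debug helper (not part of MFSDK): returns the privacy keys from
// requiredKeys that are missing or empty in the given Info.plist dictionary.
static NSArray<NSString *> *MFMissingUsageDescriptions(NSDictionary<NSString *, id> *infoDictionary,
                                                        NSArray<NSString *> *requiredKeys) {
    NSMutableArray<NSString *> *missing = [NSMutableArray array];
    for (NSString *key in requiredKeys) {
        id value = infoDictionary[key];
        // A usage description must be a non-empty string to be shown in the permission prompt.
        if (![value isKindOfClass:[NSString class]] || [(NSString *)value length] == 0) {
            [missing addObject:key];
        }
    }
    return [missing copy];
}
```

In a debug build you could call it from application:didFinishLaunchingWithOptions: with [[NSBundle mainBundle] infoDictionary] and, for example, the microphone and speech recognition keys if you use the voice feature, then log any keys it returns.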

Integration to Application

You can integrate the chatbot on any screen of your application. Integration is broadly divided into two sections:

- Public

- Post Login

1. Public

Initialize the SDK

To open the chat screen, create MFSDKProperties and MFSDKSessionProperties objects, then call showScreenWithBotID:fromViewController:withSessionProperties: to present the chat screen, as in the code sample below.

ViewController.m

// Add these imports at the top of ViewController.m

#import "ViewController.h"

#import <MFSDKMessagingKit/MFSDKMessagingKit.h>

// Class extension: adopt MFSDKMessagingDelegate so that self can be assigned to params.messagingDelegate

@interface ViewController () <MFSDKMessagingDelegate>

@end

@implementation ViewController

// Once the button is clicked, show the message screen

-(IBAction)startChat:(id)sender

{

// Configure the SDK with the bot endpoint and register the bot

MFSDKProperties *params = [[MFSDKProperties alloc] initWithDomain:@"<END_POINT_URL>"];

[params addBot:@"<BOT_ID>" botName:@"<BOT_NAME>"];

params.messagingDelegate = self;

[[MFSDKMessagingManager sharedInstance] initWithProperties:params];

// Session-specific settings, such as the conversation language

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc] init];

sessionProperties.language = @"en";

[[MFSDKMessagingManager sharedInstance] showScreenWithBotID:@"<BOT_ID>" fromViewController:self withSessionProperties:sessionProperties];

}

@end

Properties:

| Property | Description |

|---|---|

| BOT_ID | The unique ID for the bot. |

| BOT_NAME | The bot name to display on top of chat screen. |

| END_POINT_URL | The bot API URL. |

3. Compile and Run

Once the above code is added, build and run the app. When the chat screen launches, the welcome message is displayed.

4. Debugging

While the application is running, you can check the SDK logs in the Console; they are identified by <MFSDKLog>.

2. Post Login

Processing any query specific to a user's account requires authentication. If the authentication details are already available in your app, they can be passed to Morfeus SDK as key-value pairs. Morfeus SDK sends these key-value pairs to the server in the JSON request, and if the server validates them, Morfeus SDK maintains the authenticated session.

Providing User/Session Information

You can pass a set of key-value pairs to MFSDK using the userInfo (NSDictionary) property of MFSDKSessionProperties. The following example passes a Customer ID and a Session ID to MFSDK.

// Add these imports at the top of ViewController.m

#import "ViewController.h"

#import <MFSDKMessagingKit/MFSDKMessagingKit.h>

// Add this to the implementation

@implementation ViewController

// Once the button is clicked, show the message screen

// (assumes the SDK has already been initialised with MFSDKProperties, as shown in the Public section)

-(void)startChat

{

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc] init];

// Key-value pairs sent to the server in the JSON request for authentication

sessionProperties.userInfo = @{@"CUSTOMER_ID": @"<CUSTOMER_ID_VALUE>", @"SESSION_ID": @"<SESSION_ID_VALUE>"};

[[MFSDKMessagingManager sharedInstance] showScreenWithBotID:@"<BOT_ID>" fromViewController:self withSessionProperties:sessionProperties];

}

@end

Properties:

| Property | Description |

|---|---|

| sessionProperties.userInfo | A dictionary containing the user information for the post-login flow. |

| SESSION_ID | The session ID supplied by the host application. |

| CUSTOMER_ID | The customer ID supplied by the host application. |
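An Objective-C dictionary literal raises an exception at runtime if any of its values is nil, so building userInfo directly from values that may not be available yet (for example, before login completes) can crash the app. A minimal sketch, assuming a hypothetical helper named MFMakeUserInfo that is not part of MFSDK, which only adds the keys whose values are present:

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of MFSDK): builds the post-login userInfo
// dictionary, adding CUSTOMER_ID and SESSION_ID only when they are non-empty.
// A literal such as @{@"SESSION_ID": sessionID} would throw if sessionID were nil.
static NSDictionary<NSString *, NSString *> *MFMakeUserInfo(NSString *customerID, NSString *sessionID) {
    NSMutableDictionary<NSString *, NSString *> *userInfo = [NSMutableDictionary dictionary];
    if (customerID.length > 0) {   // messaging nil returns 0, so nil values are skipped as well
        userInfo[@"CUSTOMER_ID"] = customerID;
    }
    if (sessionID.length > 0) {
        userInfo[@"SESSION_ID"] = sessionID;
    }
    return [userInfo copy];        // immutable copy to assign to sessionProperties.userInfo
}
```

You would then write sessionProperties.userInfo = MFMakeUserInfo(customerID, sessionID); with the IDs taken from your own login flow. Whether a dictionary with a missing key is acceptable depends on how your server validates the post-login details; you may prefer to fall back to the public flow instead.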

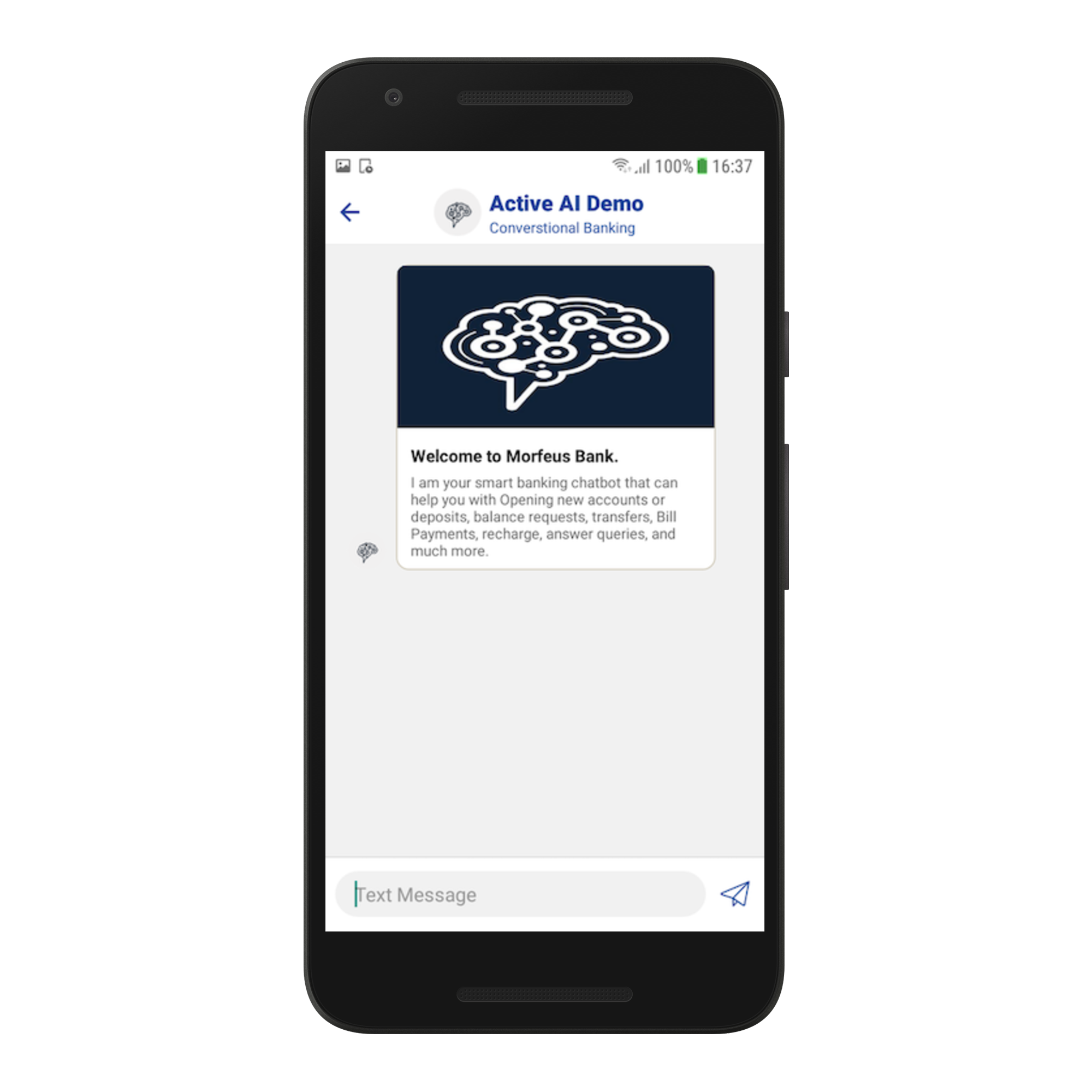

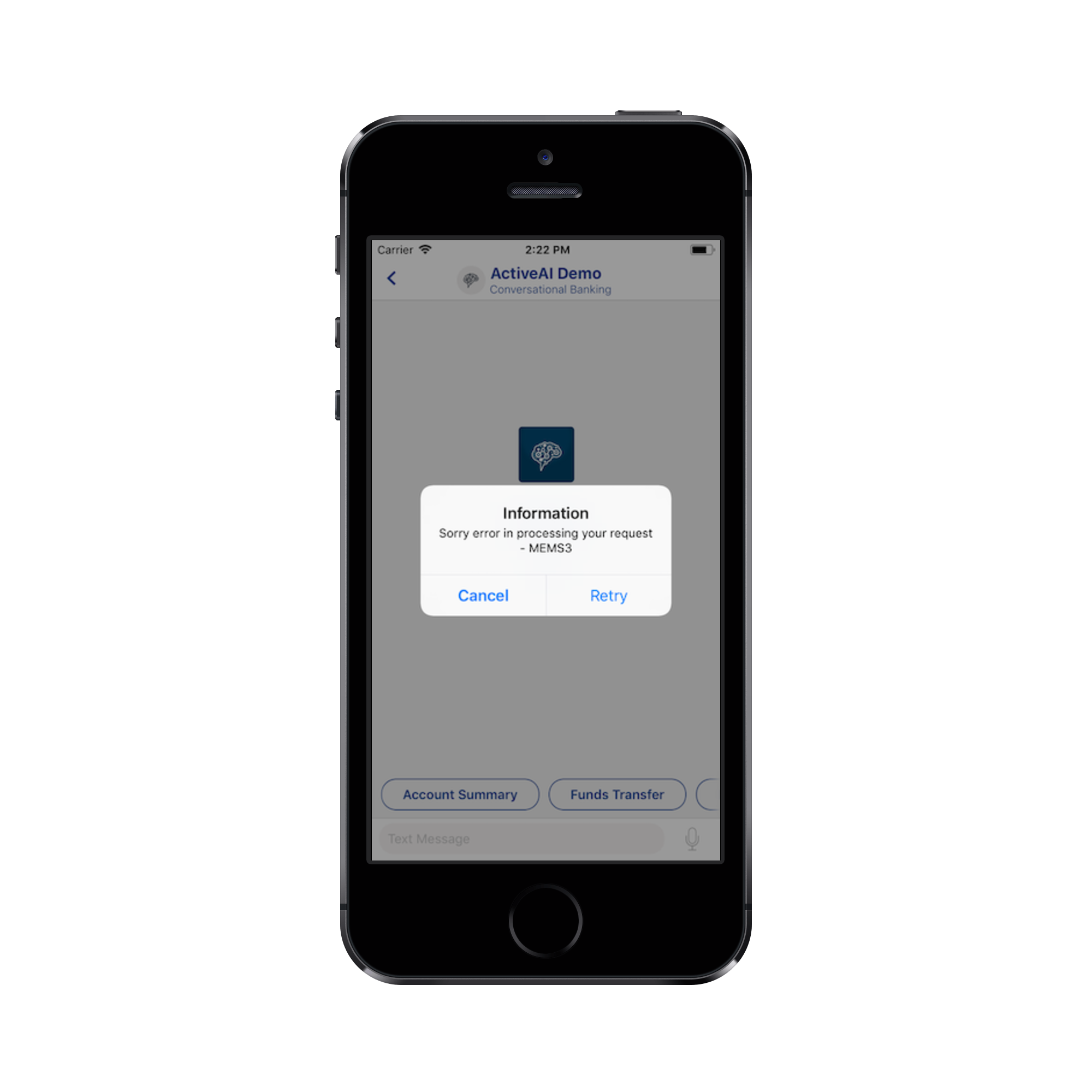

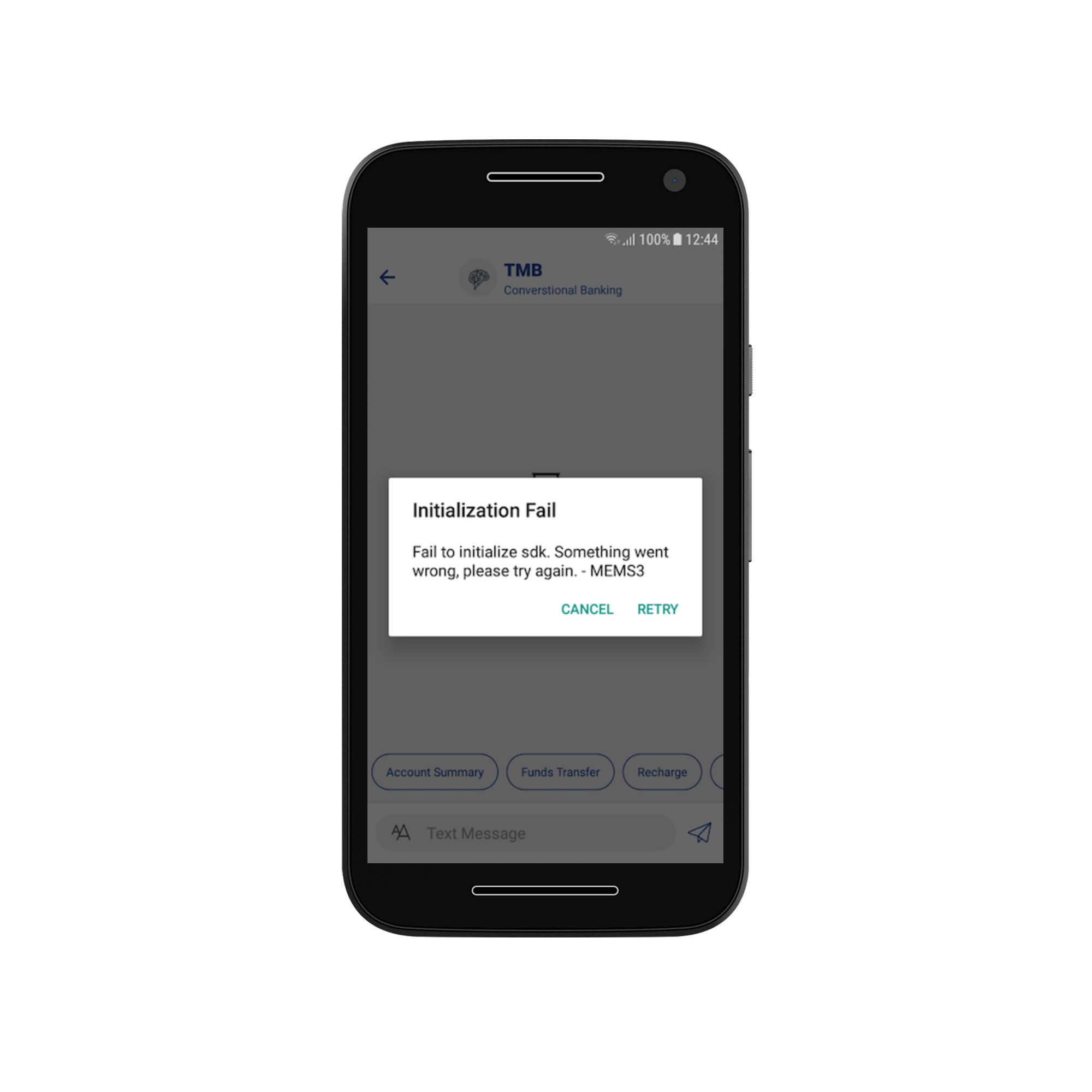

Error Codes

The basic error codes and their descriptions are listed below. Error messages can be customised in the MFLanguages.json file.

| Error Code | Description |

|---|---|

| MEMS1 | HTTP client error (status 400 to 449) |

| MEMS2 | HTTP internal server error (status 500) |

| MEMS3 | SSL handshake exception |

| MEMS4 | No internet connection |

| MEMS50 | Invalid server response (response could not be parsed) |

| MEMS51 | Invalid server response (empty message) |

| MEMD1 | Failed to upload file |

| MEMD2 | Failed to download file |

| MEMV1 | Google speech API error |
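When reading <MFSDKLog> output or a support report that only contains an error code, a small lookup of the table above can help. This is a hypothetical debugging helper (MFDescriptionForErrorCode is not an MFSDK API); it returns the default descriptions from the table, not any customised messages from MFLanguages.json.

```objc
#import <Foundation/Foundation.h>

// Hypothetical debugging helper (not part of MFSDK): returns the default
// description for an MFSDK error code, as listed in the error code table.
static NSString *MFDescriptionForErrorCode(NSString *code) {
    NSDictionary<NSString *, NSString *> *descriptions = @{
        @"MEMS1":  @"HTTP client error (status 400 to 449)",
        @"MEMS2":  @"HTTP internal server error (status 500)",
        @"MEMS3":  @"SSL handshake exception",
        @"MEMS4":  @"No internet connection",
        @"MEMS50": @"Invalid server response (response could not be parsed)",
        @"MEMS51": @"Invalid server response (empty message)",
        @"MEMD1":  @"Failed to upload file",
        @"MEMD2":  @"Failed to download file",
        @"MEMV1":  @"Google speech API error"
    };
    // Guard against a nil code before the dictionary lookup.
    NSString *description = code ? descriptions[code] : nil;
    return description ?: @"Unknown error code";
}
```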

Properties

Adding Voice Feature

MorfeusMessagingKit has a UI that accepts voice input and displays responses from the server; it is designed to make the interaction voice-friendly. To display the voice screen, provide a Google Speech API key and set screenToDisplay to MFScreenVoice, as shown below.

// params is the MFSDKProperties instance used to initialise the SDK

params.googleVoiceKey = @"<SPEECH_API_KEY>";

MFSDKSessionProperties *sessionProperties = [[MFSDKSessionProperties alloc] init];

sessionProperties.screenToDisplay = MFScreenVoice; // displays the voice screen

[[MFSDKMessagingManager sharedInstance] showScreenWithBotID:@"<BOT_ID>" fromViewController:<VIEW_CONTROLLER> withSessionProperties:sessionProperties];

The following options are available for screenToDisplay:

| Property | Description |

|---|---|

| MFScreenMessageVoice | Displays both the chat and voice screens; the user can swipe left/right to switch between them. |

| MFScreenVoice | Displays only the voice screen. |

| MFScreenMessage | Displays only the chat screen. |

Handling Push Notification

Morfeus SDK supports push notifications and displays them to the user.

- When a Morfeus screen is on top, Morfeus SDK moves to the appropriate Morfeus screen; e.g., if it is a new message, it refreshes the chat screen.

- When a Morfeus screen is not on top, Morfeus SDK displays a tappable HUD at the top of the screen. If the HUD is tapped, the appropriate Morfeus screen is opened.

To handle Morfeus push notifications, the app delegate should call the methods defined in MFSDKApplicationDelegate. These callbacks give Morfeus SDK control to handle APNs notifications related to Morfeus; notifications not related to Morfeus are silently ignored.

A sample APNs implementation is described below.

1. Handling Apple Push Call Back

AppDelegate.m

- (void)application:(UIApplication *)app didRegisterForRemoteNotificationsWithDeviceToken:(NSData *)deviceToken

{

[[MFSDKApplicationDelegate sharedAppDelegate] application:app didRegisterForRemoteNotificationsWithDeviceToken:deviceToken];

}

-(void)application:(UIApplication *)application didFailToRegisterForRemoteNotificationsWithError:(NSError *)error

{

[[MFSDKApplicationDelegate sharedAppDelegate] application:application didFailToRegisterForRemoteNotificationsWithError:error];

}

- (void)userNotificationCenter:(UNUserNotificationCenter *)center willPresentNotification:(UNNotification *)notification withCompletionHandler:(void (^)(UNNotificationPresentationOptions options))completionHandler API_AVAILABLE(ios(10.0))

{

[[MFSDKApplicationDelegate sharedAppDelegate] userNotificationCenter:center willPresentNotification:notification withCompletionHandler:completionHandler];

}

-(void)userNotificationCenter:(UNUserNotificationCenter *)center didReceiveNotificationResponse:(UNNotificationResponse *)response withCompletionHandler:(void (^)(void))completionHandler API_AVAILABLE(ios(10.0))

{

[[MFSDKApplicationDelegate sharedAppDelegate] userNotificationCenter:center didReceiveNotificationResponse:response withCompletionHandler:completionHandler];

}

- (void)application:(UIApplication *)application didReceiveRemoteNotification:(NSDictionary *)userInfo

{

[[MFSDKApplicationDelegate sharedAppDelegate] application:application didReceiveRemoteNotification:userInfo];

}

- (void)application:(UIApplication *)application didReceiveRemoteNotification:(NSDictionary *)userInfo fetchCompletionHandler:(void (^)(UIBackgroundFetchResult))completionHandler

{