Manage a Workspace

Workspaces are logical containers which host your Conversational AI bot/VA . Morfeus supports multi tenancy and you can create multipe bots with a logical separation with diiferent access controls for all bot related configuration including data , workflows and fulfillments , settings and customer data .

A Workspace can be thought of as a place where the user journey for different scenarios is described. It includes the training data for the AI engine and workflow to execute the Actions/Fulfillments.

Workspace Types

We are supporting two types of workspaces.

- CognitiveQnA(FAQs) Workspaces

In this type of workspace, you can configure only FAQs and Small talk, this is apt for information only Conversational AI bots. This workspace will not support transaction flows via intents. - Conversational Workspaces

This type of workspace, supports the full Conversational AI capabilities including both QnA(FAQs) and intents

Either you can configure your bot to give a response only for the user's query by selecting FAQ Workspace or you can configure your bot to perform transactions also by selecting Conversational Workspace.

If you don't select any workspace type then by default it will be FAQs only workspace.

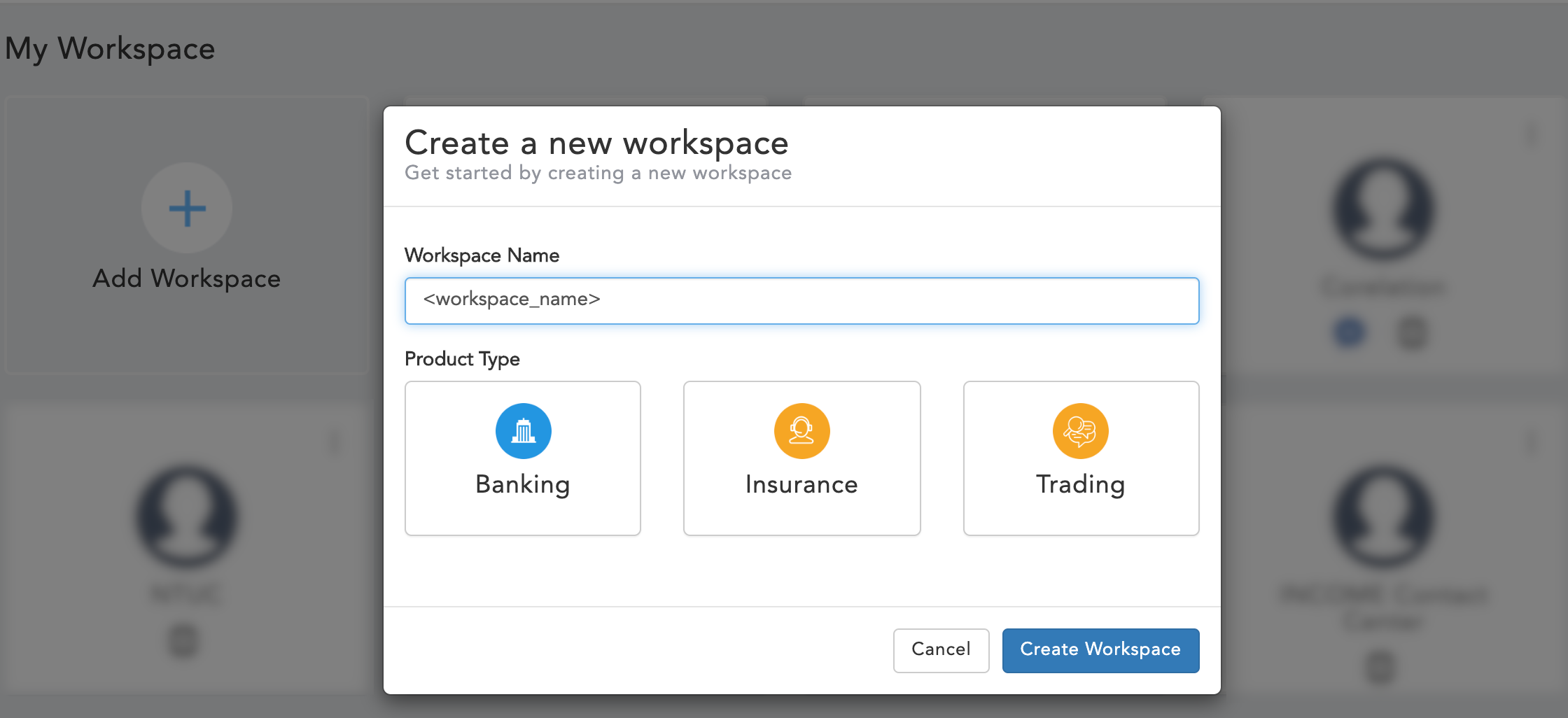

Create a CognitiveQnA (FAQs) Workspace

In this type of workspace, you can configure only FAQs and Small talk, this is apt for information only Conversational AI bots. This workspace will not support transaction flows via intents.

You can create a CognitiveQnA(FAQs) Workspace by following below steps:

- Click on "Add workspace".

- Mention the name of the workspace in the prompted window.

- Do not select any domain.

- Click on create a workspace.

FAQ Workspaces requires SmallTalk, FAQs, Spell Checker and Rule Validator out of all Conversational AI modules.

Create a Conversational Workspace

Converstaional Workspaces facilitate bots to provide on-par human chatting experience for the user and performs transactions along with responding to user's queries. This type of workspace is very useful to create a conversational AI experience.

You can create a Conversational Workspace by following below steps:

- Click on "Add workspace".

- Mention the name of the workspace in the prompted window.

- Select "Banking (Retail Banking, Corporate Banking or Retail lending) / Insurance / Trading / Custom" domain.

- Click on create a workspace.

Workspace creation will load base data and default data to the workspace as following :

Git sync will done while workspace creation will load faqs,intents,entities,spellcheck,smalltalk as part of base data

- Git url : To get Base Data, click here

- Git workspace : english_base

Base data Git sync default configuration exits in config.properties file

Path of file admin-backend-api/src/main/resources/properties/config.properties

Base data contains the minmal data to start the generate and train

Default data is also loaded to workspace

Default data is loaded to provide domain specific("Banking (Retail Banking, Corporate Banking or Retail lending) / Insurance / Trading / Custom") data

As part of default data templates,messages,hooks,workspace config,bot rules and ai rules will be loaded which are configurable

For Conversational Workspaces, all the AI data modules will be in use as mentioned under Conversational AI Modules.

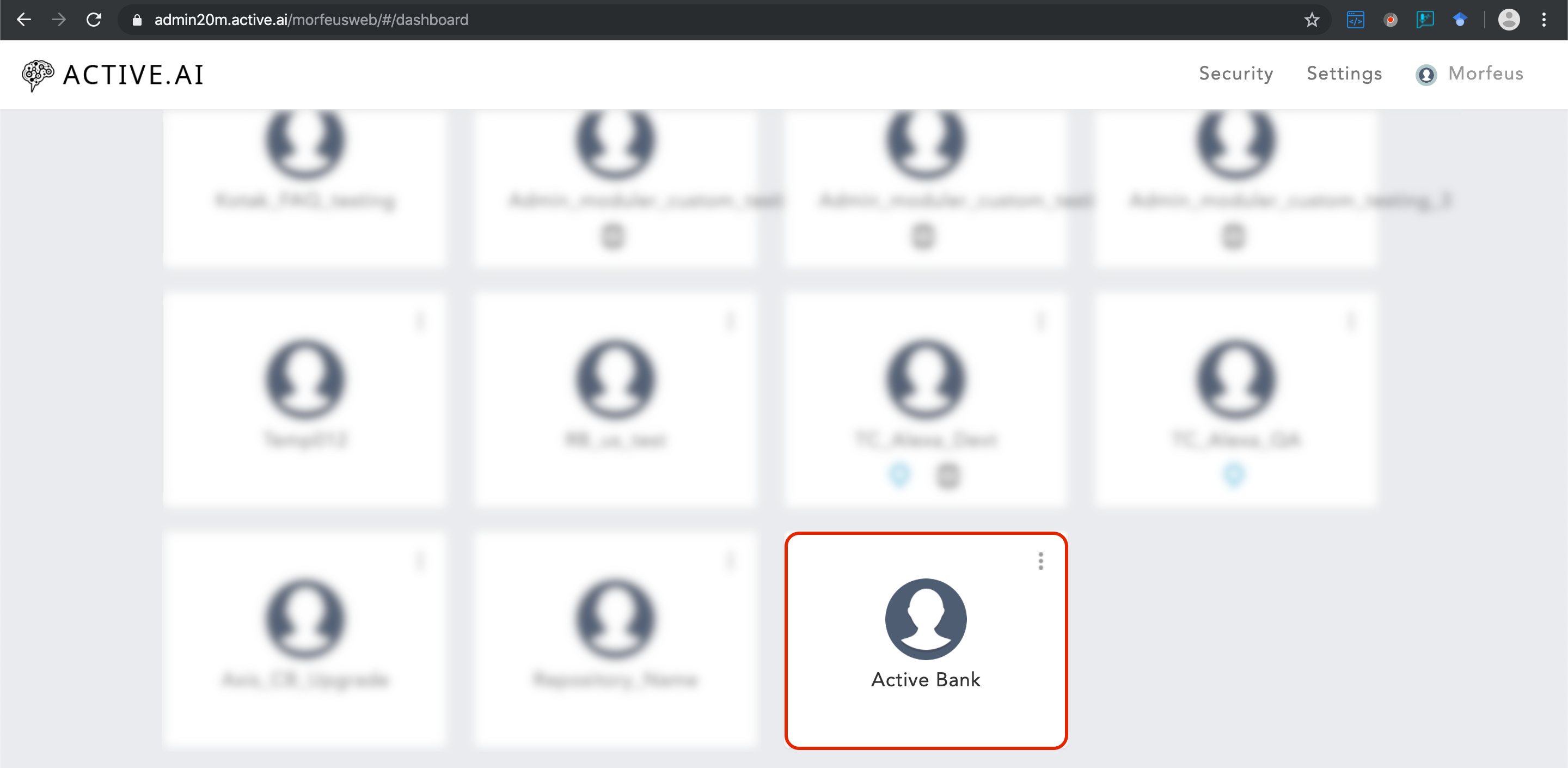

Select a Workspace

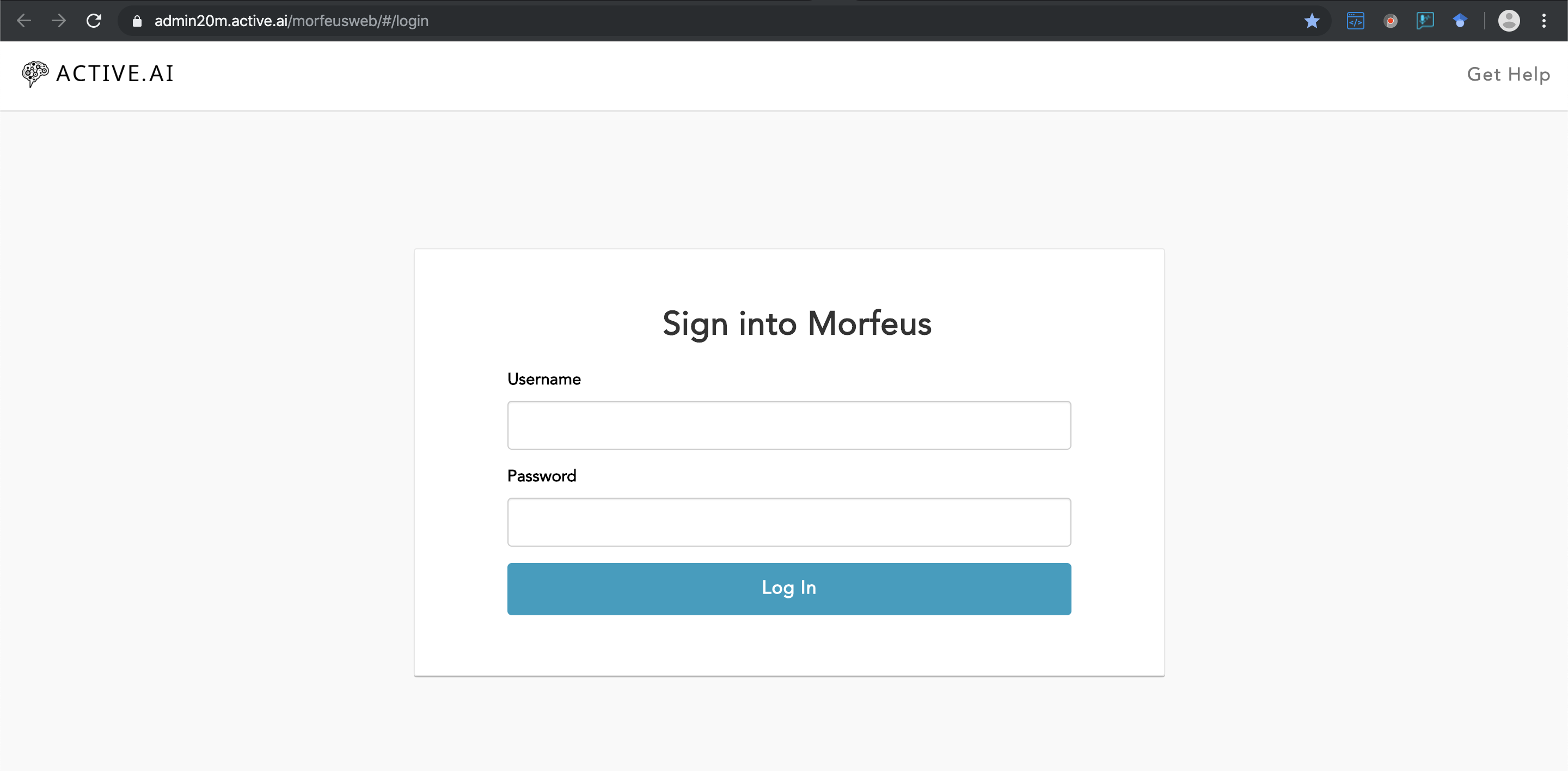

- Login to the Admin portal to access/create a workspace

- Click on desired Workspace

- Login to Admin Portal

- Select your workspace

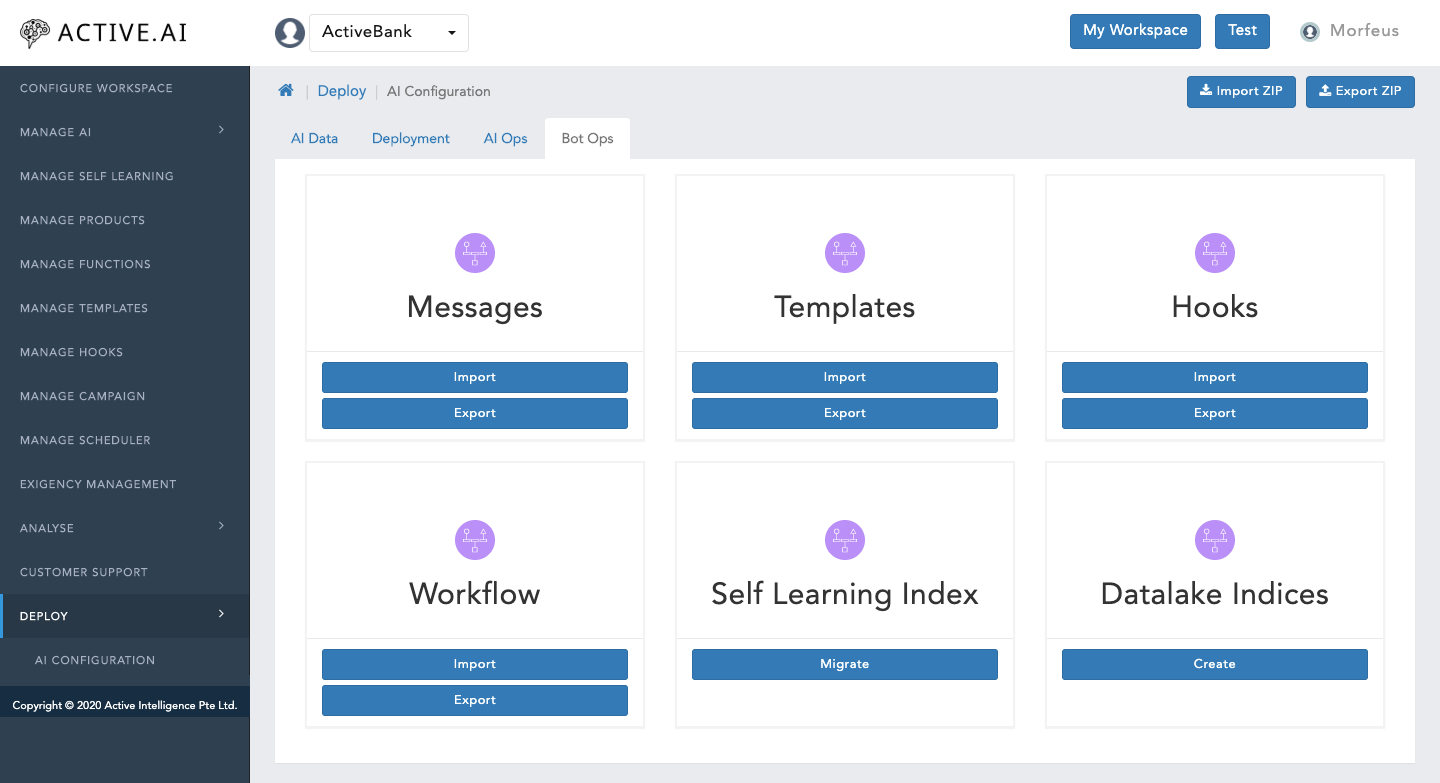

Export/Import Workspace

If you want to migrate your workspace configuration or clone it you can export and import your workspace. The export zip file contains all the artefacts of the workspace including Channels, Functions, Hooks, Rules, AI Data, Templates, Workflows, etc. If you are importing into an existing workspace the existing configuration will be over writen.

Importing a Workspace

You can import a workspace configuration by following these steps:

- Goto Morfeus Admin portal

- Click on 'Add Workspace' under My workspace

- Enter Workspace Name

- Select Product Type

- Click on Create Workspace

- Go to dashboard

- Click on menu icon of the created workspace click on 'Import Workspace'

- Upload a ZIP file that contains Configurations, Rules, Hooks, Functions, Templates, etc.

- Click 'Yes' on popup. (Are you sure you want to overwrite ?)

Exporting a Workspace

You can export the workspace by following these steps:

- Goto Morfeus Admin portal

- Go to Dashboard

- Navigate to the workspace(which you want to export)

- Click on menu icon on that workspace

- Click on 'Export Workspace'

It will download a ZIP file containing Channels, Functions, Hooks, Products, Rules, AI Data , Templates, Workflows, etc.

Deleting a Workspace

If you want to delete the not required or unnecessary workspaces then you can delete those workspaces by following these steps:

- Goto Morfeus Admin portal

- Go to Dashboard

- Navigate to the workspace(which you want to export)

- Click on menu icon on that workspace

- Click on 'Delete Workspace'

- Click 'Delete' on popup. (Are you sure you want to delete workspace?)

It will delete all the configuration of your workspace. And the workspace will be no longer available on your dashboard.

Managing Admin Users

This feature will allow you to manage the users for your workspace by adding the users, managing the users to your workspace. So this section will take you through how to add users, manage users, etc.

Eg; If you want to share your workspace with your colleagues or with someone for testing or other perspectives then you can add them as a user to the workspace and also you can manage the users for your workspace.

Note: Super admin, workspace admin or a user with the appropriate security profile can add or manage the user.

Eg; You can give them access to your workspace to the user as admin, Security, Operations, Customer Support, etc to test or contribute in your workspace to make your bot respond better.

Adding Users/ User ACL

This feature will allow you to add a user to your workspace. You can add users to the workspace by following these steps:

- Goto Morfeus portal

- Click on 'Security'

- Click on 'Add User'

- Enter the details (Name, phone number, email, country)

- Provide access 'role' (Admin, Business, Customer support, Data(Smalltalk/FAQ), Data, Security, Operations, Reports, Tech operations)

- Select 'User Verify Type' (Internal, Active Directory)

- Select 'Authorization type' (Root, Maker, Checker)

- Assign a workspace (you can assign for multiple & all also)

- Click on Add

Enabling and Disabling Users

You can Enable/Disable a user for your workspace by following these steps:

- Goto Morfeus portal

- Click on 'Security'

- Click on the edit icon (For which user you want to enable/disable)

- Click on Enable/Disable

User Audit Trail

The user audit trail feature is to audit the violation/access issue that happened on the APIs. You can even check the APIs(which are violated) with the user's name, IP Address, date & time. Also, you can export those Audit Trail by clicking on the 'Export' button.

Eg; If the user has tried to hit some API & API has refused the connection or sent unauthorized error etc.

- Goto Morfeus portal

- Click on 'Security'

- Navigate to Audit Trail

User Authentications

You can add the user to access or manage your workspace. From a user authentication perspective, you can manage the user's authentication type like how you are allowing them to log in to your workspace & manage your workspace. We are supporting two types of authentication(User Verify Type) as Internal or Active Directory.

You can provide authentication for the added users or while adding the users as:

Internal: It will authorize the user based on credentials that are stored for that user.

Active Directory: It will authorize the user based on AD/LDAP that will be configured in the AD/LDAP 'Configuration' section under 'Settings'

Azure AD SSO

let's start to find out how we can do integrate admin with Azure AD using SAML implementation.

We can divide this into three parts

1) Azure AD portal Configuration

2) SAML-SSO configuration

3) Admin configuration

Azure AD Portal Configuration

Refer to quick starthere

post was done with the configurations kindly add User Attributes and claims as shared in the below screenshot as we are using the same to create a session

adid is added as an additional property u can map any value which we are mapping inside the employeeId of admin user

*Always Reply URL should match with the endpoint which ur requesting in saml-sso

SAML-SSO configuration

- Get the war fromArtifactory

- If u want to do customization clone the project from theBitbucket

- deploy to the place where morfeusadmin exists

refer the below properties and update as explained in below

- saml.discovery.entity-id=https://sts.windows.net/1ea4687b-53b1-4285-babf-3f92fe915792/

- spring.thymeleaf.cache=false

- spring.thymeleaf.enabled=true

- spring.security.saml2.network.read-timeout=10000

- spring.security.saml2.network.connect-timeout=5000

- spring.security.saml2.service-provider.basePath=https://localhost:8443/saml-sso/

- spring.security.saml2.service-provider.sign-metadata=false

- spring.security.saml2.service-provider.sign-requests=false

- spring.security.saml2.service-provider.want-assertions-signed=true

- spring.security.saml2.service-provider.single-logout-enabled=true

- spring.security.saml2.service-provider.encrypt-assertions=false

- spring.security.saml2.service-provider.name-ids=urn:oasis:names:tc:SAML:2.0:nameid-format:persistent, urn:oasis:names:tc:SAML:1.1:nameid-format:emailAddress, urn:oasis:names:tc:SAML:1.1:nameid-format:unspecified

- spring.security.saml2.service-provider.keys.active.name=sp-signing-key-1

- spring.security.saml2.service-provider.providers[0].alias=enter-into-saml-sso-alias

- spring.security.saml2.service-provider.providers[0].metadata=https://login.microsoftonline.com/1ea4687b-53b1-4285-babf-3f92fe915792/federationmetadata/2007-06/federationmetadata.xml?appid=8e34231d-e07e-44bd-a5b1-732aa0be5974

- spring.security.saml2.service-provider.providers[0].skip-ssl-validation=true

- spring.security.saml2.service-provider.providers[0].link-text=enter-into-saml-sso-link-text

- spring.security.saml2.service-provider.providers[0].authentication-request-binding=urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST

- app-context-path=/morfeusweb/#/dashboard

saml.discovery.entity-id : You can copy the value from Azure Ad Identifier and replace the value as shown in the above image

spring.security.saml2.service-provider.basePath : Path in which application deployed

ex:- Our application is deployed in server https://localhost:8443 and war name is saml-sso hence we need to mention https://localhost:8443/saml-sso/

spring.security.saml2.service-provider.providers[0].metadata : You can copy the value from App Federation Metadata Url and replace the value as shown in the above image

app-context-path : In which application needs to be redirect post successful authentication.

can also give complete endpoint as shown below

https://localhost:8553/morfeusweb/#/dashboard

rest were optional configuration

All the above were default available values with updated properties u can name the file as saml-sso.properties and place anywhere in the server and mention the path in catalina.sh as shown below

JAVA_OPTS="$JAVA_OPTS -Dsaml.sso.resources=/Users/userName/Documents/active_apps/develop/properties"

where properties folder should contain the file named saml-sso.properties which you have created with updated values

Admin configuration(https://localhost:8443/morfeusweb/)

Workspace settings > SSO Tab > search for " SSO URL" and provide value in which saml-sso has hosted something like (https://localhost:8443/saml-sso/)( Default Value: NA )

Workspace settings > SSO Tab > search for "Default Role" and u can choose dropdown based on ur requirement, Below table gives Accessibility based on roles ( Default Value: Admin )

| Role | Accessability |

|---|---|

| Admin | Can access everything |

| Business | Analyse, Manage Product, Manage Template, Manage campaign |

| Customer Support | Customer Support |

| Data - SmallTalk/FAQ | samll talk, faq,self learning |

| Data | Manage AI |

| Security | Security Page |

| Operations | Deploy(AI and Workspace Configuration) |

| Reports | Analyse |

| Tech Operator | Configure workspace |

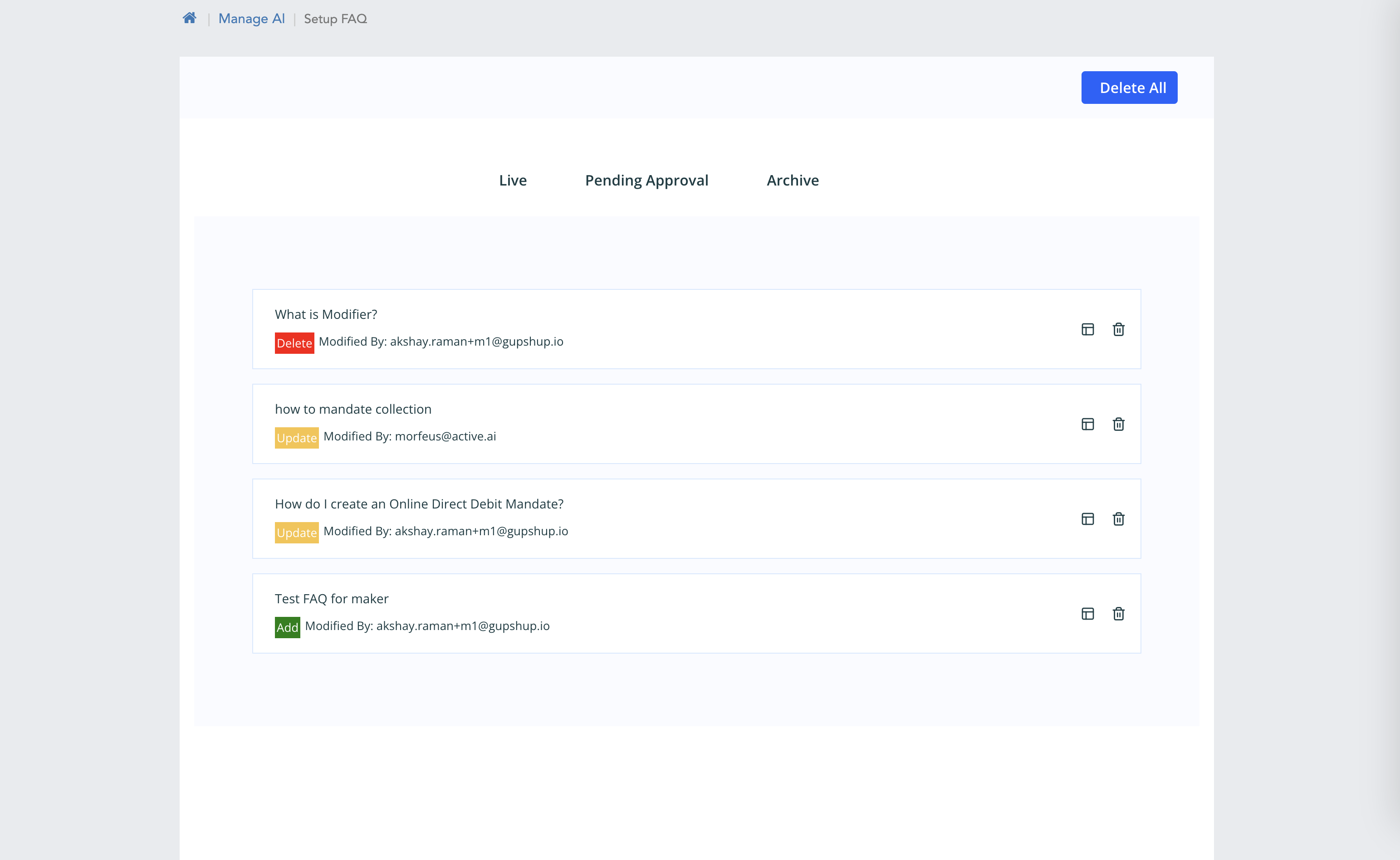

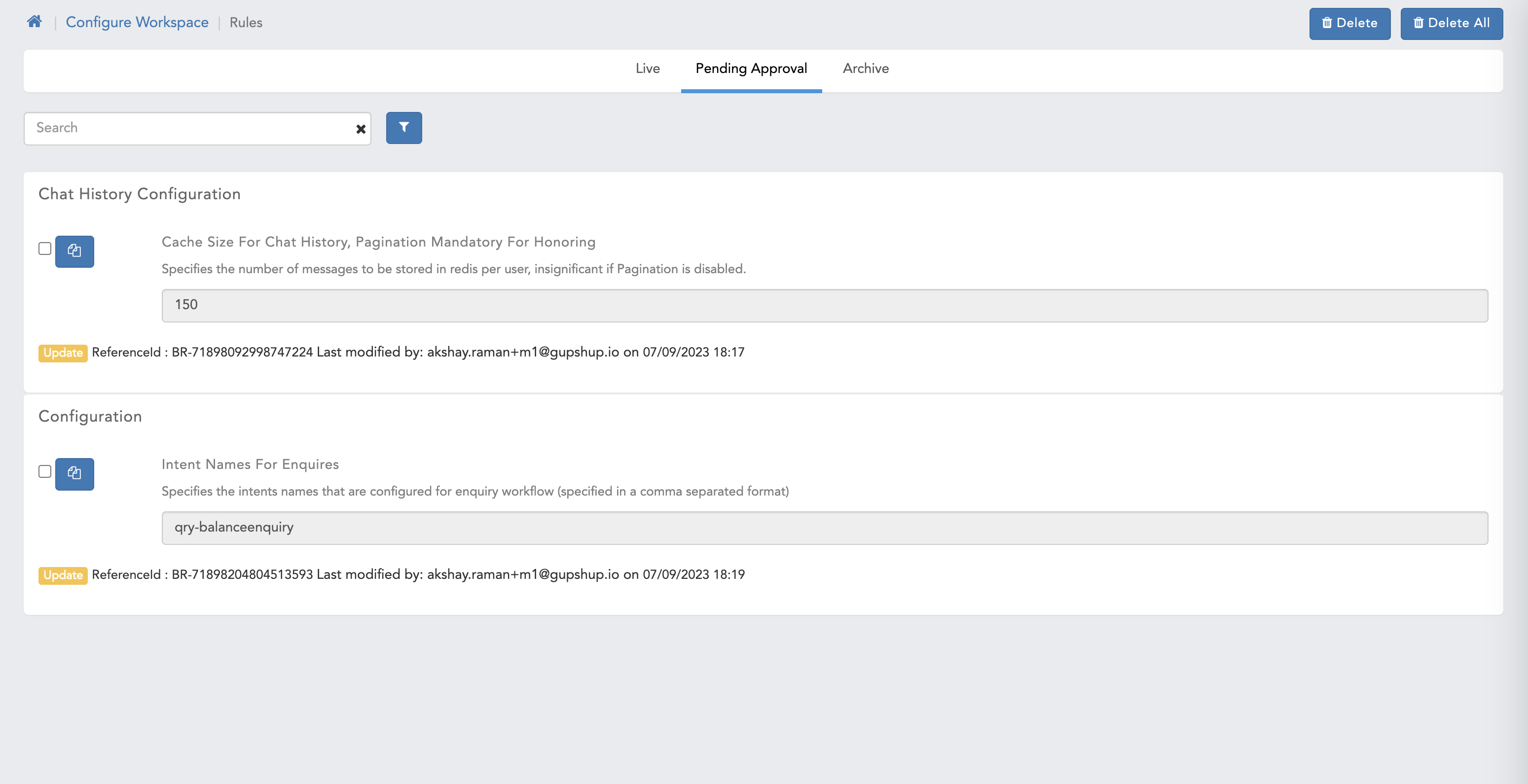

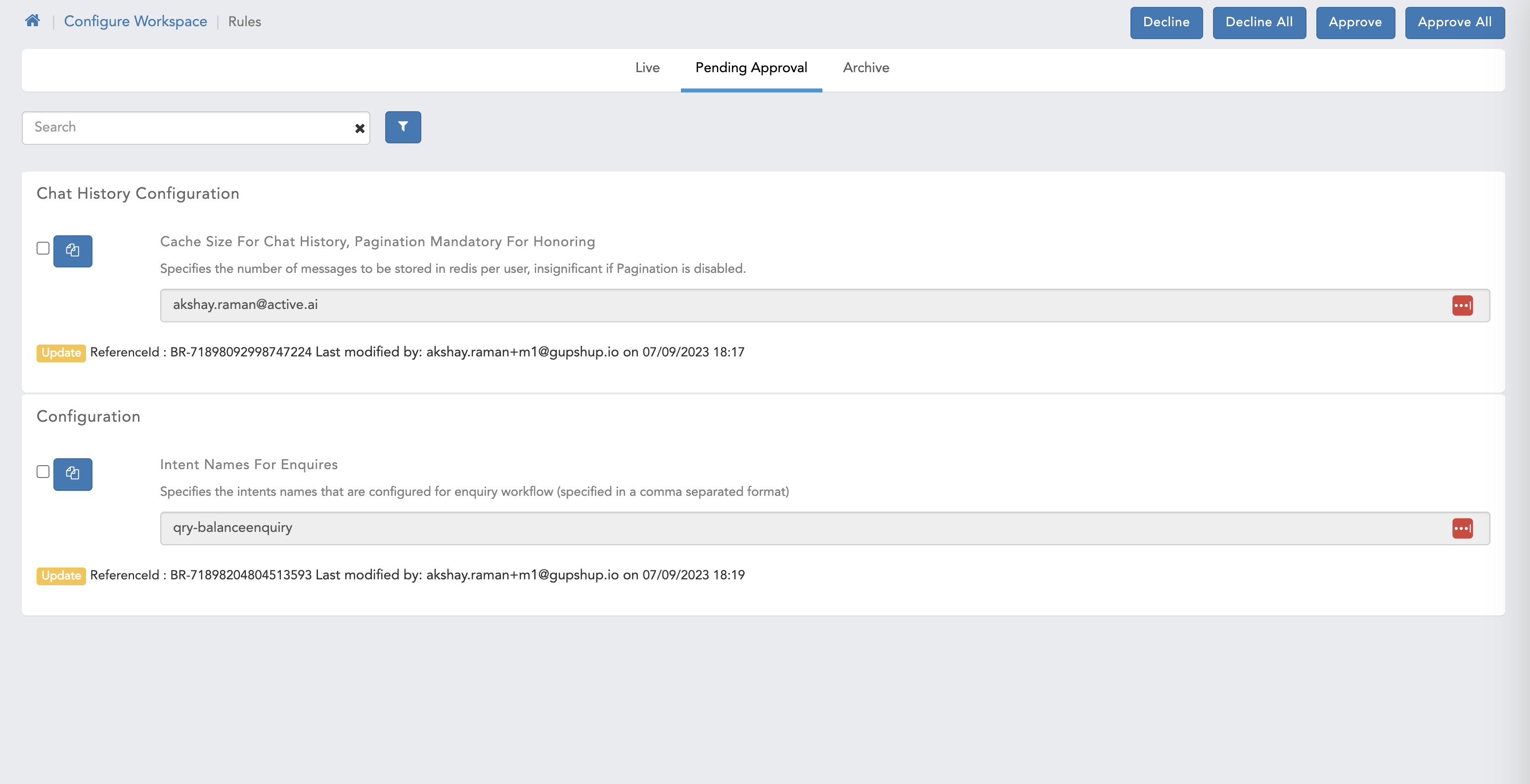

Maker Checker

Maker checker allows us to not directly modify the certain things, in this there are two types of user one is maker and other is checker. A maker user creates/update the data but this first goes to to checker user now checker user can either approve this requerst or rejects the request.

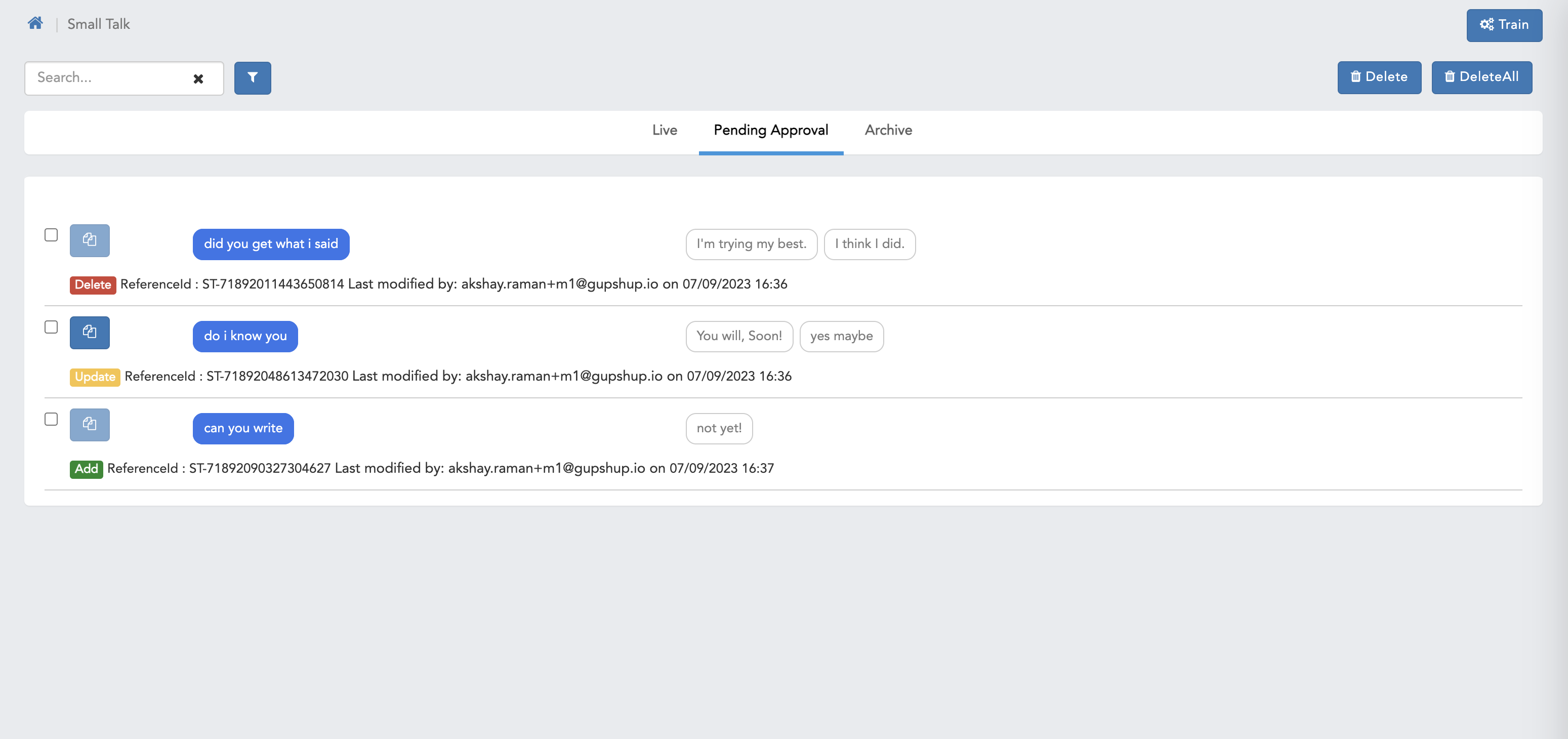

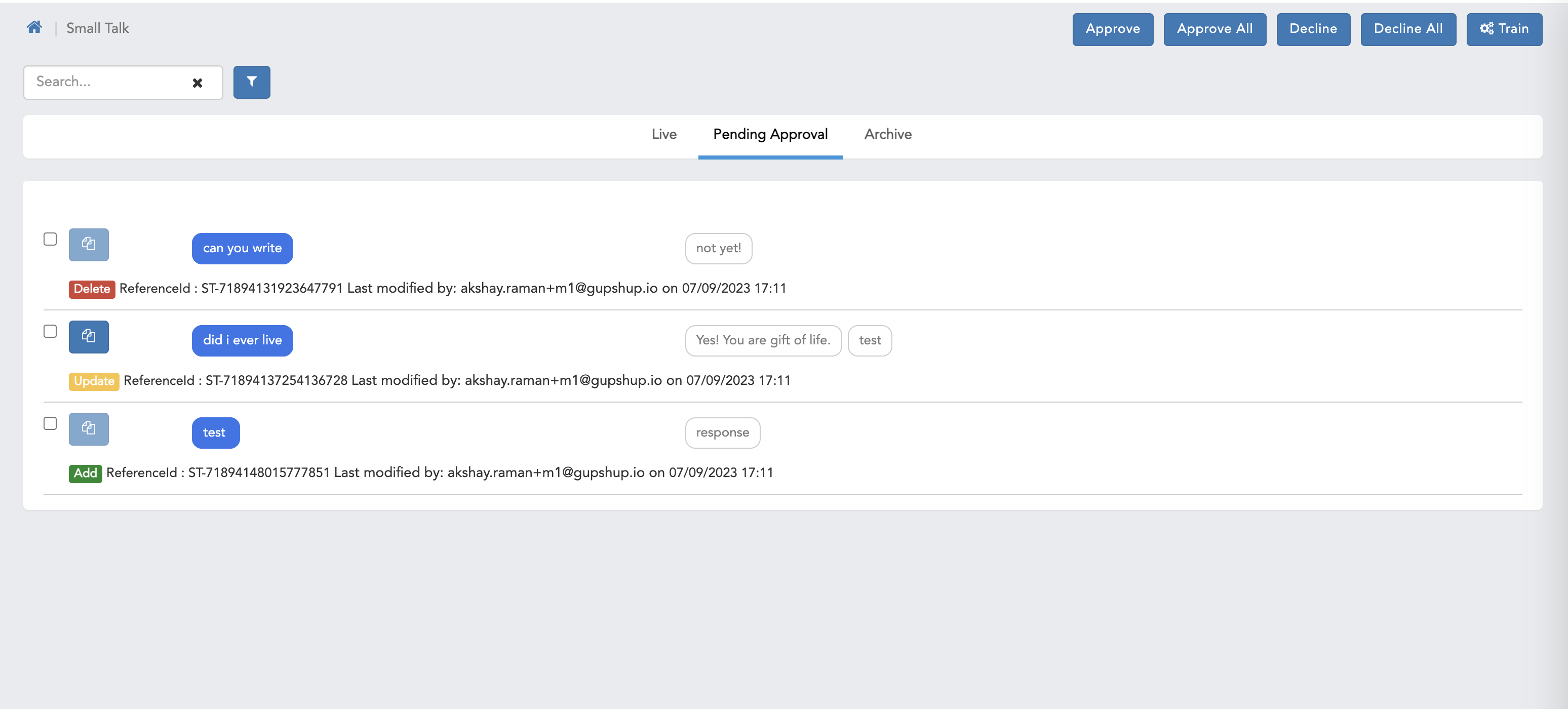

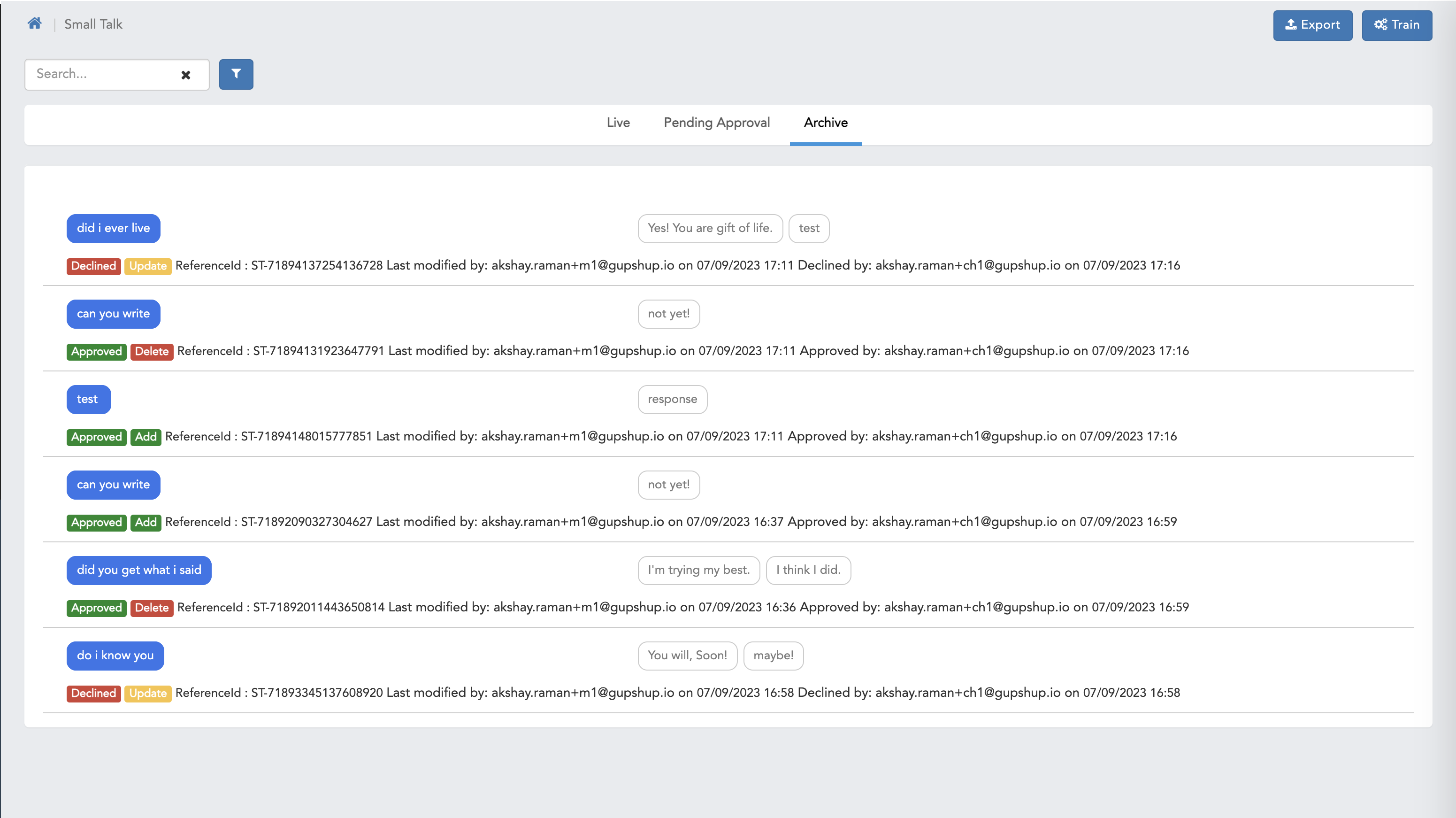

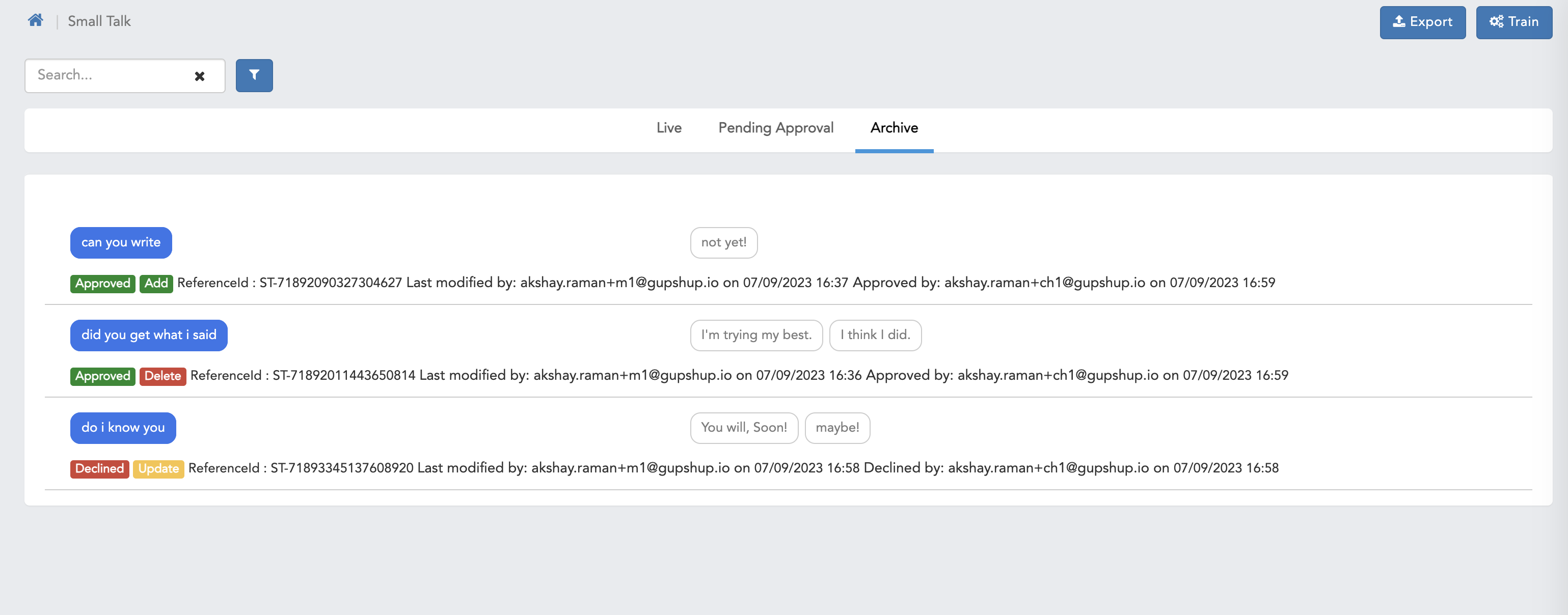

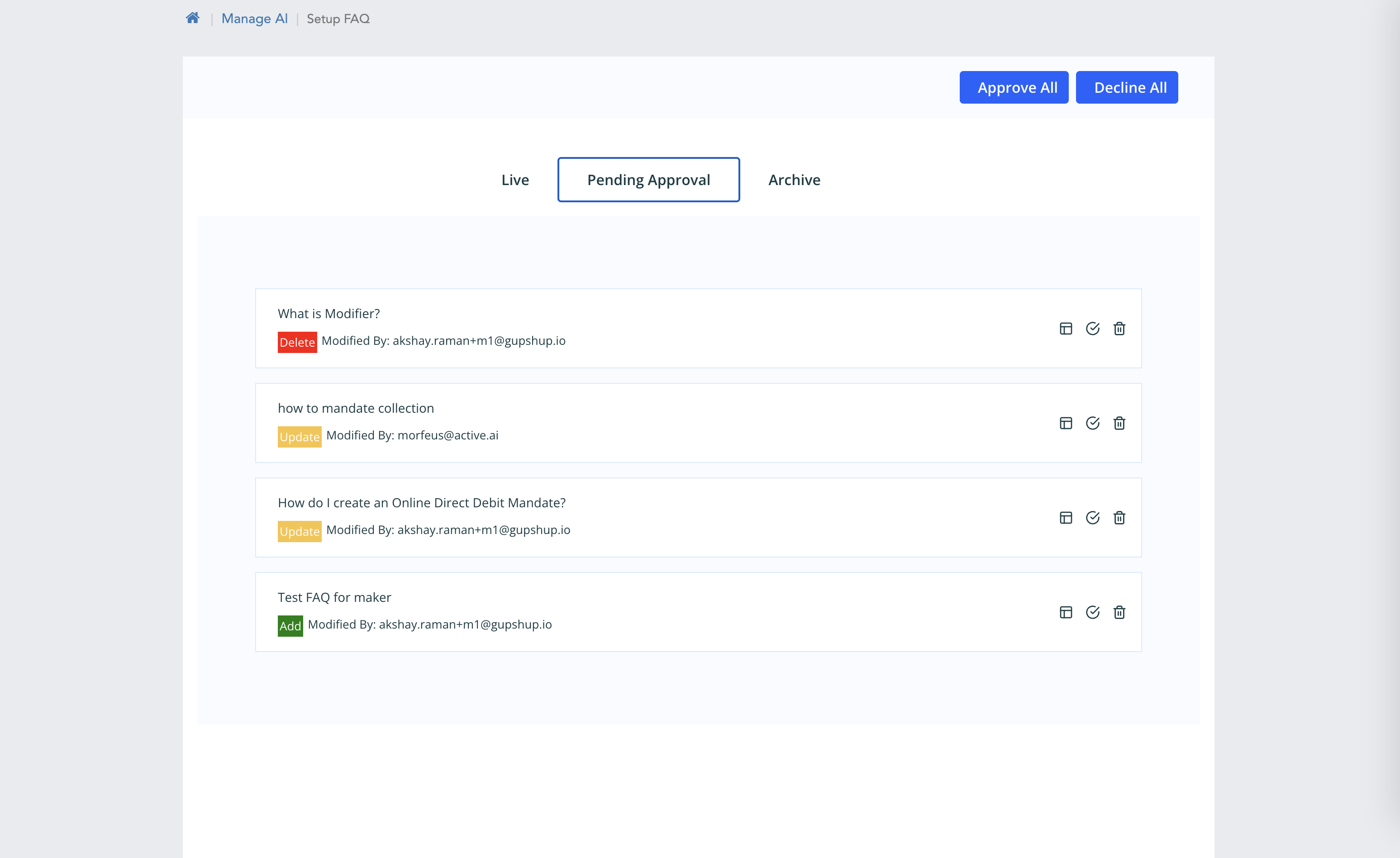

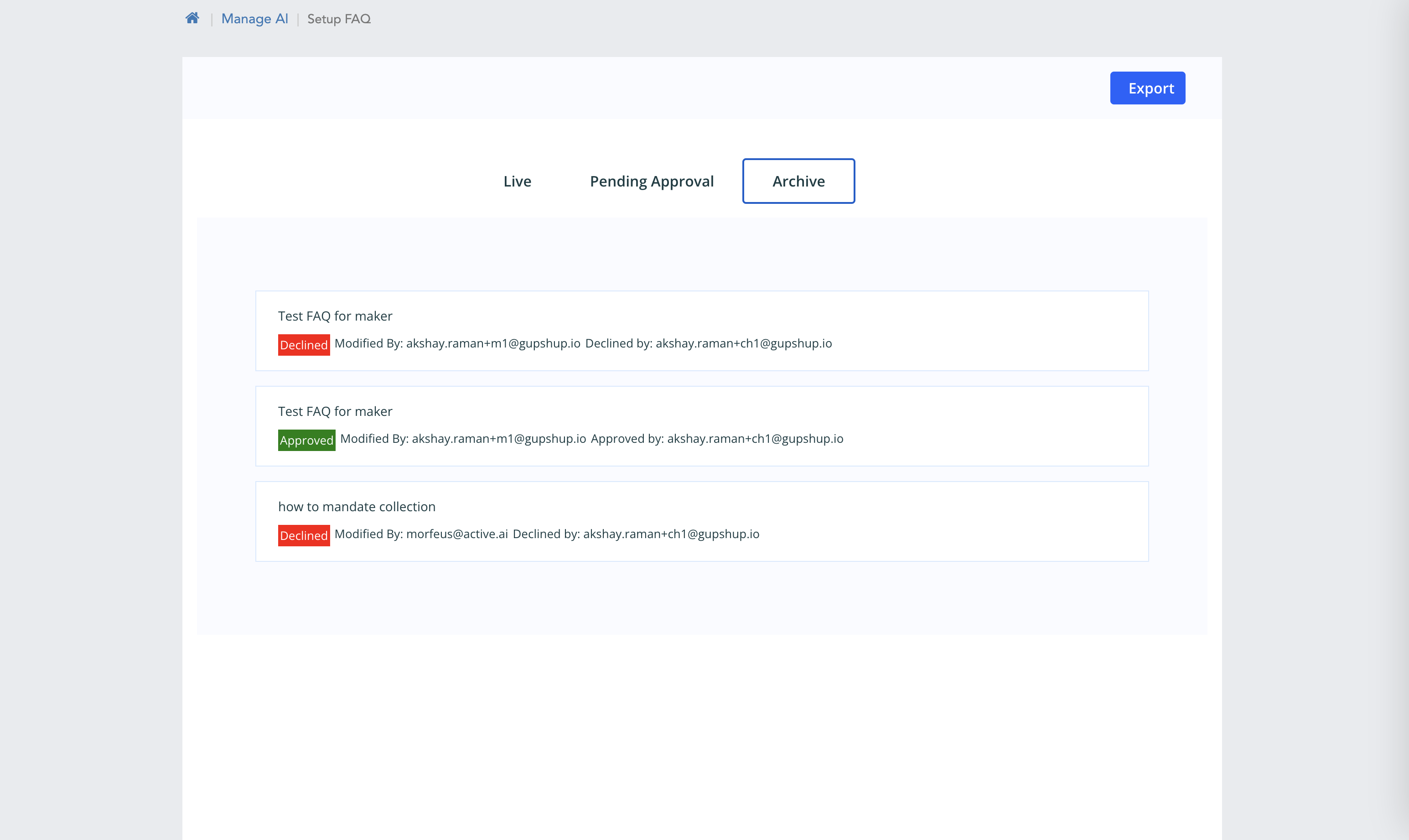

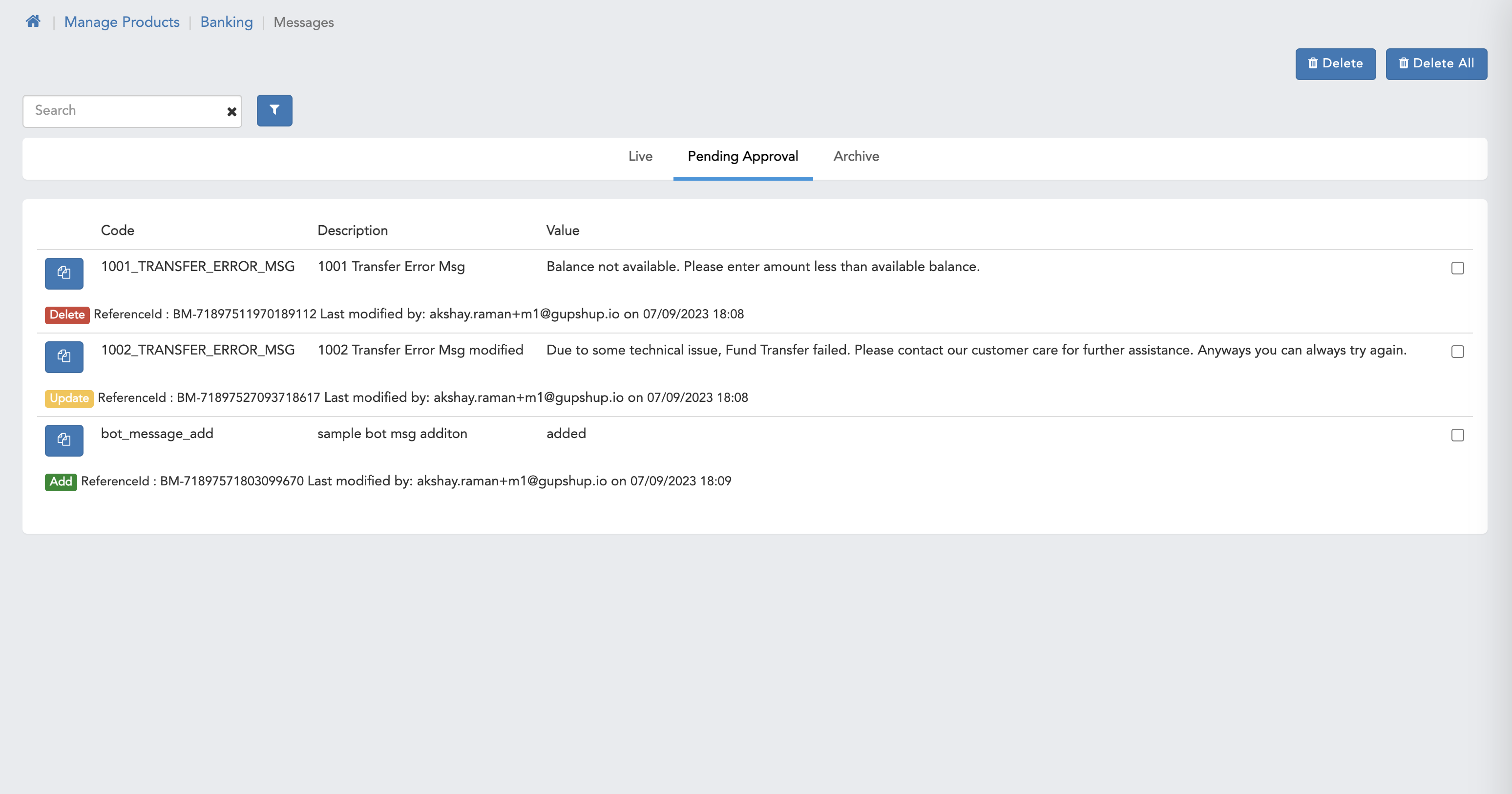

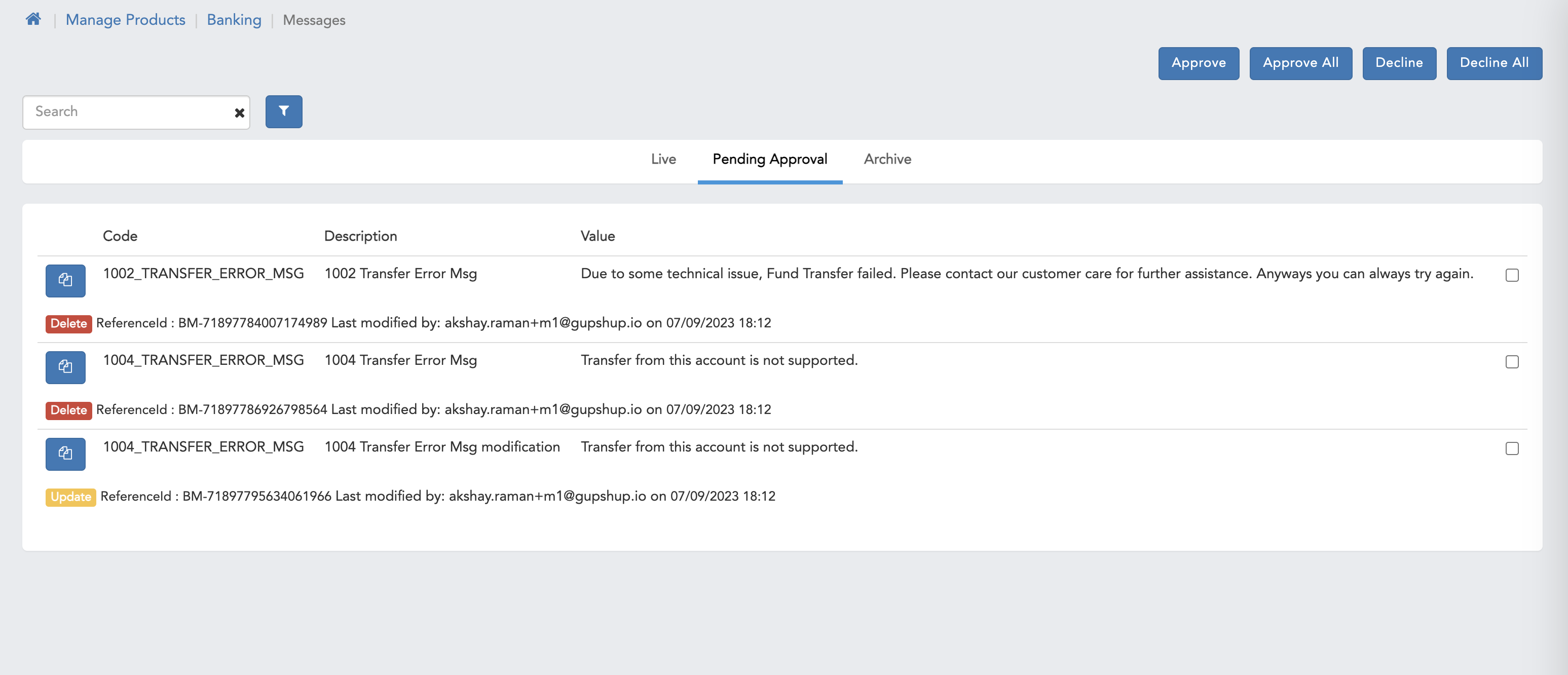

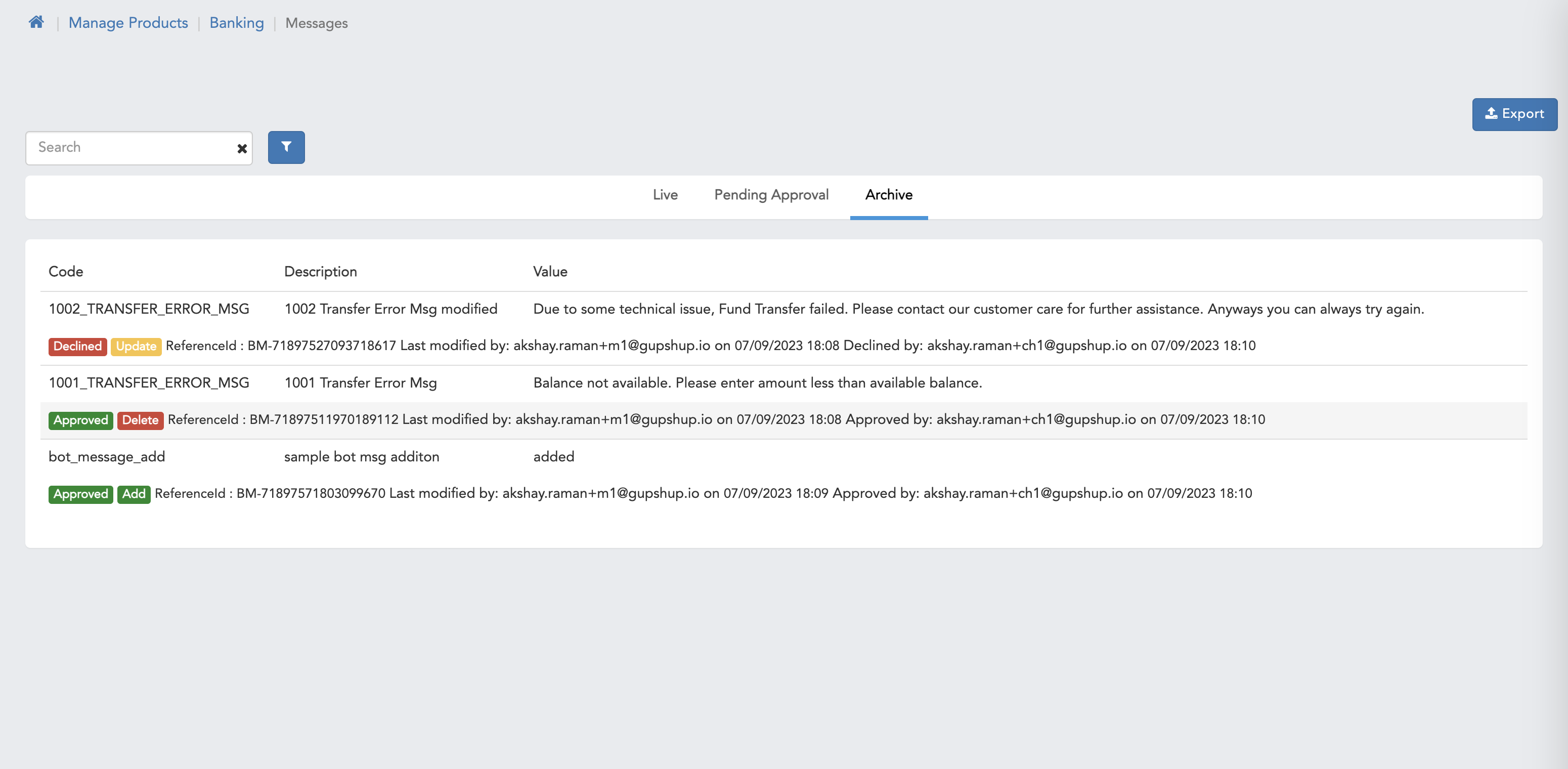

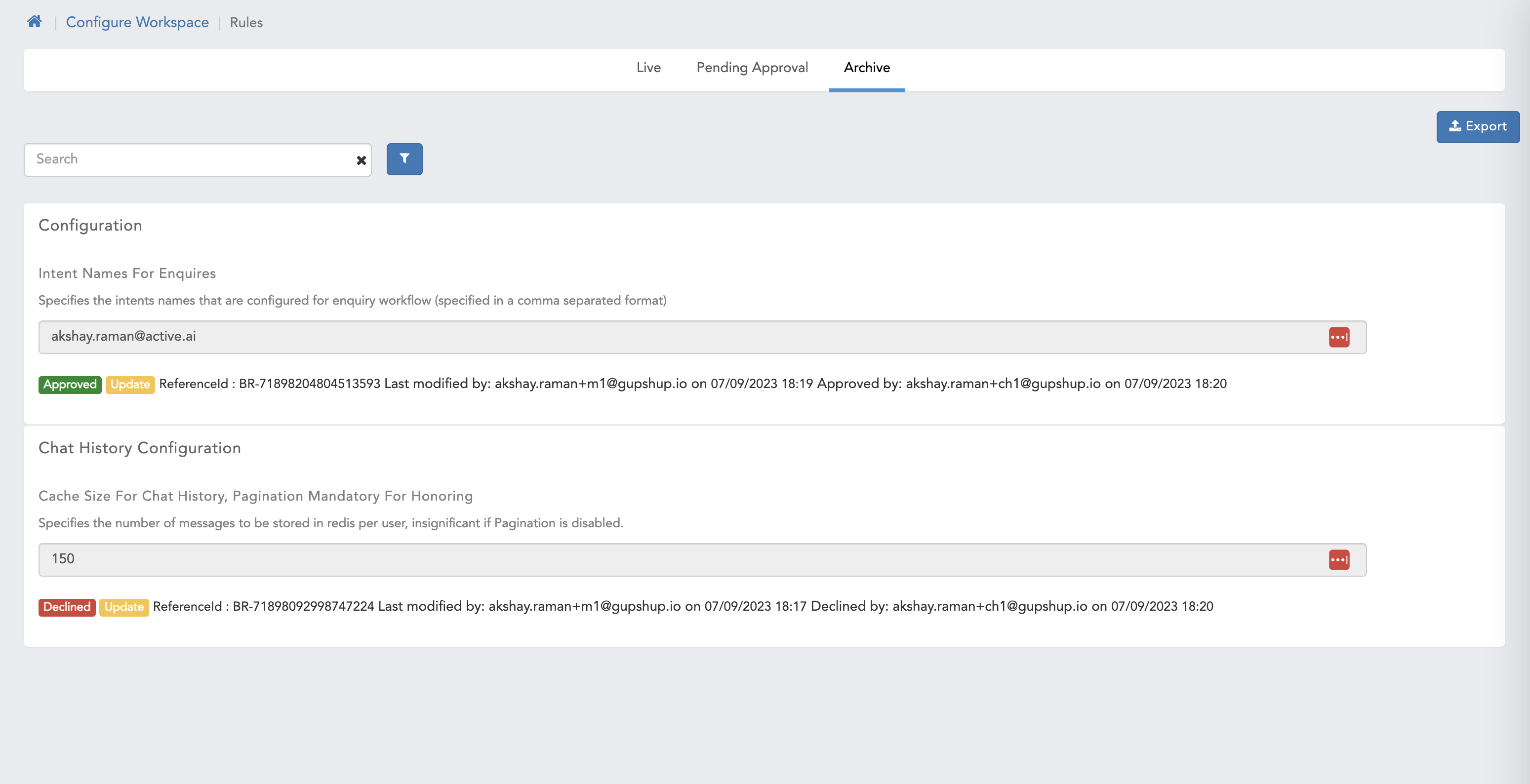

There are 3 classification for maker checker i.e. Live, Pending Approval & archive

- Live - Showcase existing data on which maker user can perform various actions like add, delete, edit.

- Pending Approval - This page showcase all the data that is pending for checker user approval.

- Archive - Showcases all the data on which action taken taken on pending approval page on this page there is a functionality to export data which is applicable for both maker and checker users.

Actions Supported On Maker/Checker

- Maker

- Delete

- Delete all

- Checker

- Approve

- Approve all

- Decline

- Decline all

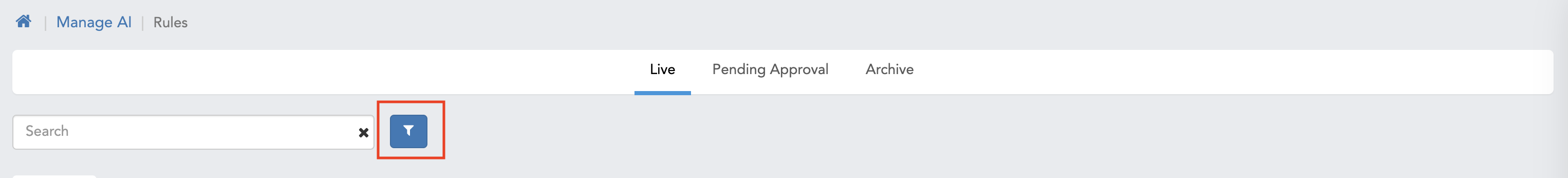

Filter There are various filters available for both maker checker users to filter data this filter can be accessed by clicking on icon shown below

We have different filters for each category.

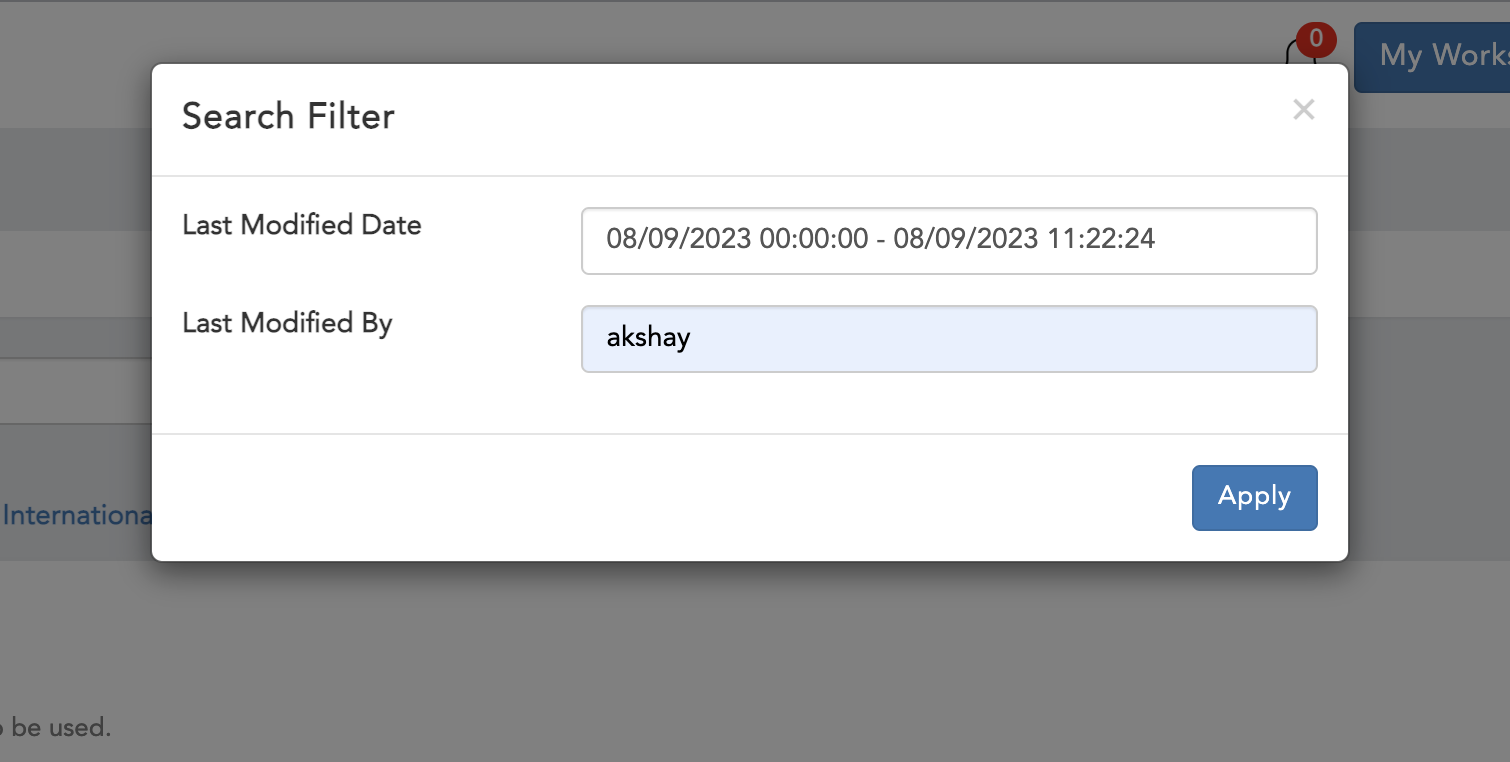

Live

- Last Modified Date

- Last Modified By

Figure 1.2 Live Filter

Figure 1.2 Live Filter

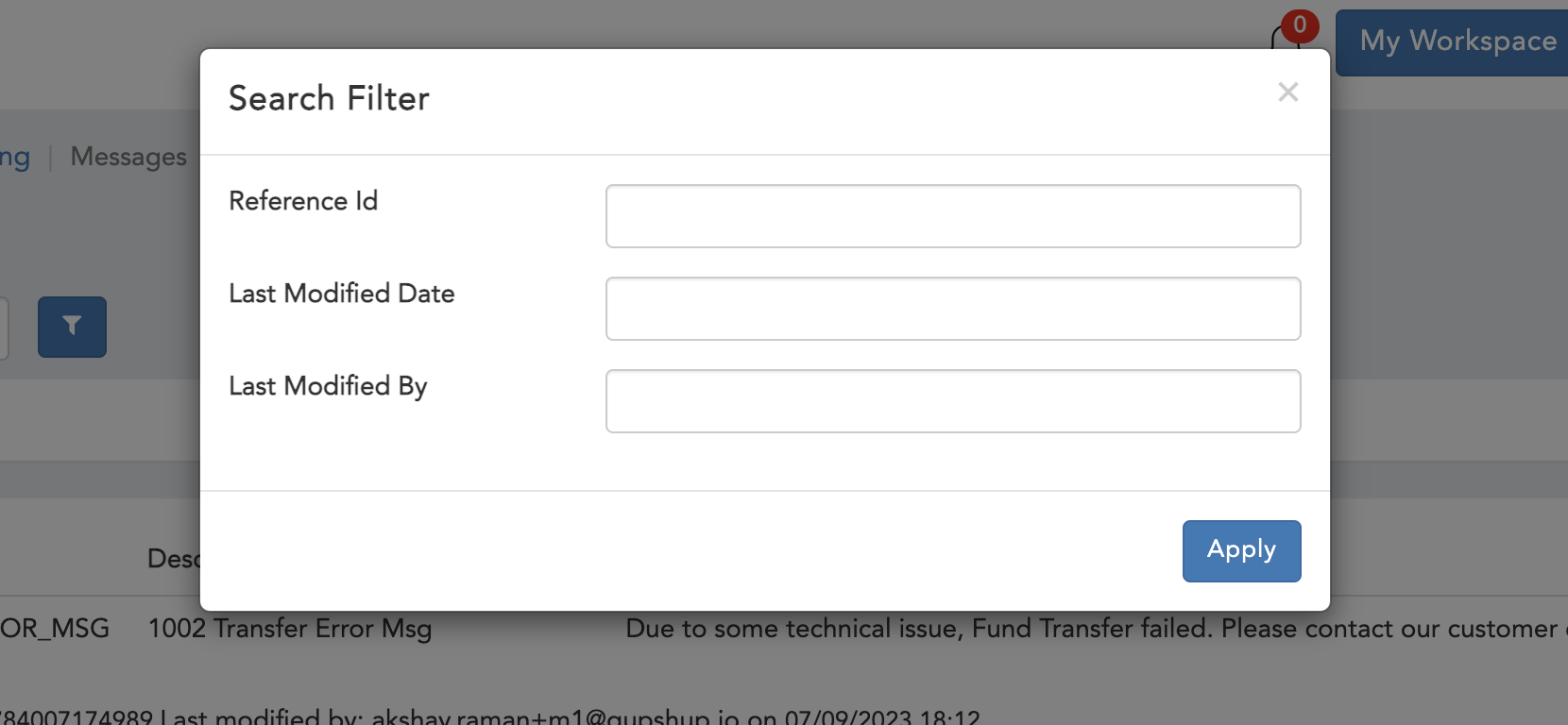

Pending Approval

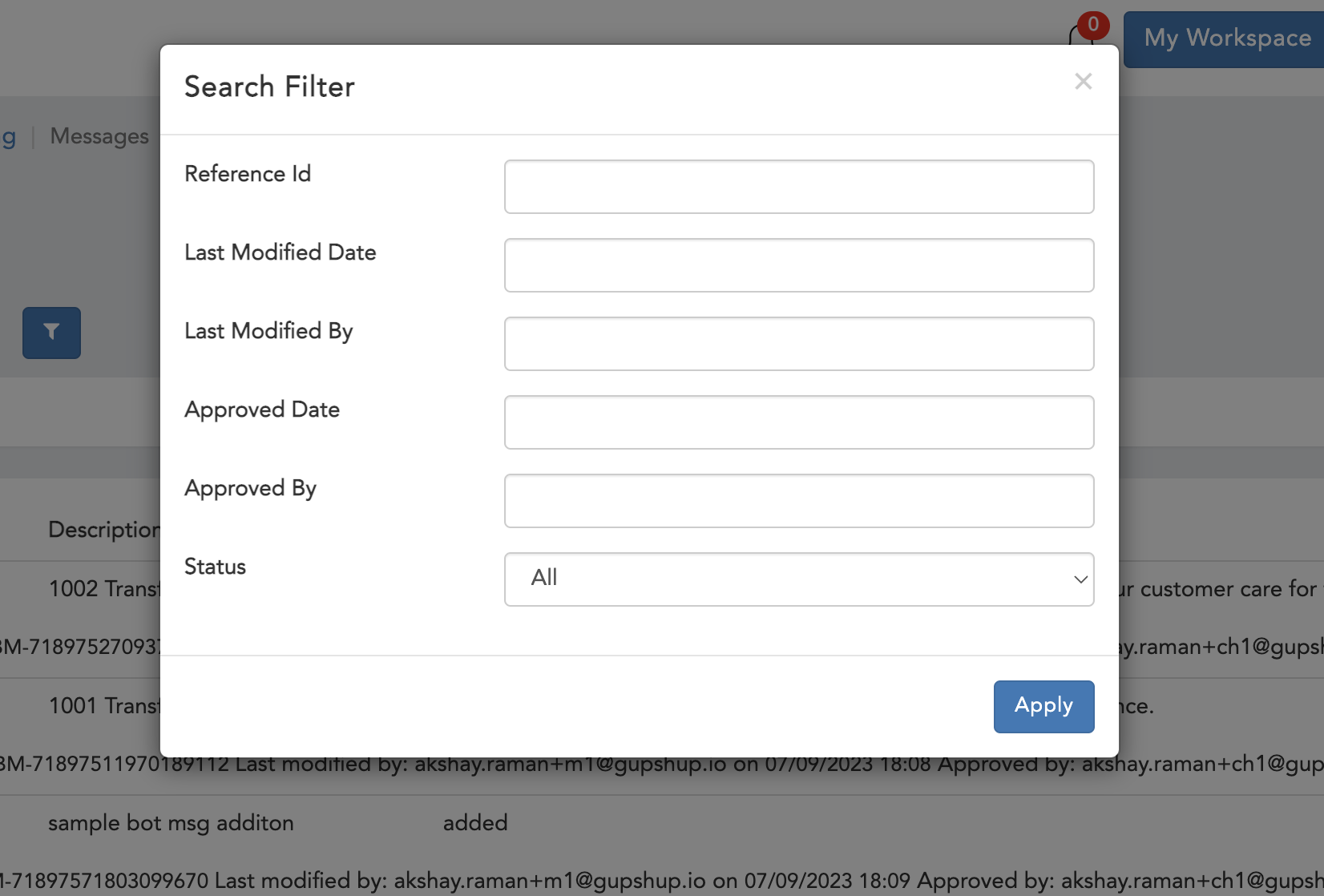

- Reference Id

- Last Modified Date

- Last Modified By

Figure 1.3 Pending Approval Filter

Figure 1.3 Pending Approval Filter

Archive

- Reference Id

- Last Modified Date

- Last Modified By

- Approved Date

- Approved By

- Status

- All

- Approved

- Decline

Figure 1.4 Archive Filter

Figure 1.4 Archive Filter

Actions Supported On Data

- Add

- Edit/Update

- Delete

- Delete all

- Import

Once you perform any action using maker user the data will be sent to checker user for approval, Note a checker user is not allowed to perform any above action.

These data can be seen by both maker and checker on pending approval.

Here 4 options are available specifically for checker user i.e. approve, approve all, decline & decline all, and 2 options available for maker user i.e. delete & delete all

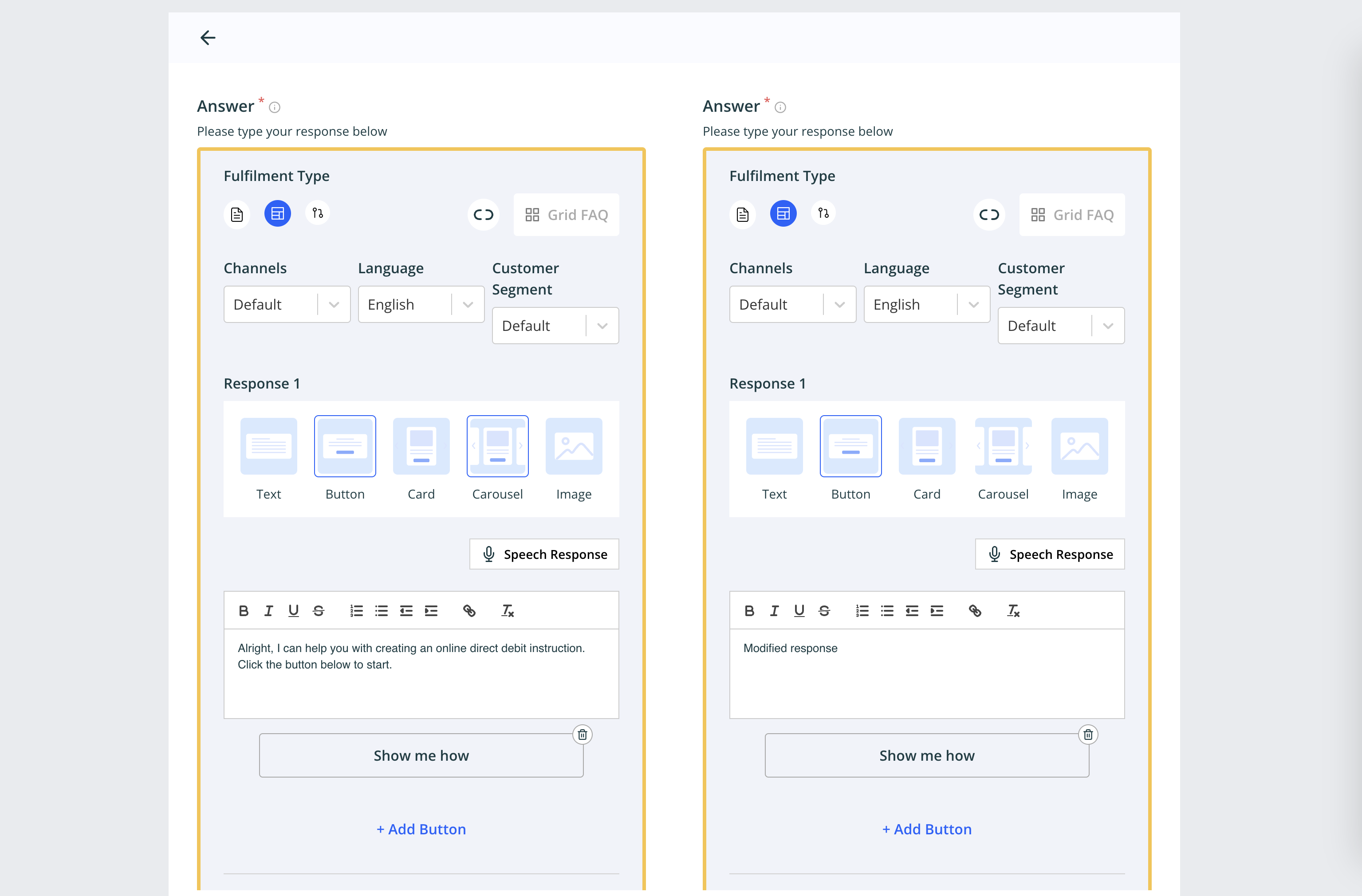

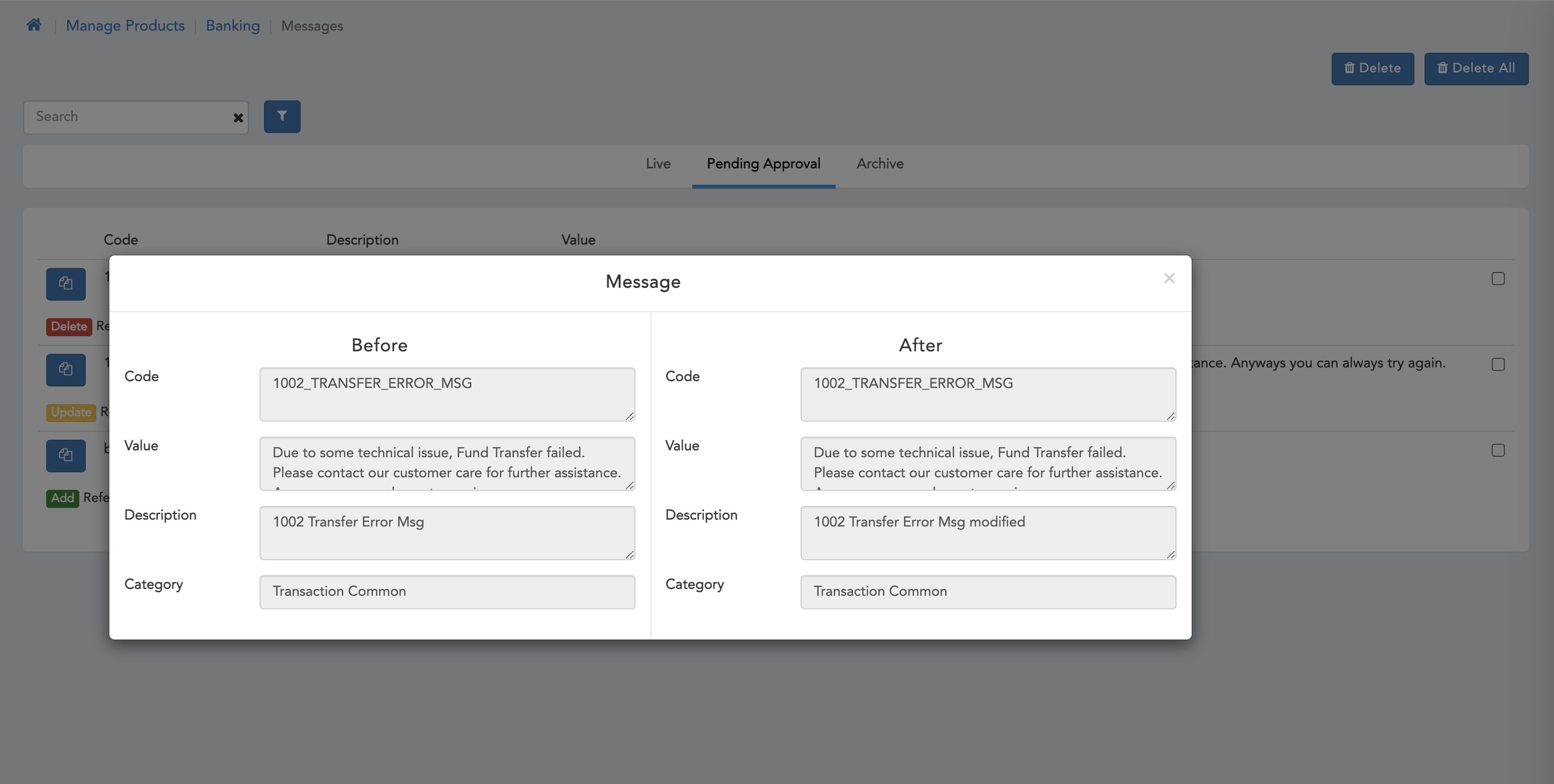

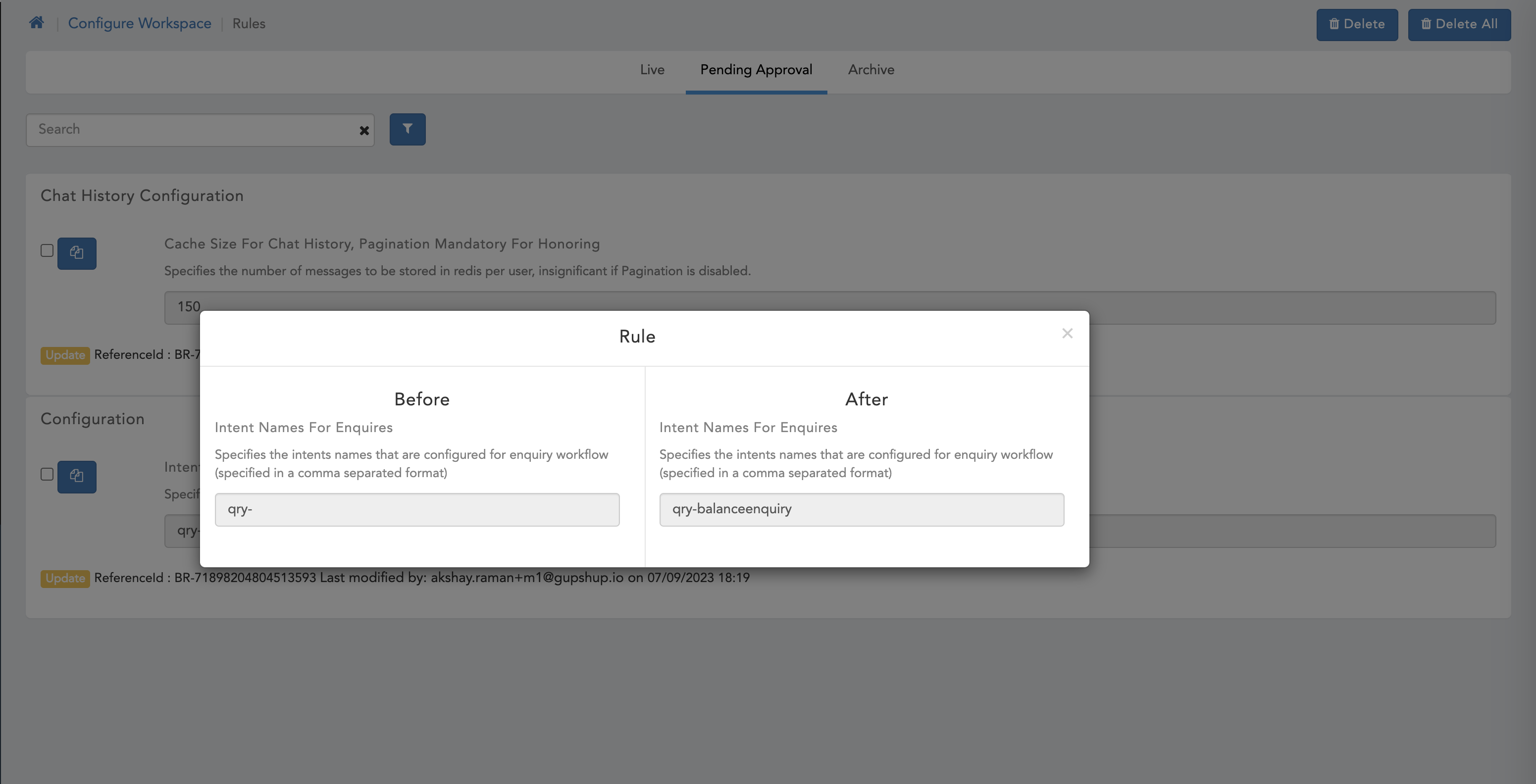

We can also compare data if update action is performed with the older data

Once we perform any of the above highlighted action the data will be processed further i.e (added, deleted or updated), this data can be seen on archive screen.

Modules Supported

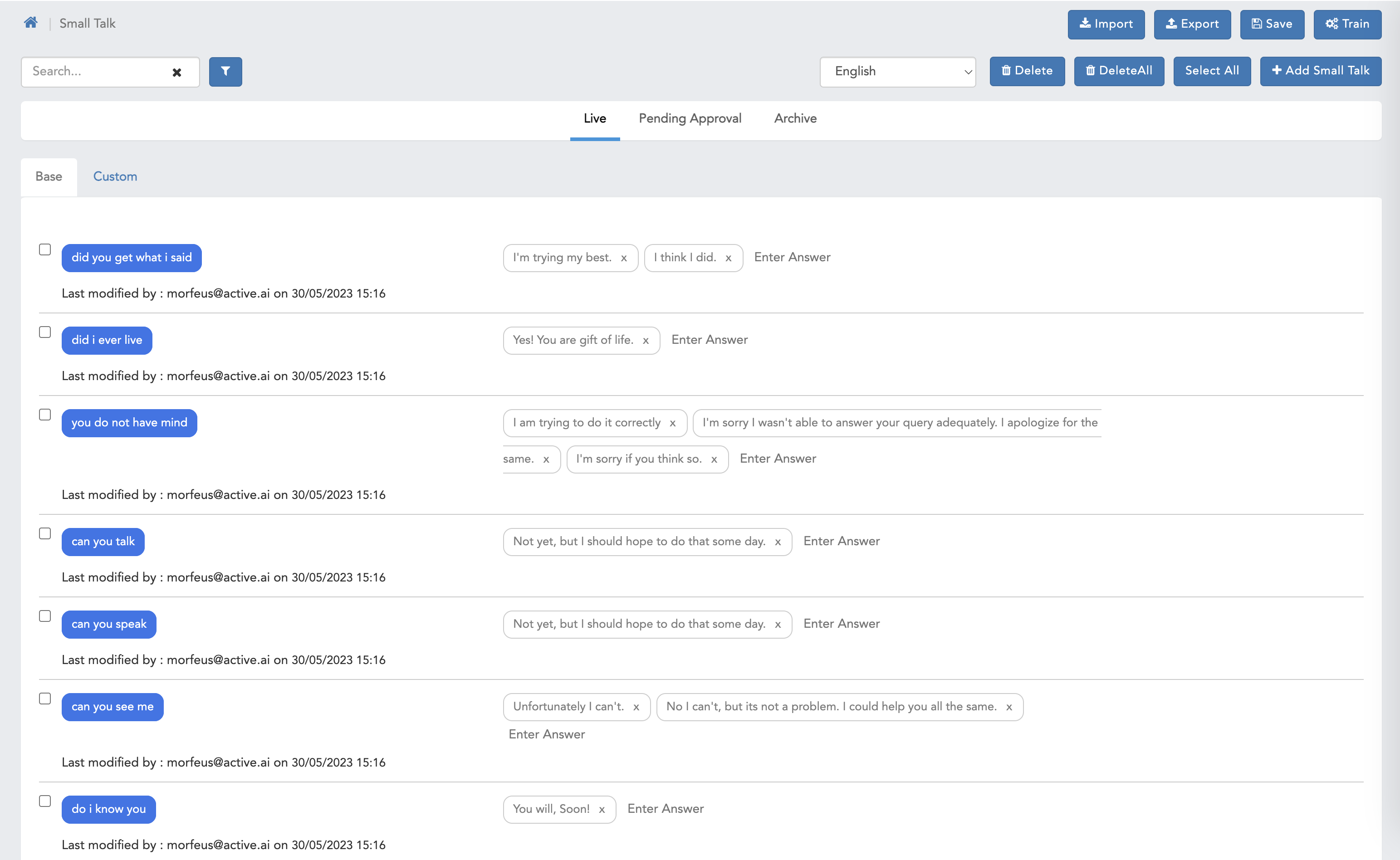

Smalltalk

- Here we can see default smalltalk page with all the functionalities with maker checker headers.

- Here we can see pending approval page for Maker user.

- Here we can see pending approval page for Checker user.

- Here we can see pending approval page with compare screen.

- Here we can see Archive screen.

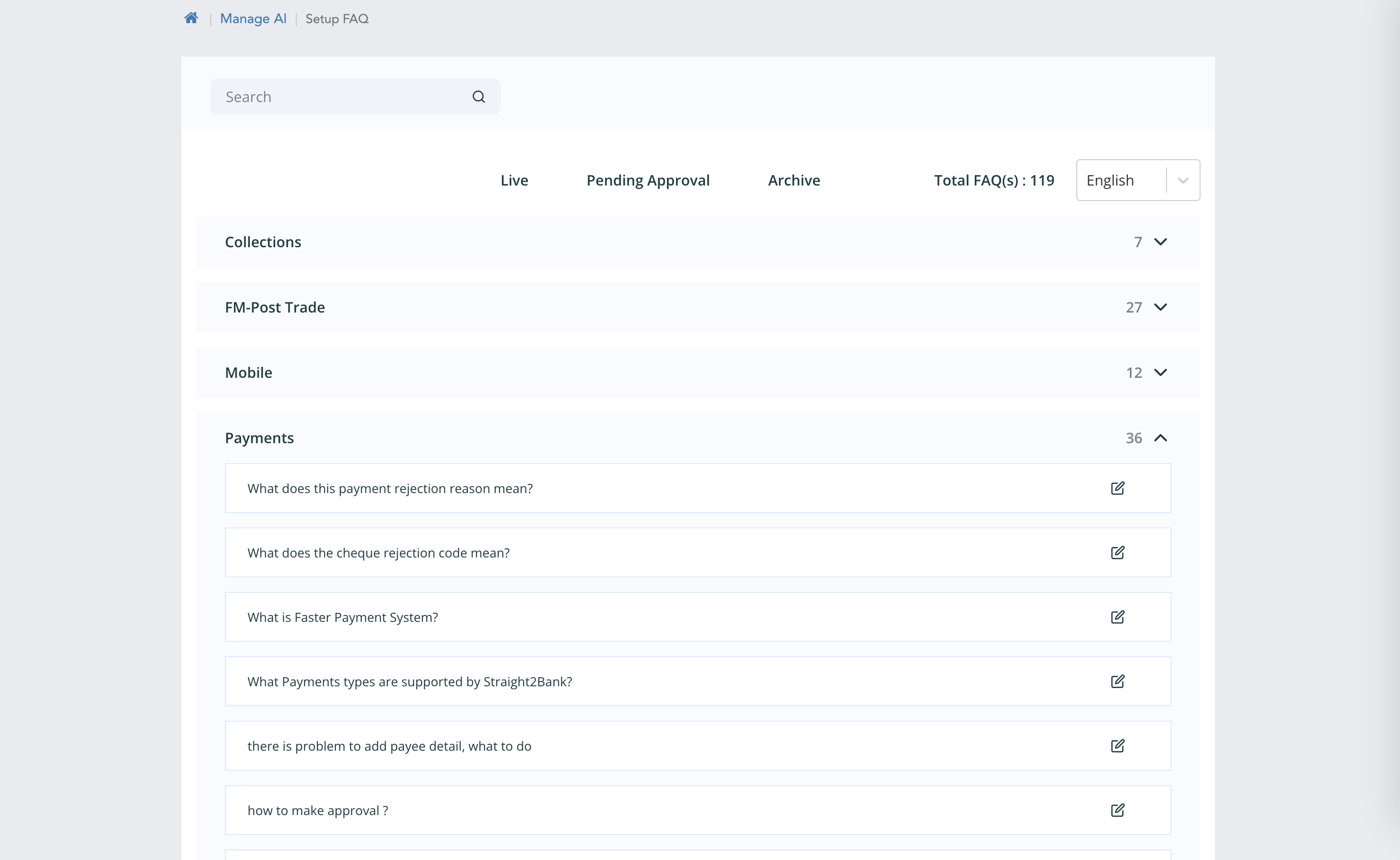

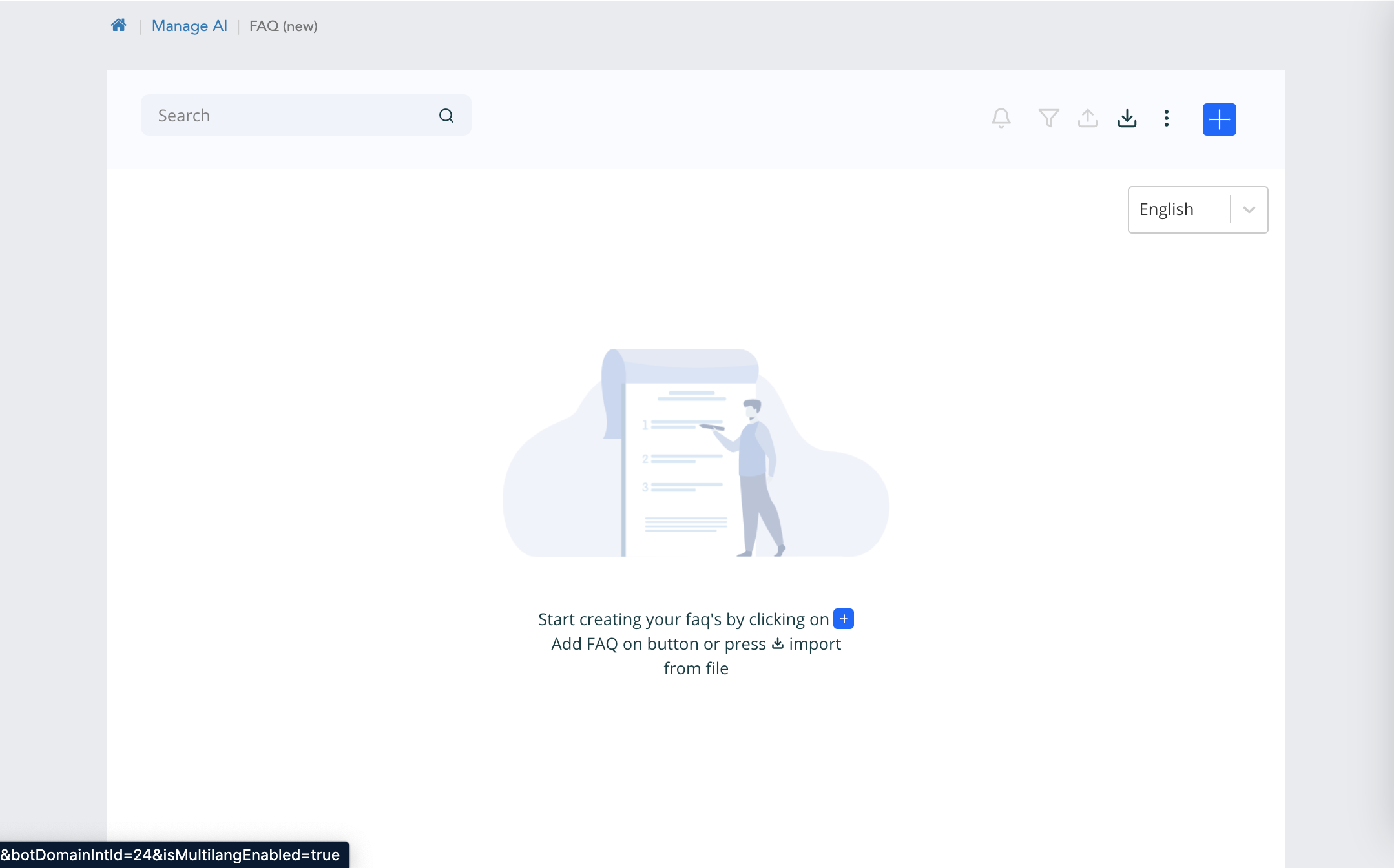

FAQ

- Here we can see default FAQ page with all the functionalities with maker checker headers.

- Here we can see pending approval page for Maker user.

- Here we can see pending approval page for Checker user.

- Here we can see pending approval page with compare screen.

- Here we can see Archive screen.

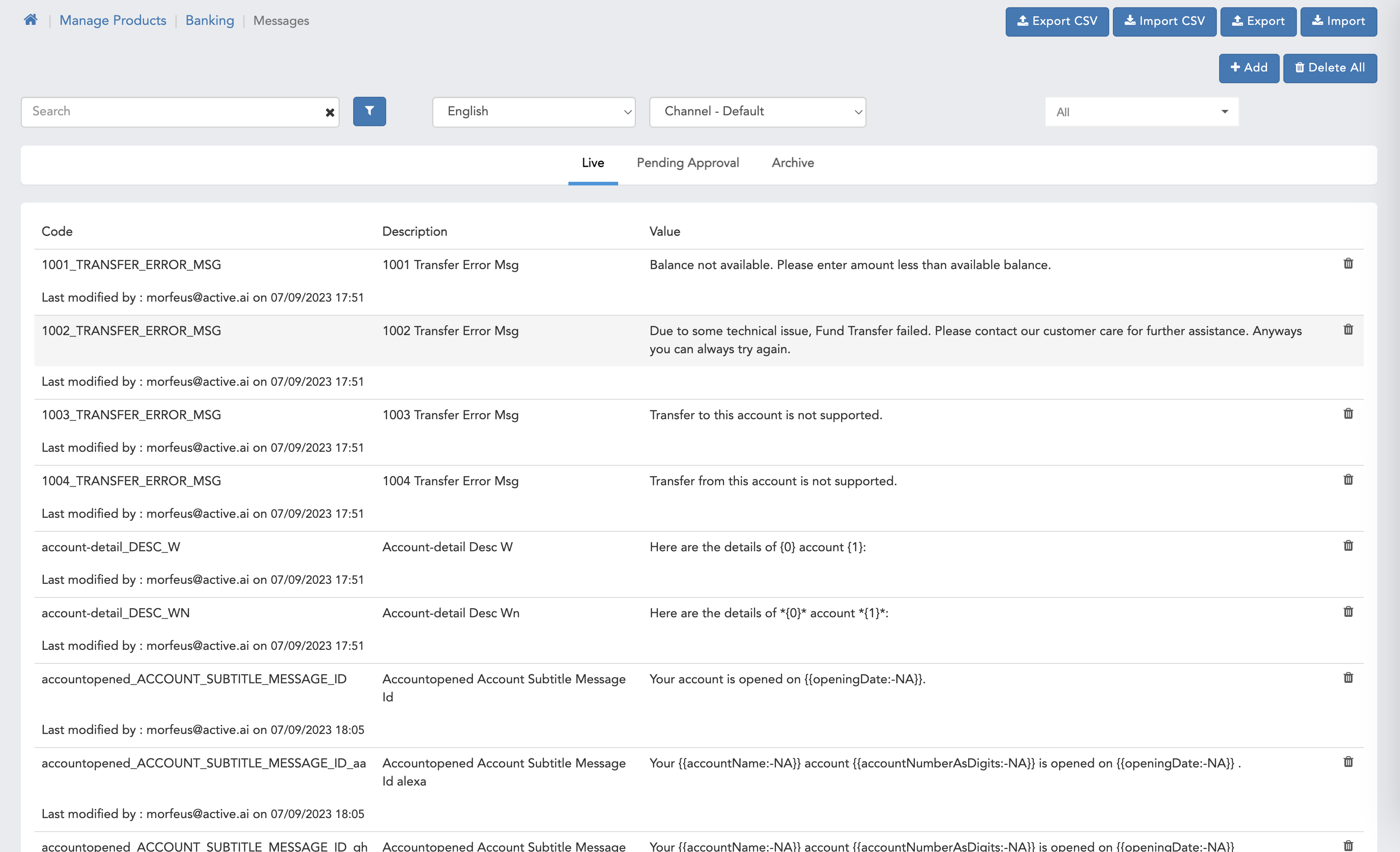

Bot Message

- Here we can see default Bot Message page with all the functionalities with maker checker headers.

- Here we can see pending approval page for Maker user.

- Here we can see pending approval page for Checker user.

- Here we can see pending approval page with compare screen.

- Here we can see Archive screen.

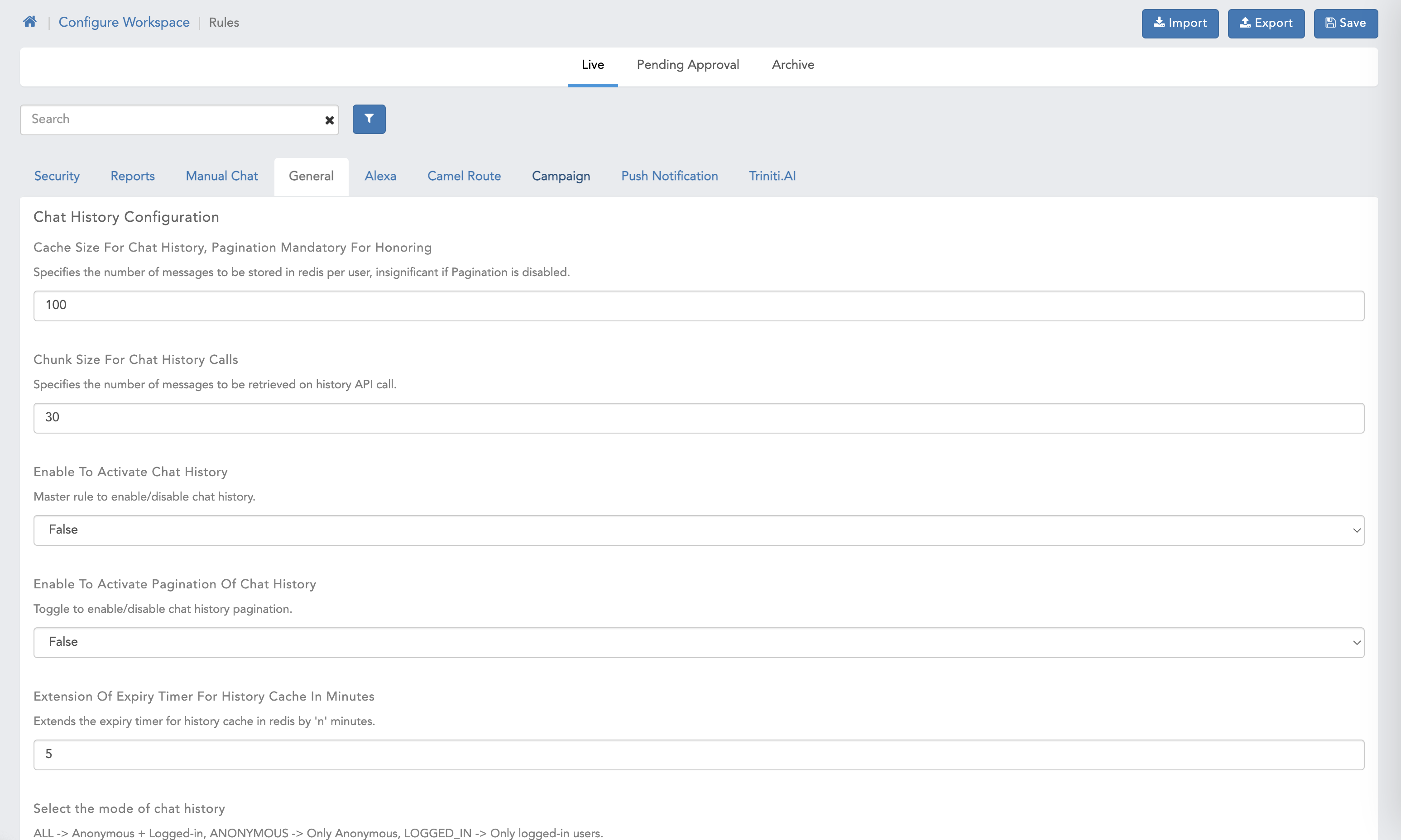

Manage Workspace Rules & Manage AI rules

In Rules we can only modify the rules addition and deletion option is not supported.

- Here we can see default Manage Workspace Rules & Manage AI rules page with all the functionalities with maker checker headers.

- Here we can see pending approval page for Maker user.

- Here we can see pending approval page for Checker user.

- Here we can see pending approval page with compare screen.

- Here we can see Archive screen.

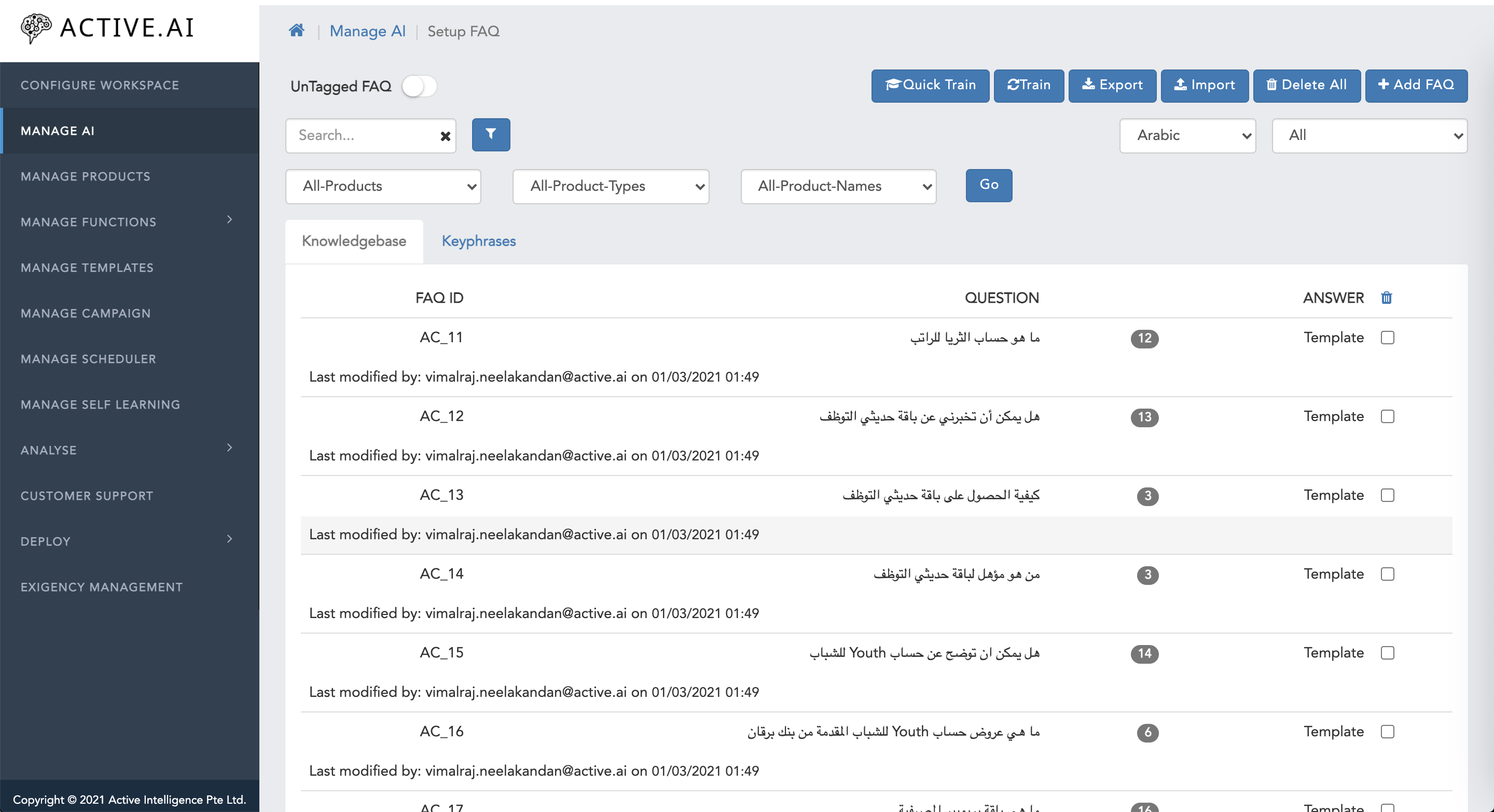

Managing FAQs

Overview

The FAQs (Frequently Asked Questions) are the customer-specific questions that might be asked by the users. FAQa are usually about the business oriented product offerings. FAQs are usually interrogative in nature.You can add FAQs to your workspace as per your requirements so that bot will give a response of those FAQs which user will ask to your bot.

The FAQ screen has been revamped and had new functionalities also for the faq data. It has some enhancement as well for the data curation, duplication check, search functionality, grid tab and filters. The changes are listed down in points.

Details

When we create a workspace, then at backend git sync happens. So new screen will have the status update for in progress, error or git sync completion for it.

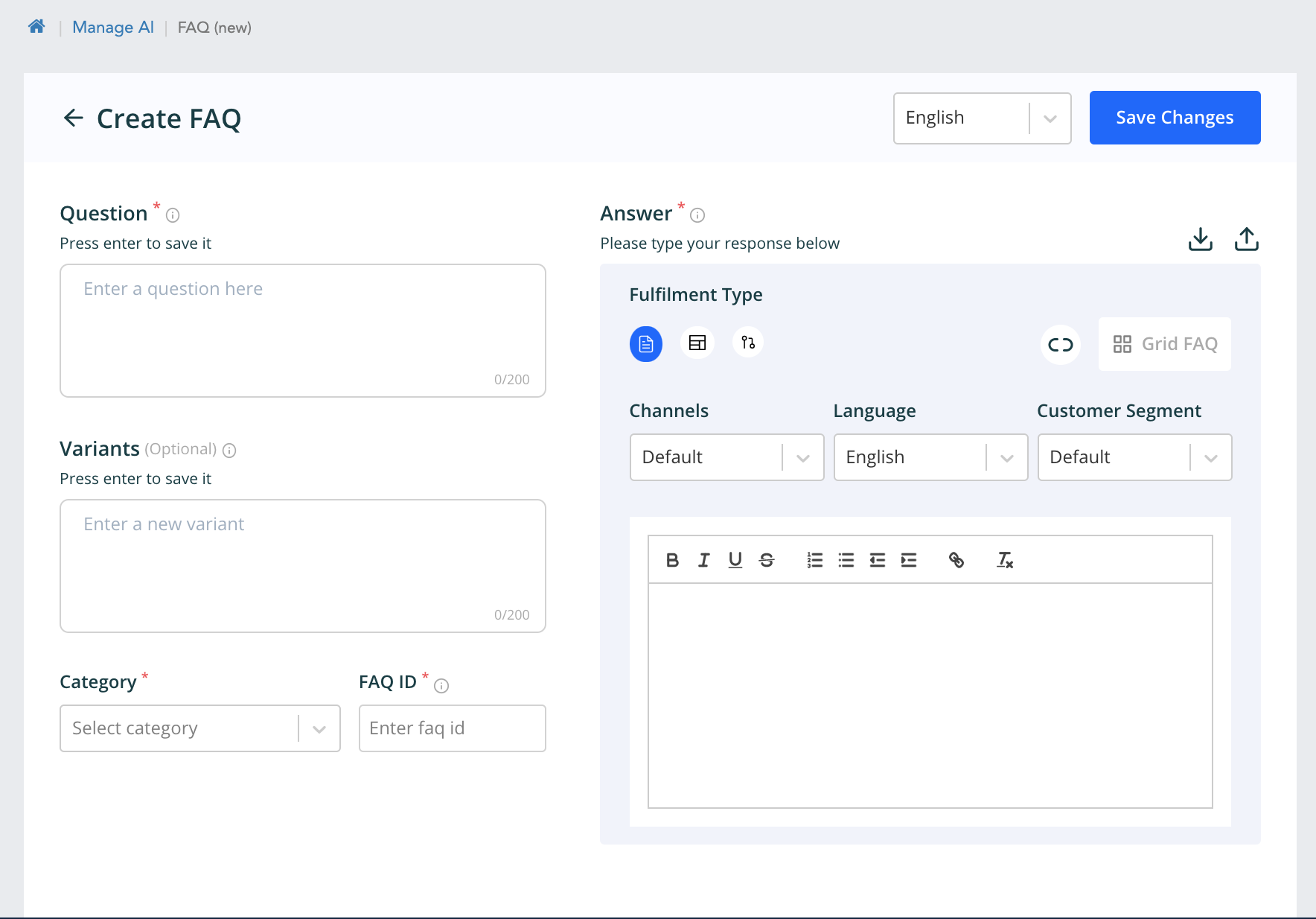

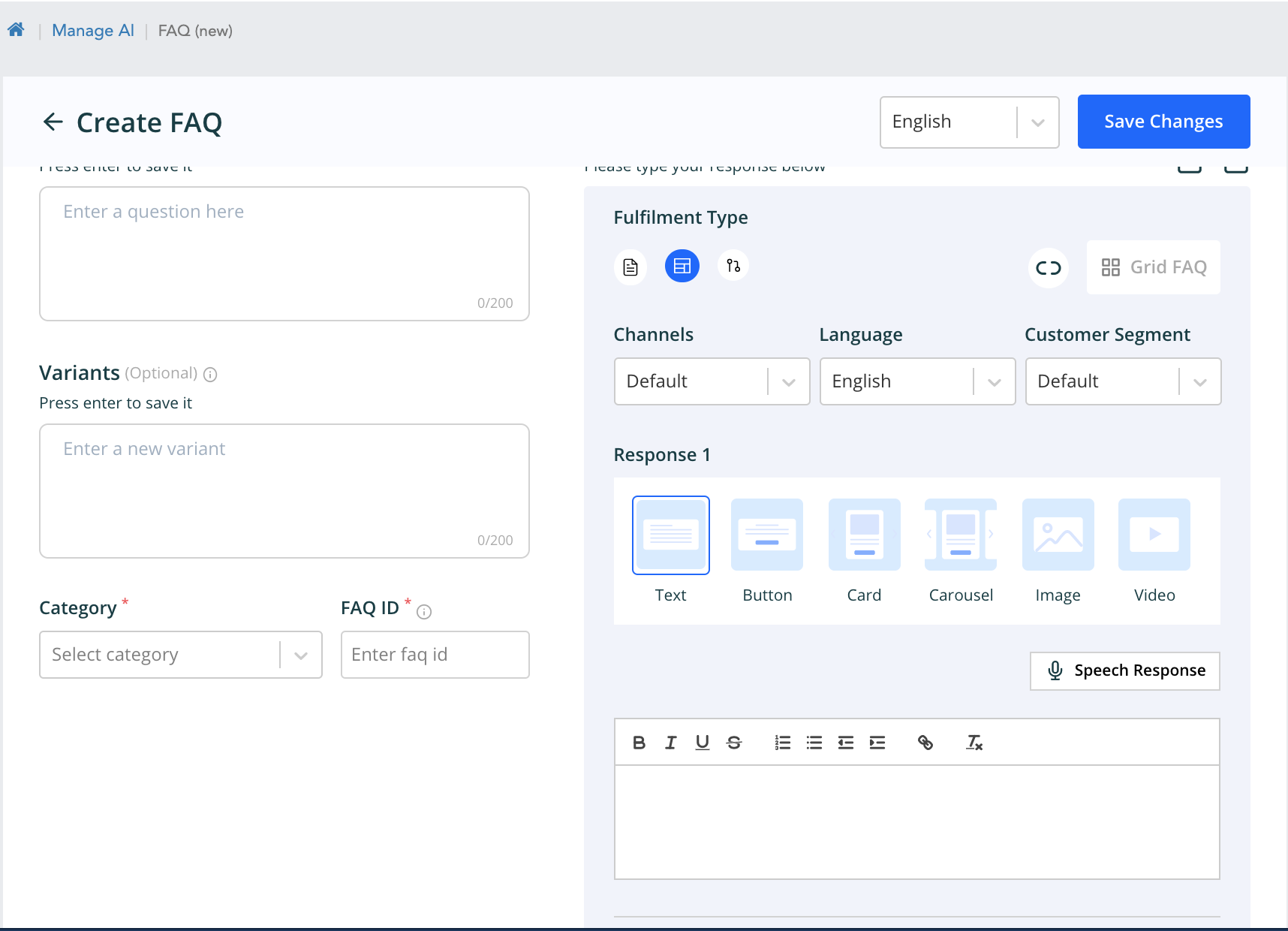

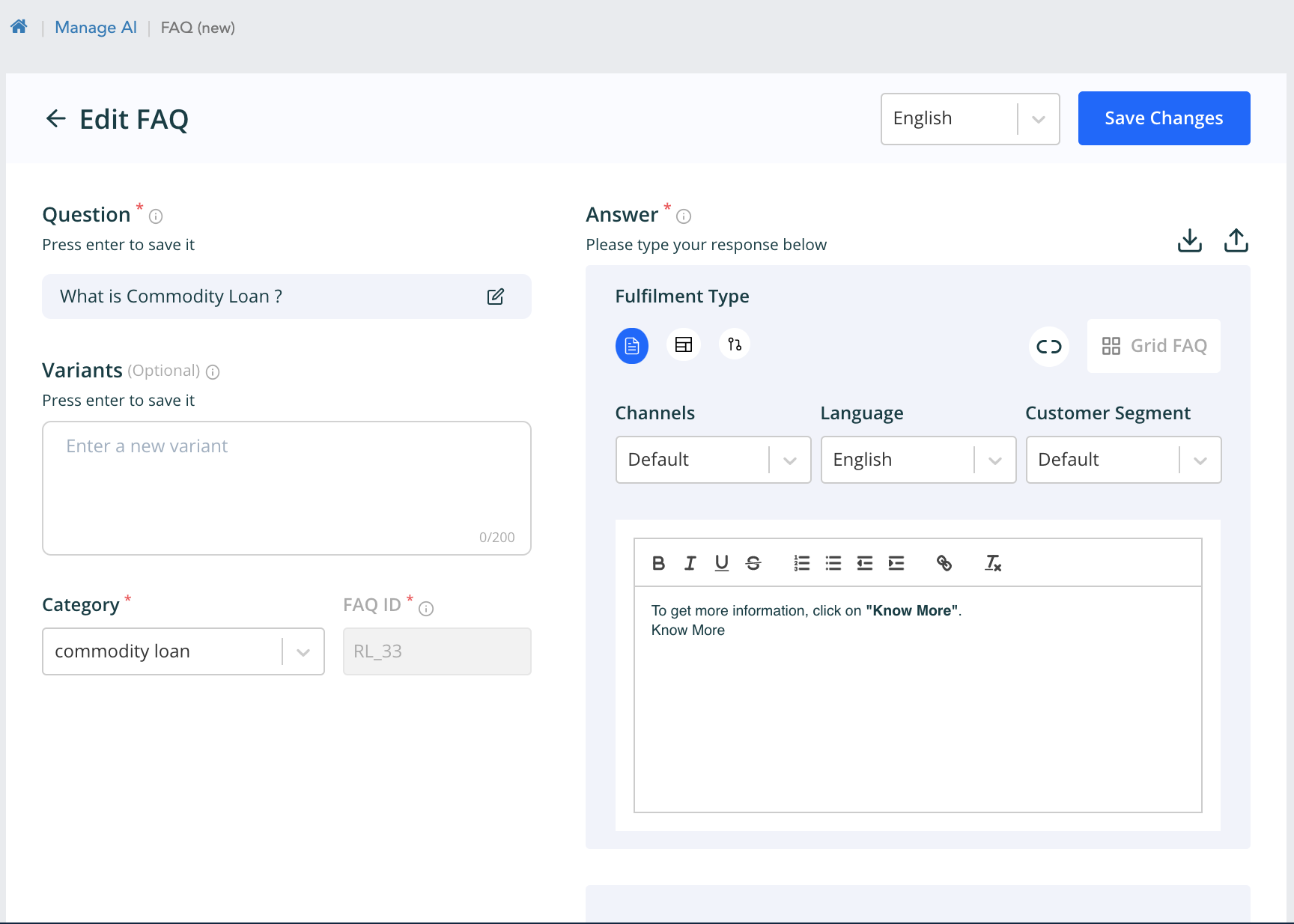

Create faqs and response

Creation of faq UI is changed, here all the response, variant w.r.t language and response type is at one place. Multi-response and multi-channel can be configured from one place only.

Click on add button at the top to create new faq.

From the above page we can configure response of various types by selecting the Fulfilment type. The order is text, template and workflow response. The customer segment response can also be configured from here. The faqs can also be added with existing workflows.

The multi response can also be added by clicking on Add following response which will open same editor as shown to add more responses.

Similarly the edit faq is changed similar to above one.

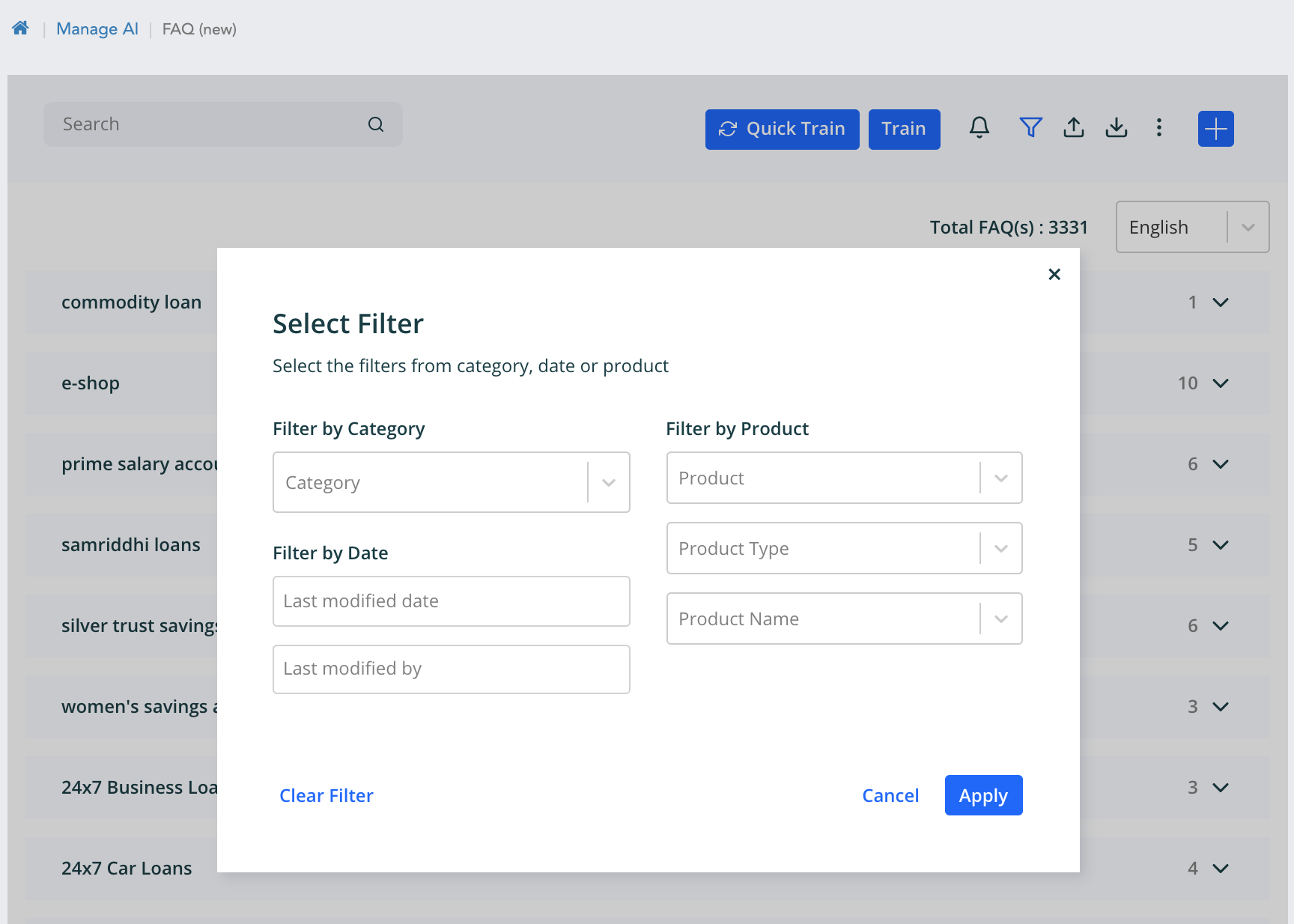

Filter page

Here the category, date and modified by can be applied together as a criteria to filter. When ontology os enabled then product, product name and product type filter option comes up. This is independent of category and date.

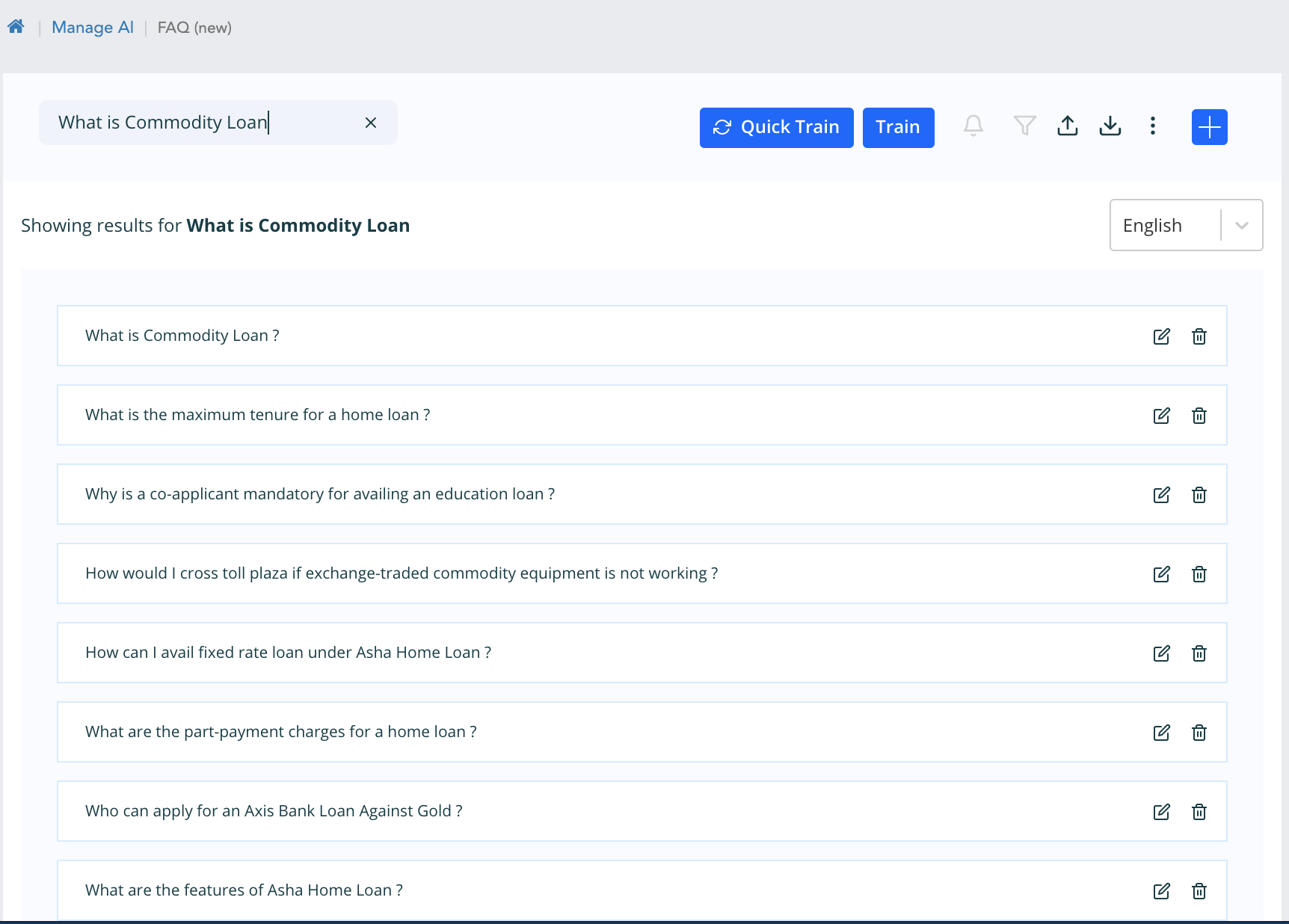

Search

When we search any question, variant, answer or faq id, if the elastic search index is created for the workspace then the response is shown from the elastic search. It is like a suggestion for whatever text is given.

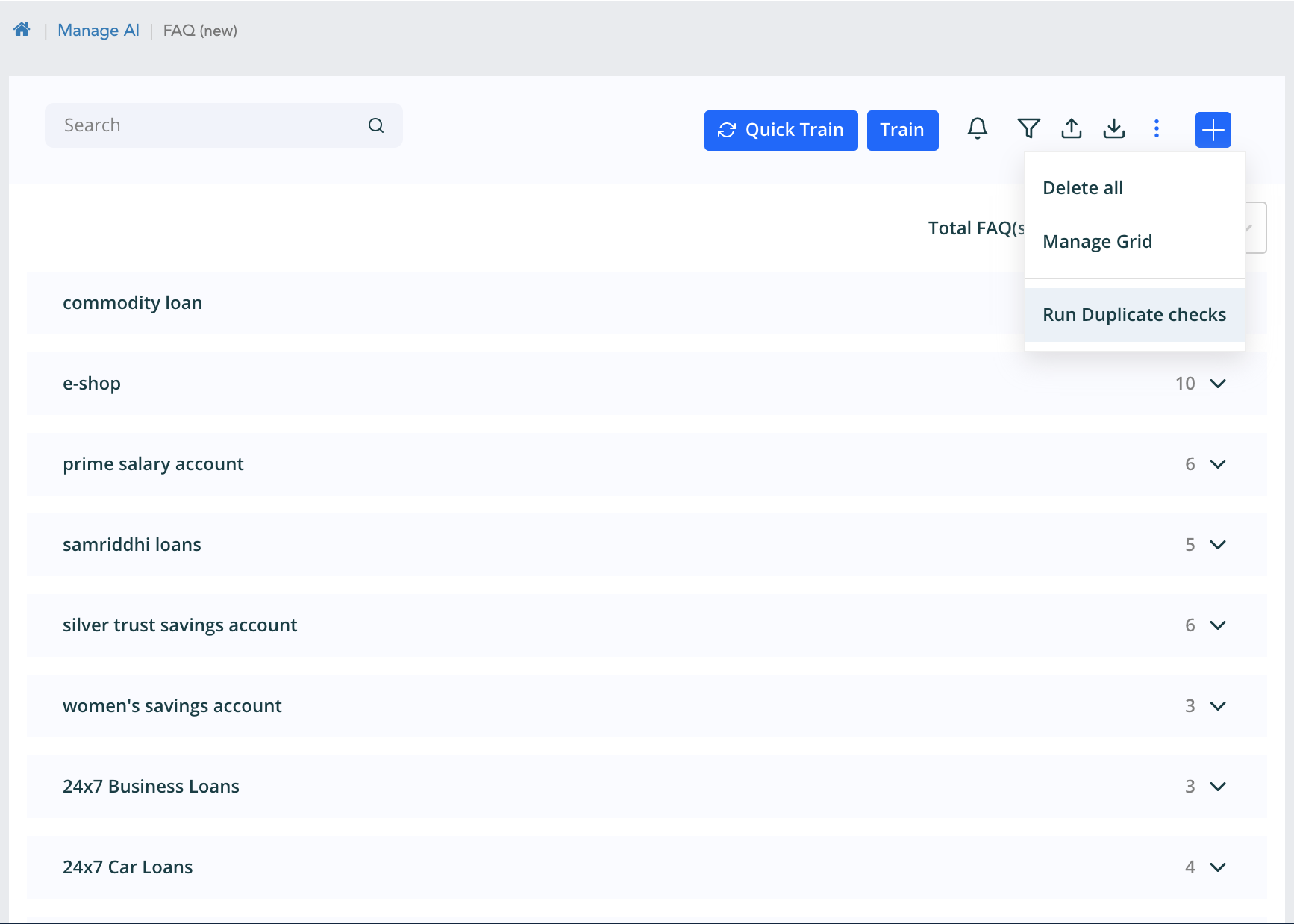

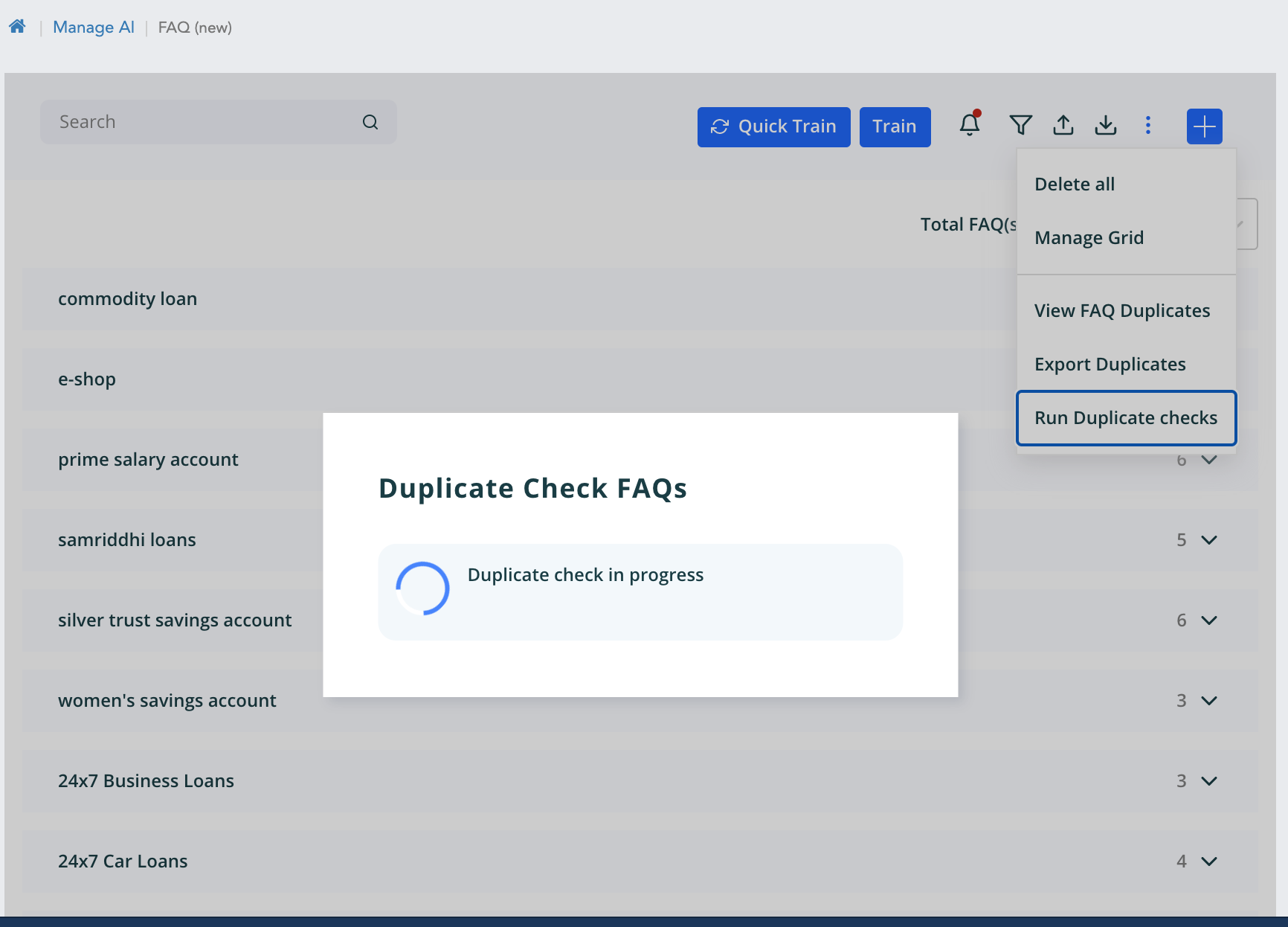

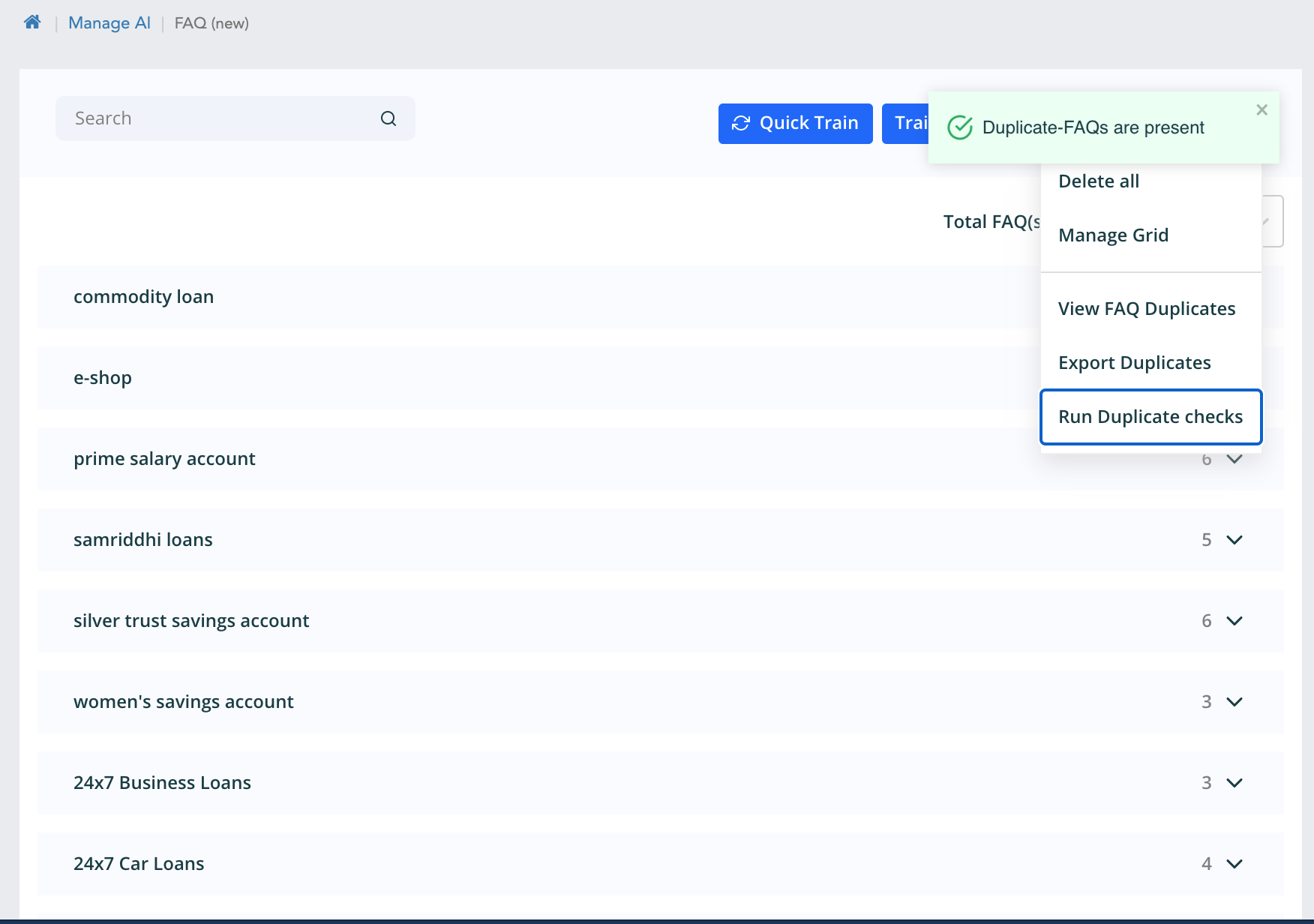

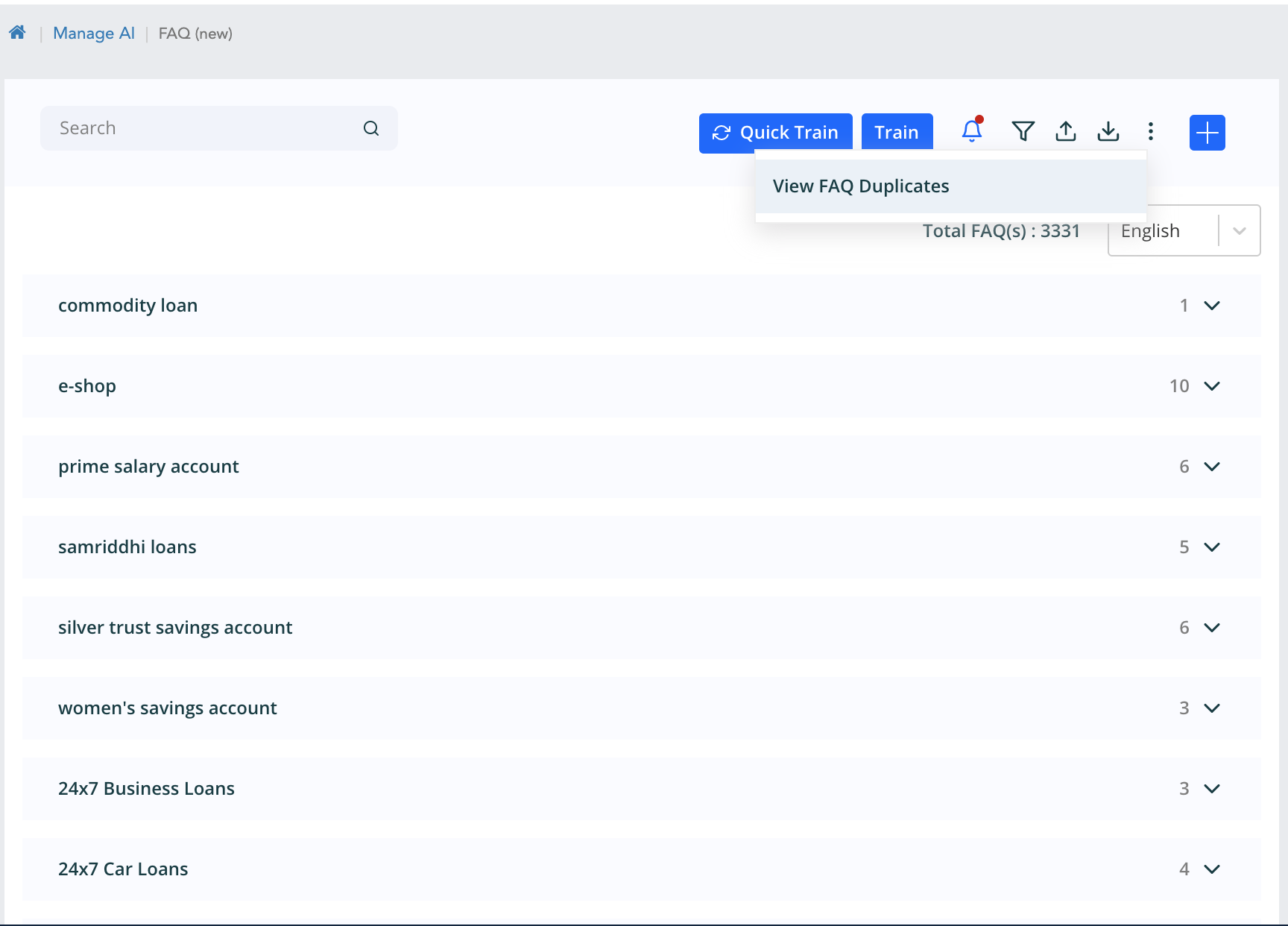

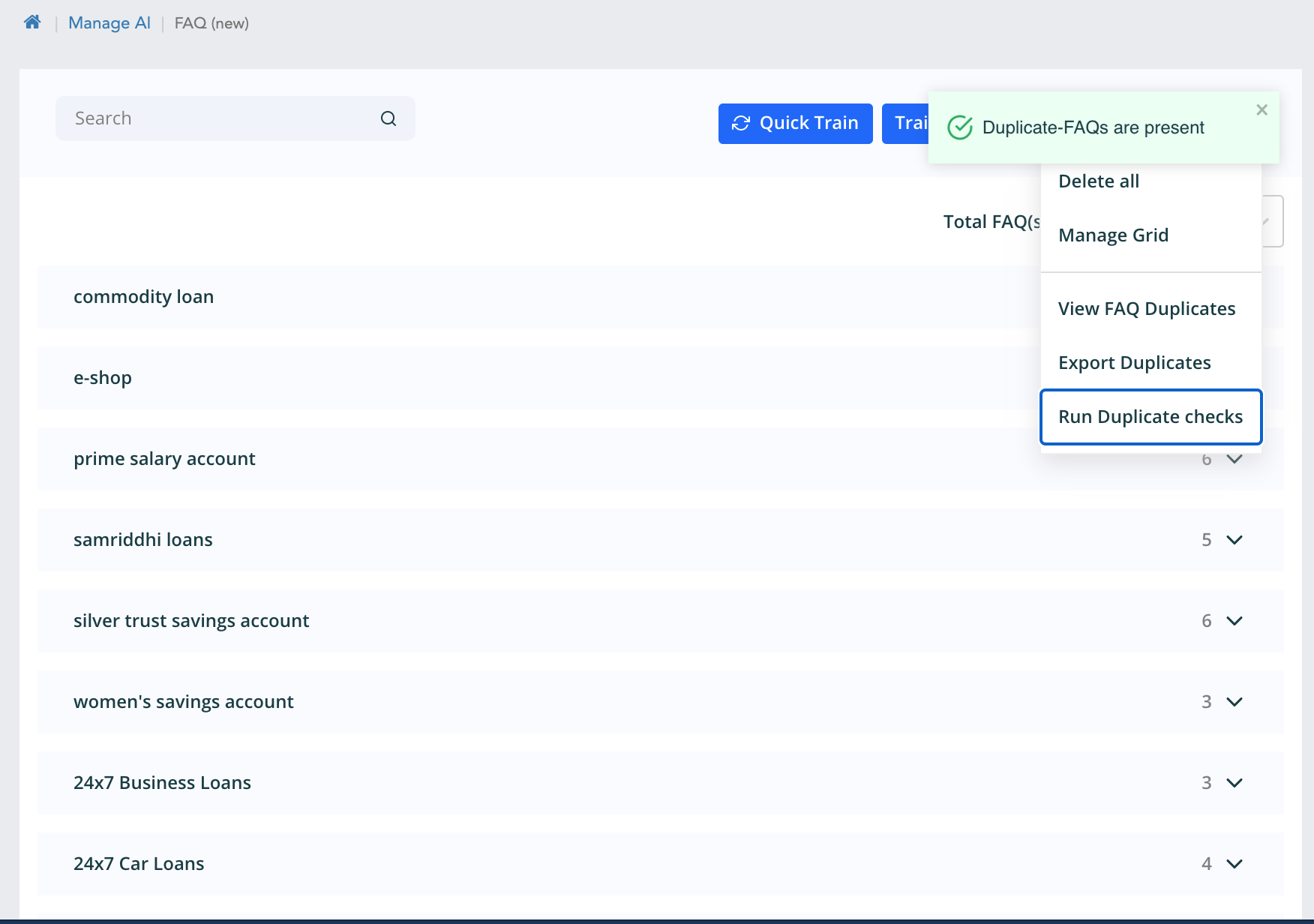

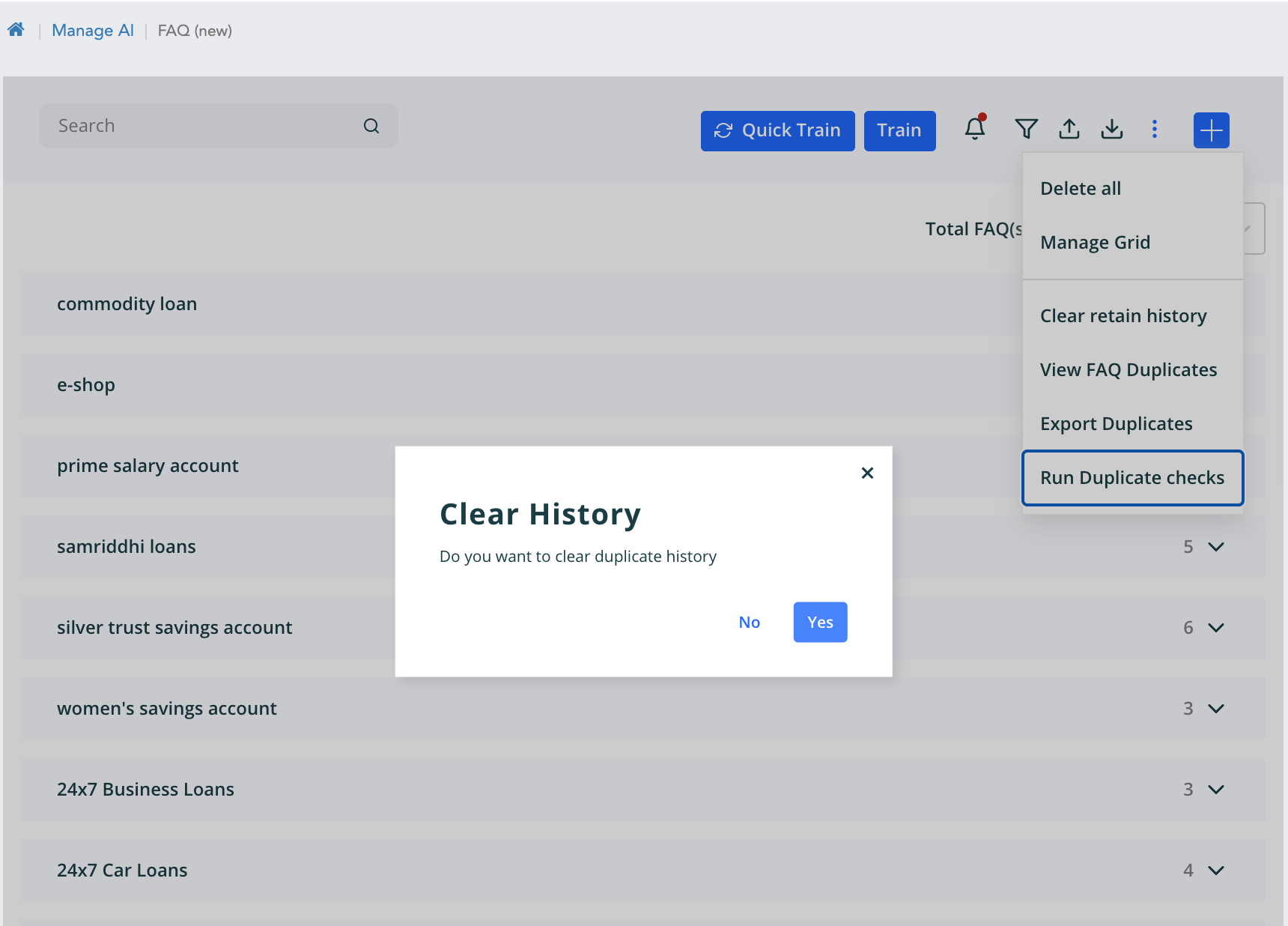

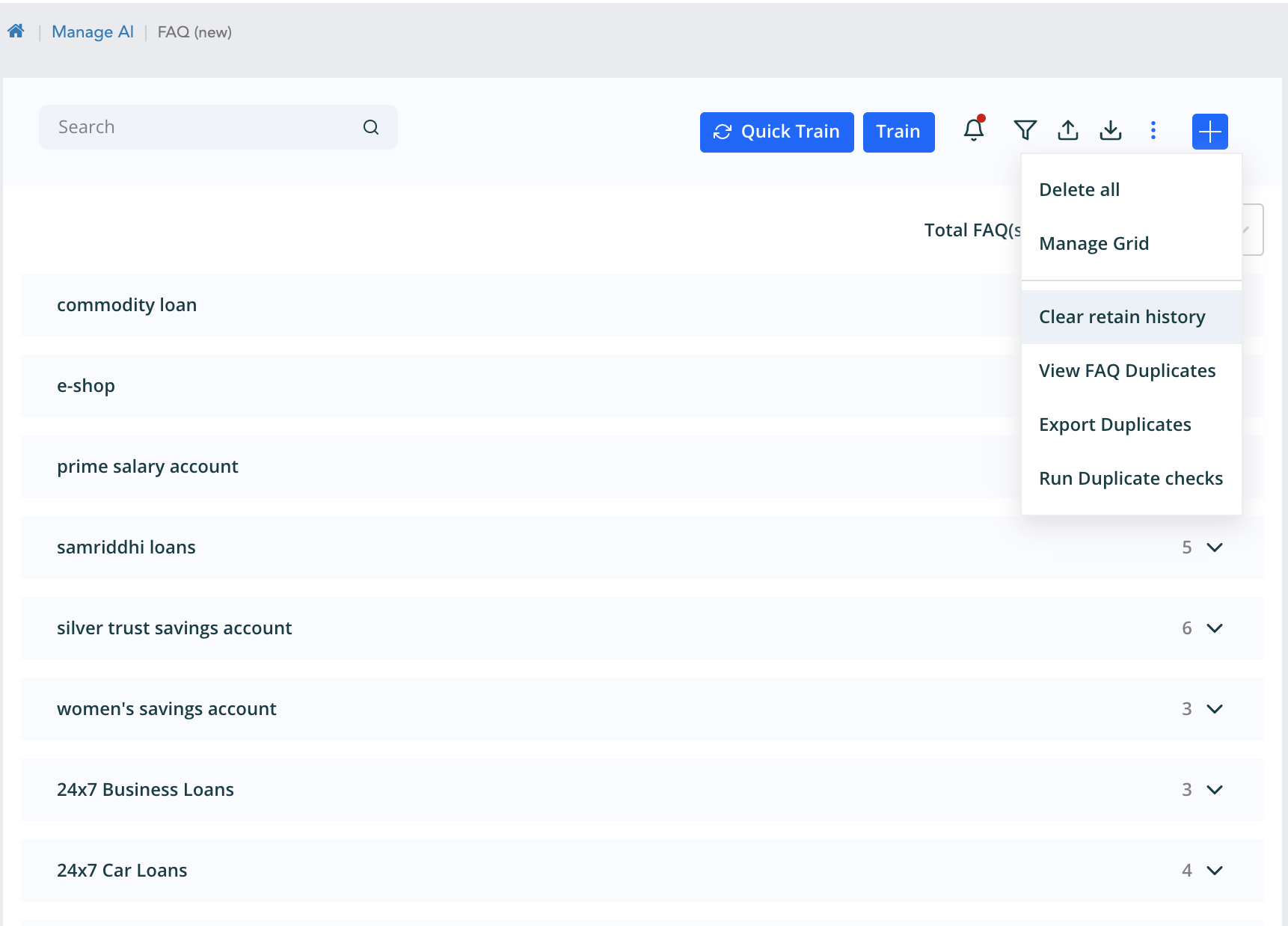

The manage grid option shown will navigate to the Manage knowledge grid. The run duplicate check triggers the duplicate check on the faqs, this is detailed later in this module.

DEDUP feature

Description

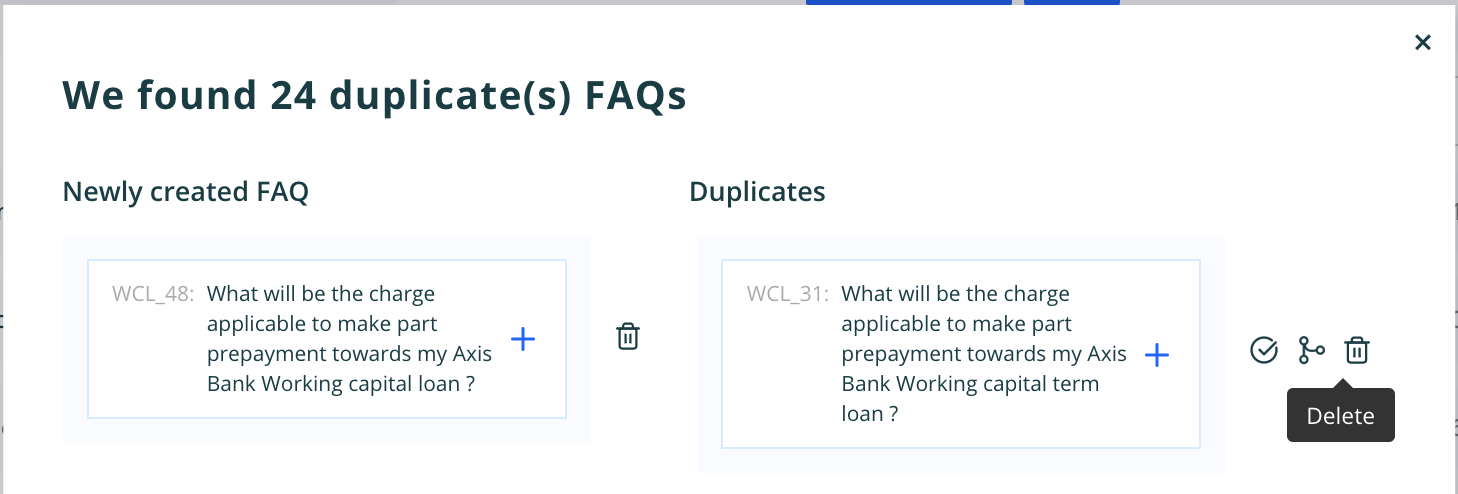

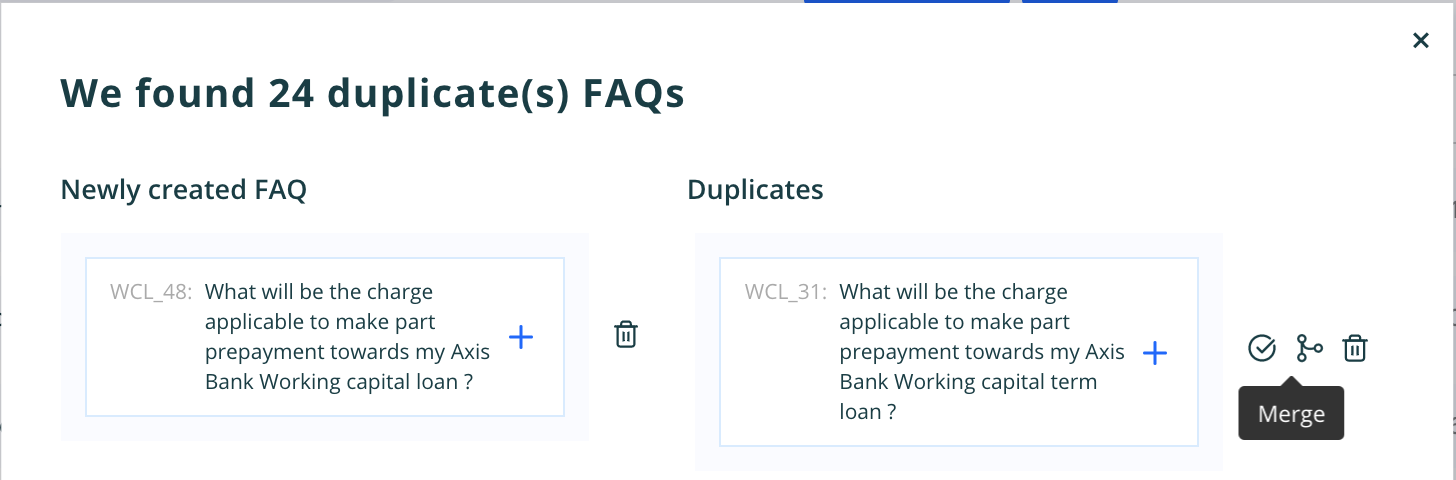

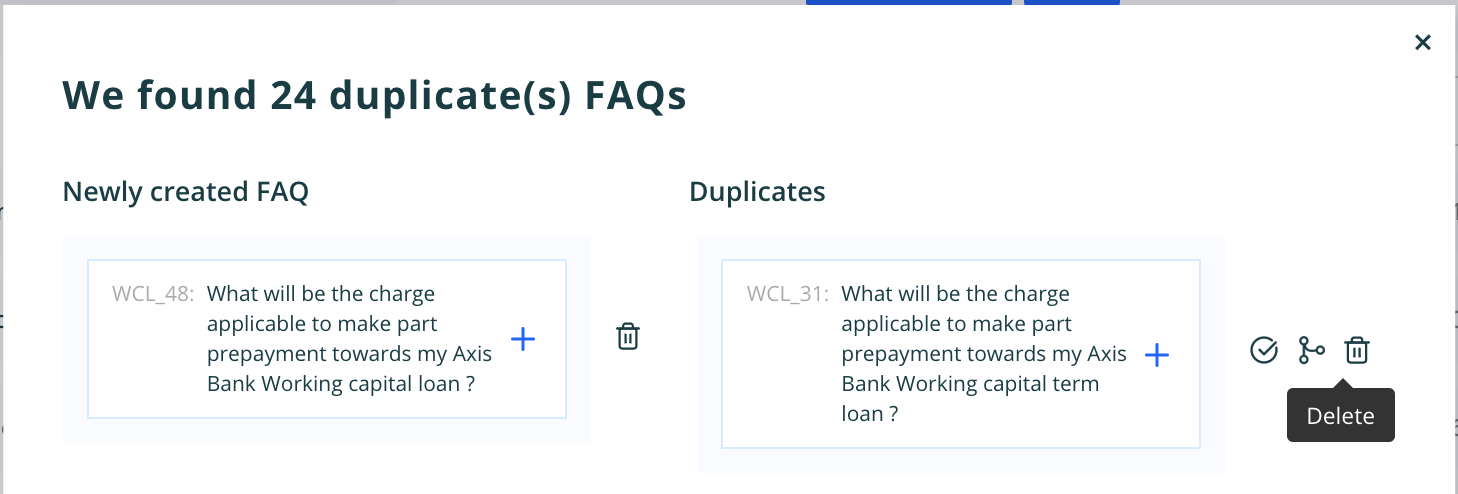

Dedup features helps to identify the duplicate faqs present in the data. This feature has the dependency of the sniper dedup api and the elastic search url. It exports all the data from the Elastic search index for the workspace. When we import or add new faqs then on “Run Duplicate Check”. It has the metic to find the duplicates that is Similarity and Variant/Non-Variant. Similarity is the percentage how much one question is similar to the existing one. Variant/Non-variant is classified as main question or variants.

Feature is explained in more detail in below steps :

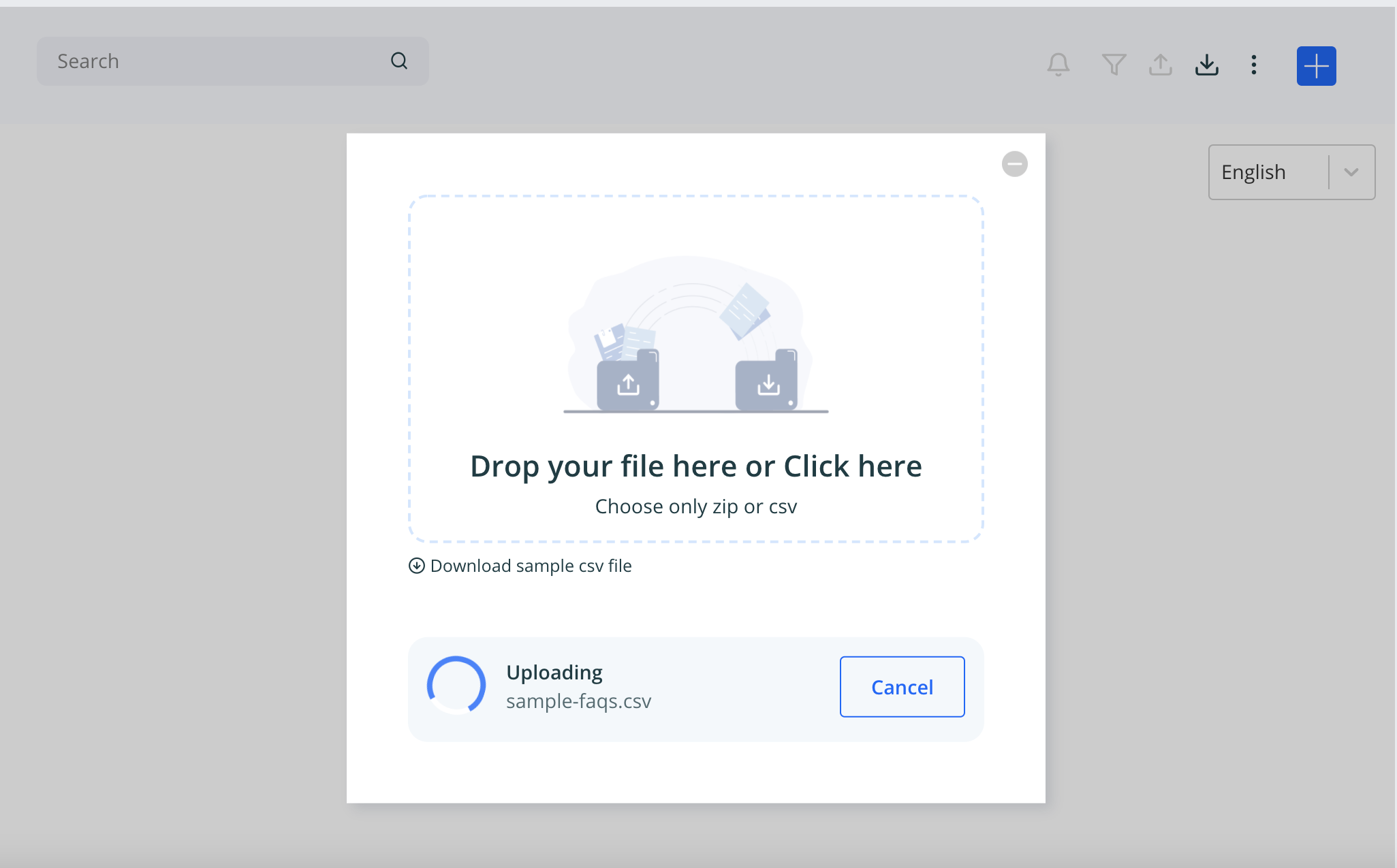

- Import/add faq on Faq screen

- Once import done click on Run Duplicate Check

- If duplicates are present for the data then we get the below shown message

- To view duplicates click on View Duplicates inside three dots above or click on notification bell which is highlighted because the duplicates are present.

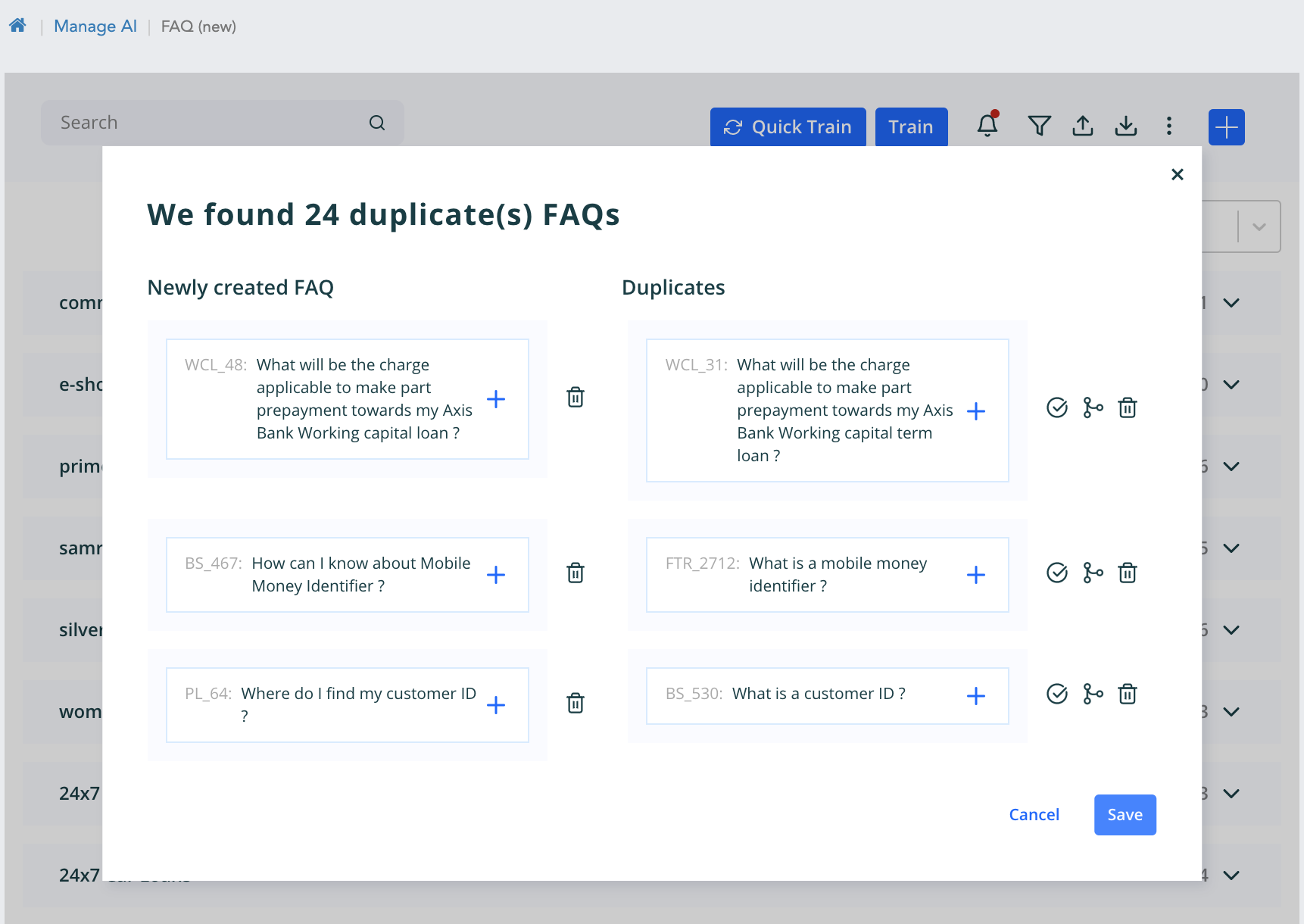

- Once we click on the view duplicates then the popup will appear with list of all the duplicates combination from the data.

On the right hand side is the question and on left is the respective duplicate. There are three user actions - Delete question - It will delete the question and retain the duplicate as the main question. Retain Duplicate - it retain the duplicate as the main question in the database and elastic search Merge Duplicate - It will merge the duplicate as the variance to the question on the left. Delete Duplicate - This will delete the duplicate question which is present in the database as an independent question

Note - All the above four actions will make changes in the database and the elastic search index for the respective workspace.

- Once we Retain the duplicate it will be store in the history. Firstly, On second check it will be asked to remove the history and proceed or proceed without clearing history. In the check the retained question will not be sent for dedup check if history is not cleared. Secondly, we have an option to clear the retained history. Note - All the above four actions will make changes in the database and the elastic search index for the respective workspace.

Note -

- When we delete all faqs, the duplicates and the history is also cleaned

- on import of data the previous history and the duplicates all are removed.

- before import, it is mandatory to update the Elastic search URL, otherwise the feature will not work.

- On dedup check if the messages are as follows:

- ES index not created yet - means the index is not created on the import or creation is in progress.

- At 20 faqs must be present for dedup check - this is the constraint on the feature to have minimum 20 faqs for the check.

- DeDup Rules not configured - It checks in the bot rules whether the sniper dedup api and similarity index rules are configured. We can customise it from admin as well. Go to Configure workspace -> manage workspace rules -> General tab -> configuration. Two rules are Similarity index for faqs and Duplication check Url.

Formatting Responses

The response will be rendered on the bot normally in a textual format. You can add a text response in your FAQs by entering the responses in the bot Response section. If you are not selecting any template so by default is Text.

Templates

Template editor supports formatting the FAQ response from the bot to render good look and feel to your bot responses so that the conversations will be more interactive & user-friendly.

You can format the responses as Text, Card, Image, Carousel, List, Button, Video & Custom by following these steps:

- Select your workspace and Click on Manage AI -> Click Setup FAQs -> Select Add FAQ.

- Enter FAQ ID, FAQ Question, select Response Type as Text

- Enter the response

- Click Add.

Following are the types of template response for FAQs

- Text Template

- Card Template

- Carousel Template

- Button Template

- List Template

- Image Template

- Video Template

- Custom Template

Workflow

Workflow helps to define step by step conversation journeys. The intents and entities derivation is mandatory to identify the correct response to user, but all the needed information may not be available all the time, during these cases workflow can be configured to prompt the user for more input that is needed to respond correctly.

Ex: If a user asks, How to apply for a debit card?, the defined workflow can ask for the various card selection like Rupay Card, Master Card, Visa Card, etc.

In a workflow, each entity is handled by a node. A node will have atleast a prompt and a connection. A prompt is to ask for user input and connection to link to another node. In a typical workflow that handles n entities, there would be n+2 nodes (One node per entity with a start and a cancel node).

In the workflow, we expect user inputs in a sequence, but by design, a workflow can handle any entities in any order or all in a single statement. Out of the box, workflow supports out of context scenarios.

You can configure a workflow by following these steps:

- Select your workspace and Click on Manage AI -> Click Setup FAQs -> Select Add FAQ.

- Enter FAQ ID, FAQ Question, select Response Type as Workflow

- Configure the desired workflow

- Click Save on the template editor

- Click Add on the FAQ popup screen.

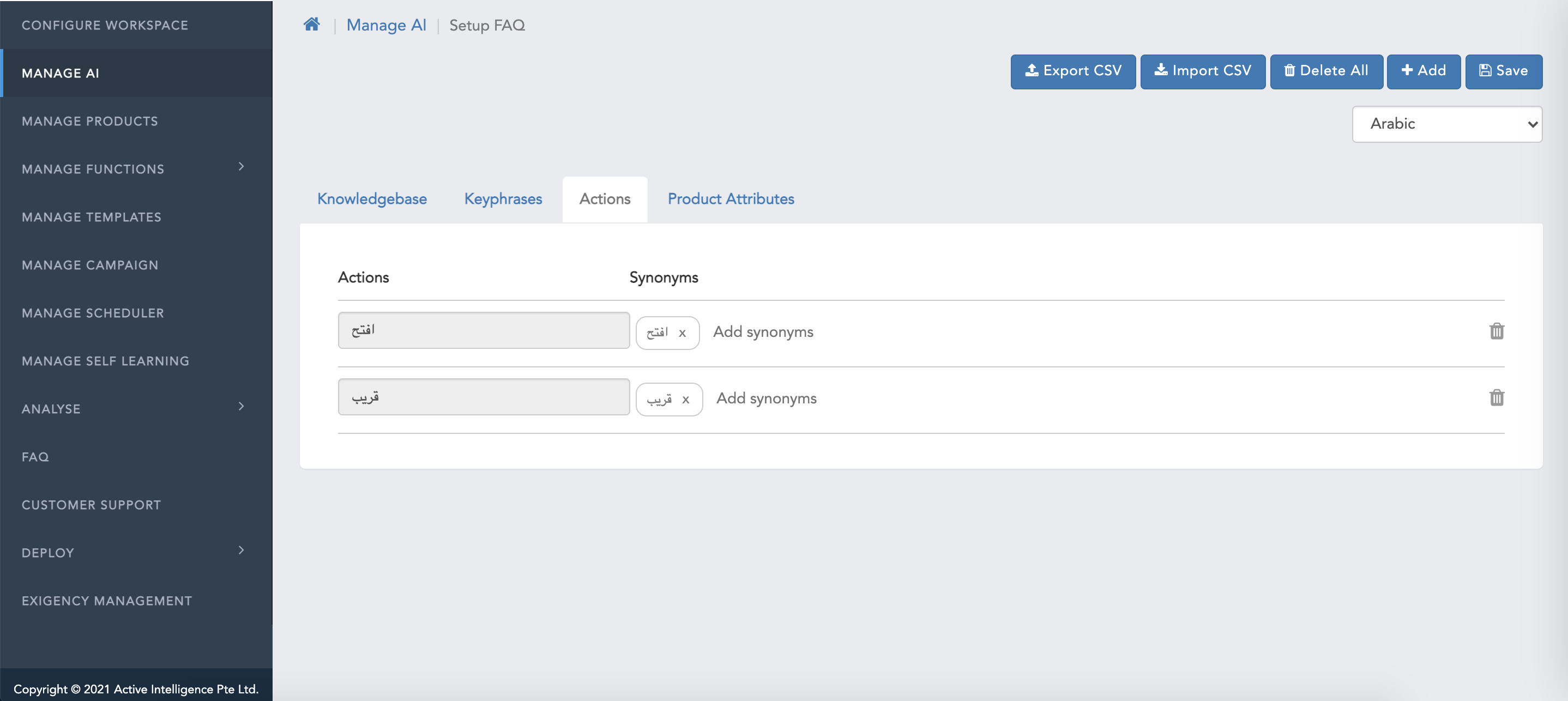

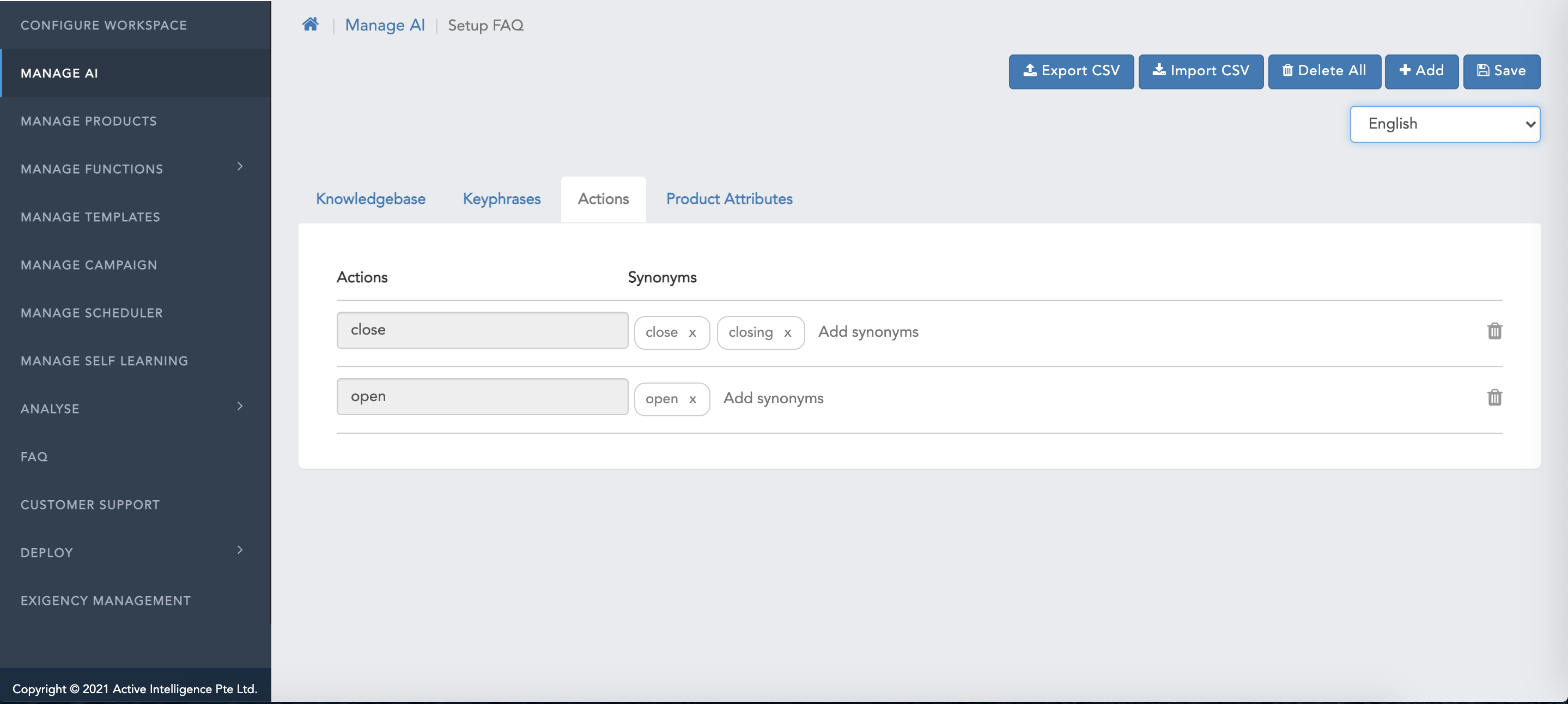

Keyphrases

A Keyphrase is a word/phrase that must be present in the user's query to consider and predict a question as potential candidate for deriving the desired response. Example “How to pay by credit card” can have a “credit card” as the key phrase.

Since users may type the key phrase in different ways you may also want to declare “credit crd”, “CC” as variants to these Keyphrases so they are all treated equally.

Keyphrases are used to narrow down the potential candidates against which the CognitiveQnA module runs matches. Quality Key phrases are important for accurate responses of the bot.

Kephrase Guidelines

- Post adding/modifying/deleting keyphrases, Training AI data is required

- Maximum three words are permitted under keyphrases where the single space(s) should be replaced _ (underscore)

- Root value of the keyphrase should not contain the single or multiple space rather should be replaced with _ (underscore)

Adding Keyphrases

You can either add the keyphrases manually by entering the key phrase & the respective synonyms or import a CSV file of keyphrases.

Adding Keyphrases Manually

To add keyphrases, one has to follow below steps:

- Select your workspace and Click on Manage AI -> Click Setup FAQ -> Click on 3 dots -> Click on -> Manage FAQ Metadata

- Screen with two headers namely "keyphrases" and "Synonyms" will be shown

- Under header "keyphrases" within the box with navigational text "Type Keyphrase name", populate the root value of the keyphrase

- Under header "Synonym" within the box with navigational text "Add synonym", populate the synonyms of the Original keyphrase root value

- Post populating the content, click on Save .

Keyphrases CSV Import File Structure

| Column Name | Description |

|---|---|

| Keyphrase Name | Rootword of the Keyphrase |

| Status | if it is added |

| Language | Language of the Keyphrase |

| Send To Train | Keyphrase is to send for Data training or not |

| Synonym1 | First Synonym of the Rootword |

| Synonym2 | Second Synonym of the Rootword |

| ... | ... |

| SynonymN | ... |

Importing Keyphrases

You can import the list of keyphrases by following these steps:

- Select your workspace and Click on Manage AI -> Click Setup FAQ -> Click on 3 dots -> Click on -> Manage FAQ Metadata

- Select Import, Select "Do you want to delete existing Keyphrases if any?"

- Click on Choose files

- Select the CSV file of keyphrases to import.

Note: The CSV file should have a column for Keyphrases & synonyms (You can add multiple synonyms)

Exporting Keyphrases

You can also export the keyphrases by following these steps:

- Select your workspace and Click on Manage AI -> Click Setup FAQ -> Click on 3 dots -> Click on -> Manage FAQ Metadata

- Select Export

- keyphrases CSV file will be downloaded.

It will download a CSV file containing keyphrase & synonyms columns

Deleting Keyphrases

You can also delete the keyphrases which you don't want by following these steps:

- Select your workspace and Click on Manage AI -> Click Setup FAQ -> Click on 3 dots -> Click on -> Manage FAQ Metadata

- Select the keyphrases to Delete

- Click on the delete

- Or can click on Delete All (Delete All will delete keyphrases added by the customer and all the keyphrases for the bot (preloaded keyphrases) also)

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Manage Knowledge Grid'

- Special Characters are not allowed (Except <, >, #, etc.)

- The contents of each cell should have a minimum of 2 and a maximum of 200 characters.

To get the dynamic values assigned to attribute messages at the time of fulfillment, the data must be added in the following ways:

When a single value to be placed in the attribute message it should be as

- Attributemessage - The Account Charges are

. - AttributeValue - Rs 200.

In the above scenario, the AtttributeValue entered as Rs 200 will be placed at

at runtime. We have to mention wherever the data has to be picked from the attribute value. - Attributemessage - The Account Charges are

When multiple values have to be placed in the attribute message then it must be as

- AttributMessage - The rate of interest for

on salary account is percent. - AttributeValue - 2 years#10.8

Here inside attribute value, the subsequent values have to be separated by #. So accordingly the attribute message will be formed as “The rate of interest for 2 years on salary account is 10.8 percent.

- AttributMessage - The rate of interest for

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Manage Knowledge Grid'

- Click on 'Add Data' ( '+' icon)

- Enter 'Select Product' (Eg; Super Card)

- Enter 'Product Type' (Eg; Savings)

- Enter 'Product Name' (Eg; Credit Card)

- Enter 'Attribute' (Eg; Annual Charges)

- Click on the Arrow mark to expand

- Enter 'Attribute message' (Eg; Annual Charges is

) - Enter 'Attribute Value' (Eg; Rs. 500)

- Click on the 'Save' icon

- Product

- Sub-Product

- Product-Type

- Product-Name

- Attribute-Heading

- Attribute-Message

- Attribute-Value

- Language

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Manage Knowledge Grid'

- Click on the import icon

- Select a CSV file (That contains all the columns specified above)

- The Grid Data will be imported and the data will be shown based on the product type

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Manage Knowledge Grid'

- Click on the export icon

- Select 'Export as .csv'

- A CSV file will be downloaded with the columns (Product, Sub-Product, Product-Type, Product-Name, Attribute-Heading, Attribute-Message, Attribute-Value, Language, etc.)

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Setup FAQs'

- Select any question

- Click on 'Grid FAQ'

- Select Product, SubProduct, Type, Product Name

- Select attribute(s)

- Click on 'Finish'

- Click on 'Save'

- You can arrange the order of attributes/messages by dragging them in any order to show on the bot

- You can also arrange the order of product, sub-product, type, product name by dragging them in an order

- To get the updated answer along with the grid, Training must be done before all the grid-related linking. Then after linking we have to quick train the faqs.

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Setup FAQs'

- Select any question

- Select 'Edit or Link Faq' (from the dropdown in the Edit FAQ popup right top corner)

- Edit Product, SubProduct, Type, Product Name

- Edit attribute(s)

- Click on 'Finish'

- Click on 'Save'

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Setup FAQs'

- Select a question for which you want to unlink the grid data

- Select 'Unlink Faq' (from the dropdown in the Edit FAQ popup right top corner)

- Click on 'Save'

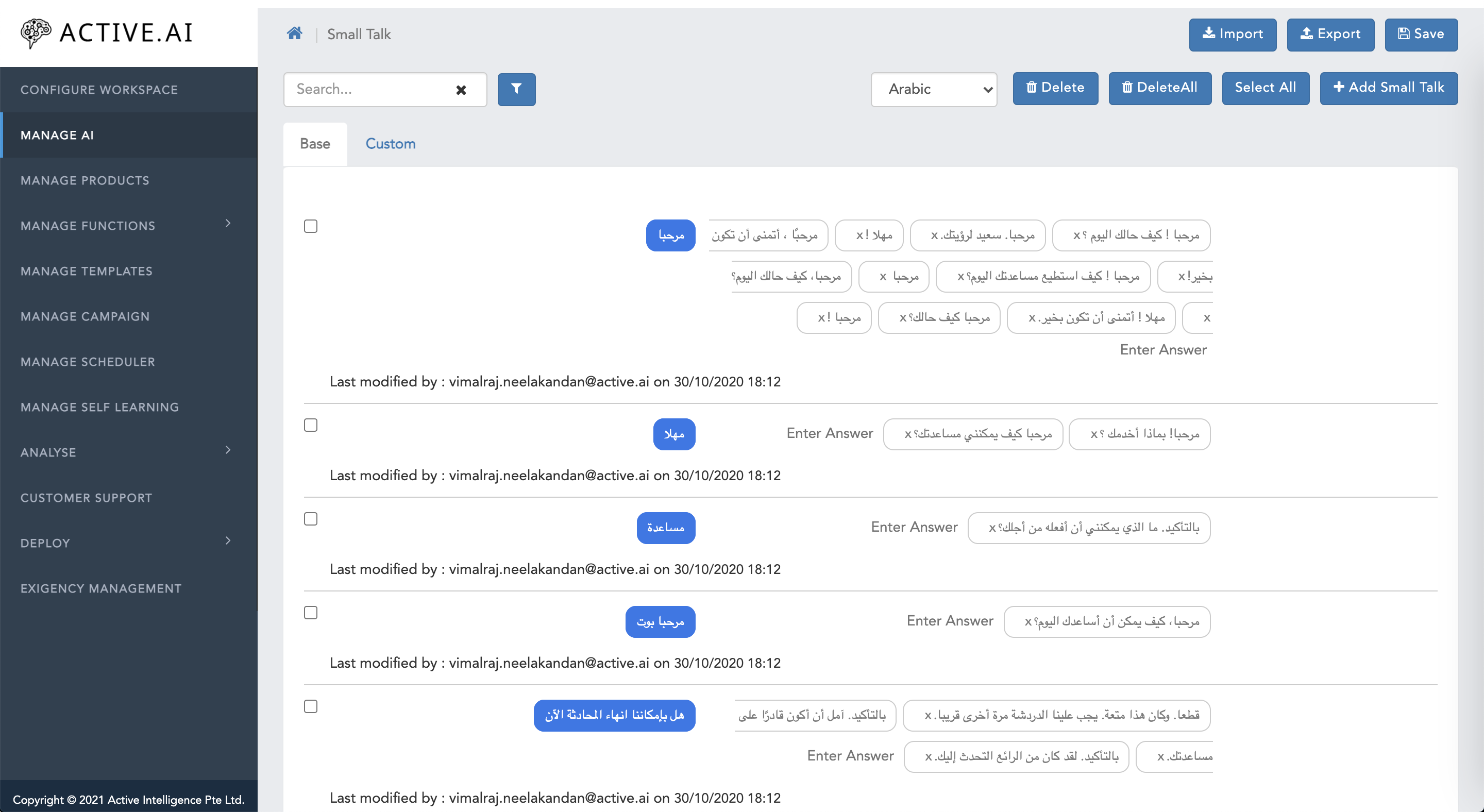

- FAQs: The Question and answers will be shown in the RTL layout for those languages as shown in the following image.

- Smalltalk: The Smalltalk will be shown in the RTL layout for those languages as shown in the following image.

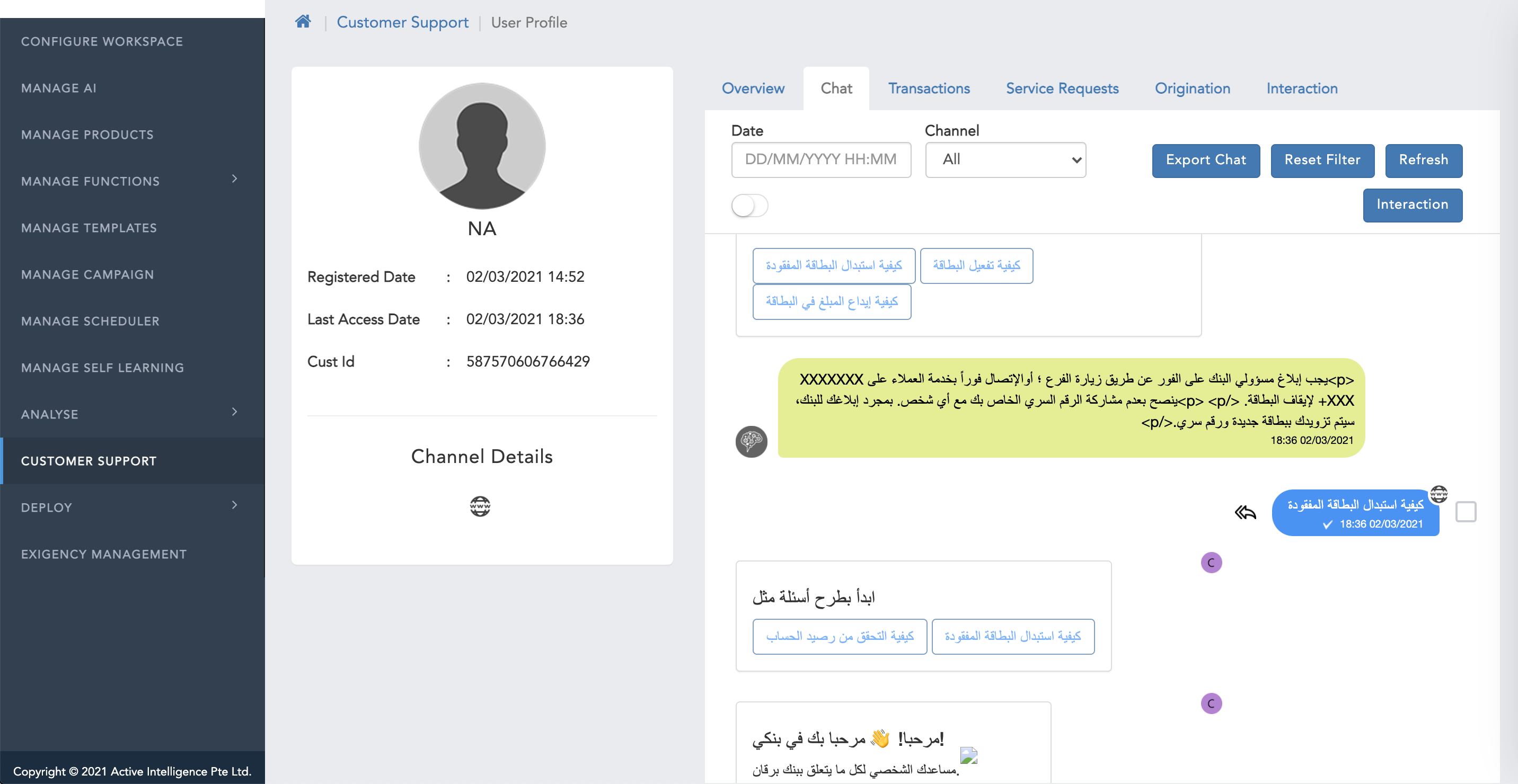

- Chat History: The chat history which customer had with the bot in those specific languages those chat history will be shown in RTL layout as shown in the following image.

- All the greetings, bot capability questions and bot introduction questions possibilly be asked by the users should be though of

- Customer specific Small Talks should be provided by the business user along with the appropriate neutral answers

- Small Talk works based on the lookup, thus all different variations to a query should be included and mapped with an appropriate answers

- Smilies can be used in the answer for which unicode mapping should be provided in the bot configuration.

- Select your workspace and Click on Manage AI -> Click Setup Small Talk

- Select the Language you are interested in

- Click on Add Small Talk

- Add Question/Query in 1st box (where navigational text is present as "Small Talk" in grey colour)

- Add Response in 2nd box (where navigational text is present as "Enter Response" in grey colour)

- To add Multiple response to a single Question/Query press enter after each response and add the +nth reponse

- Click on Save.

- Select your workspace and Click on Manage AI -> Click Setup Small Talk

- Click on Import

- Select CSV file adhering to the above File Structure of the Small Talk

- Click Yes on the popup (*Are you sure you want to overwrite?*)

- Click on Save.

- Post successful import of .csv file, a message Small Talk uploaded successfully will be shown

- Select your workspace and Click on Manage AI -> Click Setup Small Talk

- Click on Export

- Select your workspace and Click on Manage AI -> Click Setup Small Talk

- Select the Small Talk(s) which you want to Delete and click Delete

- Select Delete for (*Are you sure you want to delete the selected smalltalk?*)

- For clearing all Small Talks, please use Delete All

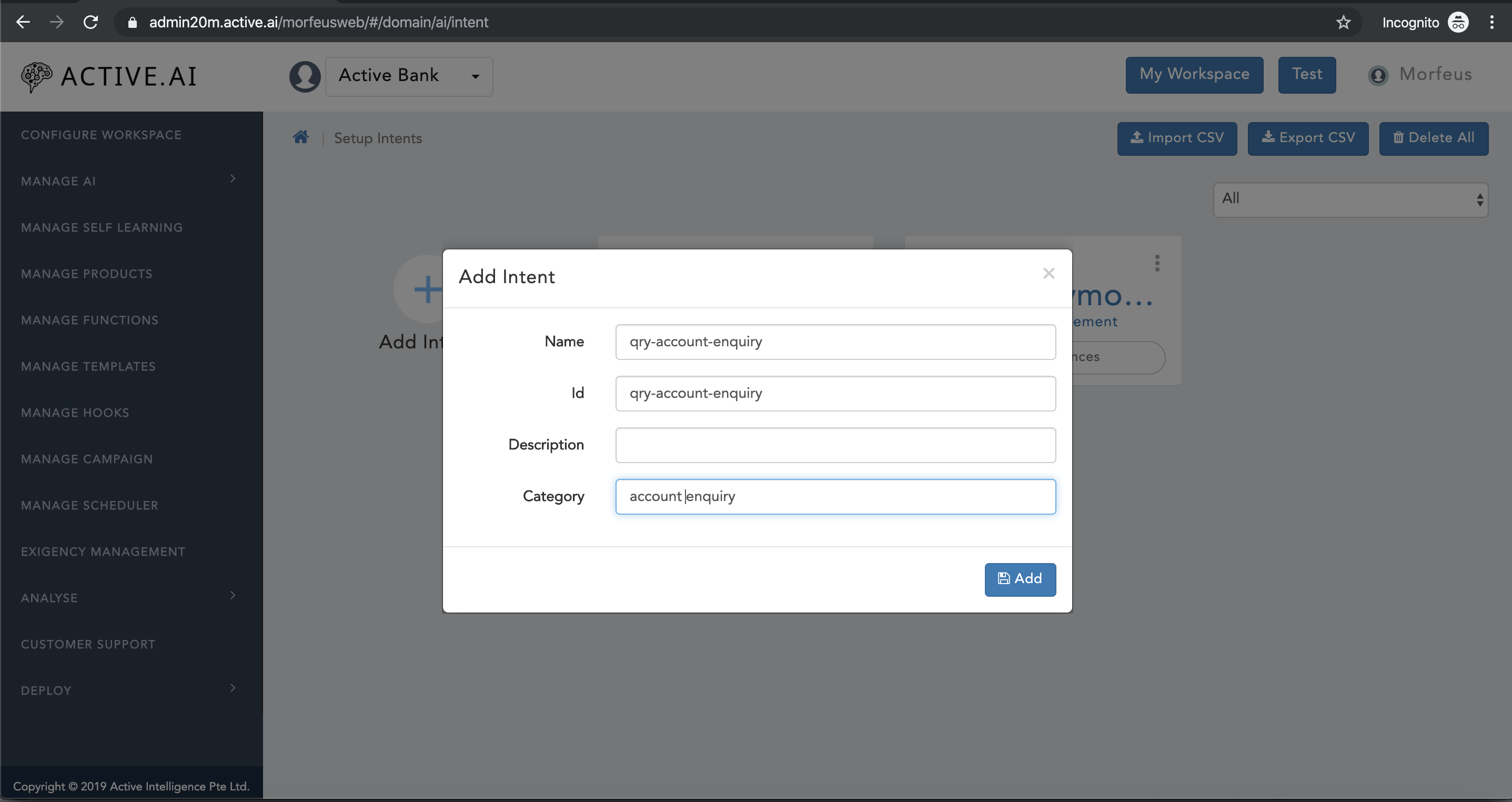

- Select your workspace and Click on Manage AI -> Click Setup Intents

- Enter the name for intent

- Click on Add Intent

- Good Intent naming practise: Start Intent name with "qry-" for enquiry intents / "txn-" for transaction intents

- Id - Name of the intent

- Description - Optional Description related to the intent.

- Category - Category of the intent is the brief two to three words about the intent.

- Click on Add. Now the Intent will be listed.

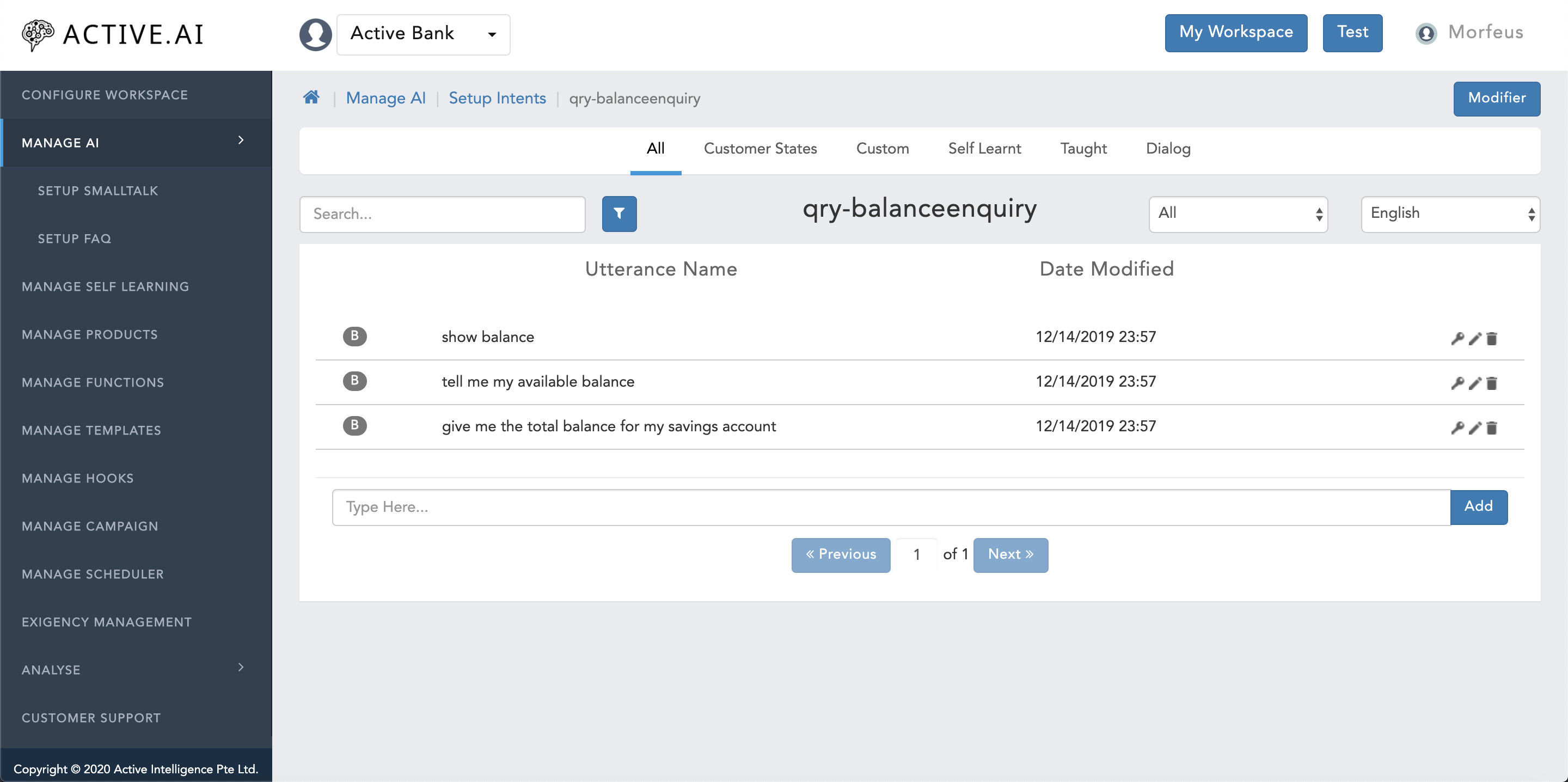

- Select the Language you are interested in

- Key in the utterance (Navigational text - Type Here...) and click on Add button on the right side of the box to add the utterance. (*Minimum 20 utterances should be added for a intent*)

- Select your workspace and Click on Manage AI -> Click Setup Intents

- Click Import CSV

- Click 'Yes' on the popup. (*Are you sure you want to overwrite?*)

- Select the CSV intents file to import

- Select your workspace and Click on Manage AI -> Click Setup Intents

- Click Export CSV

- Select your workspace and Click on Manage AI -> Click Setup Intents

- Click on menu icon of the particular intent

- Click on Delete intent, Click Delete on the popup. (*Are you sure you want to delete unsupported intent?*)

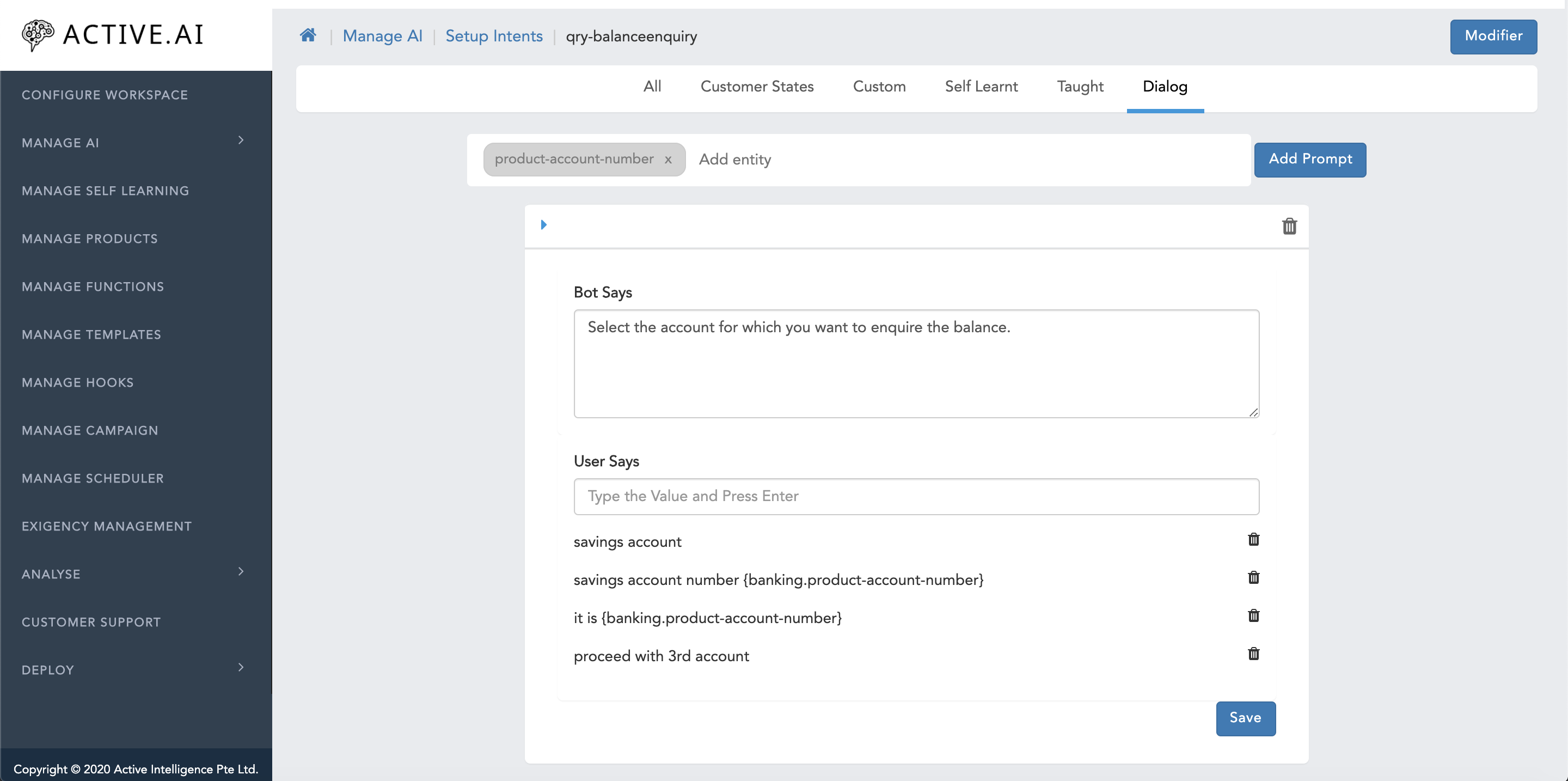

- Expected entity

- Bot says

- User says

- Select your workspace and Click on Manage AI -> Click Setup Intents

- Click on Setup Utterances of the desired intent -> Click Dialog tab -> click Add

- To prepare the dialog utterances, first key in the entity for which a bot questions the user (navigational text Add entity

- After registering the Entity and Bot Question, add sample user answers by click on Add Prompt button. (*can add multiple utterances that user might ask**)

- Click Save

- Select your workspace and Click on Manage AI -> Click Setup Intents

- Click on Setup Utterances of the desired intent -> Click Dialog tab

- Click on the delete icon for which you want to Delete

- Click 'Yes' on the popup. (*Are you sure you want to delete?*)

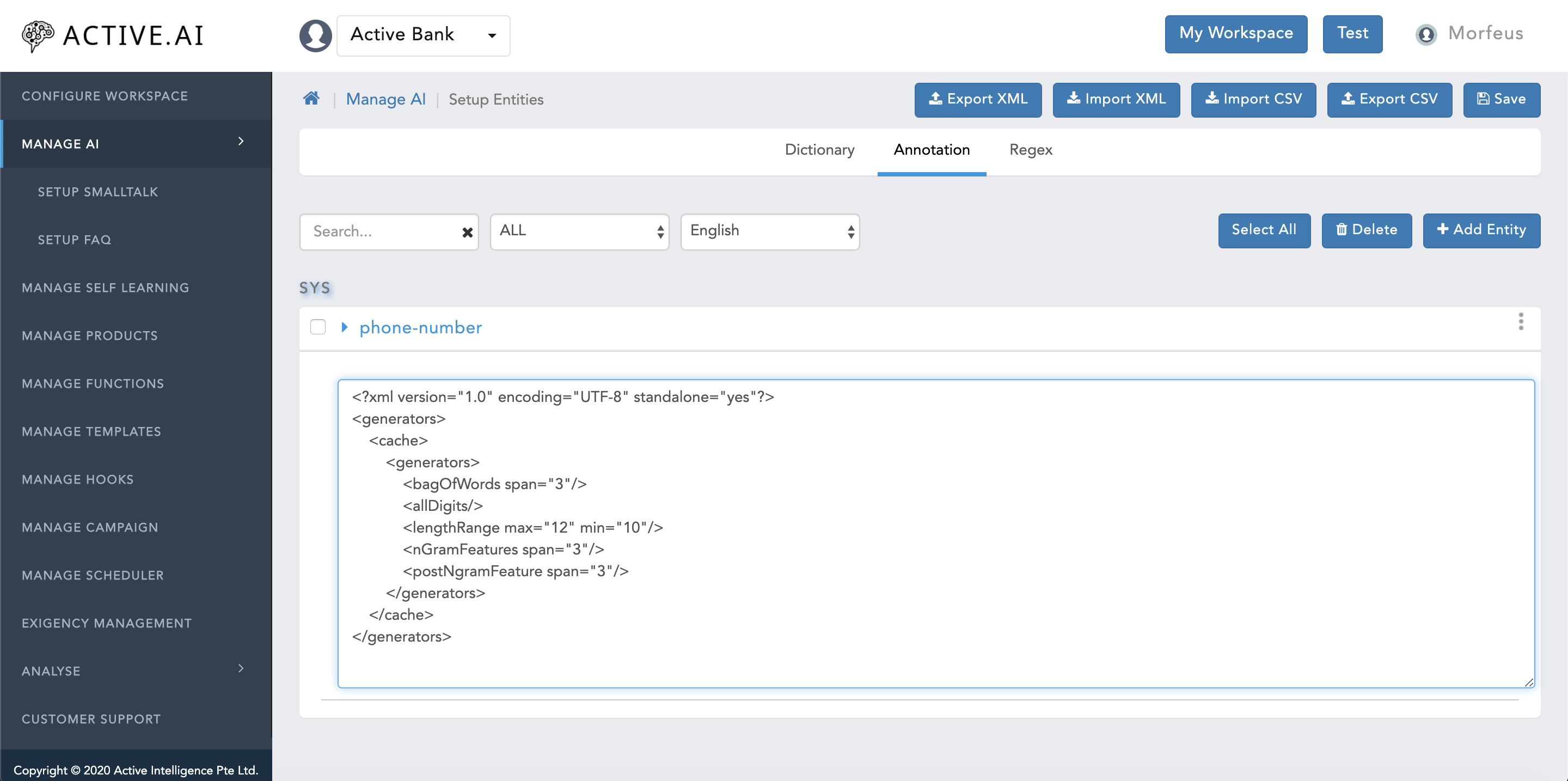

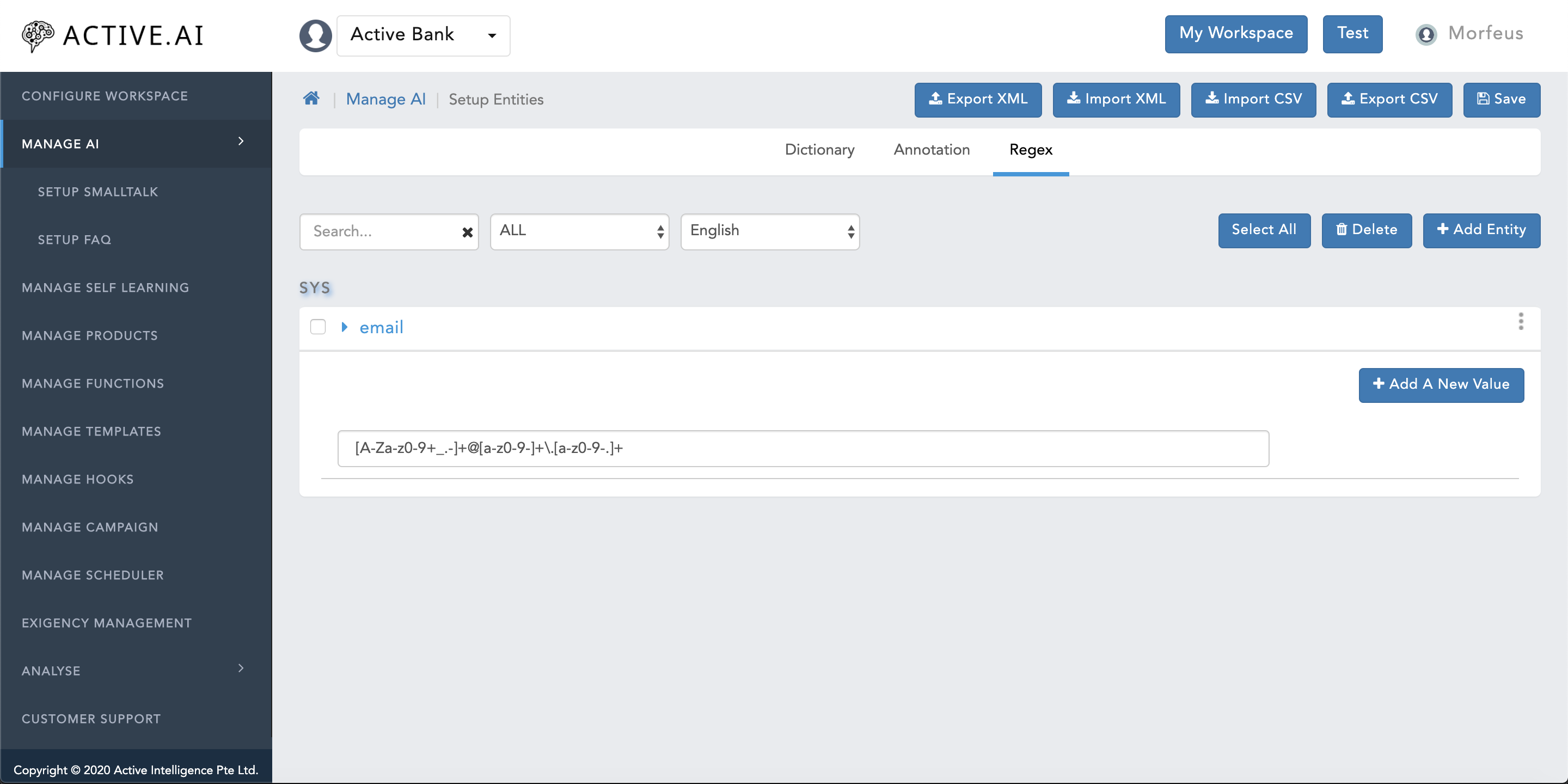

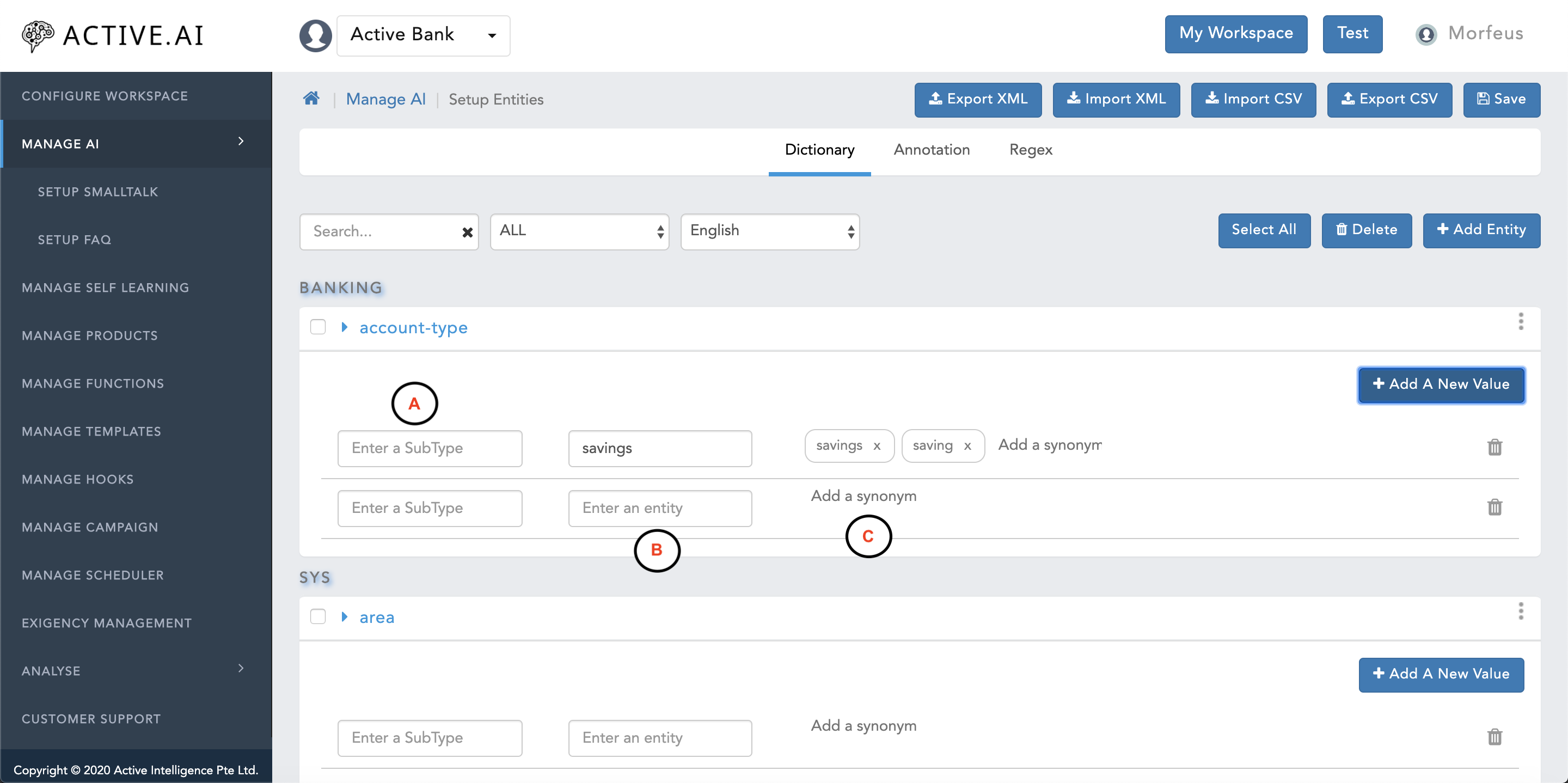

- Dictionary (All finite values)

- RegEx (Regular Expression Pattern)

- Train (Infinite Values)

- product_type in which card type do you list? - Visa, AMEX Master, UPOP, JCB or Rupay

- Account Type show current acc balance - Current, savings, salary are the finite account types.

- Mobile number in recharge my mobile number 8130927472 - 10 digit mobile number which is infinite in nature

- Amount in transfer 500 rupees - 1 to 8 digit amount which is infinite in nature

- Payee name transfer to jacob - payee name are regular expression which is infinite in nature.

- PrimaryClassifier data preperation - need to annotate the train entities within curly braces like; _"{<train entity name>}"_

- Dialog data preperation - need to annotate the train entities within curly braces like; _"{<train entity name>}"_

- While defining train entities, need to prepare a .samples file for AI data training

- All sample data will not be visible in the ui as the sample values are in thousands. To check the sample values one has to click on Export XML button.

- email id in send an e-statement to neo@active.ai - email if is universal which can easily be captures with a RegEx pattern

- Item Number within a transactional flow select 5th account - Item Number within the user's input can easily be extracted by using RegEx pattern.

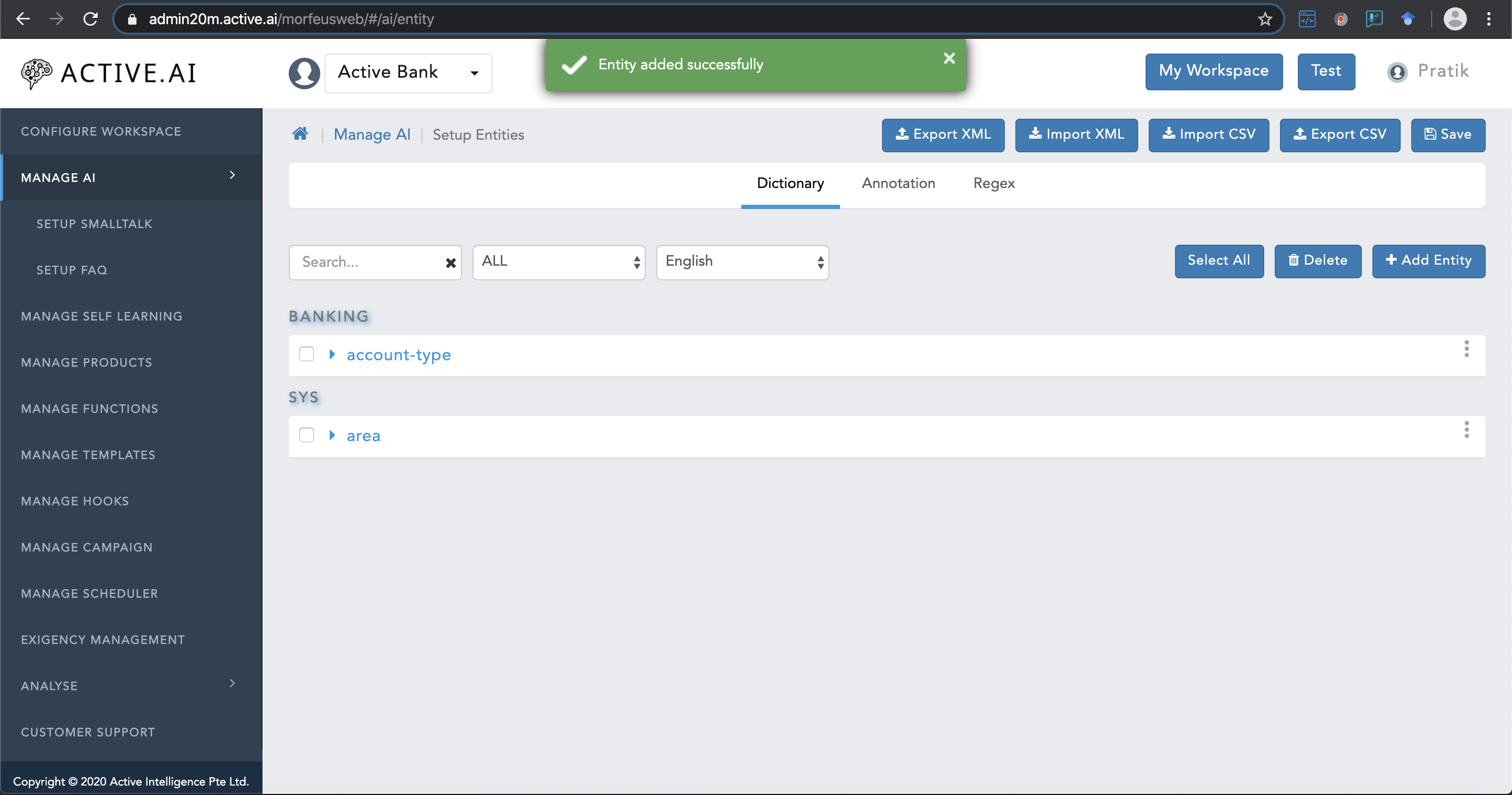

- Select your workspace and Click on Manage AI -> Click Setup Entities

- Click on Dictionary / Annotation / Regex to load the entities.

- Select your workspace and Click on Manage AI -> Click Setup Entities

- Select Dictionary / Annotation / Regex tab for defining the Entity

- Click on Add New Entity

- Name - Name of the entity

- Code - Code of the entity

- Description - Description of the entity

- Category - Respective category Ex: sys, banking

- Class - Class of the entity can be populated here. All entity may not require or have the class

- Ontology (Knowledge Graph) Type - Here by default ontology will be set as None. If Knowledge Graph enabled for the workspace and want to enable at the entity level then can configure here with one of the types Product, type, name, attribute or Action.

- Enter a SubType : SubType is used when we need to enter different types of attribute groups for the same entity.

- banking.product-name for credit card : SubType can be populated as Credit Card

- banking.product-name for debit card : SubType can be populated as Debit Card

- Enter an entity : The root value of an attribute to be stored here

- Add a synonym : Synonym(s) should be populated where one variation should be the root value itself and rest should be actual synonym(s)

- Click on Save button located on the right top corner.

- Select your workspace and Click on Manage AI -> Click Setup Entities

- Click on Import CSV, Select the CSV entities file.

- Select your workspace and Click on Manage AI -> Click Setup Entities

- Click on Import XML, Select the XML file of entities

- Select your workspace and Click on Manage AI -> Click Setup Entities

- Click on Export XML or Click on Export CSV

- Select your workspace and Click on Manage AI -> Click Setup Entities

- Select the entities to delete by type and Click Delete

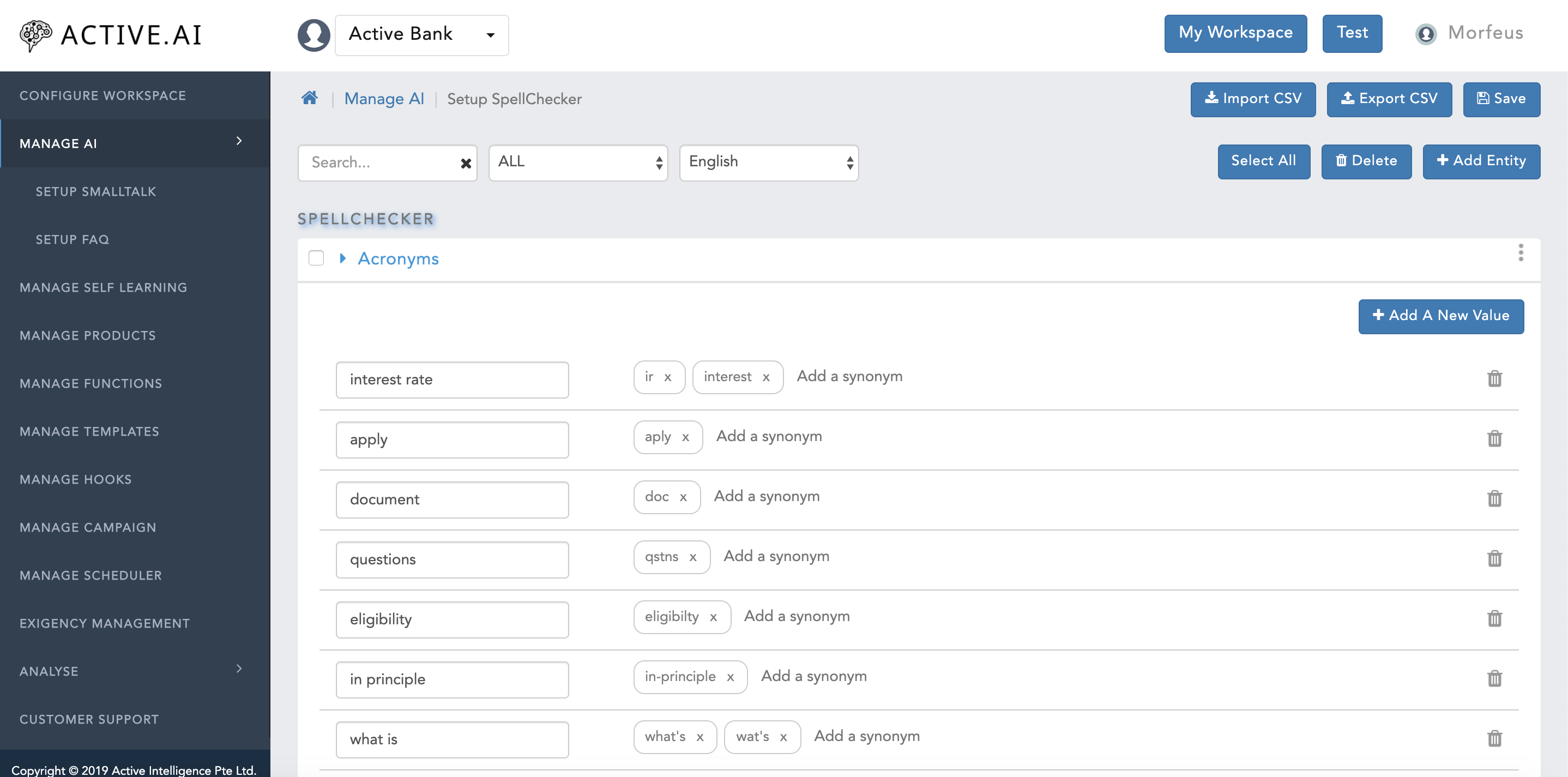

- Acronym to abbreviation = account=ac.,a/c

- Map the regular expression words with the same value to stop the auto correction = Amit=Amit

- Spell typo to correct word mapping = balance=balnc,blance

- Select your workspace and Click on Manage AI -> Click Setup SpellChecker

- Select the Language you are interested in

- Click on Add Entity

- The new empty box will prompt at the end of the Grid. Enter the Root Value (On Navigational text "enter an entity"

- Add Response in 2nd box (where navigational text is present as "Enter Response" in grey colour)

- Synonym(s) should be added in the second box (On Navigational text "Add a synonym"). Multiple entries can also be mapped to a single root value for which after each synonym one has to use the "ENTER" key and then add another synonyms

- Click Save.

- Select your workspace and Click on Manage AI -> Click Setup SpellChecker

- Click on Import CSV, CSV should comply the above mentioned File Structure

- Click 'Yes' on the popup (*Are you sure you want to overwrite?*)

- Select the CSV file of SpellChecker

- Click Save.

- Select your workspace and Click on Manage AI -> Click Setup SpellChecker

- Click on Export CSV

- Select your workspace and Click on Manage AI -> Click Setup SpellChecker

- Select SpellCheker which you want to Delete, click Delete

- Goto your workspace

- Click on 'Manage AI'

- Select 'Manage Rules'

- Click on 'Import'

- Select the file (JSON file)

- Click 'Yes' on popup. (Are you sure you want to overwrite?)

- Goto your workspace

- Click on 'Manage AI'

- Select 'Manage Rules'

- Click on 'Export'

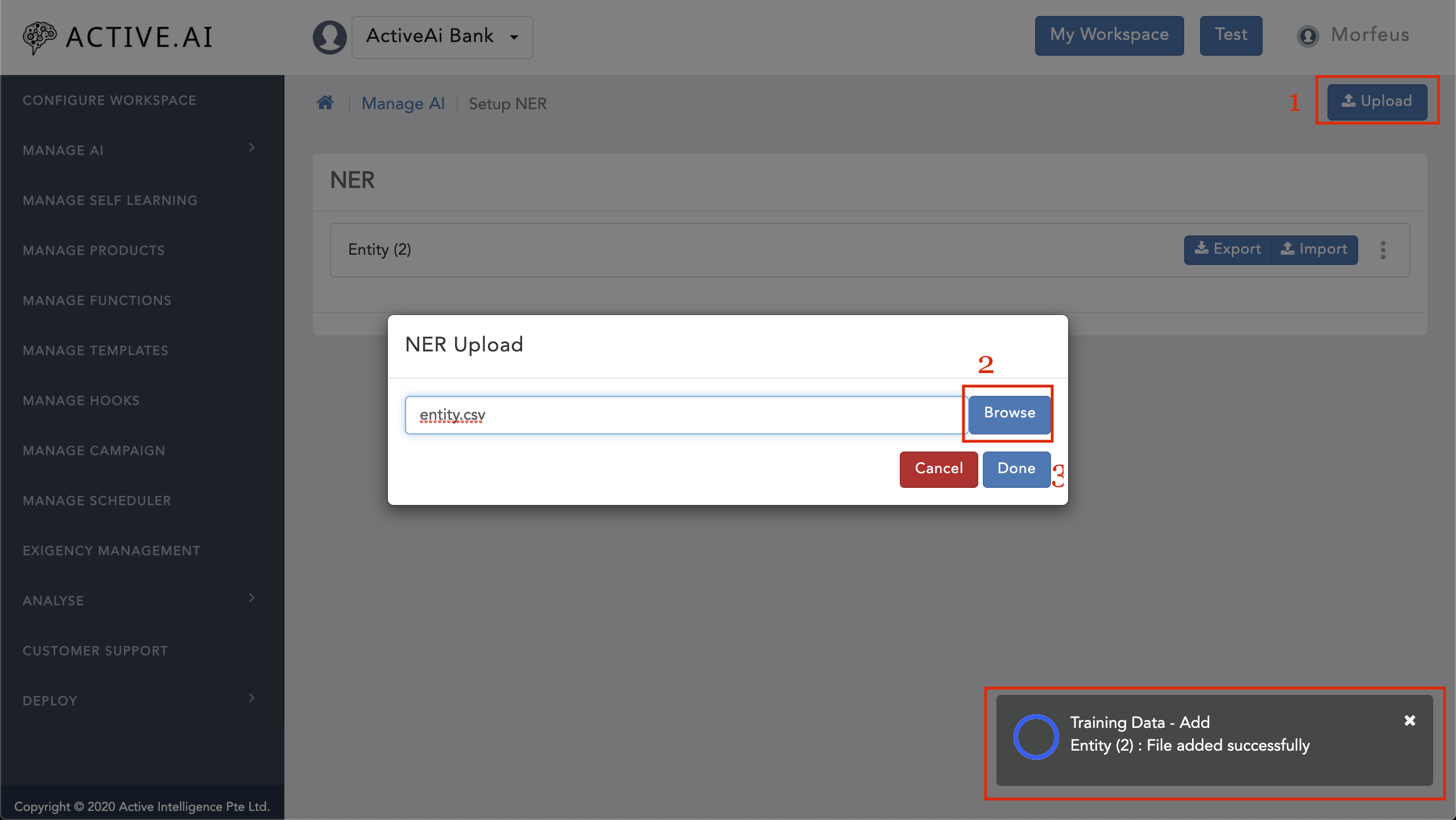

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Setup NER'

- Click on 'Upload'

- Click on 'Browse'

- Selct a CSV file of entities

- Click on Done

- Goto your workspace

- Click on 'Manage AI'

- Navigate to 'General'

- Select 'Unified API v2' in the following fields:

- AI Engine

- Context Change Detector

- Entity Extractor

- Primary Classifier

- Click on save

- Goto your workspace

- Click on 'Manage AI'

- Navigate to 'Triniti'

- Select Deployment Type as Cloud

- Enter Triniti Manager URL as http://dev-manager.triniti.ai/v/1

- Click on save

- Goto your workspace

- Click on 'Manage AI'

- Navigate to 'Unified API V2'

- Set Endpoint URL as https://router.triniti.ai

- Set Unified API version as 2

- Set Context Path as /v2

- Click on save

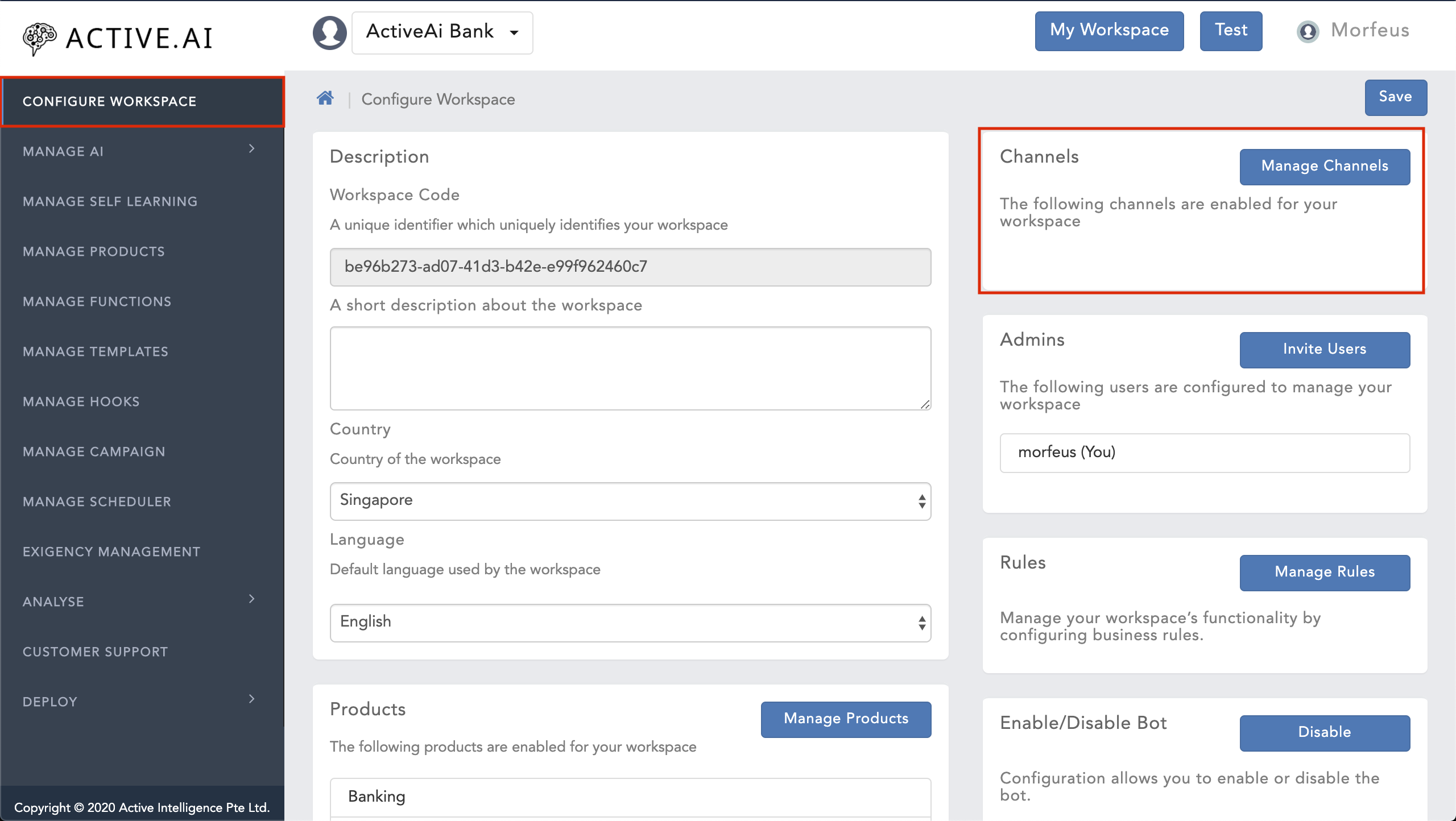

Language & Country

- Goto your workspace

- Navigate to 'Configure Workspace'

- Select Country & Language

- Click on save

- Click on save

- Click on save

Security

- Goto your workspace

- Under 'Configure Workspace', click on 'Manage Rules'

- Navigate to 'Security'

- Set Access key ID

- Set Secret Access Key

- Set Bucket Region (Based on your selected country)

- Click on save

- Goto your workspace

- Navigate to 'Deploy'

- Click on 'AI Ops'

- Click on Generate

- CLick on TRain (After finishing up the generate)

- Goto your workspace

- Click on 'Manage AI'

- Click on 'Manage Language Translation'

- Select the language for which you want to check the translation from the language dropdown

- Select the date range

- You will get the list of 'Customer Utterance' along with the respectively translated utterance and the bot response.

- If you feel that the translation is not correct, you can edit those utterances by clicking on the 'Edit' icon

- Enter the correct translation and click on the save button

- The edited utterance will be added in the Updated Translated Utterances section with the status 'untrained' utterance

- Select the language for which you want to check the translation from the language dropdown

- Select the date range

- You will get the list of 'Customer Utterance' along with the respectively 'Translated Utterance' & 'Updated Utterance'.

- If you want to edit the updated utterance you can edit by clicking on the edit icon

- And If you want to delete the updated utterance, you can delete it by clicking on the delete icon and selecting 'Yes' from the popup.

- Manage self-learning is a feature helps to trace the utterances which are ambiguous, unrelated or unclassified from all sorts of data. This classification can be traced based on Products, FAQ or Small Talk in specific date range as well.

As a human, you may speak and write in English, Spanish or Chinese. But a computer’s native language – known as machine code or machine language – is largely incomprehensible to most people. At your device’s lowest levels, communication occurs not with words but through millions of zeros and ones that produce logical actions.

As a result there will be many such uttrances that will not be classified by the Ai engine. It will be a very awful task to go through each and every unclassified uttrances and train them seperately. Self learning comes into play when you want to categorized all the uttrances under certain category. Post these categorization it becomes easy to get all the uttrances by applying various category, channel, date, Message type, and also confidence range.

For every uttrance that is being audited falls under certain category. Some of the categories are

Feedback

Ontology

Profanity

Unclassified

Unsupported

Failed

Ambiguous

Self learning reduces the manual intervation of classifying the uttrances under these categories.

- None

- Intents

- FAQs

- Smalltalk

- Feedback

- Ambiguous

- UnSupported

- UnClassified

- Ontology

- Profanity

- Unanswered

- Live Agent (Interaction with live agent eg; FreshChat, LiveChat, etc.)

- Post Back (Responses received from buttons eg; Similar Queries, Related FAQs, Button templates, etc.)

- Text (The text responses which are asked by the user by entering their input on the bot input box)

- Voice (The responses which are received from IoT devices eg; Google Assistant, Alexa, etc.)

- Goto the workspace

- Goto Manage SelfLearning

- Get the utterances based on various filters (ie; Date, Data, Type, Channels, Message type, Language, Segment, search text, etc.)

- Once we get the utterances, please click on the edit icon of that particular utterance.

- On click of the edit icon we will get the 'Add To Training' popup.

- Please select the 'Data Category' as 'FAQ'

We can choose to add the selected utterance as 'New FAQ' or 'Existing FAQ'

- Add to new FAQ:

- Add to existing FAQ:

- Add to new FAQ:

We will get the selected utterance and the 'FAQ Category' and 'FAQ list' of the selected language/utterance language.

Once we add the utterance as 'New FAQ'/'Existing FAQ' we can save the changes and train the bot.

- Add the utterance as a new FAQ => It will redirect to the FAQs Screen where we can add the selected utterance as a fresh new FAQ with a proper response.

- Add the utterance as existing FAQ => It will add the selected utterance as the variant for the selected FAQ from the dropdown list.

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Setup Knowledge graph'

- Click on 'Add' in the Design section

- Enter all the required details (Product, synonyms, product type, etc.)

- Expand the product (which you have added in last step)

- You can add more Product types, Names & Attribute groups.

- If you have asked for cc, debit, then the bot will ask you for the actions like apply, activate, replace, etc. based on the configuration.

- If you have asked for account, then the bot will show you the product attribute like payee, biller, balance, etc.

- Goto your workspace

- Click on 'Manage Template'

- Click on 'Add Card'

- Enter the name as "ONTOLOGY_DEFAULT"

- Enter the required details (Name, Category, code, version, etc.)

- Click on 'Next'

- Configure the template as per your requirement

- Click on Save

- Click on the tempalate

- Click on the Source

- Paste the following source code

- Click on save

- Goto your workspace

- Click on 'Manage Template'

- Click on 'Add Card'

- Enter the name as "ONTOLOGY_SUGGESTION_TEMPLATE"

- Enter the required details (Name, Category, code, version, etc.)

- Click on 'Next'

- Configure the template as per your requirement

- Click on Save

- Click on the tempalate

- Click on the Source

- Paste the following source code

- Click on save

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Setup Knowledge graph'

- Select Product in Fulfillment section

- Click on 'Add'

- Enter all the required details (Action, Type, Name, Attribute group, Attribute, Fulfillment type, fulfillment, etc.)

- Click on the save icon.

- Based on your knowledge graph the bot will show the related utterance/query to the user so that the user can easily select the appropriate answer.

- Don’t repeat the labels across product, type, name, attribute and Action

- Don’t repeat synonyms, else that will introduce ambiguity

- Product Attribute Group is only used for the ease of grouping related attributes for defining fulfillments

- Default probing will start in the following ordering

- Product -> Action

- Product -> Action -> Types

- Product -> Action -> Types -> Names

- Product -> Action -> Attributes

- Product -> Action -> Types -> Attributes

- Product -> Action -> Types -> Names -> Attributes

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Knowledge Graph'

- Click on 'Import'

- Click 'Yes' on the popup (Are you sure you want to overwrite?)

- Select xlsx file from you system (which should contains Product, Product Type, Product Name, Product Attribute group, Product Attribute, Fulfillment Type, Fulfillment, etc. columns)

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Knowledge Graph'

- Click on 'Export'

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Knowledge Graph'

- Click on Delete icon

- Or click on 'Delete all' (if you want to delete all the knowledge graph)

- Goto your workspace

- Navigate to 'Manage AI'

- Click on 'Knowledge Graph'

- Click on 'Please Load'

- Go to your workspace

- Go to Manage UseCase

- Click on Add(+) icon

- In Definition tab Enter the use case name, description and toggle on the AI Enabled

- Click on Next (It will give the suggestions related to the function name which you provided or it will go to the next tab if no suggestions available)

- Select from the given suggestions (If suggestions provided)

- In Data tab Add the utterances/data related to use case (Note: Minimum 5 utterances are required, but to get the better classification & result, Minimum 20 utterances are required. We can map the word with entity on typing the utterance)

- Go to your workspace

- Go to Manage UseCase

- Click on Add(+) icon

- In Definition tab Enter the use case name, description and toggle off the AI Enabled

- Click on Next

- In Data tab Add the utterances/data related to use case (Note: Minimum 5 utterances are required, but to get the better classification & result, Minimum 20 utterances are required.)

- Click on Next

- In Fulfillment tab Select the channel & Security

- Click on Save

- Go to your workspace

- Go to Manage Use Case

- Click on any use case You will be able to see below use case menu

- Manage

- Add

- Sync

- Import(csv)

- Export(csv)

- Import Zip

- Export Zip

- On click of Manage we can see distinct Supported Products from the available data set which we managed in functions.csv file

- Based on the product selection will show supported use case as shown \ Which is categorised based on function type mentioned in CSV and shows function name to select \ which contains 2 options as explained below and one display based on fulfilment type selection as show in image (Fullfillment)

- Once we are done with the selection we save the data in functionmstr table and show only supported functions as shown in Functions landing page

- Sync button in image can be used to get latest functions added in functions.csv after initial setup

- Before testing in the bot go to \ Configure Workspace > General Rules > search for text (Enable to support managing use cases for not supported products) and enable it by default it will be disabled. So that can test in bot for managed functions accordingly

- Below are the fields to be configured while creating a function record

- Once the record added we need to configure below fields:

- Expected entity

- Bot says : Expected questions from the bot to the user

- User says : The way user replies

- Webhooks

- Workflow

- Template

- Camel Route

- Java Bean

- Goto your workspace

- Navigate to 'Manage Functions'

- Click on 'Edit' icon of the function(for which you want to add camel route)

- Navigate to 'Integration'

- Choose 'Config' will show some existing routes (If exists)

- Choose the 'Domain' under Business Application

- Choose the 'Function'

- Click on 'Load' button (It will get the API & data and will map to the parameter that will be shown on clicking load)

- Under Client API Enter the 'route id' in 'API name' field

- Choose the specification type (HTTP specification, Swagger specification, SOAP specification, etc.)

- Configure the settings of the selected specification type (*URL, HTTP method, Content type, Request class, Response class, logging message, Next route, required actions,Header parameters, property parameters, etc.)

- Click on 'Load'

- Map the Business Application parameter with the Client API parameter as per your requirement

- Click on save

- Goto your workspace

- Click on 'Manage AI'

- Select 'Manage Rules'

- Click on 'Import'

- Select the file (JSON file)

- Goto your workspace

- Click on 'Manage AI'

- Select 'Manage Rules'

- Click on 'Import'

- Select the file (JSON file)

- Click on 'Yes' on popup. (Are you sure you want to overwrite?)

- Goto your workspace

- Click on 'Manage AI'

- Select 'Manage Rules'

- Click on 'Export'

- Chat History Configuration related rules

- Cache size for chat history, Pagination mandatory for honoring

- Chunk size for chat history calls

- Enable to activate chat history

- Enable to activate pagination of chat history

- Enable/ Disable inclusion of single init response in Chat History

- Extension of expiry timer for history cache in minutes

- Select the mode of chat history

- Self destruct timer for history cache in minutes

- Configuration related rules

- Audit Anonymous Users

- Channel Based Product Rules

- Db update for the only login

- Developer Mode

- Display Welcome Message

- Domain name of One Portal

- Enable IP address audit for request

- IP Address Header name

- Languages

- Limit Audit Size In Database

- Mail Account Password

- Mail Account Username

- Mail Server Host

- Mail Server Port

- Post Login Custom Header

- Remote IP Address Header name

- Reports Export Path

- Support post login action

- User Social Profile Refresh Frequency

- Elastic Search Configuration related rules

- Cluster support for Elastic Search

- Elastic Search URL

- Password For Elastic Search

- Username For Elastic Search

- Image related rules

- Image View Height

- Image View Width

- Multilingual Configuration related rules

- Enable/ Disable multilingual data management

- OAuth Config related rules

- Login form URL for IOT channels v1

- Success form URL for IOT channels v1

- OAuth2 Proxy Config related rules

- Morfeus Domain with Context

- Post Login Configuration related rules

- Select the mode to honor post login response with INIT response

- Select to display last login time information

- Security related rules

- Expiry for the partial states of a user's application (days)

- Login modes supported for the bot users

- AWS related rules

- Access Key ID

- AWS Credentials Source

- Bucket Name

- Bucket Region

- Expiration Offset

- Expiration Offset

- Kibana URL for viewing managed EC2 Instance logs

- Live Log URL for viewing managed EC2 Instance logs

- Morfeus API Key

- Morfeus API Key Secret

- Morfeus API Key Secret

- Secret Access Key

- Configuration related rules

- Secret Access Key

- Authentication Credentials Refresh Frequency

- Domain name of the platform

- Link Customer Social Accounts to Login Credentials

- Login Policy

- Max. requests per session

- Maximum Failed Attempts for 2FA Policy

- Maximum Message Length

- Morfeus Secret Key

- Social 1FA Authentication Mode

- Social 2FA Authentication Mode

- Social Authentication Policy

- The URL Path to be used in the Cookie

- User 2FA Pending Timeout (secs)

- User Session Timeout (secs)

- WebSdk Request timeout (secs)

- Chat Agent Provider

- Manual Chat Agent Provider API Key

- Mode of Manual Chat Agents request to morfeus

- Select the agent provider for manual chat fallback

- Fallback Manual Chat related rules

- Agent chat fallback URL

- Agent Chat License Key

- Agents client id

- Agents refresh token

- Download Transcript Feature

- Enable fallback on failed AI conversations

- Enable fallback on sentimental analysis

- Enable fallback on user prompt

- Enable/disable Customer Support Fallback

- Return to bot when manual chat is inactive for a given period of time in seconds

- Text inputted by user or agent to end chat

- Text seen by agent when invalid response is sent

- Text seen by agent when user closes the chat session

- Text seen by user when agent closes the chat session

- Text seen by user when starts the manual chat session

- Zendesk domain URL

- Configuration related rules

- Amazon Alexa is literal enabled

- Enable to pass OTP successfully while on-boarding

- Configuration related rules

- CRM Interaction Route

- CRM Interaction Route Get Status

- LMS Route

- Configuration related rules

- Enable bizapp response auditing

- Enable campaigns

- Enable NER call for FAQs for data enrichment

- Configuration

- PUSH BOT DOMAIN

- FCM SERVER API KEY

- iOS/Android package name for FCM

- Configuration related rules

- AWS KMS Key

- Oauth Encryption Type

- Configuration related rules

- Fulfillment webhook url

- Secret key the Fulfillment webhook url

- Triniti Cloud Basic Auth

- Triniti Cloud Domain Name

- Goto your workspace

- Click on 'Manage Rules'

- Click on 'Import'

- Click on 'Yes' on popup. (Are you sure you want to overwrite?)

- Goto your workspace

- Click on 'Manage Rules'

- Click on 'Export'

- Goto your workspace

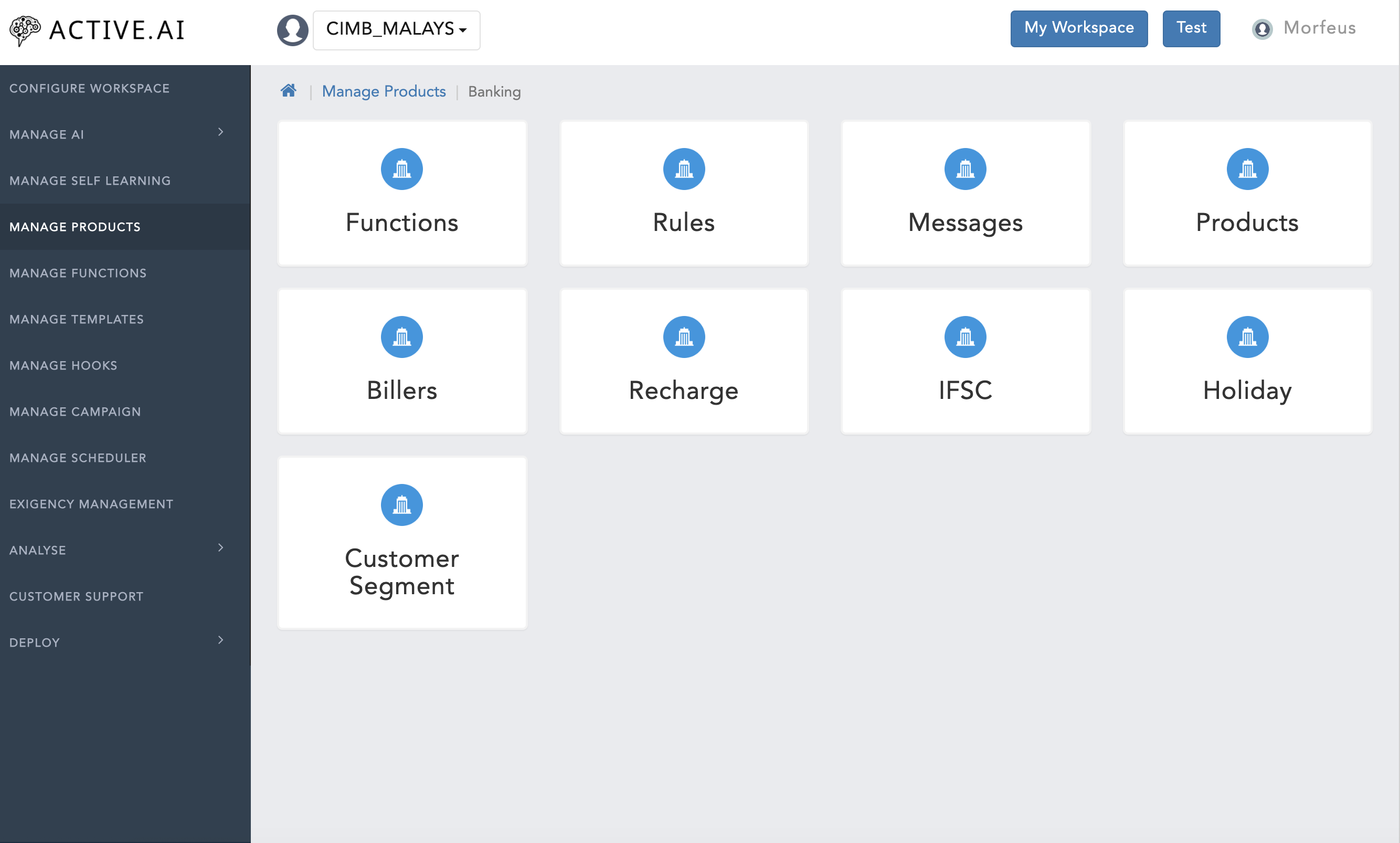

- Click on 'Manage Products'

- Click on 'Messages'

- Click on 'Add'

- Enter all the required details (Name, Value, description, category, etc)

- Click on Add

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Messages'

- Click on 'Import' or 'Import CSV'

- Select a JSON file or CSV file from your system

- Click 'Yes' on popup. (Are you sure you want to overwrite?)

- If you are uploading a CSV file it should contain Message Code, Message Category Message Value, Message Description, Customer Segment, Code, Language, etc columns.

- If you are uploading a JSON file, it should have all the configured messages in JSON format.

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Messages'

- Click on 'Export' or 'Export CSV'

- Go to your workspace

- Click on 'Manage Templates'

- Click on 'Add Card'

- Enter the required details (Name, category, code, version, etc.)

- Click on 'Next'

- Configure your template

- Click on save

- Text Template: How to configure this template? Please refer Configure Text Template

- Card Template: How to configure this template? Please refer Configure Card Template

- Image Template: How to configure this template? Please refer Configure Image Template

- List Template: How to configure this template? Please refer Configure List Template

- Button Template: How to configure this template? Please refer Configure Button Template

- Carousel Template: How to configure this template? Please refer Configure Carousel Template

- Video Template: How to configure this template? Please refer Configure Video Template

- Custom Template: How to configure this template? Please refer Configure Custom Template

- Go to your workspace

- Click on 'Manage Templates'

- Click on 'Import'

- Click 'yes' on the popup (Are you sure you want to overwrite?)

- Select a JSON file from your system containing template configuration in JSON format.

- Go to your workspace

- Click on 'Manage Templates'

- Click on 'Export'

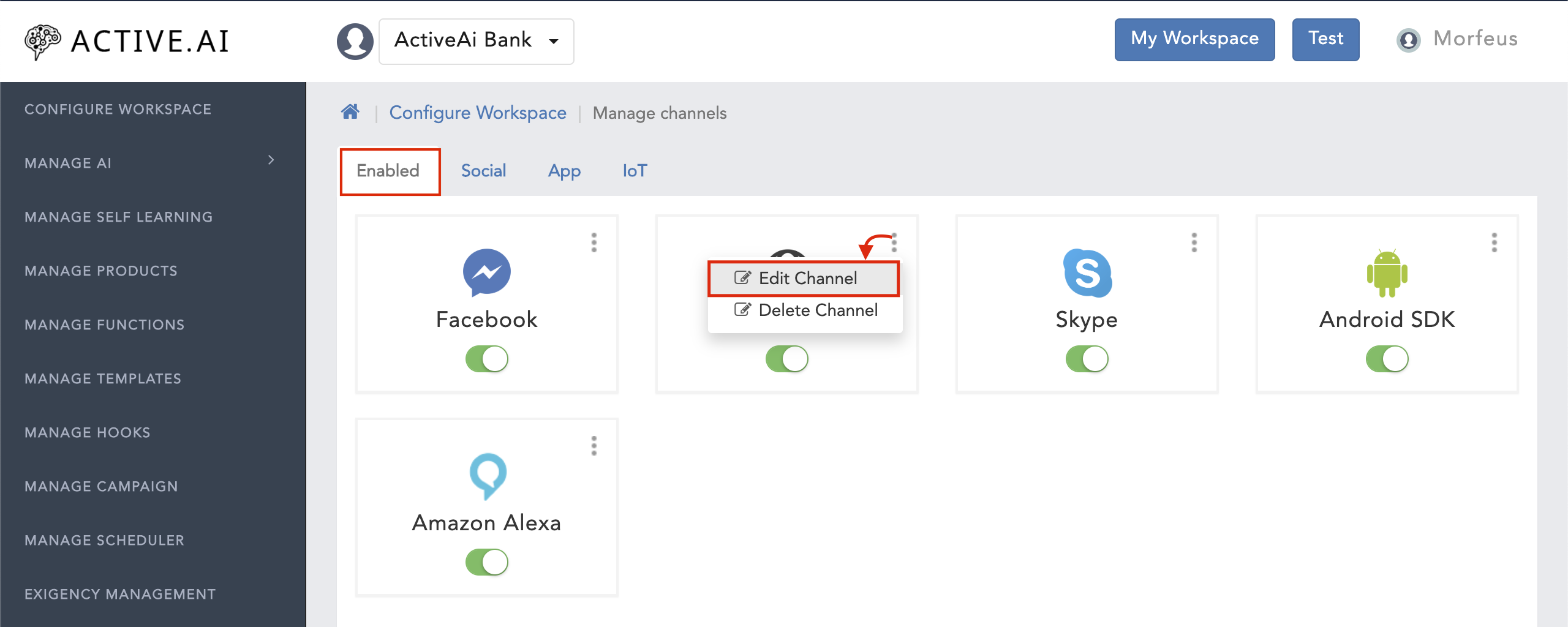

- Social Channels

- Application-based Channels

- IoT based Channels

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Enabled'

- Click on the menu icon (On the channel which you want to configure)

- Click on 'Edit Channel'

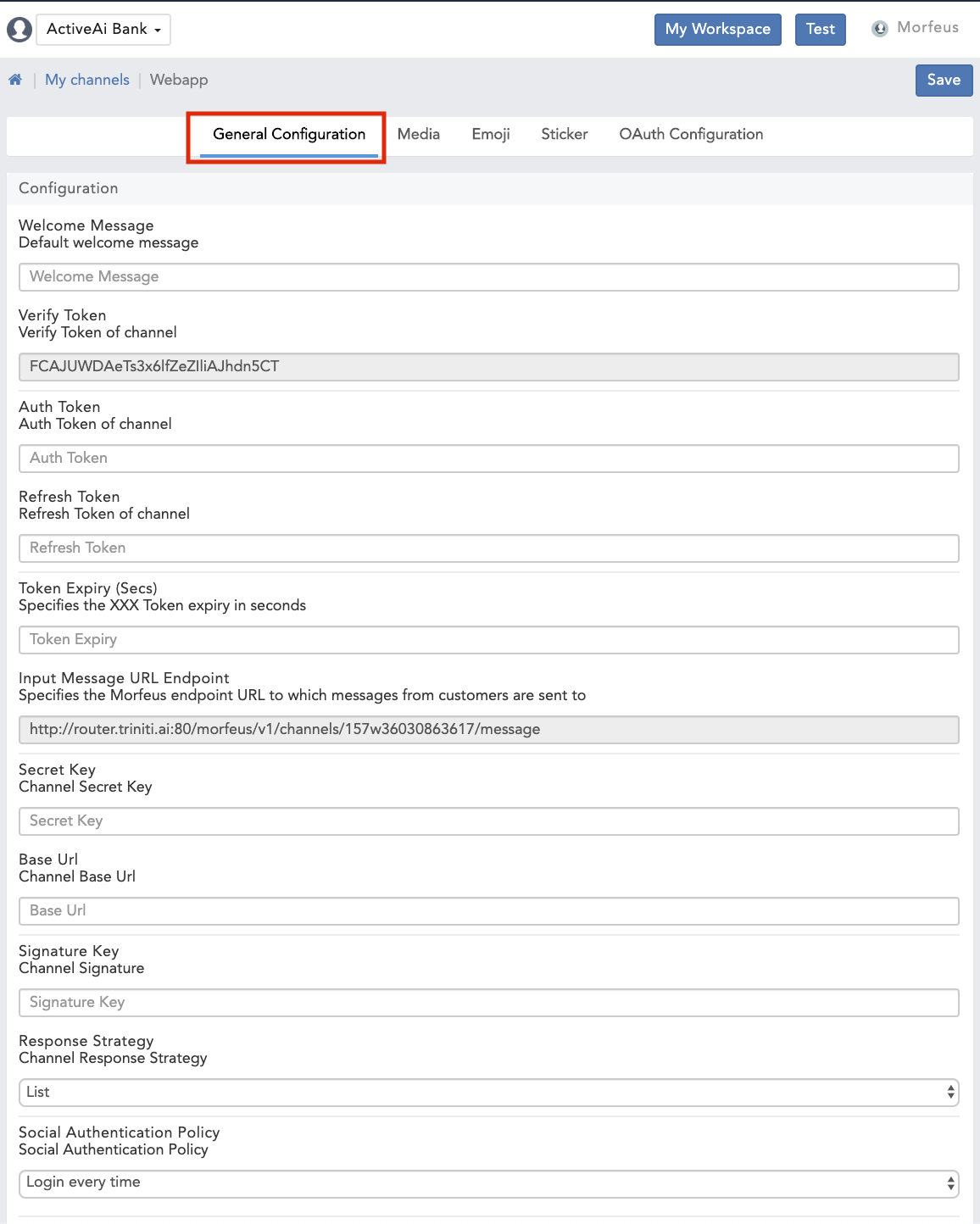

- General Configuration:

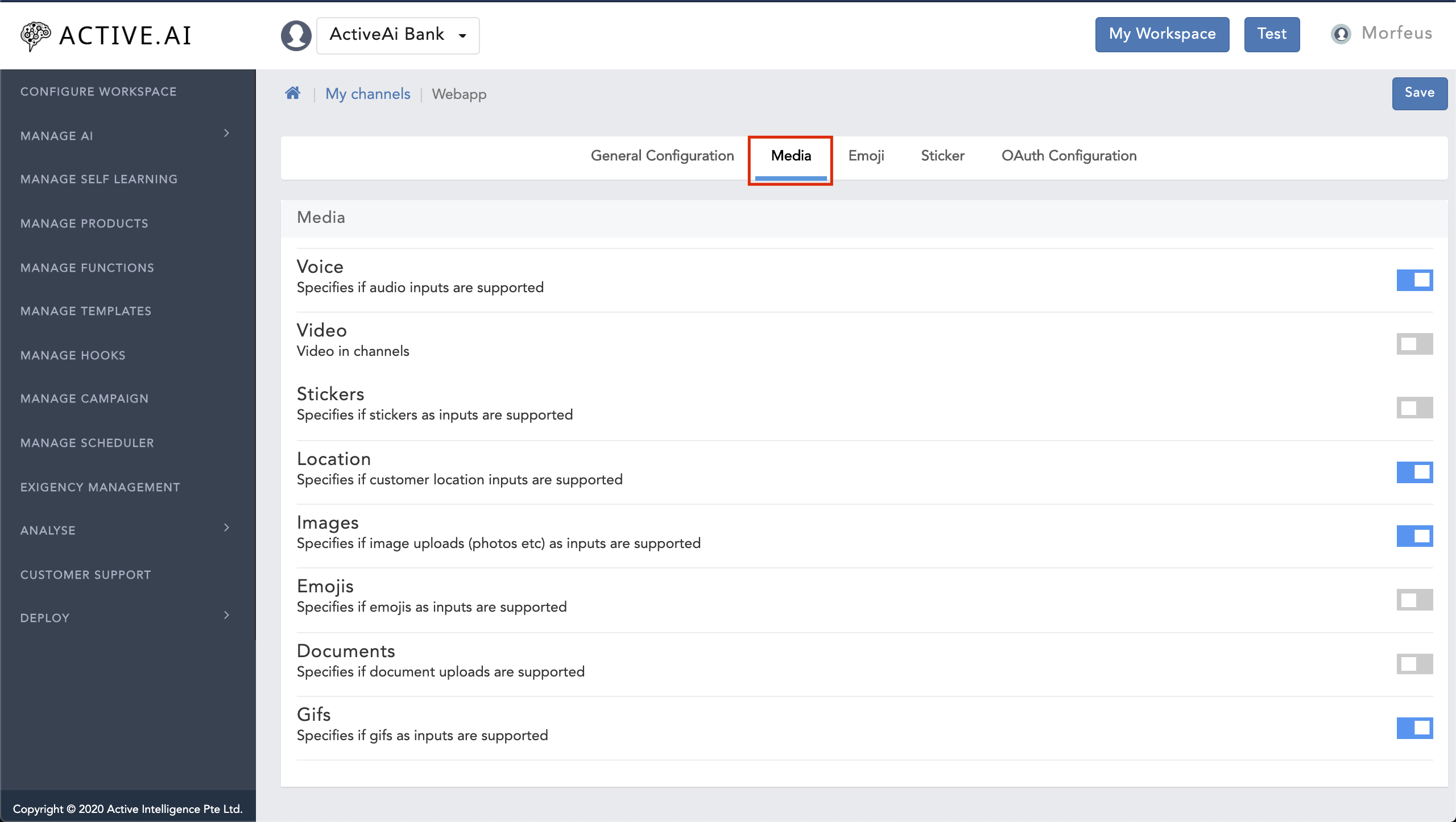

- Media:

- Emoji:

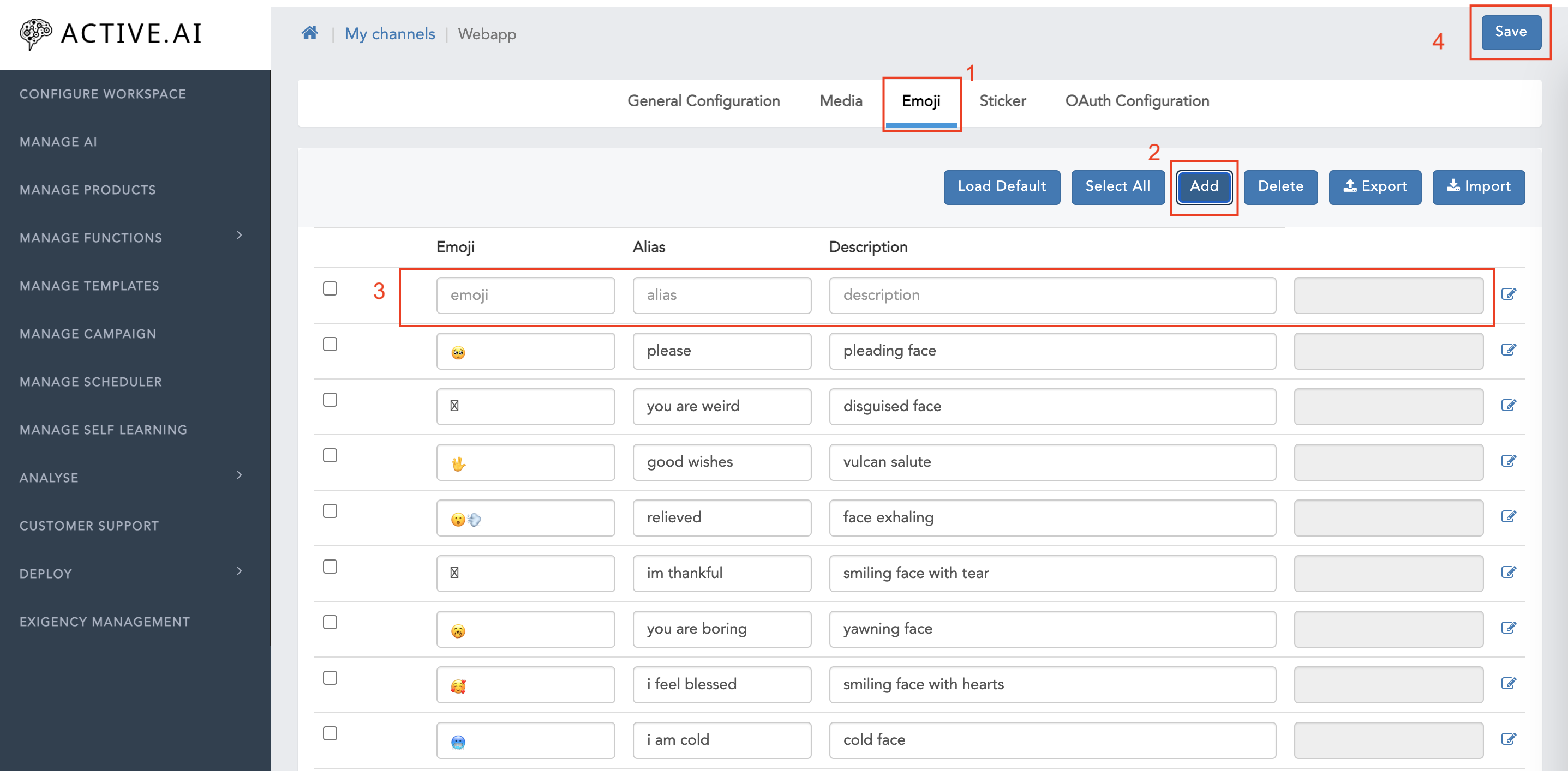

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Enabled'

- Click on the menu icon (On the channel which you want to configure)

- Click on 'Edit Channel'

- Navigate to 'Emojis'

- Click on 'Add'

- Enter all the required details(emojis(unicode characters), alias, description, intent(that you want to show on the particular emojis), etc.)

- Click on 'Save'

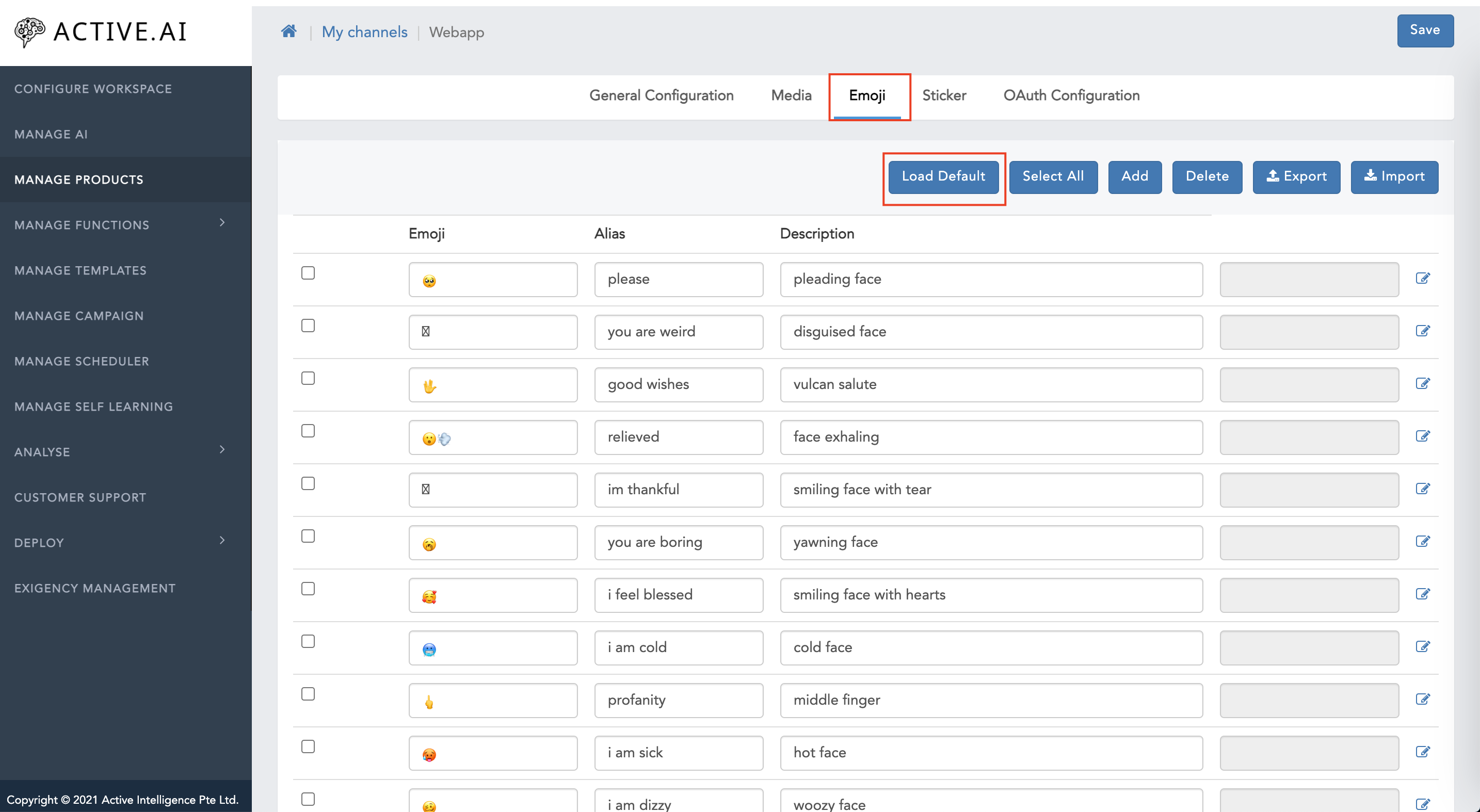

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Enabled'

- Click on the menu icon (On the channel which you want to configure)

- Click on 'Edit Channel'

- Navigate to 'Emojis'

- Click on 'Load Dedaults'

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Enabled'

- Click on the menu icon (On the channel which you want to configure)

- Click on 'Edit Channel'

- Navigate to 'Emojis'

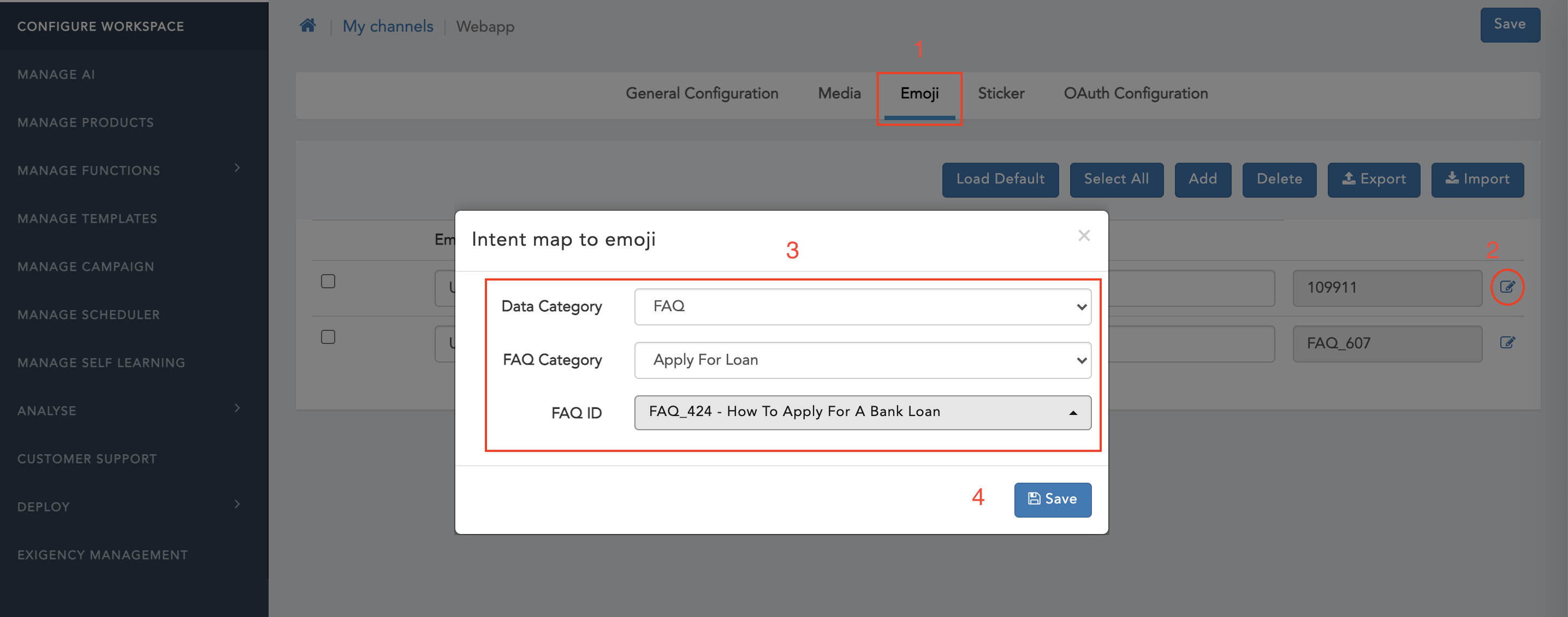

- Click on Edit icon

- Select 'Data Category' as 'FAQ'

- Select 'FAQ Category'

- Select 'FAQ ID'

- Click on save

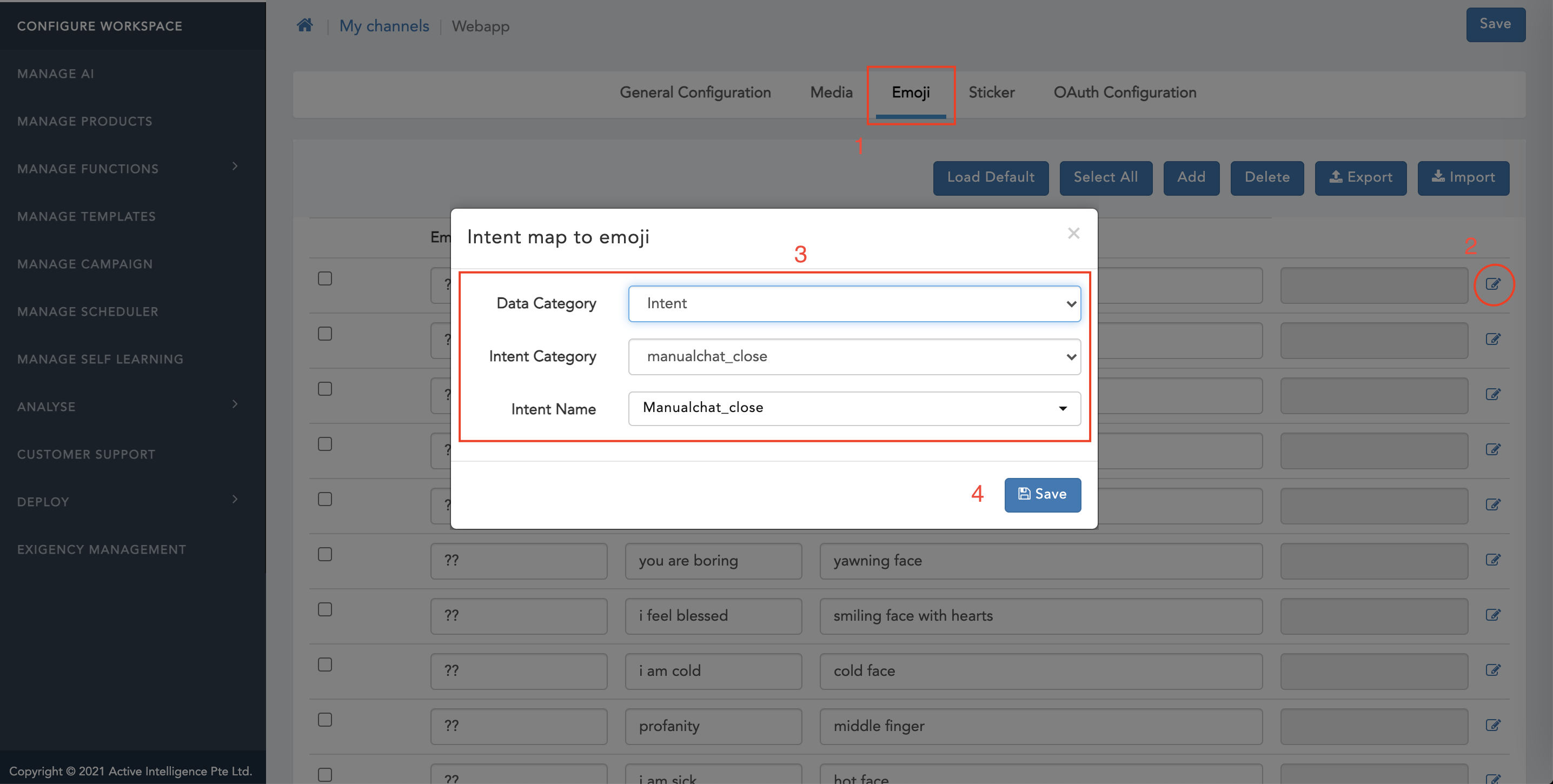

- Click on Edit icon

- Select 'Data Category' as 'Intent'

- Select 'Intent Category'

- Select 'Intent Name'

- Click on save

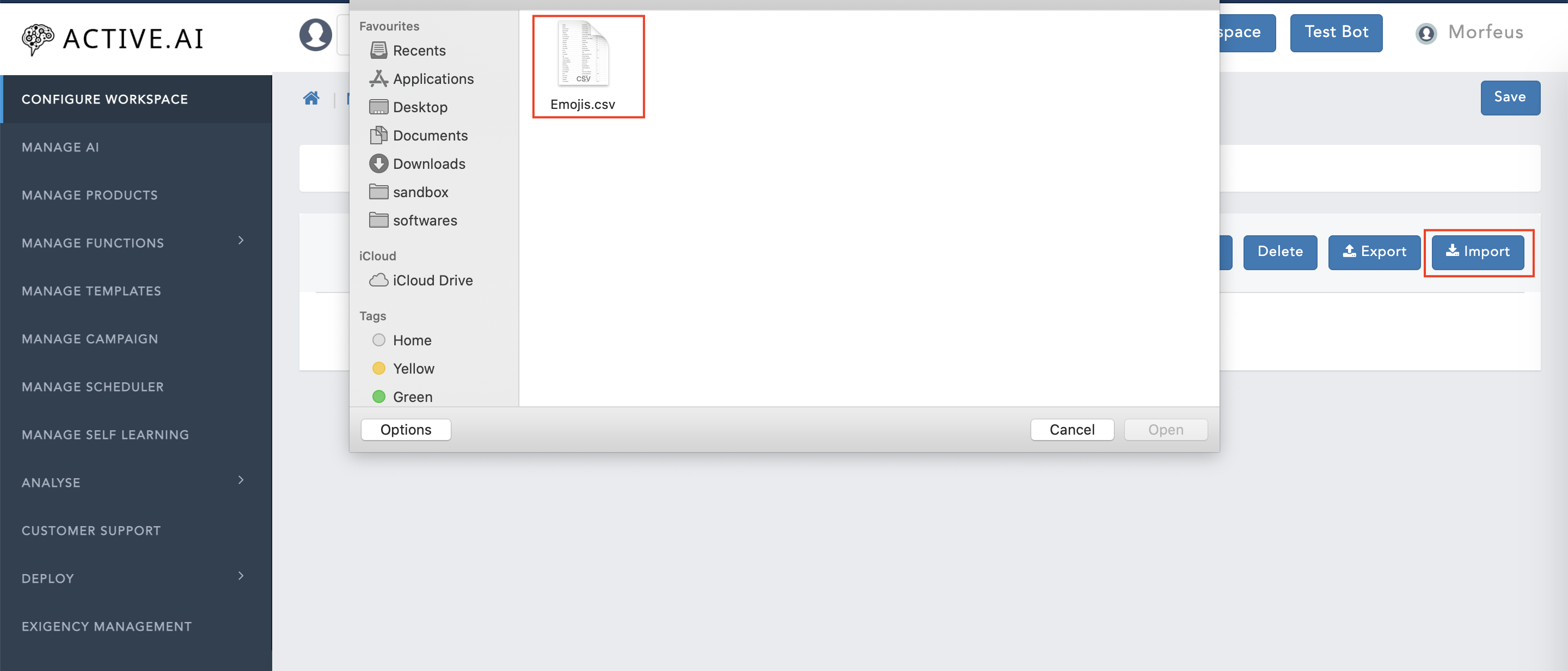

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Enabled'

- Click on the menu icon (On the channel which you want to configure)

- Click on 'Edit Channel'

- Navigate to 'Emojis'

- Click on 'Import'

- Select a CSV file (Which contains Aliases, Description, Emoji, Intent Name, etc. columns)

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Enabled'

- Click on the menu icon (On the channel which you want to configure)

- Click on 'Edit Channel'

- Navigate to 'Emojis'

Click on 'Export' (A CSV file will be downloaded with Aliases, Description, Emoji, Intent Name, etc. columns)

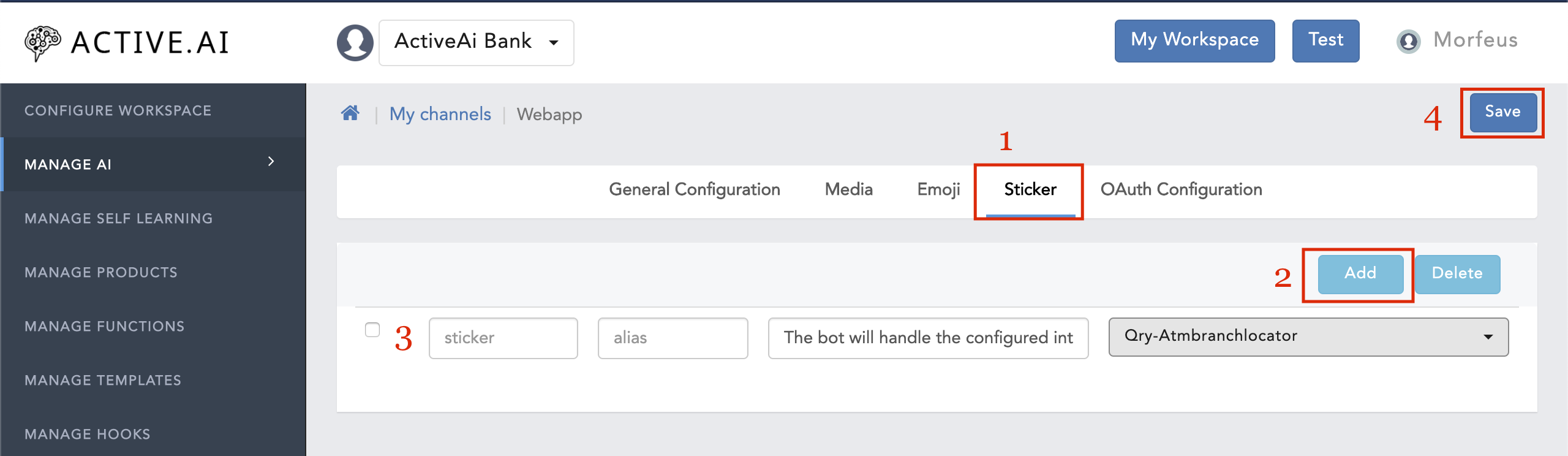

Stickers:

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Enabled'

- Click on the menu icon (On the channel which you want to configure)

- Click on 'Edit Channel'

- Navigate to 'Stickers'

- Click on 'Add'

- Enter all the required details(sticker, alias, description, intent(that you want to show on the particular sticker), etc.)

- Click on 'Save'

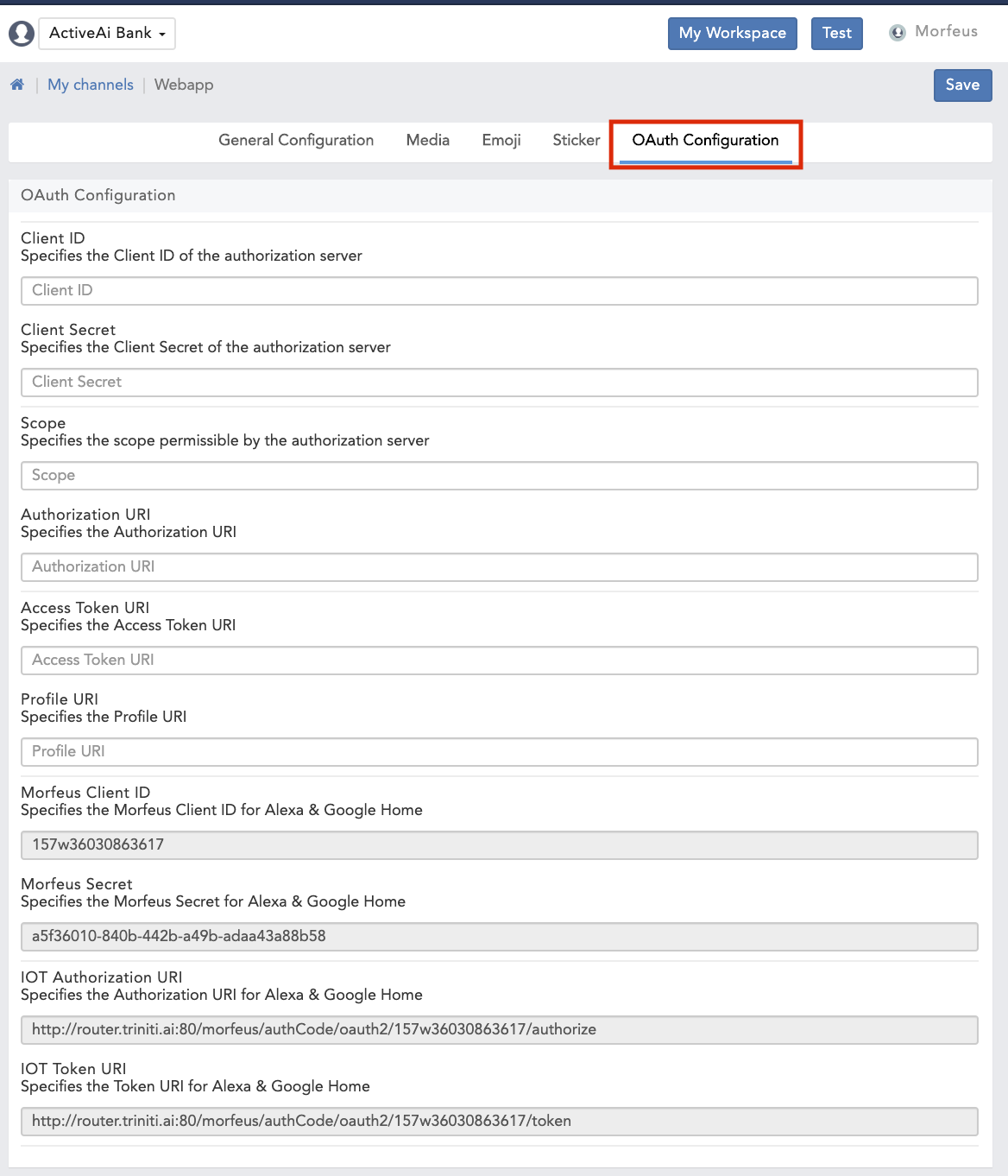

- OAuth Configuration:

- Goto your workspace

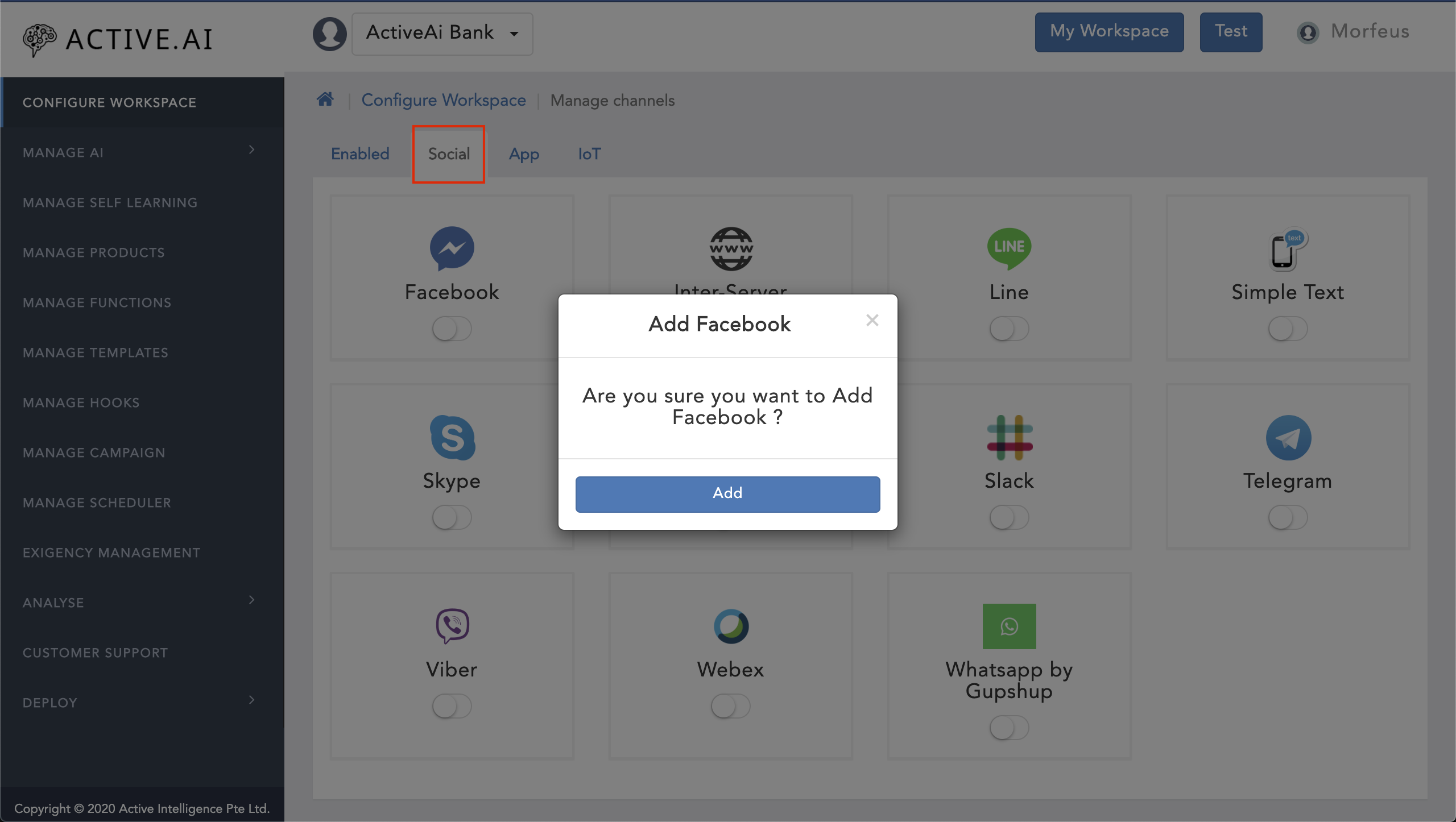

- Click on 'Manage Channels'

- Navigate to 'Social'

- Toggle on the 'Facebook'

- Click on 'Add'

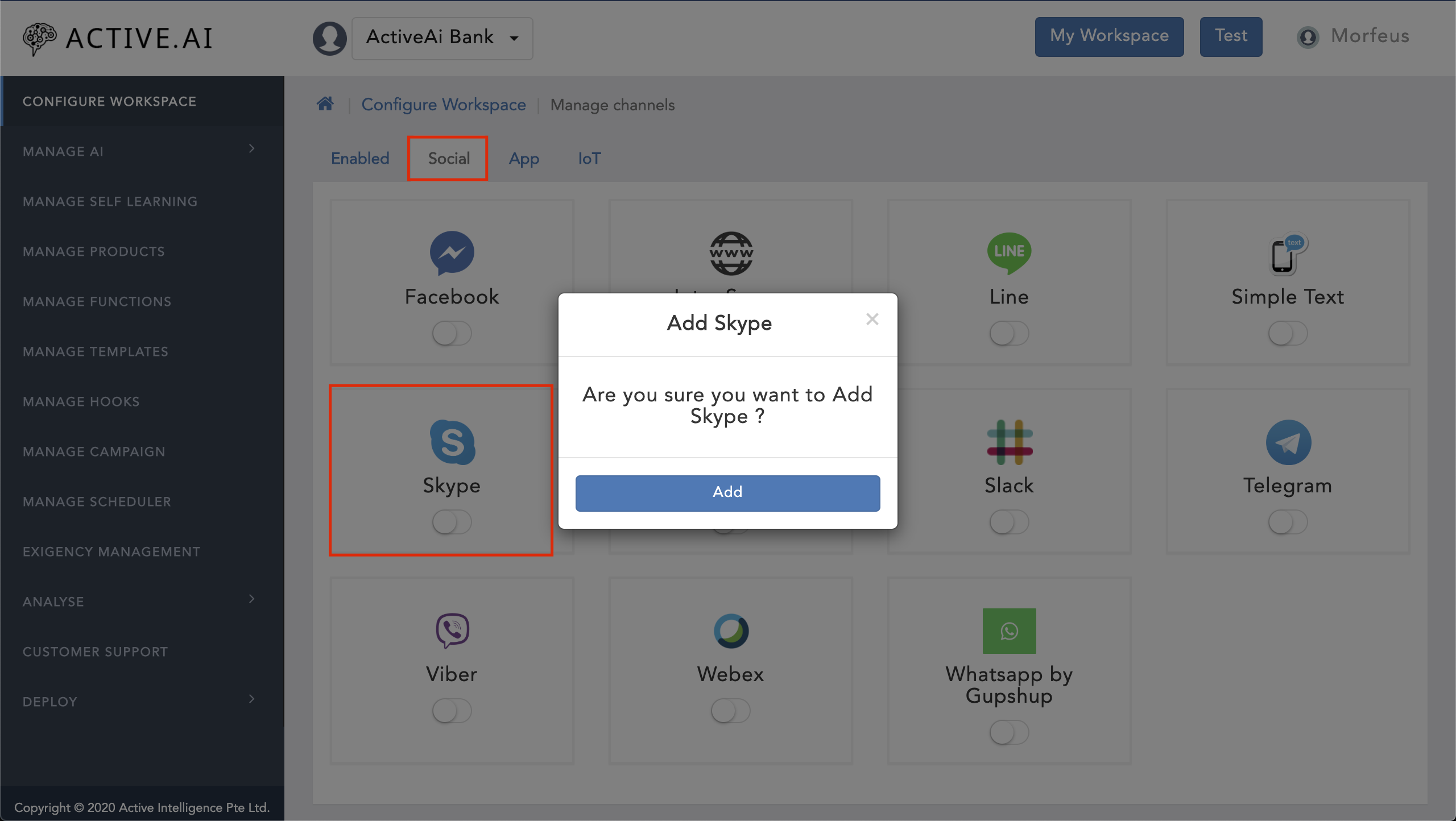

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Social'

- Toggle on the 'Skype'

- Click on 'Add'

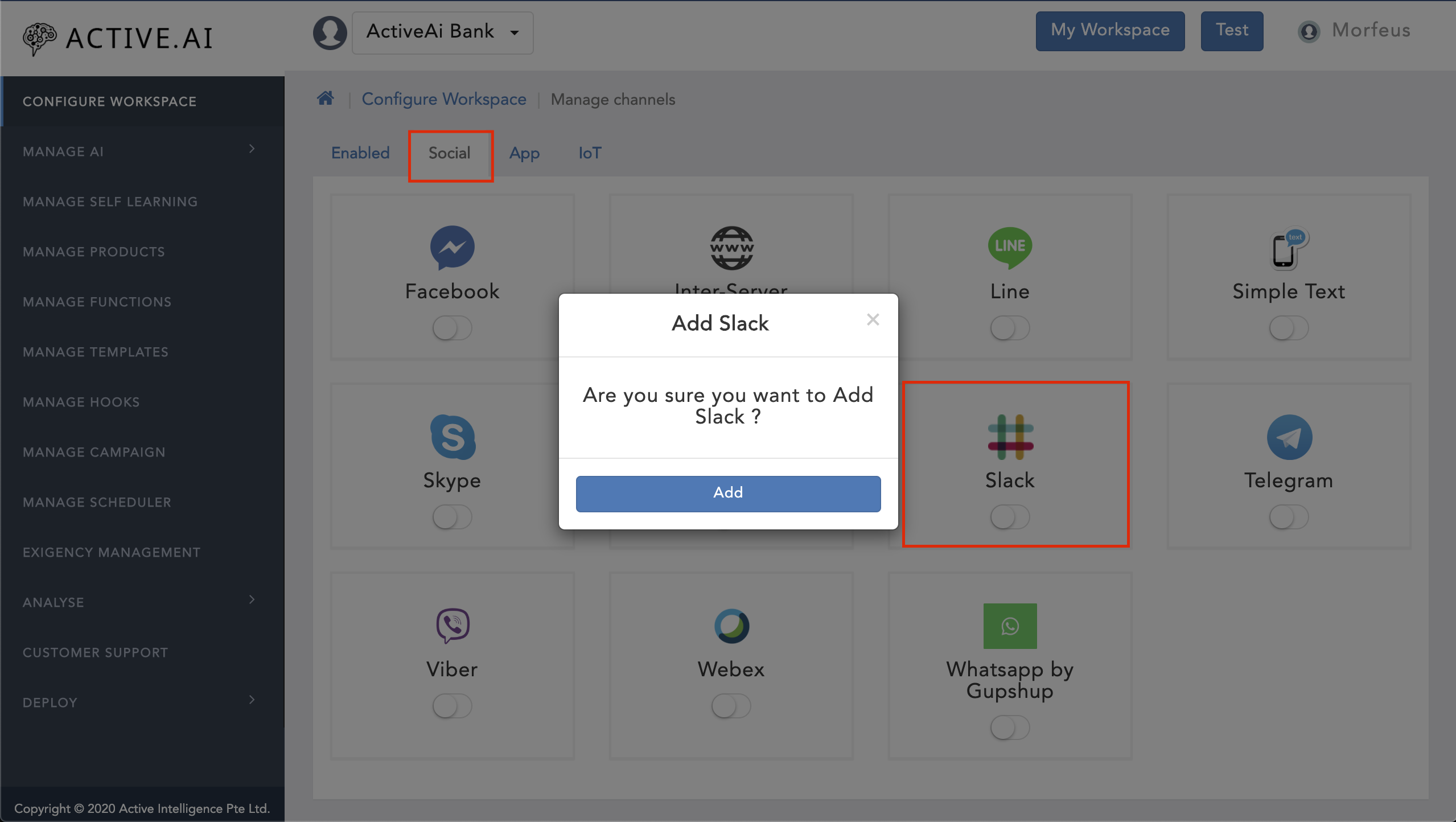

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Social'

- Toggle on the 'Slack'

- Click on 'Add'

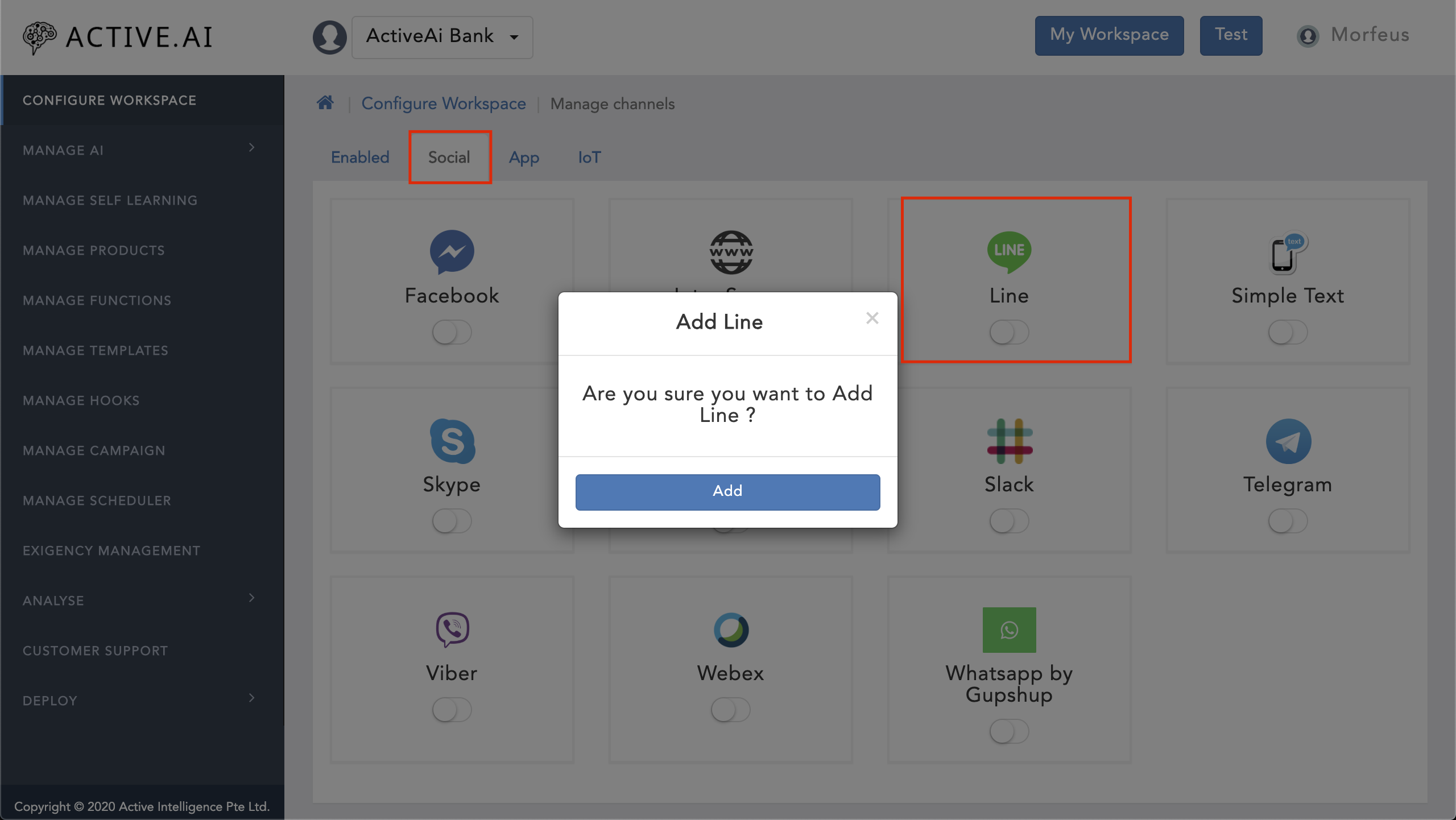

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Social'

- Toggle on the 'Line'

- Click on 'Add'

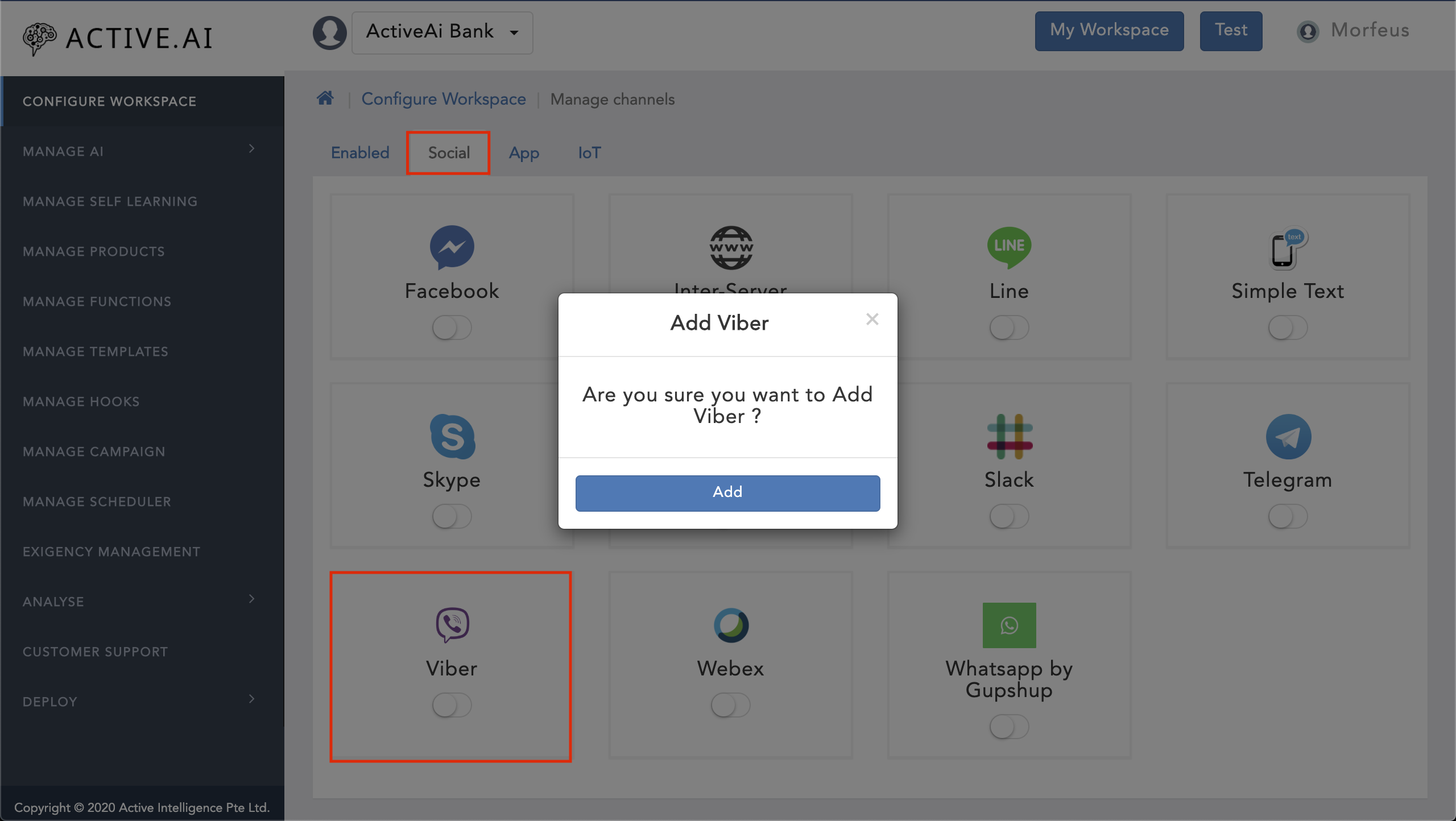

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Social'

- Toggle on the 'Viber'

- Click on 'Add'

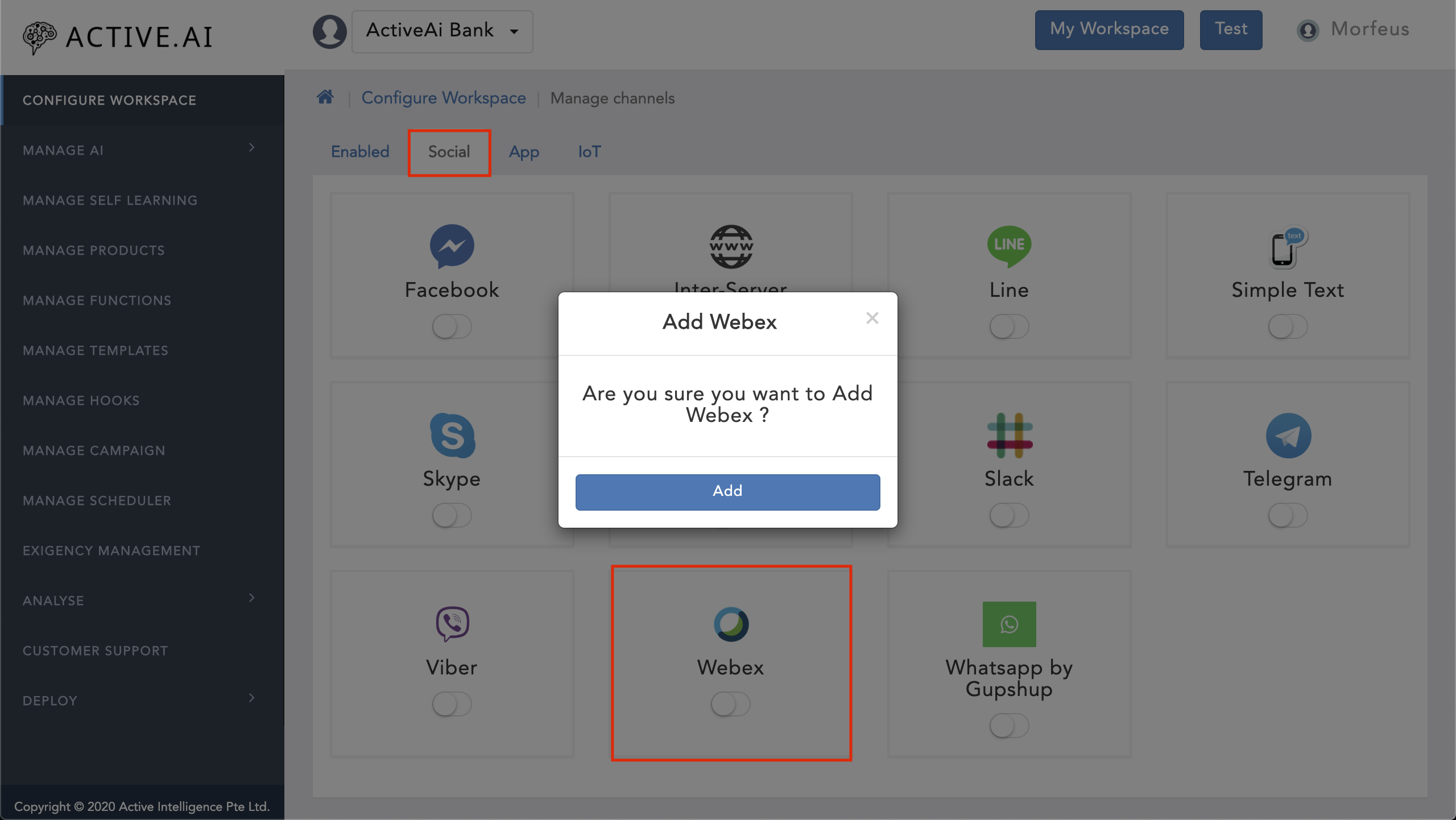

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Social'

- Toggle on the 'Webex'

- Click on 'Add'

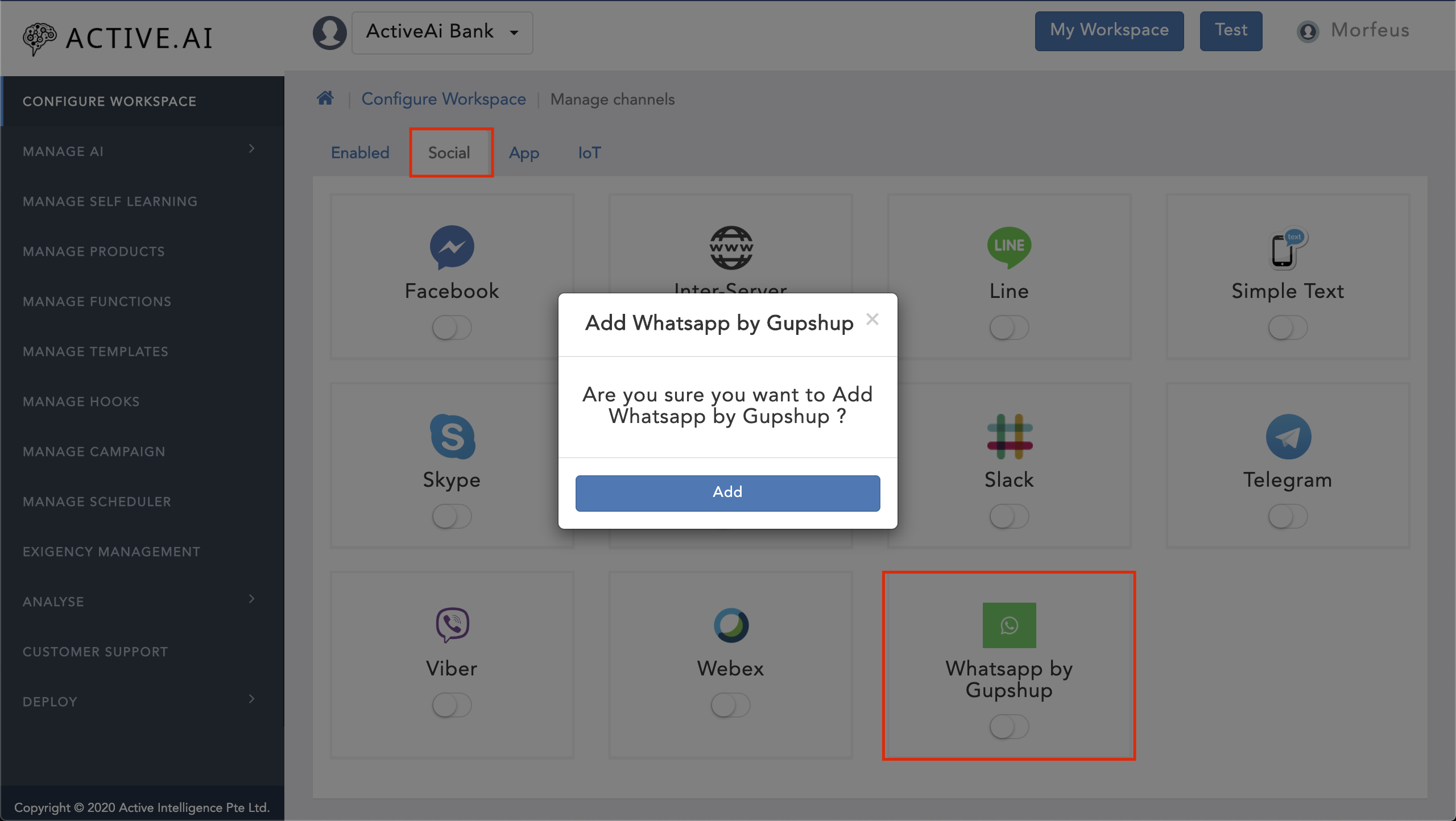

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Social'

- Toggle on the 'Whatsapp by Gupshup'

- Click on 'Add'

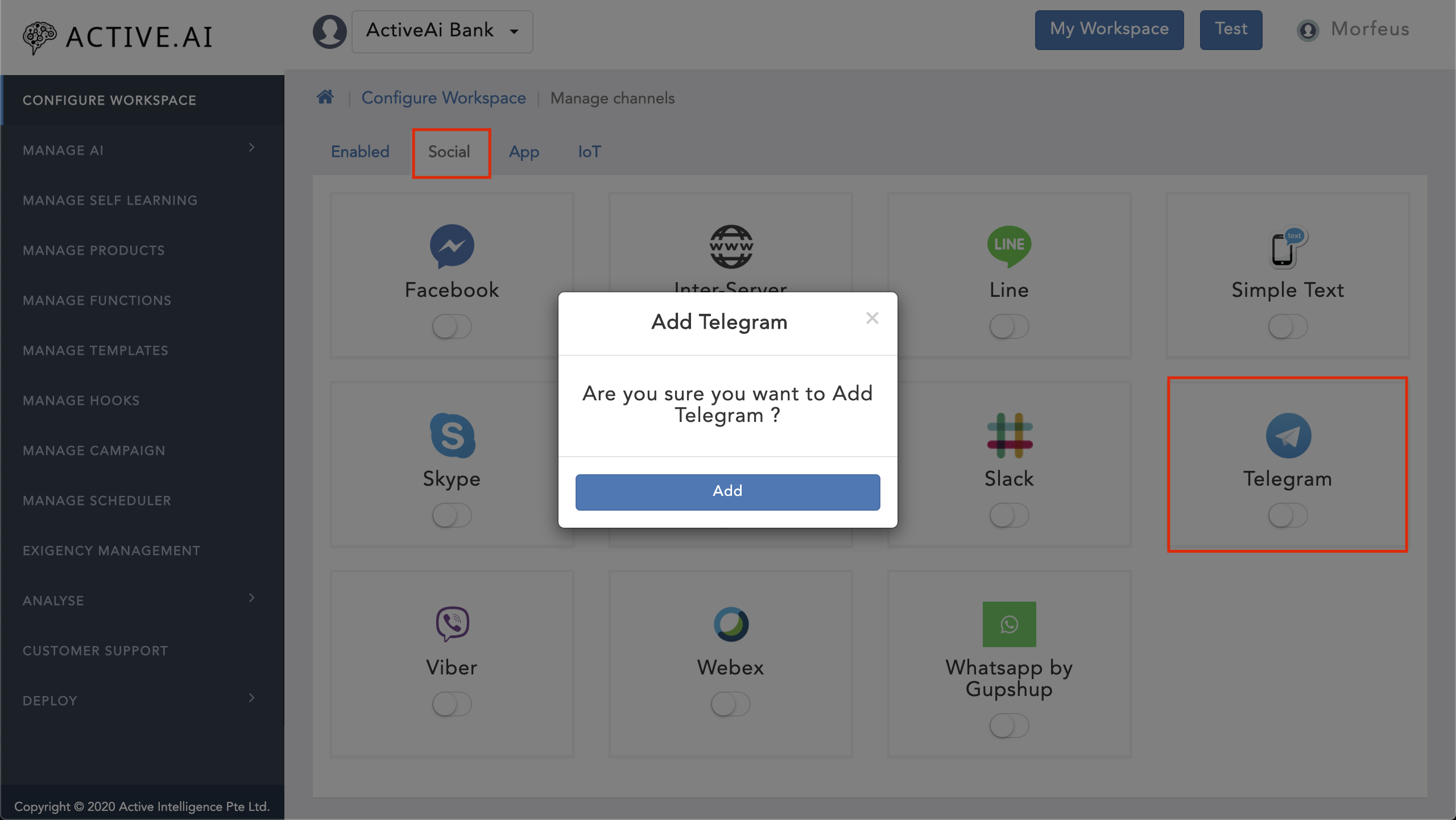

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'Social'

- Toggle on the 'Telegram'

- Click on 'Add'

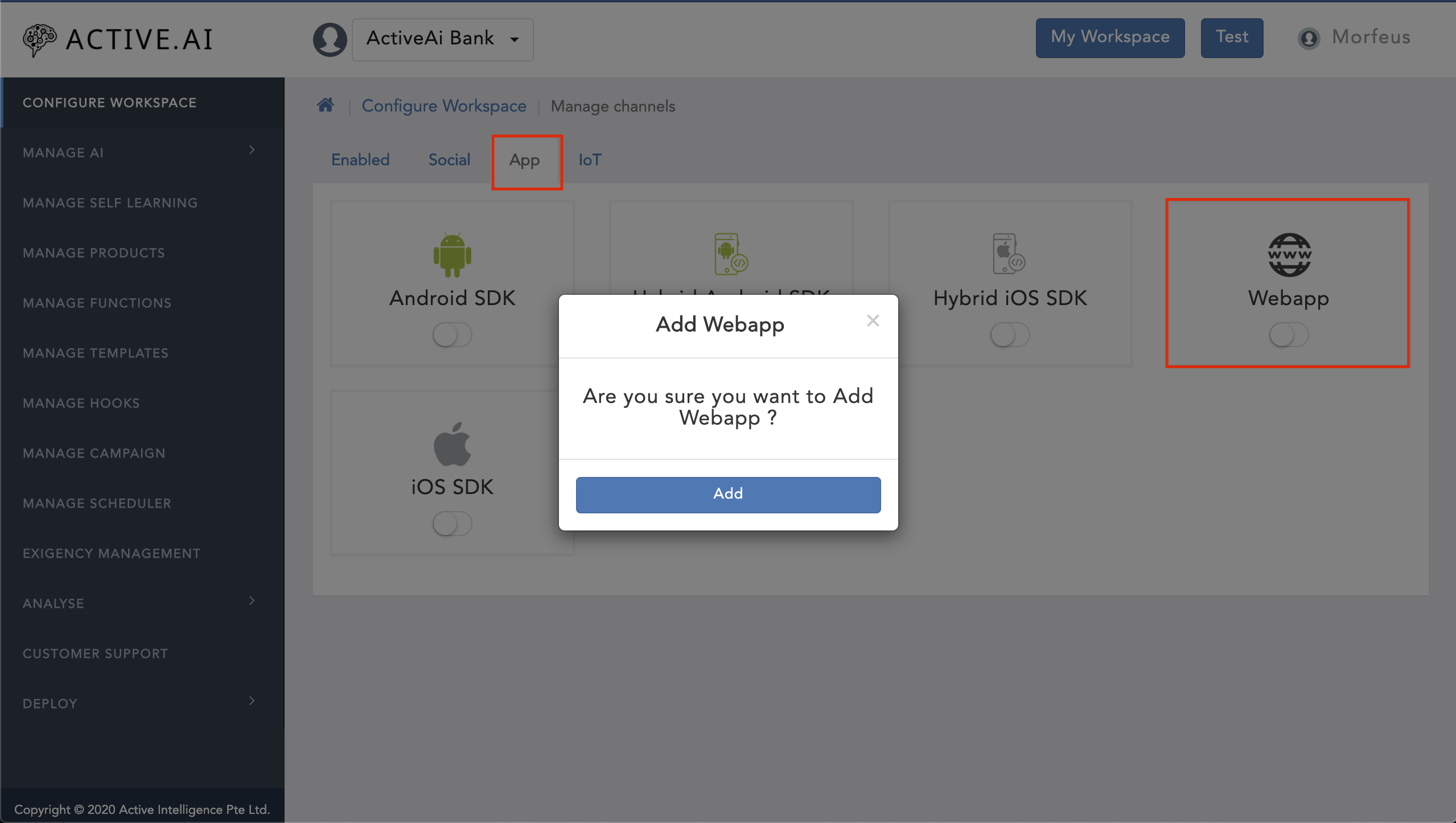

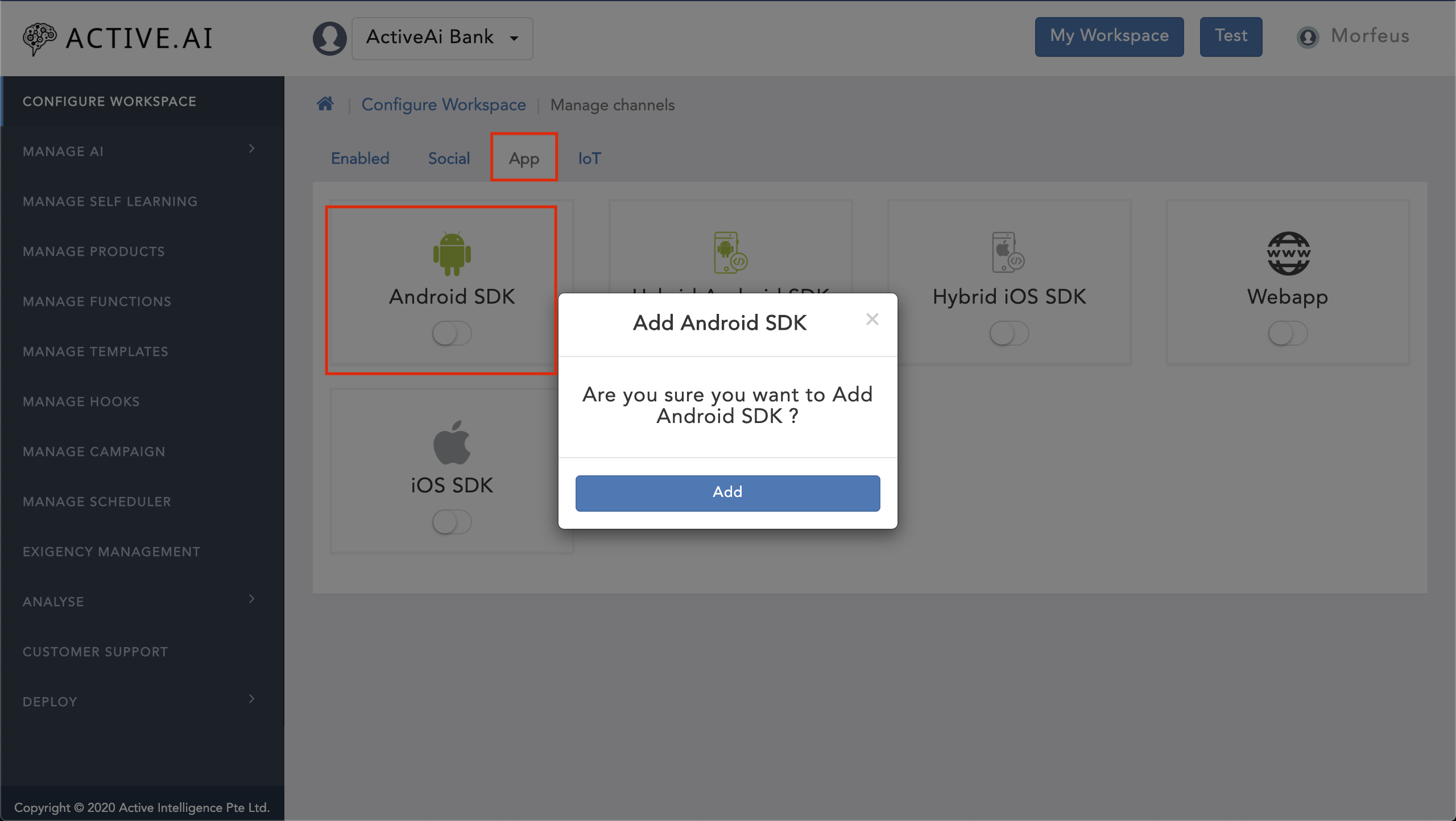

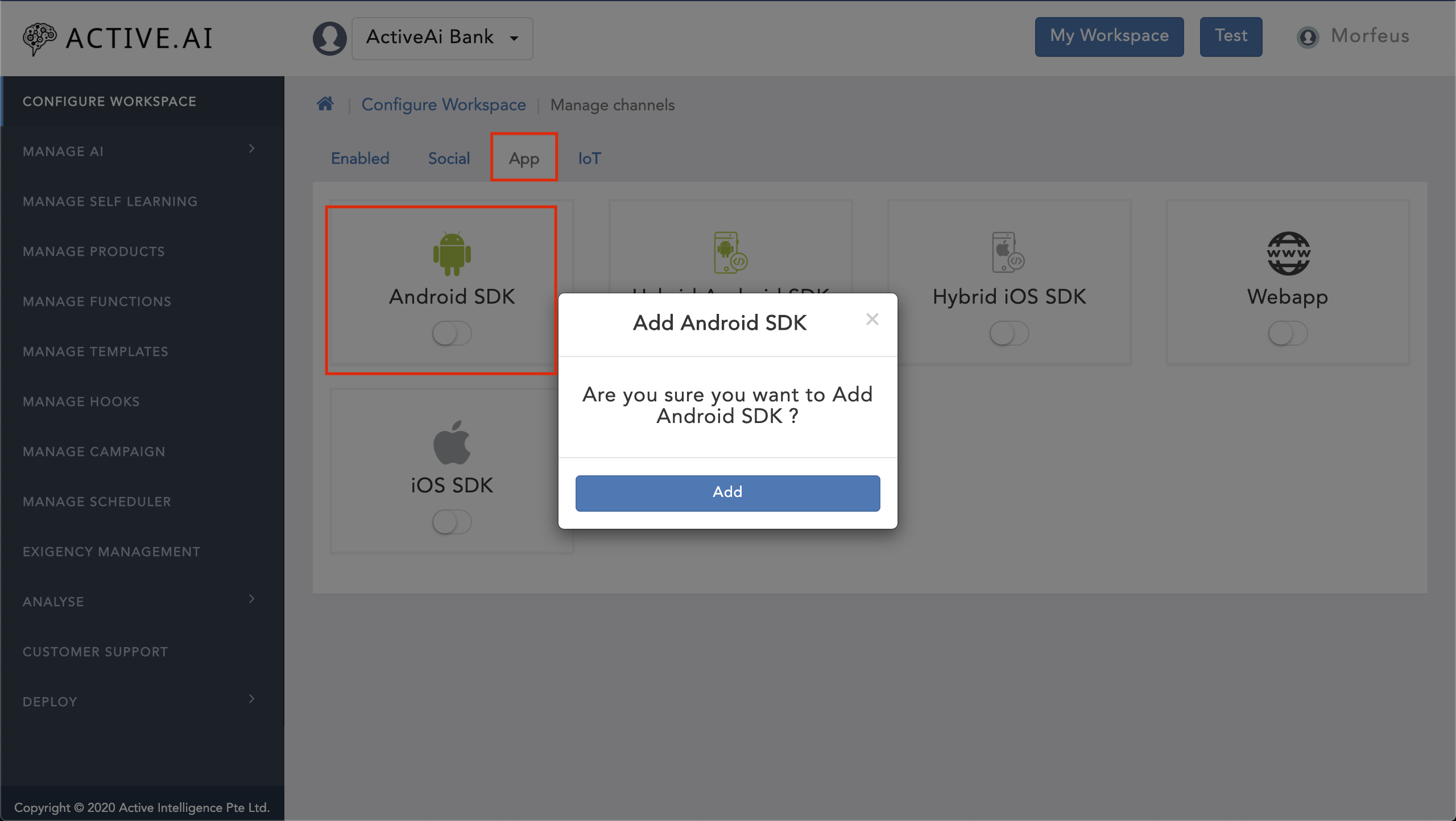

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'App'

- Toggle on the 'Webapp'

- Click on 'Add'

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'App'

- Toggle on the 'Hybrid Android SDK'

- Click on 'Add'

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'App'

- Toggle on the 'Hybrid Android SDK'

- Click on 'Add'

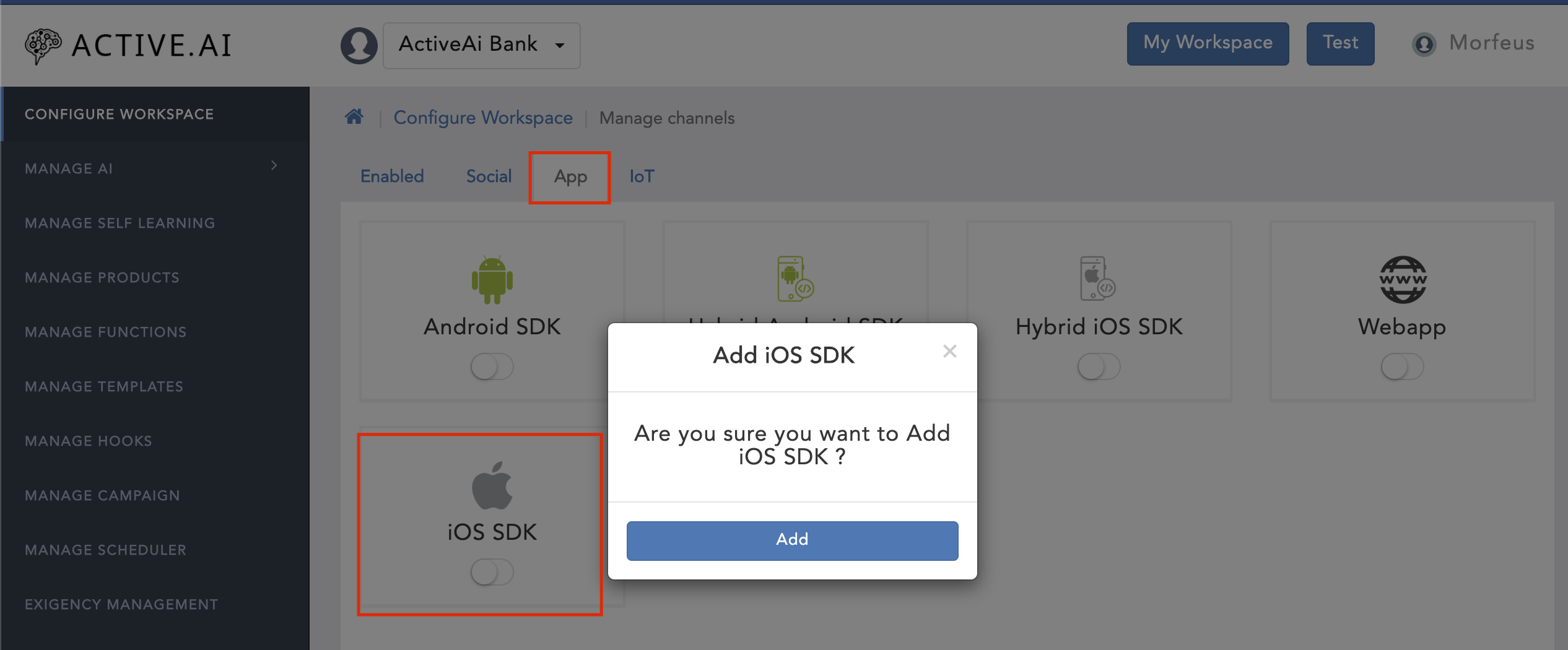

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'App'

- Toggle on the 'iOS SDK'

- Click on 'Add'

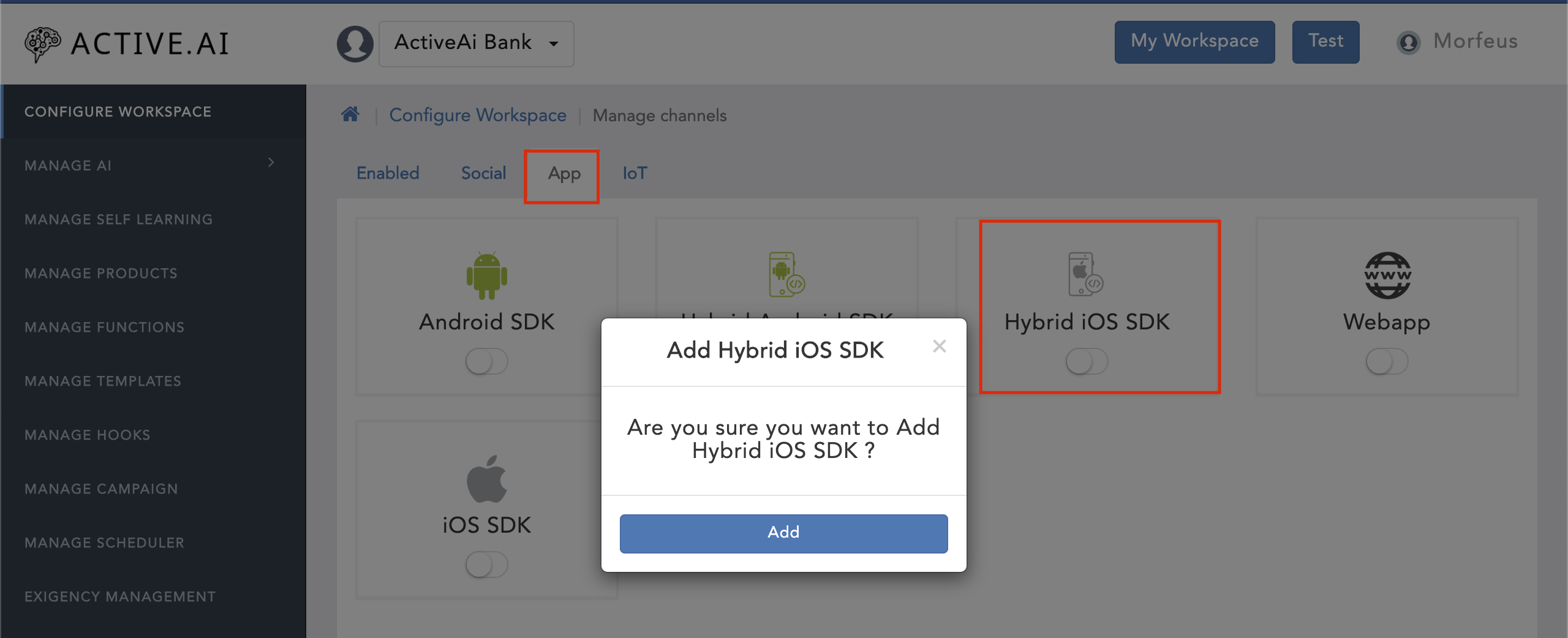

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'App'

- Toggle on the 'Hybrid iOS SDK'

- Click on 'Add'

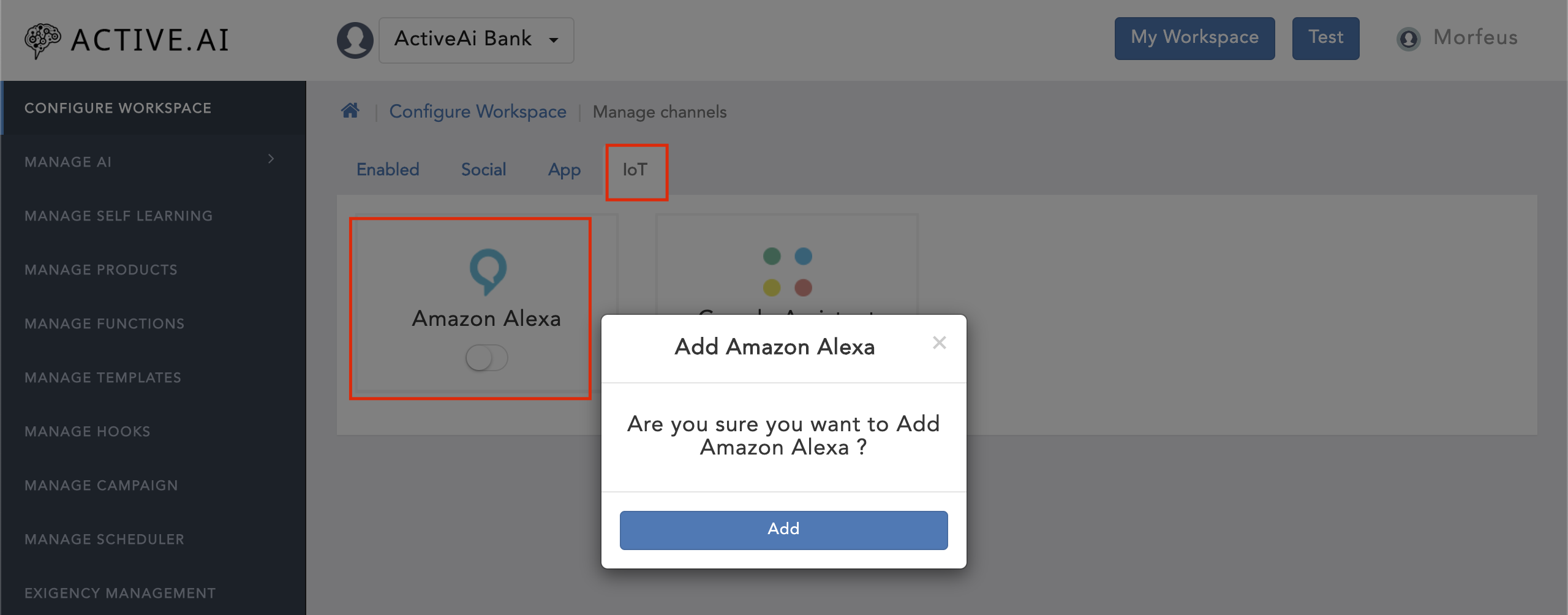

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'IoT'

- Toggle on the 'Amazon Alexa'

- Click on 'Add'

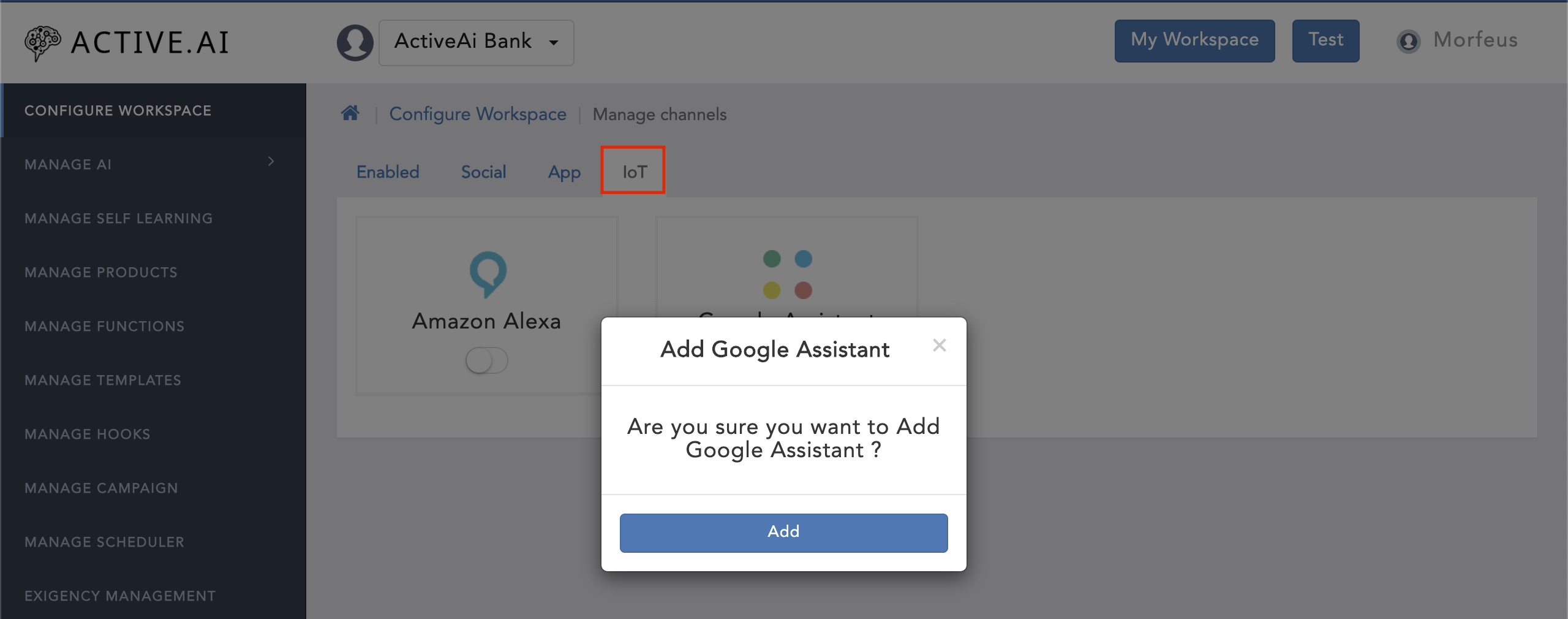

- Goto your workspace

- Click on 'Manage Channels'

- Navigate to 'IoT'

- Toggle on the 'Google Assistant'

- Click on 'Add'

- Goto your workspace

- Click on 'Manage Hooks'

- Click on 'Add'

- Enter all the required details

- Click on Add

- Below are the fields to be configured while creating a record

- If there are many intents and fulfillments it easy to configure using Function module has it provides consolidation of all its modules (messages, rules, templates and data) Configure Functions

- Goto your workspace

- Click on 'Manage Hooks'

- Click on delete icon of the particular hook(Which you want to delete)

- Goto your workspace

- Click on 'Manage Hooks'

- Click on 'Import'

- Click 'Yes' on the pop-up (Are you sure you want to overwrite?)

- Select a JSON file from your system which contains configuration of hooks in JSON format.

- Goto your workspace

- Click on 'Manage Hooks'

- Click on 'Export'

- Goto your workspace

- Click on 'Manage Campaign'

- Click on 'Add'

- Enter all the required details in 'Definition section'

- Enter all the required details in 'Rules section'

- Click on 'Add Campaign'

- Type of campaign: Campaign could be of two types -Internal -External

Start & End Date: This indicates the date from which campaign will start and campaign will end.

Supported Channels: Morfeus is a omnichannel platform, it supports a variety of channels. This toggle provides right to user to push campaign against various channels that are setup in particular workspace.

State- State defines wether a campaign is enabled or disabled.

Converstation Type- Conversation type for a campaign can be of Faq or Transaction. It defines when will the campaign be triggered, wether in Faqs or in Transaction.

Engagement Type - Engagement type decides wether the campaign to be fulfilled by a Hook or a Template.

Engagement Value - Depending on the engagement type you could select the engagemnet value for the campaing from the drop down. It could be either template value or Hook name.

Display when a Customer : This rules provides the ablity to display campaign to customer when customer either starts interacting with bot or when he has finised his interation.

Campaign managament consists of three set of rules

- Context Rules

- Historical Rules

- Derived Rules

- Goto your workspace

- Navigate to 'Manage Scheduler'

- Click on 'Schedule'

- Enter the all the required details(Name, trigger, Start At(date), Class, Description, Interval, etc.)

- Click on Schedule

- Name: The job name which you want to trigger.

- Class: A java class that will be triggered on the schedule. For that create a java class that extends our interface and implements the methods.

- Trigger: It will be a job id for that job you are scheduling.

- Description: The description of the Job.

- Starts At: The date on which you want your job to be triggered.

- Interval: For which interval you want to trigger the scheduled job. like every 2 days, weeks, months, etc. You can configure.

- Goto your workspace

- Navigate to 'Manage Scheduler'

- Select the Scheduler (Which you want to edit)

- Click on the 'Edit' icon under 'Action'

- Edit the scheduler

- Click on Schedule

- Goto your workspace

- Navigate to 'Manage Scheduler'

- Select the Scheduler (Which you want to stop)

- Click on the 'PAUSE' icon under 'Action'

- Click on 'Stop' (on popup)

- Goto your workspace

- Navigate to 'Manage Scheduler'

- Select the Scheduler (Which you want to delete)

- Click on the 'DELETE' icon under 'Action'

- Click on 'Delete' (on popup)

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Functions'

- Click on 'Add Function'

- Enter all the required details

- Click on 'Create & Proceed'

- Configure your function (please refer Configure Function)

- Click on save

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Rules'

- Configure the rules as per your requirements

- Click on Save

- Context Path

- Webview Domain

- Over All daily limit

- RB Configurations

- Encrypt User Password

- Exponent Value for password Encryption

- Modulus Value for password Encryption

- Daily Transaction Count

- Daily Transaction Limit (Amount)

- Maximum Transaction Limit (Amount)

- Minimum Interval between Transactions (mins)

- Minimum Transaction Limit (Amount)

- Biller Type

- Consumer Number Validation Pattern

- Maximum Consumer Reference Number Length

- Minimum Consumer Reference Number Length

- Daily Transaction Count

- Daily Transaction Limit (Amount)

- Maximum Transaction Limit (Amount)

- Minimum Interval between Transactions (mins)

- Minimum Transaction Limit (Amount)

- Daily Transaction Count

- Daily Transaction Limit (Amount)

- Maximum Transaction Limit (Amount)

- Minimum Transaction Limit (Amount)

- Daily DTH Transaction Limit ( Amount )

- Daily Transaction Limit ( Count )

- Minimum Interval between Transactions (mins)

- Minimum Transaction Limit (Amount)

- Per Transaction Limit (Amount)

- Daily Datacard Transaction Limit ( Count )

- Daily Transaction Limit ( Amount )

- Minimum Interval between Transactions (mins)

- Minimum Transaction Limit (Amount)

- Per Transaction Datacard Limit (Amount)

- Daily Transaction Limit ( Amount )

- Daily Transaction Limit ( Count )

- DATA CARD Number Validation Group

- DATA CARD Number Validation Pattern

- DTH Number Validation Group

- DTH Number Validation Pattern

- Minimum Interval between Transactions (mins)

- Minimum Transaction Limit (Amount)

- Mobile Number Validation Group

- Mobile Number Validation Pattern

- Per Transaction Limit (Amount)

- Amount validation for self bank credit card

- Time zone for NEFT timing

- Daily Transaction Count

- Daily Transaction Limit (Amount)

- Daily Transaction Limit (Amount) for IMPS

- IMPS Maximum Transaction Limit (Amount)

- IMPS Minimum Transaction Limit (Amount)

- Minimum Interval between Transactions (mins)

- NEFT Maximum Transaction Limit (Amount)

- NEFT Minimum Transaction Limit (Amount)

- RTGS Maximum Transaction Limit (Amount)

- RTGS Minimum Transaction Limit (Amount)

- Daily Transaction Count

- Daily Transaction Limit (Amount)

- Maximum Transaction Limit (Amount)

- Minimum Interval between Transactions (mins)

- Minimum Transaction Limit (Amount)

- Per Transaction Max Amount

- Per Transaction Min Amount

- Transaction amount per day limit

- Transaction count per day limit

- Transaction frequency

- Per Transaction Max Amount

- Per Transaction Min Amount

- Transaction amount per day limit

- Transaction count per day limit

- Transaction frequency

- Daily Transaction Count

- Daily Transaction Limit (Amount)

- Maximum Transaction Limit (Amount)

- Minimum Interval between Transactions (mins)

- Minimum Transaction Limit (Amount)

- NEFT Saturday Transaction Timings

- NEFT Transaction Timings from Monday to Friday

- RTGS Saturday Transaction Timings

- RTGS Transaction Timings from Monday to Friday

- Per Transaction Max Amount

- Per Transaction Min Amount

- Transaction amount per day limit

- Transaction count per day limit

- Transaction frequency

- Alexa Push Notification title

- Alexa Push Notification title

- Alexa Repeat

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Products'

- Click on 'Add Product'

- Enter all the required details

- Click on Add

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Billing'

- Add biller category or choose from an existing one

- Click on 'Add Biller'

- Enter all the required details (Name, Biller Id, Biller Presence, Late Payment, Customer surcharge, Partial pay, etc.)

- Click on Add

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Recharge'

- Click on 'Add Operator'

- Enter all the required details

- Click on Add

- Goto your workspace

- Click on 'Manage Products'

- Click on 'IFSC Codes'

- Click on 'Add IFSC'

- Enter all the required details

- Click on Add

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Holiday'

- Click on 'Add Holiday'

- Enter all the required details (Holiday date, transaction type, start time, end time, etc.)

- Click on Add

- Goto your workspace

- Click on 'Manage Products'

- Click on 'Customer Segments'

- Click on 'Add Customer Segment'

- Enter all the required details

- Click on Add

- Goto your workspace

- Click on 'Exigency Management'

- Click on 'Add'

- Enter all the required details (Start time, End Time, Notification, Notify by, Title, message, notify channels, etc.)

- Click on Add.

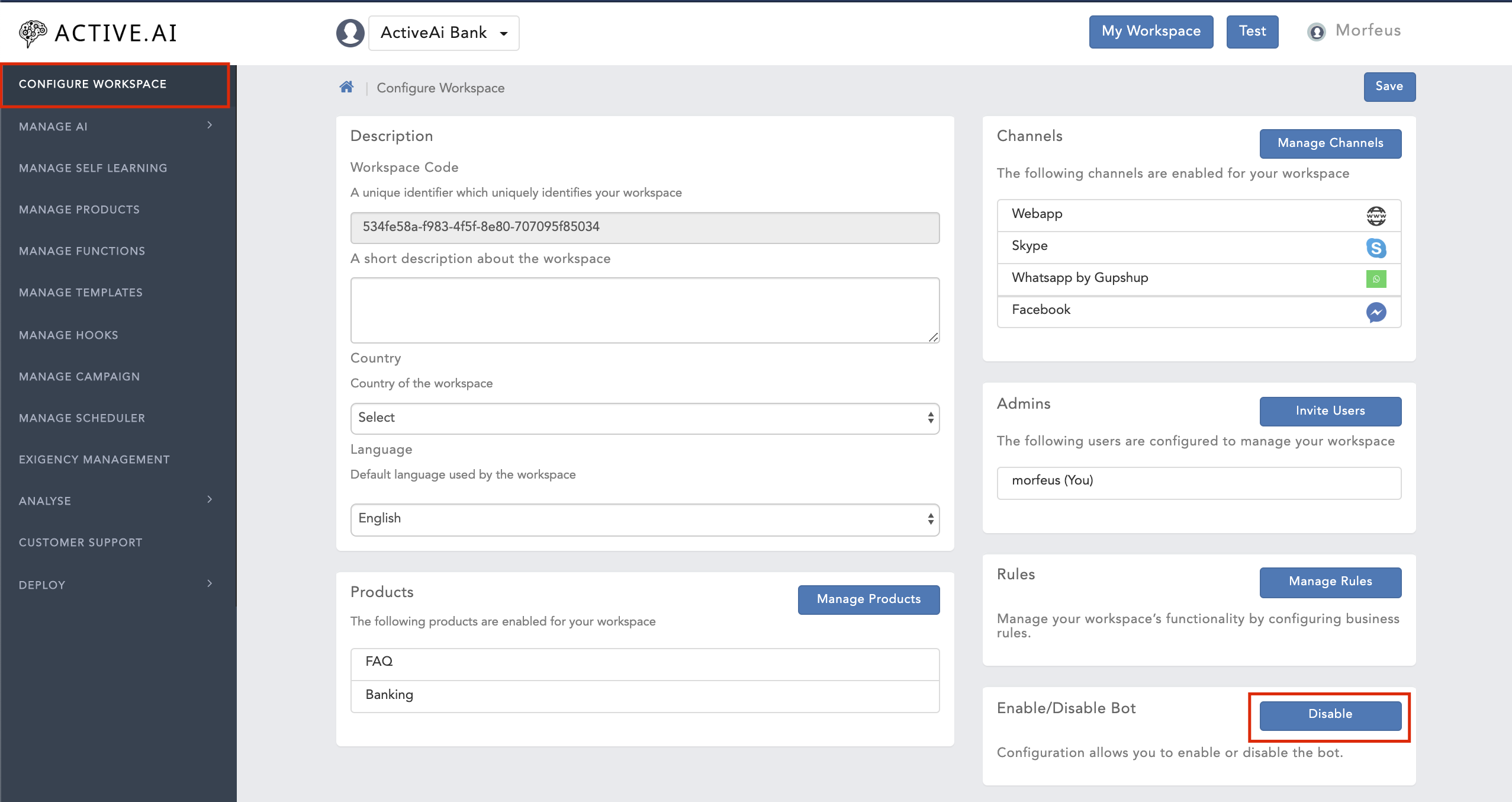

- Goto your workspace

- Enable/Disable bot under 'Configure Workspace' section

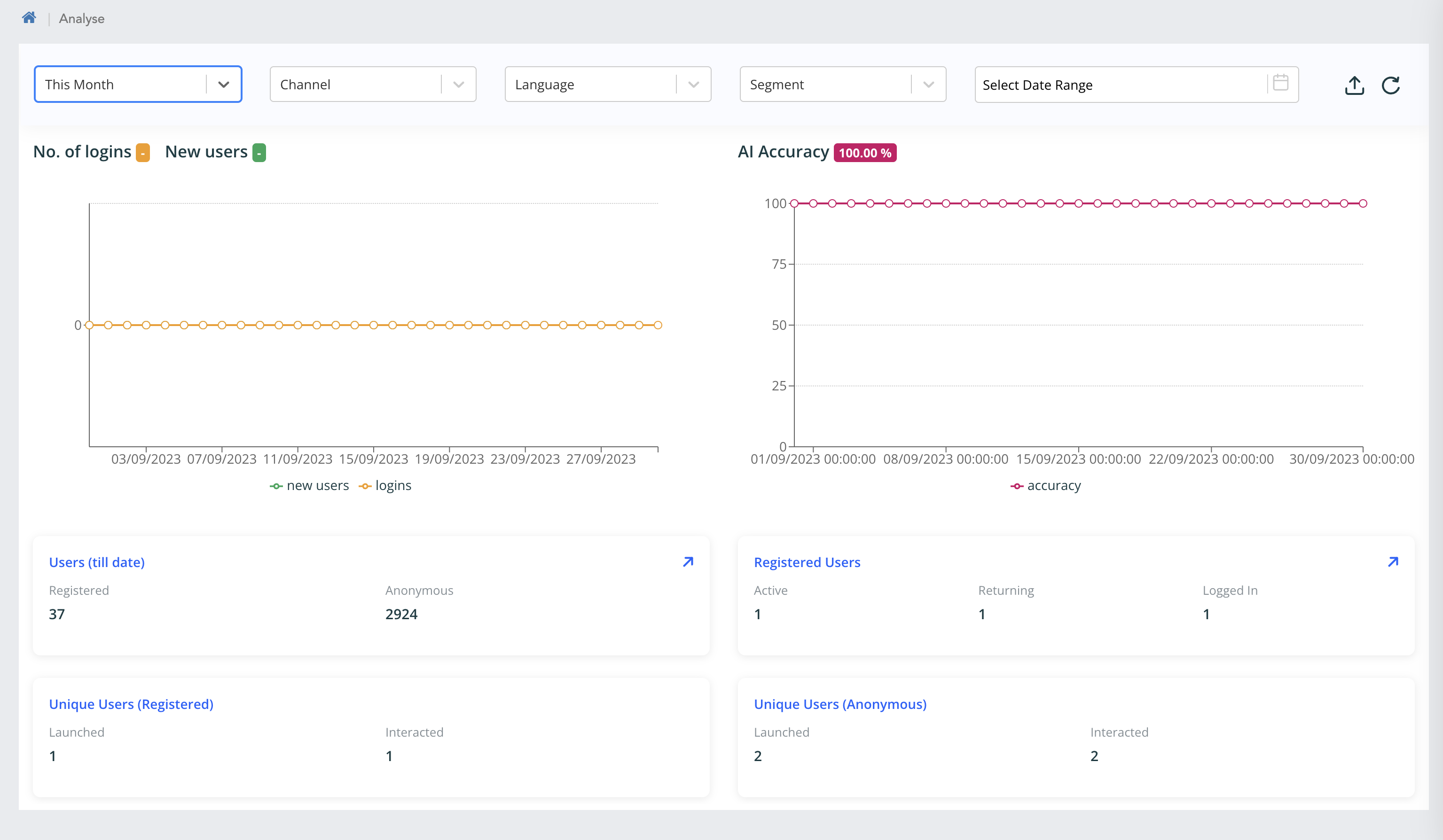

- Registered - Total no. of registered users from inception to till today. If data range is selected, then it is from inception to To-Date.

- Anonymous - Total no. of anonymous users from inception to till today. If data range is selected, then it is from inception to To-Date.

- Active - No. of unique registered user who had interacted with the VA within the filtered date range is considered active

- Returning - No. of unique registered user who had registered in the system earlier to filter date range but interacted with the VA within the filter date range is considered Returning

- Logged in - No. of unique registered logged-in user who had interacted with the VA within the filter date range is considered Logged-in

- Launched - No. of users who just launched the VA (includes post login)

- Interacted - No. of users who just launched & sent messages in VA (includes post login)

- Launched - No. of users who just launched the VA (includes pre login)

- Interacted - No. of users who just launched & sent messages in VA (includes pre login)

- Online - Total number of live chat redirection to agent and connection to live agent established

- Offline - Total number of live chat redirection offline i.e. redirection to live agent triggered but connection to live agent was not established, agent is offline.

- How users interaction and AI is performing

- No. of logins

- New Users

- AI Accuracy

- Users(till date)

- Registered users

- Unique users

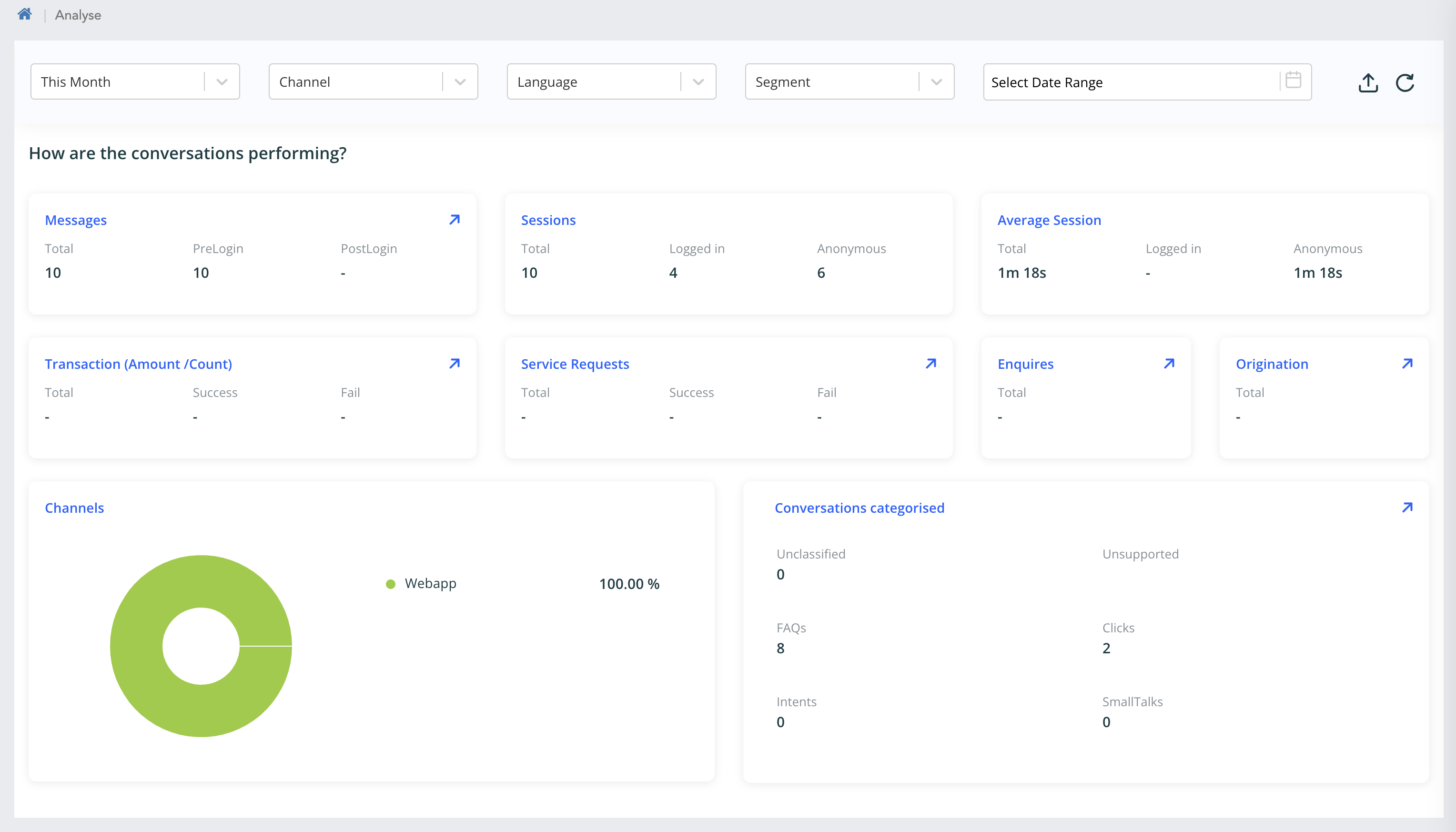

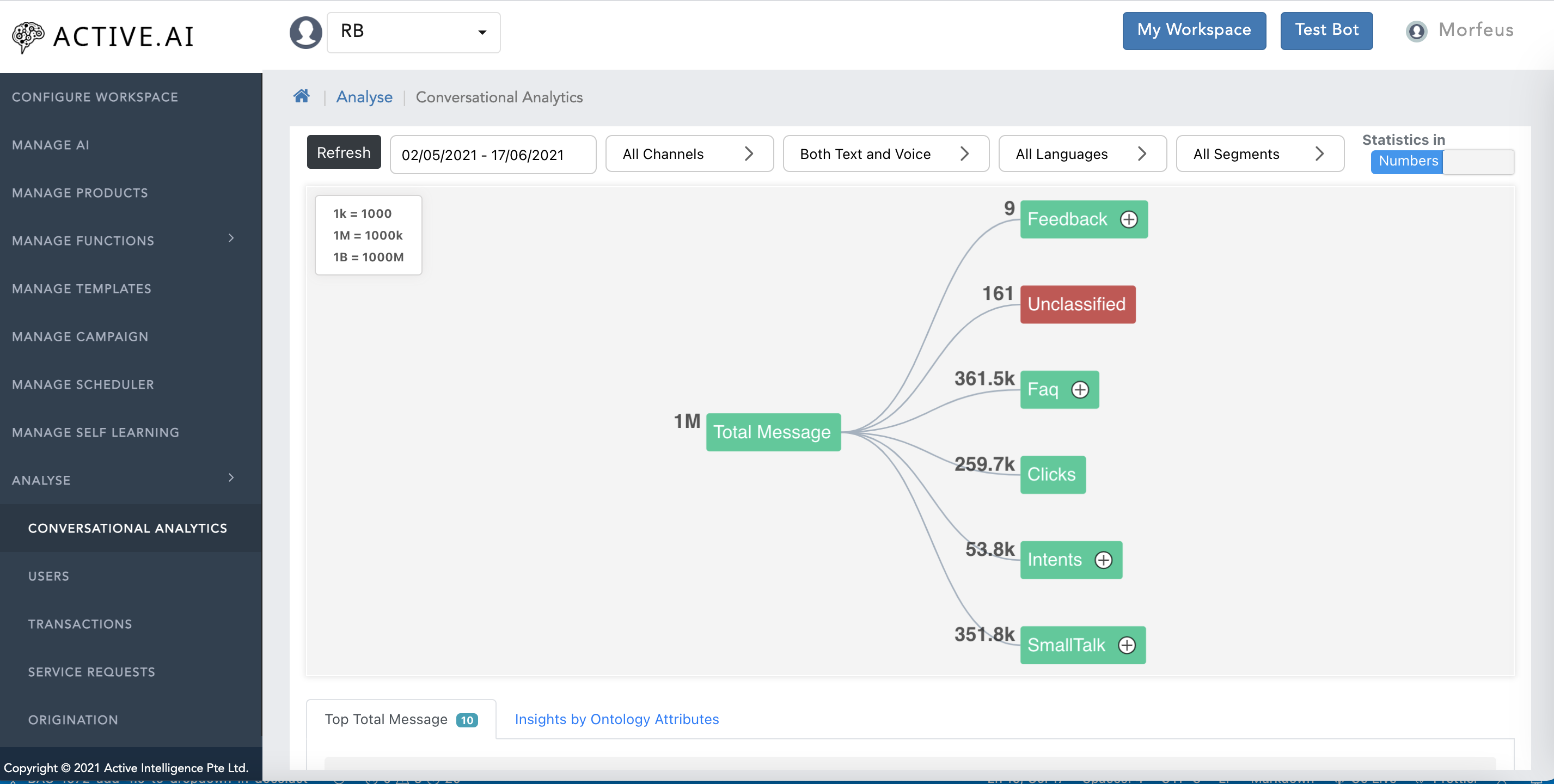

- How are the conversations performing?

- Messages

- Sessions

- Transaction Amount

- Transaction count

- Service Requests

- Enquiry

- Origination

- Avg. Session Time

- Channels

- Conversations categorised

- How are the users interacting?

- Feedback

- Sentiment

- LiveChat Redirections

- Anonymous users

- Goto your workspace

- Navigate to 'Analyse'

- Click on 'Users'

- Registered users

- Goto your workspace

- Navigate to 'Analyse'

- Click on 'Users'

- Select 'Registered in the dropdown'

- We can search with customer ID

- Registration Date - Date filter based on user registration date

- Last Access Date - Date filter based on user last access date

- Active Date - Filter all user active in given date range